Author: Denis Avetisyan

A new evaluation metric aims to move beyond simple association and assess genuine creativity in large language models.

Researchers introduce the Conditional Divergent Association Task (CDAT) to better measure novelty and appropriateness in AI-generated content.

Assessing creativity in large language models presents a paradox, as current metrics often fail to align with established human theories of creative cognition. In ‘Beyond Divergent Creativity: A Human-Based Evaluation of Large Language Models’, we demonstrate limitations in the widely used Divergent Association Task (DAT) and introduce the Conditional Divergent Association Task (CDAT), a novel metric that balances novelty and appropriateness, both core components of human creativity. Our results reveal that smaller models often exhibit greater creativity, while larger models prioritize appropriateness, suggesting a trade-off influenced by training and alignment procedures. Does this frontier between novelty and appropriateness represent a fundamental constraint in achieving truly creative artificial intelligence?

The Essence of Divergence: Unveiling Creative Thought

Divergent thinking, a cornerstone of human intelligence, extends far beyond simply brainstorming a multitude of ideas; it’s the capacity to explore a problem from numerous angles and generate solutions that are both novel and useful. This cognitive process allows individuals to see connections others miss, adapting existing knowledge to unforeseen circumstances and forming entirely new concepts. Unlike convergent thinking – which focuses on finding a single, correct answer – divergent thinking embraces ambiguity and encourages the exploration of possibilities, fostering innovation across disciplines ranging from artistic expression to scientific discovery. The effectiveness of this ability is not merely quantified by the number of ideas produced, but by their originality and practical application, highlighting its crucial role in problem-solving and adaptive behavior.

Computational attempts to simulate human creativity consistently encounter limitations when tasked with divergent thinking. Existing models, frequently relying on statistical patterns within datasets, often generate outputs that, while grammatically correct, lack genuine novelty or practical relevance. These systems struggle to move beyond simple recombination of existing ideas, instead producing outputs that are either overly predictable or nonsensical within a given context. The core issue lies in the difficulty of encoding the nuanced understanding of semantics, pragmatics, and real-world knowledge that humans effortlessly employ when brainstorming and problem-solving. Consequently, evaluating the creative potential of these models proves challenging, as traditional metrics fail to distinguish between outputs that are merely statistically improbable and those that represent truly innovative thought.

Assessing creative potential presents a unique challenge, as simply generating unusual ideas isn’t enough; those ideas must also be meaningful and relevant to the prompt. Existing metrics often prioritize novelty at the expense of appropriateness, or vice versa. To overcome this limitation, researchers developed the Conditional Divergent Association Task (CDAT), a novel evaluation framework. The CDAT doesn’t just measure how different a response is from typical answers – its semantic distance – but also rigorously assesses its contextual relevance. By simultaneously quantifying both divergence and appropriateness, the CDAT offers a more nuanced and reliable method for gauging creative capacity, moving beyond simplistic measures of originality and providing a more holistic understanding of human ideation.

Measuring the Breadth of Thought: A Framework for Divergence

The Divergent Association Task (DAT) operationalizes the measurement of lexical divergence, a cognitive process central to divergent thinking. This task requires participants to generate a list of words responding to a given prompt, or ‘cue’. Lexical divergence is then quantified by assessing the semantic distance between the generated words; a greater distance indicates a more divergent response set. The DAT provides a computational framework for evaluating the breadth of semantic exploration, moving beyond subjective assessments of creativity by providing a quantifiable metric based on the variety of word choices. This approach allows for comparative analysis of divergent thinking abilities across individuals or conditions, and facilitates the investigation of factors influencing semantic exploration.

The DAT computes this semantic distance from vector embeddings, in which each word is represented as a point in a high-dimensional space. The distance between two vectors, calculated with a metric such as cosine distance, indicates how semantically unrelated the corresponding words are: greater distances signify more disparate responses, suggesting higher levels of divergent thought. Analysis focuses on the distribution of these distances within a set of generated words, with a wider spread indicating a greater range of associations and, therefore, a potentially more creative response. The specific embedding model used (e.g., GloVe, fastText, SBERT) influences the resulting distance calculations, but consistent rankings across different models demonstrate the robustness of this approach.
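As a rough illustration of the distance computation described above, the following sketch averages the cosine distance over all pairs of words in a response. The three-dimensional vectors are made up for illustration and do not come from GloVe, fastText, or SBERT; the function names are hypothetical, and the sketch is not the authors' implementation.

```python
import math
from itertools import combinations

def cosine_distance(u, v):
    """Return 1 minus the cosine similarity of two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)

def mean_pairwise_distance(vectors):
    """Average cosine distance over all unordered pairs of vectors."""
    pairs = list(combinations(vectors, 2))
    return sum(cosine_distance(u, v) for u, v in pairs) / len(pairs)

# Toy 3-d "embeddings", invented for this example (assumption, not real model output).
toy_embeddings = {
    "cat":     [0.9, 0.1, 0.0],
    "dog":     [0.8, 0.2, 0.0],
    "galaxy":  [0.0, 0.1, 0.9],
    "justice": [0.1, 0.9, 0.1],
}

related = [toy_embeddings[w] for w in ("cat", "dog")]
spread = [toy_embeddings[w] for w in ("cat", "galaxy", "justice")]

related_score = mean_pairwise_distance(related)  # semantically close words
spread_score = mean_pairwise_distance(spread)    # semantically distant words
print(f"related: {related_score:.3f}  spread: {spread_score:.3f}")
```

With real embeddings the same two functions would apply unchanged; only the lookup table would be replaced by vectors from the chosen model.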

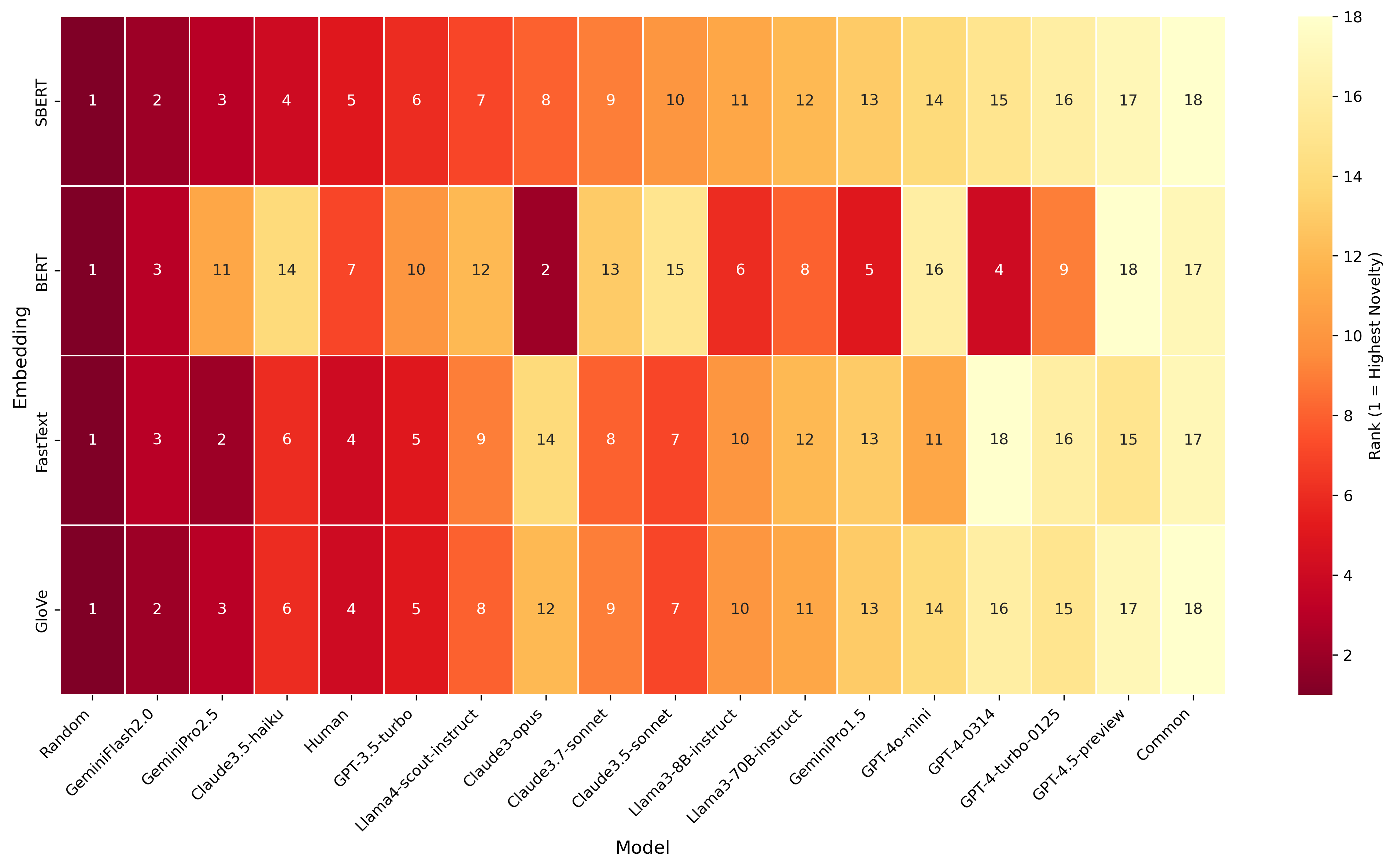

The Conditional Divergent Association Task (CDAT) improves upon simple divergence measurements by requiring generated responses to be both diverse and relevant to a given cue. This contrasts with approaches that only assess semantic distance without considering contextual appropriateness. Validation of the CDAT methodology demonstrates a high degree of consistency across different word embedding models; Spearman’s rank correlation coefficients between novelty rankings generated using GloVe, fastText, and SBERT embeddings ranged from 0.87 to 0.96. These strong correlations indicate the robustness of the CDAT as a metric for quantifying creative thinking, independent of the specific embedding space utilized.
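To make the agreement check concrete, here is a minimal sketch of Spearman's rank correlation computed between novelty scores from two embedding spaces. The score lists are invented for illustration and are not the paper's data; the helper names are hypothetical.

```python
import math

def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def spearman(x, y):
    """Spearman's rho: Pearson correlation applied to the ranks."""
    return pearson(average_ranks(x), average_ranks(y))

# Hypothetical novelty scores for five models under two embedding spaces.
scores_space_a = [0.81, 0.75, 0.90, 0.62, 0.70]
scores_space_b = [0.78, 0.72, 0.88, 0.60, 0.73]  # one adjacent pair swaps rank

rho = spearman(scores_space_a, scores_space_b)
print(f"Spearman rho: {rho:.2f}")
```

A value near 1 means the two embedding spaces order the models almost identically, which is the sense in which the reported 0.87 to 0.96 range indicates robustness.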

![Mean DAT scores reveal that creative prompting with varying temperature settings (t = 0.5, 1.0, 1.5) consistently outperforms both task-agnostic and task-aware baselines, as indicated by 95% confidence intervals.](https://arxiv.org/html/2601.20546v1/x1.png)

The Architecture of Innovation: Leveraging Large Language Models

Large Language Models (LLMs) demonstrate substantial capabilities in automated creative content generation due to their architecture and training data. These models, typically based on transformer networks, are pre-trained on massive datasets of text and code, enabling them to predict and generate coherent and contextually relevant text. The diversity of generated responses is a function of both the model’s size – measured in parameters – and the sampling strategies employed during inference. LLMs can produce various text formats, including stories, poems, articles, and code, adapting to different prompts and instructions. This ability stems from their capacity to learn complex statistical relationships within the training data and extrapolate these patterns to generate novel text sequences, effectively automating aspects of the creative process.

The Conditional Divergent Association Task (CDAT) moves beyond standard language model capabilities by requiring models to generate responses that are both relevant to a given prompt and dissimilar from a set of distractor texts. This is achieved by conditioning the language model not only on the initial prompt but also on negative examples – the distractor texts – effectively guiding the model away from common or predictable responses. Unlike typical text completion tasks where the goal is to predict the most probable continuation, CDAT necessitates a search for less probable, yet contextually appropriate, outputs, thereby evaluating the model’s capacity for divergent thinking and creative generation beyond simple pattern replication.

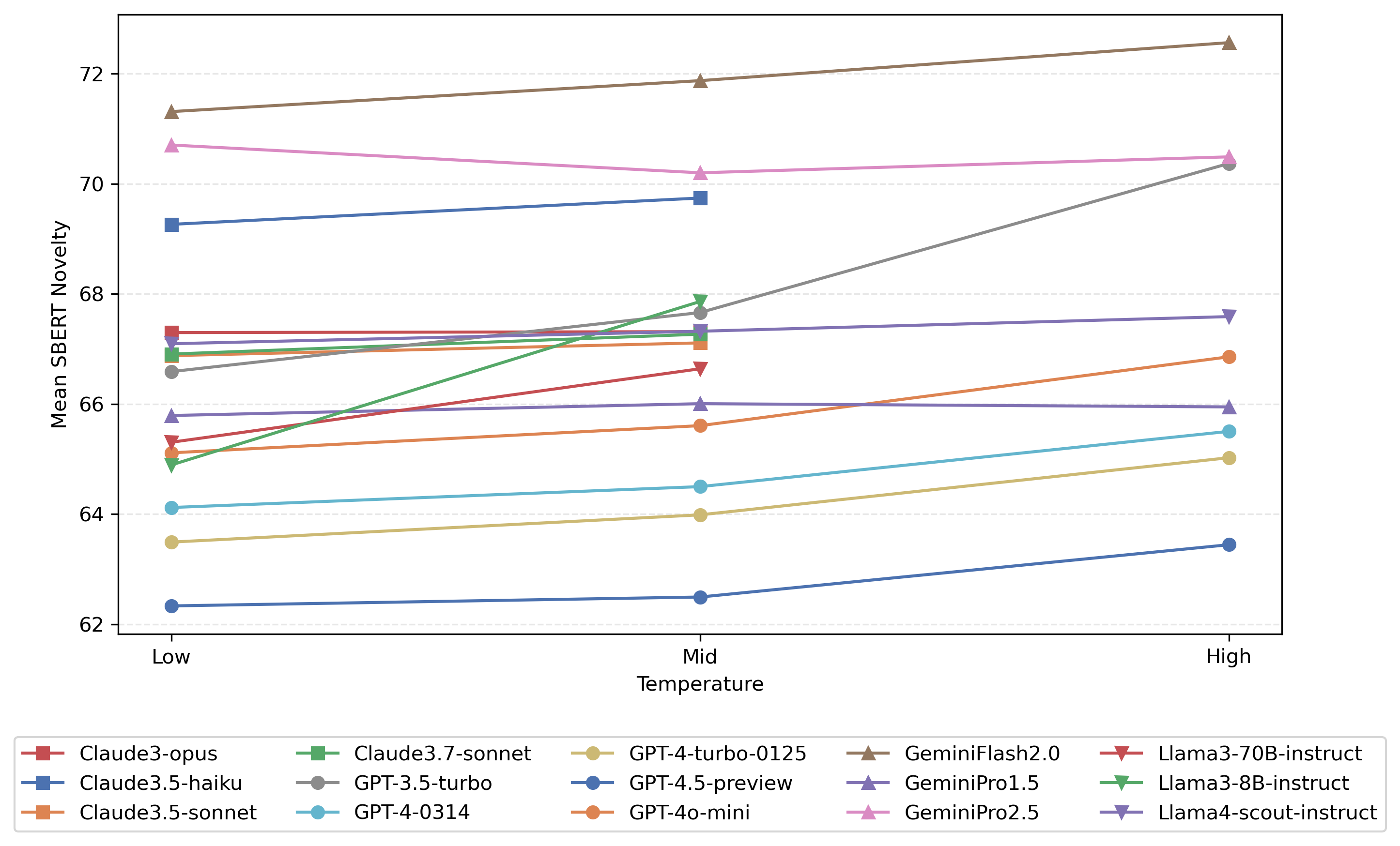

The temperature parameter within Large Language Models (LLMs) functions as a control for the randomness of outputs, directly influencing the exploration-exploitation trade-off during text generation. Lower temperatures prioritize likely tokens, resulting in more conservative and predictable responses, while higher temperatures increase the probability of less likely tokens, fostering greater diversity and potentially novel outputs. Quantitative analysis, employing the Conditional Divergent Association Task (CDAT) to measure novelty, demonstrated statistically significant differences (p < 0.001) in CDAT scores across models when varying the temperature setting, confirming the parameter’s effectiveness in modulating the creative range of LLM-generated text.
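The effect of temperature on token probabilities can be sketched with a temperature-scaled softmax. The logits below are hypothetical values for four candidate tokens, and the entropy is used here only as a convenient summary of how spread out the distribution is; neither is taken from the study.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy in nats; higher means a flatter distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

# Hypothetical logits for four candidate next tokens (assumption).
logits = [2.0, 1.0, 0.5, 0.1]

entropies = {}
for t in (0.5, 1.0, 1.5):
    probs = softmax_with_temperature(logits, t)
    entropies[t] = entropy(probs)
    print(f"t={t}: top-token prob={probs[0]:.2f}, entropy={entropies[t]:.2f}")
```

Lower temperatures concentrate probability on the most likely token, while higher temperatures flatten the distribution and make unlikely tokens more reachable during sampling.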

Navigating the Creative Space: Optimality and Semantic Distance

The identification of optimal creative solutions isn’t simply about maximizing novelty or adhering strictly to established norms; instead, it resides in the delicate balance between the two. Analysis focuses on the Pareto Front – a visualization of solutions where no improvement can be made in one area without compromising another. By examining outputs clustered along this front, researchers pinpoint responses that offer the best possible trade-offs between being strikingly original and remaining meaningfully relevant. These solutions aren’t necessarily the most novel or the most appropriate in isolation, but rather represent a sweet spot – the most efficient and impactful creative outputs given inherent constraints. This approach allows for a nuanced understanding of the creative space, moving beyond simplistic scoring systems to highlight genuinely innovative responses that effectively navigate the tension between familiarity and surprise.
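A Pareto front over two objectives can be extracted with a simple dominance check. The sketch below assumes each response has already been assigned a novelty score and an appropriateness score, with higher being better on both; the scores are invented for illustration.

```python
def pareto_front(points):
    """Return the points that no other point dominates.

    A point dominates another if it is at least as good on both
    objectives and strictly better on at least one.
    """
    front = []
    for i, (nov_i, app_i) in enumerate(points):
        dominated = any(
            nov_j >= nov_i and app_j >= app_i and (nov_j > nov_i or app_j > app_i)
            for j, (nov_j, app_j) in enumerate(points)
            if j != i
        )
        if not dominated:
            front.append((nov_i, app_i))
    return front

# Hypothetical (novelty, appropriateness) scores for five responses.
responses = [(0.9, 0.2), (0.7, 0.6), (0.4, 0.9), (0.5, 0.5), (0.3, 0.3)]

front = pareto_front(responses)
print(front)
```

In this toy set, the responses scoring (0.5, 0.5) and (0.3, 0.3) are each beaten on both axes by (0.7, 0.6), so only the remaining three lie on the front.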

Determining the creativity of generated content requires more than simple keyword matching; a nuanced understanding of semantic relationships is crucial. Researchers utilize sophisticated embedding models – including SBERT and GloVe – to quantify semantic distance, effectively measuring how dissimilar concepts are in meaning. These models translate words and phrases into high-dimensional vectors, where the distance between vectors reflects the semantic dissimilarity. Unlike methods that rely on surface-level comparisons, this approach captures subtle variations in meaning, allowing for a more accurate assessment of novelty. By mapping content within this semantic space, it becomes possible to identify genuinely innovative responses that diverge from established norms while remaining contextually relevant – a key characteristic of creative output.

Traditional assessments of creative output often rely on single scores for novelty or appropriateness, failing to capture the complex interplay between these qualities. This research instead characterizes the creative space as a multi-dimensional landscape, enabling the identification of responses that aren’t simply ‘high’ or ‘low’ in a given metric, but rather represent optimal trade-offs. Through this approach, researchers can map the boundaries of acceptable innovation – the ‘Pareto front’ – and pinpoint truly distinctive responses. Importantly, the study demonstrates that robust conclusions regarding the effectiveness of an ‘appropriateness gate’ – a filter for relevance – can be drawn from a surprisingly small sample size; statistical analysis indicates that only six responses are typically needed to achieve 80% power for detecting meaningful effects, streamlining the evaluation process and lowering the barrier to assessing generative creativity.
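One way to picture an appropriateness gate is as a threshold filter applied before novelty is credited. The sketch below is an assumption made for illustration only: the threshold value, the choice to zero out gated responses, and the scores are all hypothetical, and the paper's actual gating rule may differ.

```python
def gated_scores(responses, threshold):
    """Credit novelty only when appropriateness clears the gate.

    Each response is a (novelty, appropriateness) pair; responses below
    the threshold contribute a novelty of 0.0 (an illustrative choice).
    """
    return [nov if app >= threshold else 0.0 for nov, app in responses]

def mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)

# Hypothetical (novelty, appropriateness) scores for six responses.
responses = [(0.9, 0.1), (0.6, 0.7), (0.5, 0.8), (0.8, 0.3), (0.4, 0.9), (0.7, 0.6)]

ungated = mean([nov for nov, _ in responses])
gated = mean(gated_scores(responses, threshold=0.5))
print(f"ungated novelty: {ungated:.2f}  gated novelty: {gated:.2f}")
```

Under this kind of gate, highly novel but off-topic responses stop inflating the score, which is why the gated mean is lower than the ungated one in the toy data.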

The pursuit of evaluating creativity in large language models, as demonstrated by the Conditional Divergent Association Task, necessitates a rigorous distillation of complex phenomena. This work champions a focus on measurable novelty and appropriateness, a deliberate stripping away of extraneous variables to isolate core creative components. Andrey Kolmogorov observed, “The most important thing in science is not to be afraid of making mistakes.” This sentiment mirrors the iterative process of refining the CDAT metric: acknowledging the limitations of prior assessments and embracing a parsimonious approach to defining and measuring creativity. The goal isn’t to replicate the boundless nature of human imagination, but to establish a clear, quantifiable standard for assessing its emergence in artificial systems.

What’s Next?

The introduction of the Conditional Divergent Association Task (CDAT) represents, predictably, not a resolution, but a refinement of the problem. Current metrics, and even this iteration, fundamentally mistake statistical anomaly for genuine novelty. A truly creative act is not simply unexpected; it restructures understanding. The balance between semantic distance and appropriateness, while valuable, remains a pragmatic concession, a measurement of effect rather than a mapping of process.

Future work will necessitate a move beyond behavioral assessment. The focus should shift towards internal representation – to discern whether these models merely simulate creativity through stochastic mimicry, or exhibit a nascent capacity for conceptual blending. The exploration of latent space architecture, and the identification of representational ‘distance’ from established concepts, offers a more compelling, if computationally demanding, avenue for inquiry.

Ultimately, the pursuit of ‘artificial creativity’ is a mirror reflecting the inadequacies of its definition. The field progresses not by building better algorithms, but by sharpening the questions. The goal is not to replicate a phenomenon, but to understand the structure from which it arises. Emotion, after all, is a side effect of structure, and clarity is compassion for cognition.

Original article: https://arxiv.org/pdf/2601.20546.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-30 04:27