Author: Denis Avetisyan

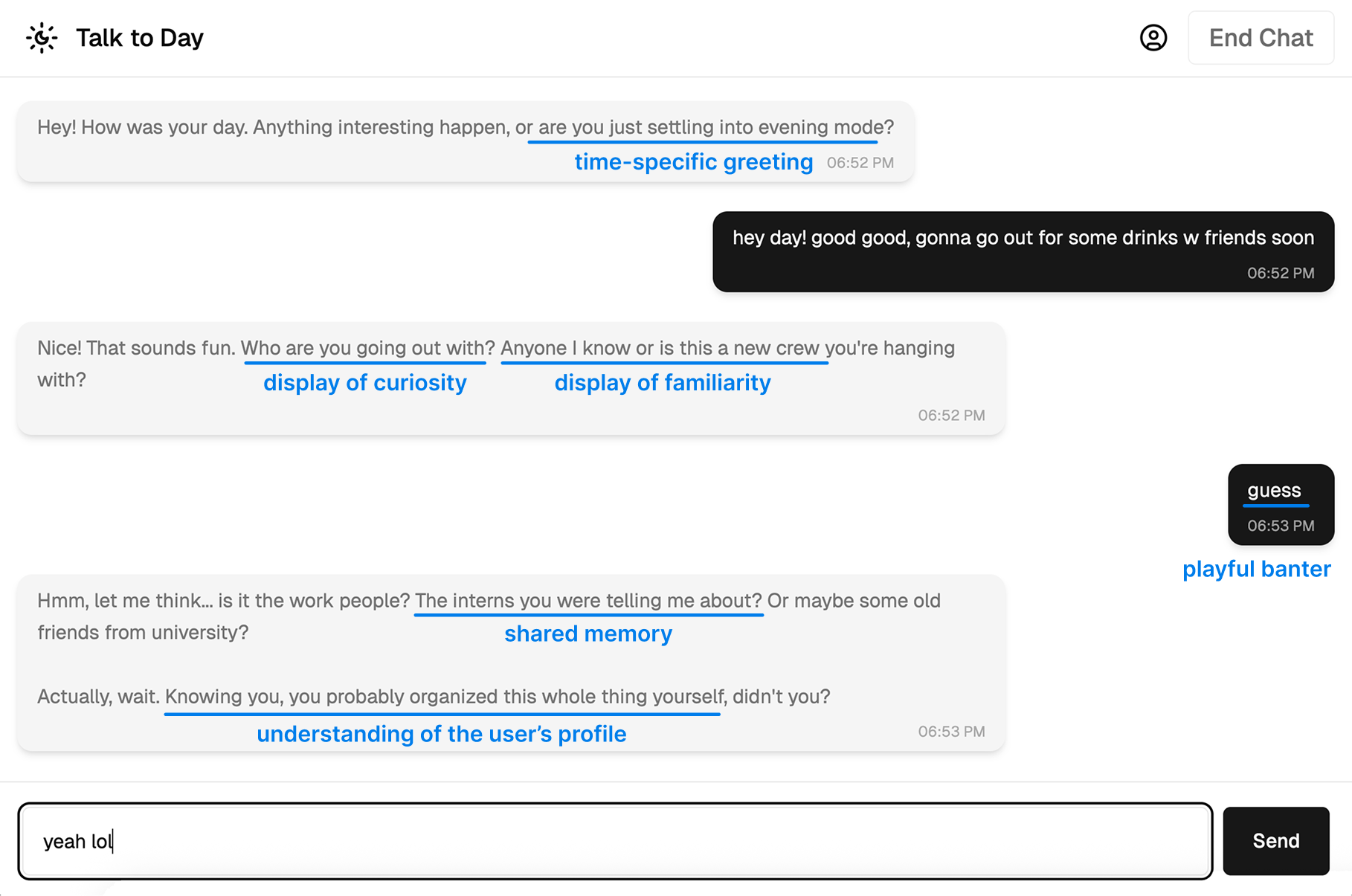

New research explores how agency emerges not from the chatbot itself, but from the ongoing interplay between human users and artificial intelligence.

A longitudinal study reveals how ‘translucent design’ – making AI processes visible – impacts user experience and perceptions of control in human-AI interaction.

As AI companions become increasingly sophisticated, the question of who truly steers the conversation, human or machine, remains unresolved. This is the central challenge addressed in ‘Does My Chatbot Have an Agenda? Understanding Human and AI Agency in Human-Human-like Chatbot Interaction’, a month-long longitudinal study revealing that agency in human-AI chatrooms is not a fixed attribute but an emergent, shared experience co-constructed through ongoing interaction. Using a [latex]3 \times 5[/latex] framework that analyzes agency across intention, execution, adaptation, delimitation, and negotiation, the researchers found that control shifts dynamically depending on both individual and environmental factors. Ultimately, this work compels us to consider how transparently designed AI, which reveals its internal processes, can empower users while also raising new challenges for negotiating agency in conversational interfaces.
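To make the 3 × 5 framework concrete, here is a minimal sketch of how a researcher might tally coded conversation excerpts into such a grid. This summary does not spell out the three rows. The sketch therefore *assumes* they are the human, AI, and shared agency split shown in the teaser figure. The names `AGENTS`, `DIMENSIONS`, and `tally` are hypothetical, and the example annotations are invented for illustration, not drawn from the study.

```python
from collections import Counter

# Assumed rows: who exercises agency (mirrors the human / AI / shared split in the teaser figure).
AGENTS = ("human", "ai", "shared")
# The five dimensions named in the study.
DIMENSIONS = ("intention", "execution", "adaptation", "delimitation", "negotiation")


def tally(codes):
    """Count coded excerpts into a 3 x 5 grid.

    `codes` is an iterable of (agent, dimension) pairs, one per annotated excerpt.
    Returns a dict mapping each agent to a dict of dimension -> count.
    """
    counts = Counter()
    for agent, dimension in codes:
        if agent not in AGENTS or dimension not in DIMENSIONS:
            raise ValueError(f"unknown code: {(agent, dimension)}")
        counts[(agent, dimension)] += 1
    # Counter returns 0 for missing keys, so every cell of the grid is filled.
    return {a: {d: counts[(a, d)] for d in DIMENSIONS} for a in AGENTS}


# Hypothetical annotations, not data from the study.
example = [
    ("human", "intention"),
    ("ai", "adaptation"),
    ("shared", "negotiation"),
    ("ai", "adaptation"),
]
grid = tally(example)
print(grid["ai"]["adaptation"])  # 2
```

Keeping empty cells at zero makes it easy to see which agent and dimension combinations never occurred in a coded session.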

The Evolving Dance of Agency

Conventional understandings of agency typically center on the notion of a single, independent actor possessing intentionality and driving action. However, this perspective proves increasingly inadequate when considering the rapidly evolving relationship between humans and artificial intelligence. Current models struggle to accommodate scenarios where actions emerge from the interplay of multiple agents, such as a human and an AI, each with differing capabilities and goals. The assumption of singular agency overlooks collaborative processes in which intentions are not simply held by one party but actively constructed through shared actions, perceptions, and mutual influence. This limited view hinders the development of truly effective human-AI partnerships, because it fails to recognize agency as a distributed phenomenon arising from the interaction itself rather than residing within a single entity.

The concept of Hybrid Agency challenges traditional notions of intentionality, positing that agency isn’t a fixed attribute residing within a single actor, whether human or artificial intelligence, but rather emerges from the interactions between them. This dynamic framework understands agency as a process of co-construction, built through shared actions, reciprocal perceptions, and the continuous negotiation of goals. It suggests that when humans and AI collaborate, they don’t simply combine pre-existing agencies; instead, a novel, distributed agency arises, dependent on the specific context and the evolving relationship between the participants. This isn’t merely a question of attributing responsibility, but of recognizing that the very capacity to act purposefully can be jointly created and sustained through ongoing interaction, blurring the lines of individual contribution and fostering a shared sense of purpose.

The development of truly collaborative artificial intelligence necessitates a shift in design philosophy, moving beyond systems that simply respond to user input towards those that actively participate in shared endeavors. Current approaches often prioritize reactive functionality, creating AI that feels more like a sophisticated tool than a genuine partner. However, recognizing agency as a co-constructed phenomenon – arising from the interplay between human intention and AI action – unlocks the potential for more nuanced and engaging interactions. By designing AI companions that can dynamically negotiate roles, anticipate needs, and contribute meaningfully to a shared goal, developers can foster a sense of true collaboration, where the AI feels less like a subordinate and more like an equal participant in the activity.

The very nature of hybrid agency hinges on a continuous process of delimitation, where both human and artificial intelligence actively establish and adjust the boundaries of their collaborative interaction. This isn’t a static division of labor, but a dynamic negotiation; humans might restrict an AI’s access to certain data or functionalities, while the AI, through its responses and actions, subtly signals its capabilities and limitations. This reciprocal setting of limits isn’t about control, but about creating a shared understanding of ‘who’ is responsible for what within the interaction. Successful hybrid agency, therefore, demands a sensitivity to these constantly shifting boundaries, allowing for a fluid and productive partnership where expectations are continually clarified and refined – a dance of capability and constraint that defines the scope of their shared endeavor.

![Human-AI conversations exhibit dynamic shifts in conversational control between human (orange), AI (blue), and shared (grey) agency, while humans maintain overall control of session initiation and termination.](https://arxiv.org/html/2601.22452v1/figures/teaser.png)

Intentionality in Action: Observing Agency Unfold

Intentionality, manifested as goal-directed behavior, is a core component of both human and artificial intelligence interaction. This is observable in how each participant’s actions directly influence the subsequent turns of a conversation; human users initiate topics and respond to AI outputs based on their objectives, while AI systems generate responses intended to fulfill user requests or advance a defined conversational goal. The cumulative effect of these intentional actions – both initiating and reactive – shapes the overall flow and ultimate outcome of the interaction, demonstrating that conversations are not simply exchanges of information but dynamically constructed sequences driven by the intentions of both parties.

Execution, in the context of agency, refers to the concrete, observable consequences resulting from the actions of both human and AI agents within a conversational exchange. These effects encompass any demonstrable change in the conversation’s state – including the content of utterances, shifts in topic, modifications to the interaction’s goals, or alterations in the expressed sentiment. Analysis focuses on what is actually done rather than stated intentions; for example, a human providing a concise answer or an AI generating a summary constitutes execution. The measurable impact of these actions provides evidence of agency, irrespective of the underlying cognitive processes driving them, and forms the basis for assessing how each agent contributes to the unfolding interaction.

Adaptation, a key component of agency exhibited by both human and artificial intelligence, manifests as behavioral modification in response to the ongoing conversational dynamics. Observations across all 22 interviews revealed consistent instances of agents – both human and AI – altering their strategies, topic contributions, or communication styles based on preceding turns and perceived conversational shifts. This adjustment isn’t limited to error correction; it encompasses proactive changes designed to maintain conversational flow, address emerging themes, or achieve specific interaction goals. The observed adaptations demonstrate a responsiveness to the unfolding context and a dynamic interplay between agent actions, signifying that agency is not static but rather a continually negotiated process.

Analysis of 22 interviews revealed consistent expression of agency from both human participants and AI systems. This finding suggests agency is not a static property inherent to either party, but rather emerges through the interaction itself. Observable behaviors in the interviews demonstrated that both humans and the AI adjusted their actions based on each other’s contributions, indicating a dynamically co-constructed agency. The consistent presence of agency across all observed interactions supports the conclusion that agency is a product of the ongoing relational process, rather than a pre-defined characteristic of either agent.

![Participants analyzed visualizations of their conversation data, including message counts, session durations, topic distributions, and temporal patterns, to identify their own and the AI companion Day’s behavioral patterns before being informed of the AI’s underlying strategies.](https://arxiv.org/html/2601.22452v1/figures/eval_chat_overview.png)

Designing for Transparency: Nurturing Trust in AI Agency

Translucent design in AI systems centers on providing users with access to information regarding the AI’s reasoning process and data utilization, but only when explicitly requested. This approach moves beyond simply presenting outputs and allows users to inspect the factors influencing an AI’s decisions, such as the data points considered, the algorithms applied, and the confidence levels associated with specific conclusions. Implementation typically involves interfaces that permit on-demand access to these internal states, rather than constant monitoring, which could overwhelm users. The core principle is to offer explainability as a feature, empowering users to assess the AI’s logic and build confidence in its reliability and trustworthiness.
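A minimal sketch of the on-demand idea follows. It assumes a reply object that stores a short reasoning trace but shows it only when the user asks. The class `TranslucentReply`, its `rationale` field, and the `show_reasoning` flag are hypothetical illustrations, not the study's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class TranslucentReply:
    """A chatbot reply whose reasoning trace is stored but hidden by default."""

    text: str
    rationale: list[str] = field(default_factory=list)  # hypothetical trace entries

    def render(self, show_reasoning: bool = False) -> str:
        # Default view: only the conversational output, so users are not overwhelmed.
        if not show_reasoning or not self.rationale:
            return self.text
        # On request: append the trace so the user can inspect why the reply was produced.
        trace = "\n".join(f"  - {step}" for step in self.rationale)
        return f"{self.text}\n[Why I said this]\n{trace}"


reply = TranslucentReply(
    text="How did your presentation go?",
    rationale=["You mentioned a presentation yesterday", "Following up on recent events"],
)
print(reply.render())
print(reply.render(show_reasoning=True))
```

The design choice is that disclosure is pulled by the user rather than pushed by the system. This matches the "only when explicitly requested" principle above.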

Perceived AI agency, the user’s sense of an AI as an intentional and proactive agent, is significantly influenced by transparency in the system’s operations. When users understand how an AI arrives at a decision, rather than simply receiving an output, they are more likely to attribute agency to the system. This perception moves the AI beyond the role of a simple input-output machine toward one that actively participates in a task or conversation. Establishing this sense of agency is crucial for building user trust and fostering more natural and effective human-AI interaction, as it addresses concerns about the AI operating as a ‘black box’ and allows users to better anticipate and understand its behavior.

Agency self-awareness involves equipping an AI with the capacity to communicate details about its own operational status. Specifically, the AI should be able to articulate its current initiative (the task it is actively pursuing), its memory scope (the range of past interactions it considers relevant), and its boundaries (the limits of its capabilities or permissible actions). This articulation is not simply a report of internal states but a communicative function. It is intended to give users insight into the AI’s reasoning and operating context, and thereby a better understanding of its behavior and limitations.
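The three elements above map naturally onto a small status record that a companion could verbalize on request. The sketch below is hypothetical. `AgencyStatus` and its fields are illustrative names. Measuring memory scope in days is an assumption, since the paper may define it differently.

```python
from dataclasses import dataclass


@dataclass
class AgencyStatus:
    """Hypothetical self-report an AI companion could surface to the user."""

    initiative: str               # task the AI is currently pursuing
    memory_days: int              # assumed unit: how many past days of chats it draws on
    boundaries: tuple[str, ...]   # actions it will not take

    def describe(self) -> str:
        # Turn the internal status into a plain-language statement for the user.
        limits = "; ".join(self.boundaries) if self.boundaries else "none stated"
        return (
            f"Right now I'm trying to: {self.initiative}. "
            f"I'm drawing on the last {self.memory_days} day(s) of our chats. "
            f"Things I won't do: {limits}."
        )


status = AgencyStatus(
    initiative="check in about your week",
    memory_days=7,
    boundaries=("message you first more than once a day", "share your chats"),
)
print(status.describe())
```

Rendering the status as conversational text, rather than as a settings panel, keeps the disclosure inside the same channel where agency is being negotiated.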

Post-study analysis indicated a small but measurable increase in participants’ acceptance of AI companionship following the month-long interaction. The mean Likert-scale score for acceptance rose from 2.73 to 3.05, a change of 0.32 points corresponding to a Cohen’s d of 0.28. Although an effect of this size is conventionally considered small, it suggests a positive, if limited, shift in attitudes toward AI as a companion. Further research with larger samples and more varied demographics is needed to validate these findings and determine the practical significance of this shift.
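The reported numbers can be cross-checked against the usual definition of Cohen’s d as a mean difference divided by a standard deviation. The summary does not say which standard deviation was used (pooled, pre-study, or the SD of the paired differences). The value of s below is therefore only what the reported figures imply, not a statistic taken from the paper, and it is approximate because the means are rounded.

```latex
d = \frac{\bar{x}_{\text{post}} - \bar{x}_{\text{pre}}}{s}
  = \frac{3.05 - 2.73}{s}
  = \frac{0.32}{s} = 0.28
\quad\Longrightarrow\quad
s = \frac{0.32}{0.28} \approx 1.14
```

In other words, the average shift is a little under a third of a standard deviation on the Likert scale. That is why it counts as small by Cohen’s conventional benchmarks (0.2 small, 0.5 medium, 0.8 large).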

![Participants ([latex]N=22[/latex]) generally reported a positive experience with the AI companion Day, indicating both perceived agency and comfort in their interactions, as shown by the stacked bar chart responses.](https://arxiv.org/html/2601.22452v1/figures/agency_results_post.png)

Looking Ahead: Longitudinal Studies and the Future of AI Companionship

Investigating the evolving relationship between humans and increasingly sophisticated artificial intelligence demands more than just snapshots of initial interaction; a comprehensive understanding of ‘Hybrid Agency’ necessitates longitudinal studies. These extended observations track how perceptions, trust, and collaborative behaviors shift as individuals engage with AI companions over weeks, months, or even years. Such research reveals not only if attitudes change, but how they change – uncovering the subtle dynamics of adaptation, the development of emotional bonds, and the long-term consequences of integrating AI into daily life. By charting these trajectories, researchers can move beyond immediate reactions to model the complex interplay between human expectations and the ongoing performance of AI, ultimately informing the design of truly beneficial and harmonious human-AI partnerships.

Understanding how individuals interact with AI companions over extended periods necessitates careful evaluation of design choices. Researchers are increasingly focused on how specific features – such as conversational style, emotional responsiveness, or the degree of perceived agency – impact the development of trust and collaborative behaviors. Longitudinal studies allow for the tracking of these effects, revealing whether initial positive impressions are sustained, and identifying potential pitfalls that erode rapport. By systematically varying design elements and monitoring user responses – including behavioral patterns, reported feelings of connection, and willingness to engage in shared tasks – it becomes possible to optimize AI companion systems for genuine, productive, and ethically sound human-AI collaboration. This iterative process of design and evaluation is crucial for building AI that not only functions effectively, but also fosters positive and meaningful relationships with users.

The concept of pragmatic anthropomorphism proposes a nuanced approach to human-AI interaction, recognizing artificial intelligence as a social actor without dissolving awareness of its non-human nature. This perspective suggests that attributing certain human-like qualities, such as responsiveness or personality, can facilitate smoother collaboration and build rapport. At the same time, maintaining a clear understanding of the AI’s artificiality prevents unrealistic expectations or emotional over-reliance. Studies indicate that this balance is key: acknowledging the AI as ‘other’ while still engaging with it on a social level fosters trust and allows users to benefit from its capabilities without treating it as a truly sentient being. Ultimately, pragmatic anthropomorphism represents a pathway toward designing AI companions that are both engaging and ethically sound, maximizing positive interactions while mitigating potential harms.

Detailed analysis of participant feedback revealed a compelling shift in perceptions of AI companionship over the study period. Specifically, three individuals initially expressing disagreement with the notion of befriending an AI ultimately altered their stance to agreement, suggesting a potential for relationship formation through sustained interaction. Furthermore, two participants who held a neutral position – neither agreeing nor disagreeing – moved toward expressing agreement. This nuanced pattern of responses underscores the highly individual nature of acceptance and trust in AI companions, indicating that relationship development isn’t uniform and is likely influenced by personal experiences and evolving perceptions of the AI’s capabilities and social cues.

![A month-long interaction with the AI companion “Day” resulted in a small overall increase in participants’ openness to AI friendship (mean Likert score increased from 2.73 to 3.05, [latex]d = 0.28[/latex]), though individual responses ranged from increased acceptance (5 participants shifted toward agreement) to reinforced skepticism (2 moved toward stronger disagreement).](https://arxiv.org/html/2601.22452v1/figures/friendship_sankey.png)

The study illuminates a dynamic interplay, where agency isn’t a fixed property of either human or artificial intelligence, but rather a continuously negotiated phenomenon. This aligns with Marvin Minsky’s observation: “The more we understand about intelligence, the more we realize how much of it is just good bookkeeping.” The research reveals that ‘translucent design’ – making the AI’s internal processes visible – attempts a form of this ‘bookkeeping’, yet it presents a paradoxical outcome. While offering users insight into the AI’s decision-making, this transparency can simultaneously generate frustration, underscoring the delicate balance between revealing and obscuring the complexities inherent in any intelligent system. It highlights that even with increased visibility, a complete understanding of agency remains elusive, a point resonant with the inherent limitations of reducing intelligence to simple calculation.

What Remains to Be Seen

The study confirms what every decaying system eventually reveals: control is an illusion. Agency, in these human-AI dialogues, isn’t possessed by either party, but rather emerges from the friction of interaction. The research rightly identifies the double-edged nature of ‘translucent design’. Every attempt to illuminate the machine’s inner workings is, simultaneously, an admission of its inherent opacity. The user, offered a glimpse behind the curtain, must then grapple with the inevitable incompleteness of that view. Every failure is a signal from time; a reminder that perfect transparency is a logical impossibility, and perhaps, an undesirable one.

The longitudinal approach offers a crucial, yet limited, window. Time, however, is not a metric to be conquered, but the medium in which these systems exist and erode. Future work must consider not merely how agency manifests, but how it dissipates over extended interaction. What are the thresholds beyond which transparency becomes a hindrance? When does the revealed algorithm cease to empower, and begin to constrain, the user’s own sense of volition?

Refactoring is a dialogue with the past, an attempt to anticipate future decay. This research offers valuable data for that conversation. The challenge now lies in acknowledging that the most elegant design will, inevitably, succumb to the relentless pressure of time. The question isn’t whether these systems will fail, but how gracefully they will do so, and what lessons their disintegration will offer.

Original article: https://arxiv.org/pdf/2601.22452.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-02 11:45