Author: Denis Avetisyan

Researchers have developed a new AI framework that enables robots to perform complex, two-handed tasks with greater precision and adaptability.

DECO, a decoupled multimodal diffusion transformer, integrates vision, proprioception, and tactile sensing for improved bimanual dexterous manipulation and is evaluated on the new DECO-50 dataset.

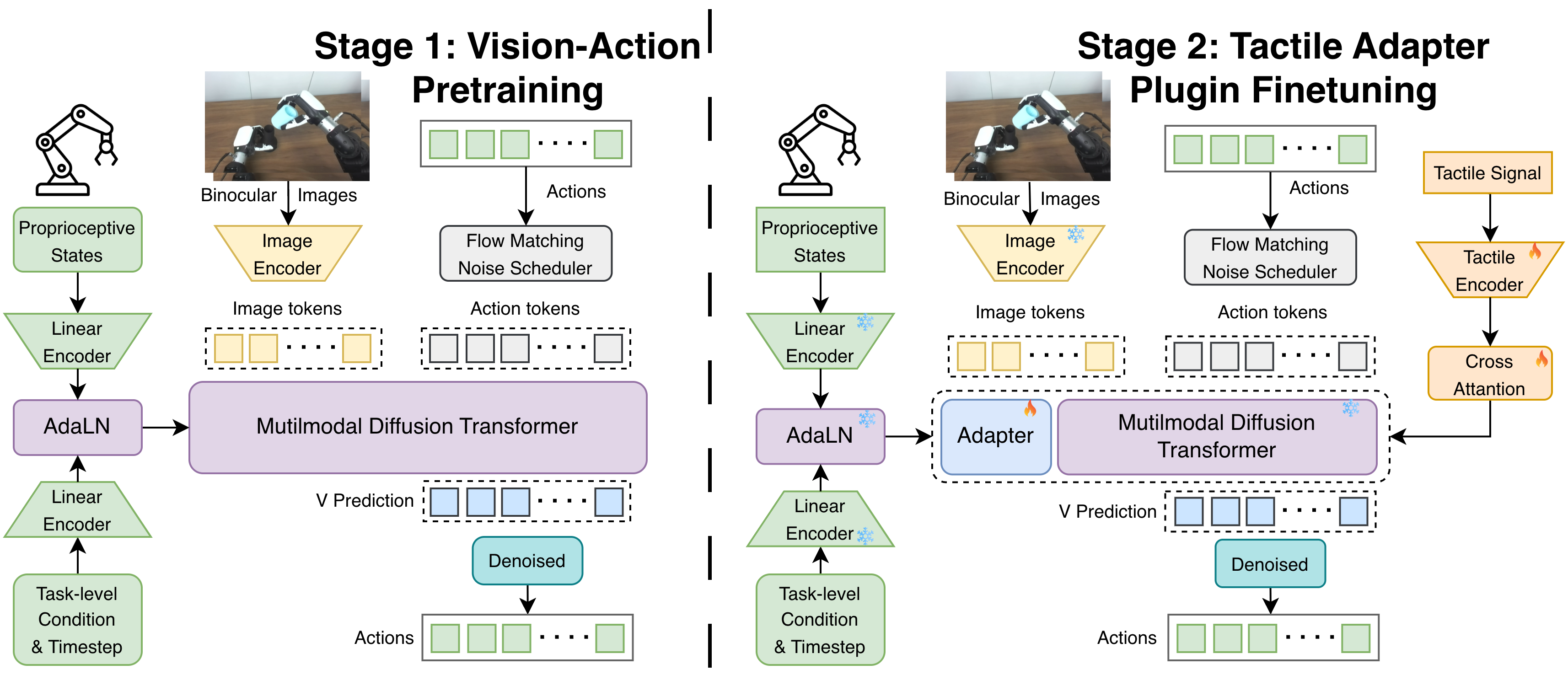

Achieving robust bimanual dexterous manipulation remains challenging because diverse sensory inputs are difficult to fuse effectively. This paper introduces DECO: Decoupled Multimodal Diffusion Transformer for Bimanual Dexterous Manipulation with a Plugin Tactile Adapter, a policy architecture that decouples multimodal conditioning to improve performance on contact-rich tasks. DECO integrates visual, proprioceptive, and tactile information through adaptive layer normalization and cross-attention. The authors also introduce the DECO-50 dataset, which comprises over 50 hours of bimanual manipulation data. Together, these contributions demonstrate enhanced dexterity and adaptability. Could this decoupled approach unlock new levels of autonomy for robots operating in complex, real-world environments?

The Challenge of Dexterous Robotic Control

The pursuit of truly dexterous robots encounters fundamental hurdles in replicating the intricate coordination and sensory processing of human hands. Achieving this level of control isn’t simply a matter of adding actuators or processing power. It demands a system that can manage many degrees of freedom at once while interpreting a constant stream of nuanced sensory data. Pre-programmed robotic movements are executed in static environments. Human dexterity, by contrast, relies on real-time adjustments informed by tactile, visual, and proprioceptive feedback, which lets us manipulate objects of varying shapes, weights, and fragility. Current robotic systems often lack this adaptability and struggle with unpredictable scenarios or delicate tasks. Part of the complexity lies in the mechanics of the hand itself. The rest lies in the computational challenge of integrating these diverse sensory inputs and translating them into precise, coordinated movements, a feat that continues to push the boundaries of robotics and artificial intelligence.

Historically, robotic control systems have been engineered around meticulously crafted models of the robot itself and its anticipated environment. This approach, while effective in highly structured settings like assembly lines, falters when confronted with the unpredictable nature of real-world scenarios. The reliance on pre-programmed movements means even minor deviations – an object slightly out of place, an unexpected surface texture – can disrupt operations and lead to failure. These systems struggle with adaptability because accurately modeling the infinite variations of an unstructured environment is computationally prohibitive and practically impossible. Consequently, robots operating outside of carefully controlled conditions often exhibit rigidity and a lack of finesse, highlighting the limitations of model-based control in dynamic, real-world applications.

A persistent limitation in robotic control stems from the difficulty of fusing information from multiple sensors, notably the crucial sense of touch. Robots can perceive their surroundings through vision and track their own joint configuration through proprioception. Replicating the nuanced feedback of tactile sensors, however, proves remarkably complex. Current systems frequently treat visual and tactile data as separate streams. This makes it harder for a robot to, for example, grasp a delicate object firmly yet gently or adapt to an unexpected surface texture. Such disjointed processing leads to clumsy manipulation and a reliance on pre-programmed motions, which limits adaptability in real-world scenarios where precision and responsiveness are paramount. Integrating tactile feedback effectively with other sensory inputs promises a new generation of robots capable of more fluid, reliable, and human-like interaction with their surroundings.

The pursuit of truly versatile robots necessitates a shift towards control systems capable of seamlessly integrating information from multiple sources. Current robotic manipulation often falters when faced with uncertainty or novelty, highlighting the limitations of relying on pre-programmed movements and precise environmental models. Instead, researchers are exploring paradigms that fuse visual, auditory, and, crucially, tactile feedback to create a more complete understanding of the robot’s surroundings and the objects it interacts with. This multi-modal approach allows for real-time adjustments to grip force, trajectory, and overall manipulation strategy, mirroring the nuanced control exhibited by humans. By effectively leveraging the synergy between these sensory inputs, robotic systems can move beyond rigid automation and achieve the adaptability required for complex tasks in unpredictable, real-world environments – ultimately paving the way for robots that can not only perform, but also learn and improvise.

DECO: A Foundation for Dexterous Manipulation

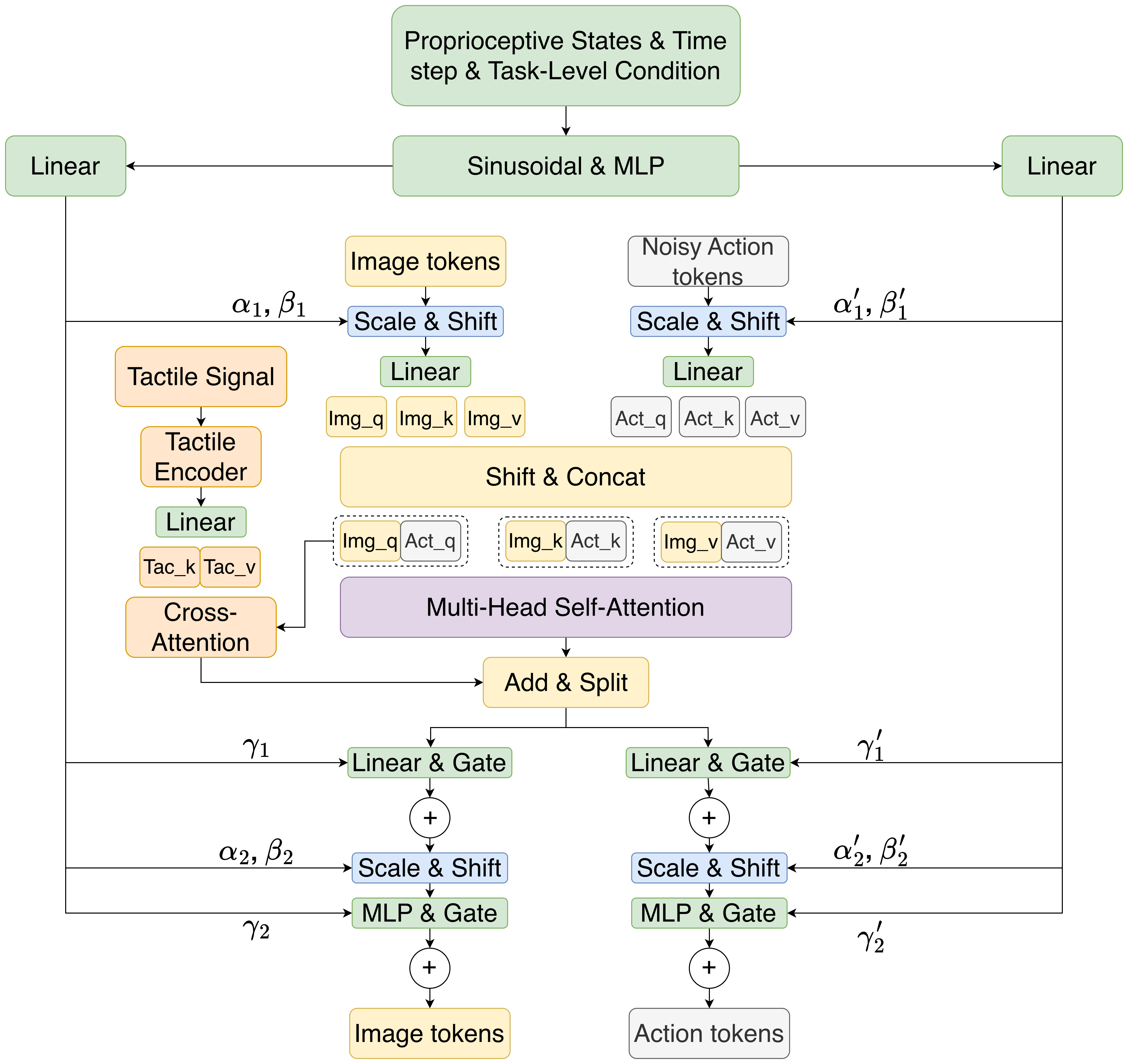

DECO builds on the Diffusion Transformer (DiT), a Transformer backbone for diffusion models originally developed for image generation. This departs from the recurrent or convolutional networks traditionally used in robotic manipulation. Here the DiT is adapted to generate future robot actions conditioned on observed states by progressively transforming noise into action sequences. This lets DECO model complex, multi-step manipulation tasks and generate diverse, plausible trajectories for both hands. The Transformer's attention mechanism captures long-range dependencies between robot states and actions, which matters in bimanual scenarios where the two hands must move in coordination. A Transformer also processes all tokens in parallel, so it trains more efficiently than sequential models and parallelizes well within each inference step.
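To make the long-range dependency point concrete, here is a minimal single-head self-attention in plain Python over a toy chunk of action tokens. It is a sketch under simplifying assumptions: identity query/key/value projections and made-up token values. A real DiT block adds learned projections, multiple heads, an MLP, and timestep conditioning, and nothing here is taken from DECO's code.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]

def softmax(xs: List[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

def self_attention(tokens: Matrix) -> Tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention with identity projections.

    Every output token is a weighted average of *all* input tokens, so the
    first and last steps of an action chunk can influence each other directly.
    """
    d = len(tokens[0])
    scale = 1.0 / math.sqrt(d)
    outputs, weights = [], []
    for q in tokens:
        w = softmax([dot(q, k) * scale for k in tokens])
        weights.append(w)
        outputs.append([sum(wi * v[j] for wi, v in zip(w, tokens)) for j in range(d)])
    return outputs, weights

# Hypothetical chunk of 4 action tokens (e.g. left/right-hand commands), width 3.
chunk = [[0.1, 0.0, 0.2], [0.3, -0.1, 0.0], [0.0, 0.5, 0.1], [0.2, 0.2, 0.2]]
out, attn = self_attention(chunk)
```

Every entry of `attn` is strictly positive, so each token's output mixes information from the whole chunk in a single layer. A recurrent model would instead have to pass that information step by step.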

DECO’s architecture processes visual, proprioceptive, and tactile inputs through separate pathways before combining them for action prediction. Each modality is encoded independently into its own conditioning signal. These signals are injected into the DiT through different mechanisms: adaptive layer normalization for proprioception and task conditions, and cross-attention for tactile features. Because the pathways are separate, a modality such as touch can be added to a trained model, or left out, without redesigning the rest of the architecture. This may also make the policy more tolerant of missing or unavailable sensor data. The learned attention weights let the model adjust how much it relies on each input, depending on the current task and environment.
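The routing idea can be sketched in a few lines. `Observation`, `Conditioning`, and `route` are hypothetical names, not DECO's API. Sending image tokens into the same cross-attention context as tactile tokens is an assumption made for brevity, since the article does not specify where visual features enter.

```python
from dataclasses import dataclass, field
from typing import List, Optional

Tokens = List[List[float]]

@dataclass
class Observation:
    # Hypothetical container; field names are illustrative only.
    proprio: List[float]                  # e.g. joint angles and velocities
    task: List[float]                     # e.g. an encoded task condition
    image_tokens: Optional[Tokens] = None
    tactile_tokens: Optional[Tokens] = None

@dataclass
class Conditioning:
    adaln_vector: List[float]             # later mapped to AdaLN scale/shift
    cross_attn_context: Tokens = field(default_factory=list)

def route(obs: Observation) -> Conditioning:
    """Send each modality down its own pathway and skip whatever is missing."""
    # Proprioception and task conditions form one vector for AdaLN.
    adaln_vector = list(obs.proprio) + list(obs.task)
    context: Tokens = []
    # Assumption: token-like modalities join a shared cross-attention
    # context only when they are actually present.
    for tokens in (obs.image_tokens, obs.tactile_tokens):
        if tokens:
            context.extend(tokens)
    return Conditioning(adaln_vector, context)
```

If the tactile sensor is absent, `route` simply returns an empty or smaller context, and the AdaLN pathway is unaffected. This is the practical payoff of keeping the pathways separate.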

Adaptive Layer Normalization (AdaLN) in DECO modulates the diffusion transformer’s activations according to the robot’s proprioceptive state and the task conditions. After layer normalization, each activation is scaled and shifted by parameters computed from these conditioning inputs. Separate multi-layer perceptrons (MLPs) process the proprioceptive data (joint angles, velocities) and the task conditions (goal pose, object properties) to produce the scale and shift parameters. Applying these parameters inside the normalization biases the transformer’s internal representations toward the current robot state and the desired task outcome. As a result, the model can adjust its behavior to real-time feedback and task requirements, which improves control stability and yields more accurate, robust manipulation.
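A minimal AdaLN sketch in plain Python follows. It rests on three assumptions. First, the conditioning inputs are already concatenated into one vector `cond`. Second, each MLP is reduced to a single linear layer. Third, the `(1 + scale)` form is used, a common DiT convention that the article does not confirm for DECO.

```python
import math
from typing import List

Vec = List[float]
Mat = List[List[float]]

def layer_norm(x: Vec, eps: float = 1e-5) -> Vec:
    # Normalize to zero mean and unit variance (no learned affine here).
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def linear(weights: Mat, bias: Vec, x: Vec) -> Vec:
    # weights holds one row per output feature.
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def ada_layer_norm(h: Vec, cond: Vec,
                   w_scale: Mat, b_scale: Vec,
                   w_shift: Mat, b_shift: Vec) -> Vec:
    """AdaLN: y = LN(h) * (1 + scale(cond)) + shift(cond)."""
    scale = linear(w_scale, b_scale, cond)  # per-feature scale from the condition
    shift = linear(w_shift, b_shift, cond)  # per-feature shift from the condition
    return [n * (1.0 + s) + t for n, s, t in zip(layer_norm(h), scale, shift)]
```

With all conditioning weights at zero, the layer reduces to plain layer normalization. This makes it easy to start from an unconditioned model and let training learn how much the robot state should steer each feature.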

DECO is trained with Rectified Flow Matching, which lets it learn a probability distribution over actions conditioned on observed states rather than a single regressed action. The model learns a velocity field that transports samples from a simple noise distribution to the distribution of demonstrated actions. During training, this velocity is regressed along straight-line paths between noise and data samples, which tends to be stable and sample-efficient. Because the learned paths are close to straight, actions can be generated with only a few integration steps at inference time, which matters for real-time control. By directly optimizing for the flow that maps noise to data, the framework can effectively learn complex manipulation strategies.
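The core of a rectified-flow objective fits in a few functions. This sketch assumes the convention t = 0 for noise and t = 1 for data (some papers reverse it). It omits the observation conditioning and the neural network: `predict_velocity` stands in for the trained policy and is a hypothetical name.

```python
from typing import Callable, List

Vec = List[float]
VelocityFn = Callable[[Vec, float], Vec]

def interpolate(noise: Vec, action: Vec, t: float) -> Vec:
    # Straight-line path between a noise sample (t=0) and a data sample (t=1).
    return [(1 - t) * n + t * a for n, a in zip(noise, action)]

def target_velocity(noise: Vec, action: Vec) -> Vec:
    # Along a straight path the velocity is constant: data minus noise.
    return [a - n for n, a in zip(noise, action)]

def flow_matching_loss(predict_velocity: VelocityFn, noise: Vec, action: Vec, t: float) -> float:
    """Mean squared error between predicted and target velocity at time t."""
    x_t = interpolate(noise, action, t)
    v_hat = predict_velocity(x_t, t)
    v = target_velocity(noise, action)
    return sum((p - q) ** 2 for p, q in zip(v_hat, v)) / len(v)

def euler_sample(predict_velocity: VelocityFn, noise: Vec, steps: int = 4) -> Vec:
    """Integrate dx/dt = v(x, t) from t=0 to t=1 with fixed Euler steps."""
    x, dt = list(noise), 1.0 / steps
    for i in range(steps):
        v = predict_velocity(x, i * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x
```

In training, `t` and the noise would be sampled randomly for each demonstrated action. At inference, `euler_sample` turns fresh noise into an action. The straighter the learned field, the fewer steps are needed to land close to the data.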

Tactile Feedback: Augmenting DECO’s Capabilities

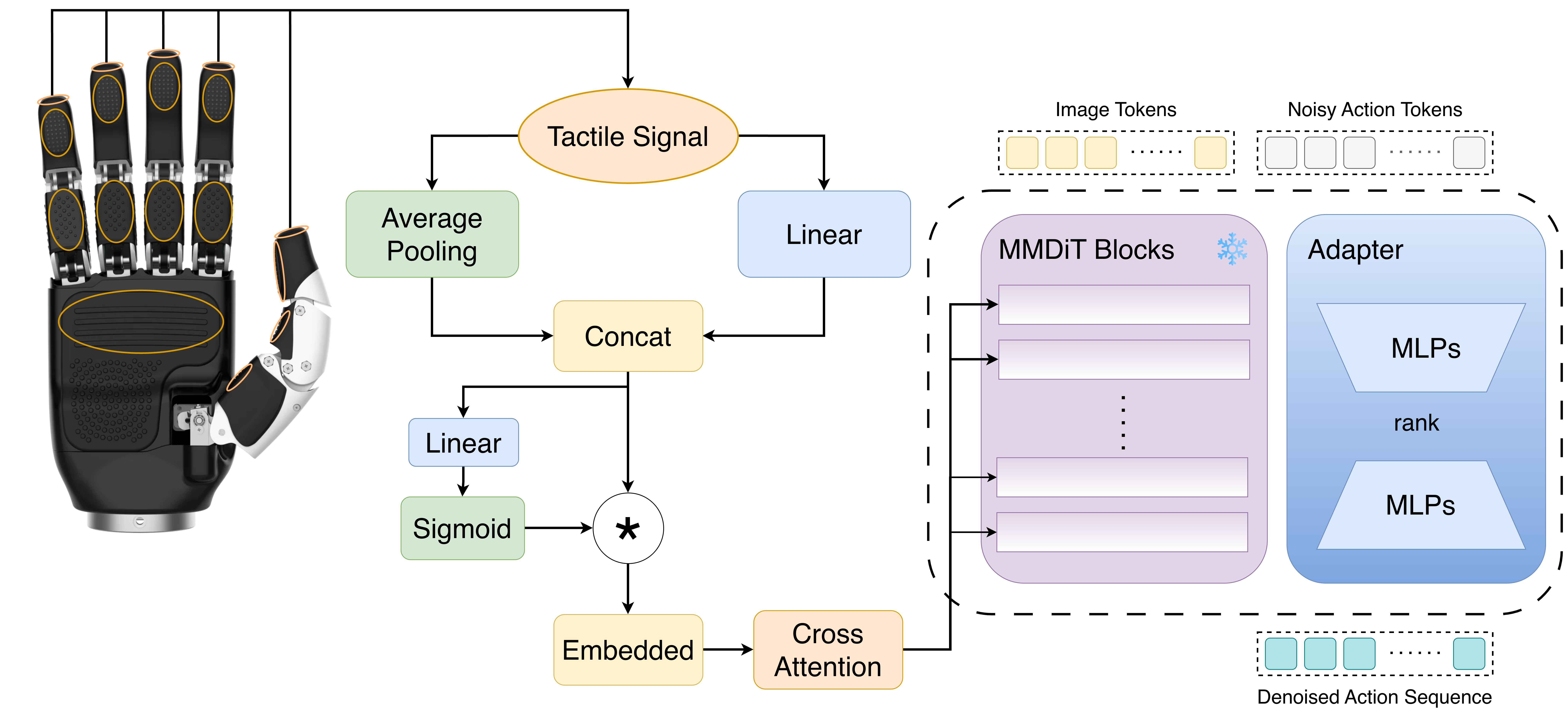

The Tactile Adapter incorporates tactile data into an existing, pre-trained DECO model without retraining it from scratch. It adds a small set of trainable parameters to the pre-trained architecture. This lets the model use touch where it helps most: tasks that demand fine motor control, or situations where visual information is limited. In the authors’ tests, adding the adapter raised success rates on complex manipulation tasks, indicating that tactile augmentation is effective for robotic systems.

The Tactile Adapter utilizes Cross Attention to integrate tactile data with the existing visual and proprioceptive feature spaces of the DECO model. This mechanism allows the model to selectively attend to relevant tactile information based on the current visual and proprioceptive context. Specifically, tactile features are projected into the same embedding space as the visual and proprioceptive features, and attention weights are computed to determine the contribution of each tactile feature to the overall representation. This fused representation then enables the DECO model to make more informed decisions, particularly in scenarios where visual information is insufficient or ambiguous, by effectively combining data from multiple sensory modalities.
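The cross-attention step can be sketched as follows. Queries come from the main token stream and keys/values come from tactile tokens, which are assumed to be already projected to the same width. For brevity the sketch skips the learned query/key/value projections and uses a single head, so it illustrates the mechanism rather than reproducing DECO's layer.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]

def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def cross_attention(queries: Matrix, context: Matrix) -> Tuple[Matrix, Matrix]:
    """Queries from the main (visual/proprioceptive/action) stream attend
    over tactile tokens that serve as both keys and values."""
    d = len(queries[0])
    out, weights = [], []
    for q in queries:
        # One weight per tactile token; the weights for each query sum to 1.
        w = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in context])
        weights.append(w)
        out.append([sum(wi * v[j] for wi, v in zip(w, context)) for j in range(d)])
    return out, weights

def add_residual(h: Matrix, delta: Matrix) -> Matrix:
    # The attended tactile information is added back onto the main stream.
    return [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(h, delta)]
```

A query that points in the same direction as a particular tactile token gives that token the largest weight. This is the "selective attention to relevant tactile information" described above, expressed as arithmetic.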

The Tactile Adapter uses Low-Rank Adaptation (LoRA) to cut the computational cost of tuning DECO on tactile data. LoRA freezes the pre-trained weights of the original DECO model and learns trainable low-rank matrices that approximate the weight updates. This keeps the trainable parameters below 10% of the total model parameters. The model can therefore adapt to tactile input without extensive computational resources and with less risk of catastrophically forgetting previously learned skills. Fewer trainable parameters also mean lower memory use and faster training, which makes it practical to add touch to an existing policy on modest hardware.
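Here is a minimal LoRA linear layer in plain Python, following the standard formulation y = W x + (alpha / r) · B A x. The deterministic initialization of `A` and the class name `LoRALinear` are illustrative choices for this sketch, not details of DECO's adapter.

```python
from typing import List

Vec = List[float]
Mat = List[List[float]]

def matvec(m: Mat, x: Vec) -> Vec:
    return [sum(a * b for a, b in zip(row, x)) for row in m]

class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update B @ A.

    y = W x + (alpha / r) * B (A x), with A: r x d_in and B: d_out x r.
    B starts at zero, so before training the layer reproduces the frozen output.
    """
    def __init__(self, W: Mat, r: int, alpha: float = 1.0):
        self.W = W                          # frozen pre-trained weight
        d_out, d_in = len(W), len(W[0])
        self.r, self.scaling = r, alpha / r
        # Small deterministic init for A (real code would use a random init).
        self.A = [[0.01 * (i + j + 1) for j in range(d_in)] for i in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]

    def __call__(self, x: Vec) -> Vec:
        base = matvec(self.W, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scaling * u for b, u in zip(base, update)]

    def trainable_params(self) -> int:
        return self.r * len(self.W[0]) + len(self.W) * self.r

    def frozen_params(self) -> int:
        return len(self.W) * len(self.W[0])
```

For a single 1024 × 1024 layer with r = 8, the trainable update has 8·1024 + 1024·8 = 16,384 parameters against 1,048,576 frozen ones, roughly 1.6%. The zero-initialized `B` means attaching the adapter does not change the pre-trained behavior until training starts.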

The DECO framework’s modular design separates the tactile adaptation components from the core model, which eases deployment across robotic systems and manipulation tasks. Specific tactile features can be enabled or disabled, and tactile processing modules swapped, without retraining the entire model. In principle, this makes it easier to carry DECO over to robots with different sensor configurations and end-effector designs. A change in degrees of freedom, however, would still alter the action space the core policy must predict. The same modularity extends to different manipulation scenarios, including in-hand manipulation, assembly tasks, and contact-rich interactions, by modifying or adding task-specific tactile processing modules.
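The plug-in idea can be illustrated with a toy policy. `base_policy`, `TactilePlugin`, and `act` are hypothetical stand-ins. The zero-initialized scalar gate is a common design choice for bolt-on modules, and the article does not attribute it to DECO.

```python
from typing import Callable, List, Optional

Vector = List[float]

def base_policy(features: Vector) -> Vector:
    # Stand-in for a frozen, pre-trained policy head (hypothetical).
    return [0.5 * f for f in features]

class TactilePlugin:
    """Hypothetical plug-in: a residual correction scaled by a learned gate.

    With gate = 0, attaching the plugin leaves the base output unchanged.
    """
    def __init__(self, correction: Callable[[Vector, Vector], Vector], gate: float = 0.0):
        self.correction = correction
        self.gate = gate

    def apply(self, action: Vector, tactile: Vector) -> Vector:
        delta = self.correction(action, tactile)
        return [a + self.gate * d for a, d in zip(action, delta)]

def act(features: Vector,
        tactile: Optional[Vector] = None,
        plugin: Optional[TactilePlugin] = None) -> Vector:
    action = base_policy(features)
    # The tactile branch runs only when both sensor data and a plugin exist.
    if plugin is not None and tactile is not None:
        action = plugin.apply(action, tactile)
    return action
```

Disabling touch here means passing no plugin or no tactile reading. Either way, the base policy runs exactly as it did before the plugin existed.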

DECO-50: A Benchmark for Tactile-Augmented Dexterity

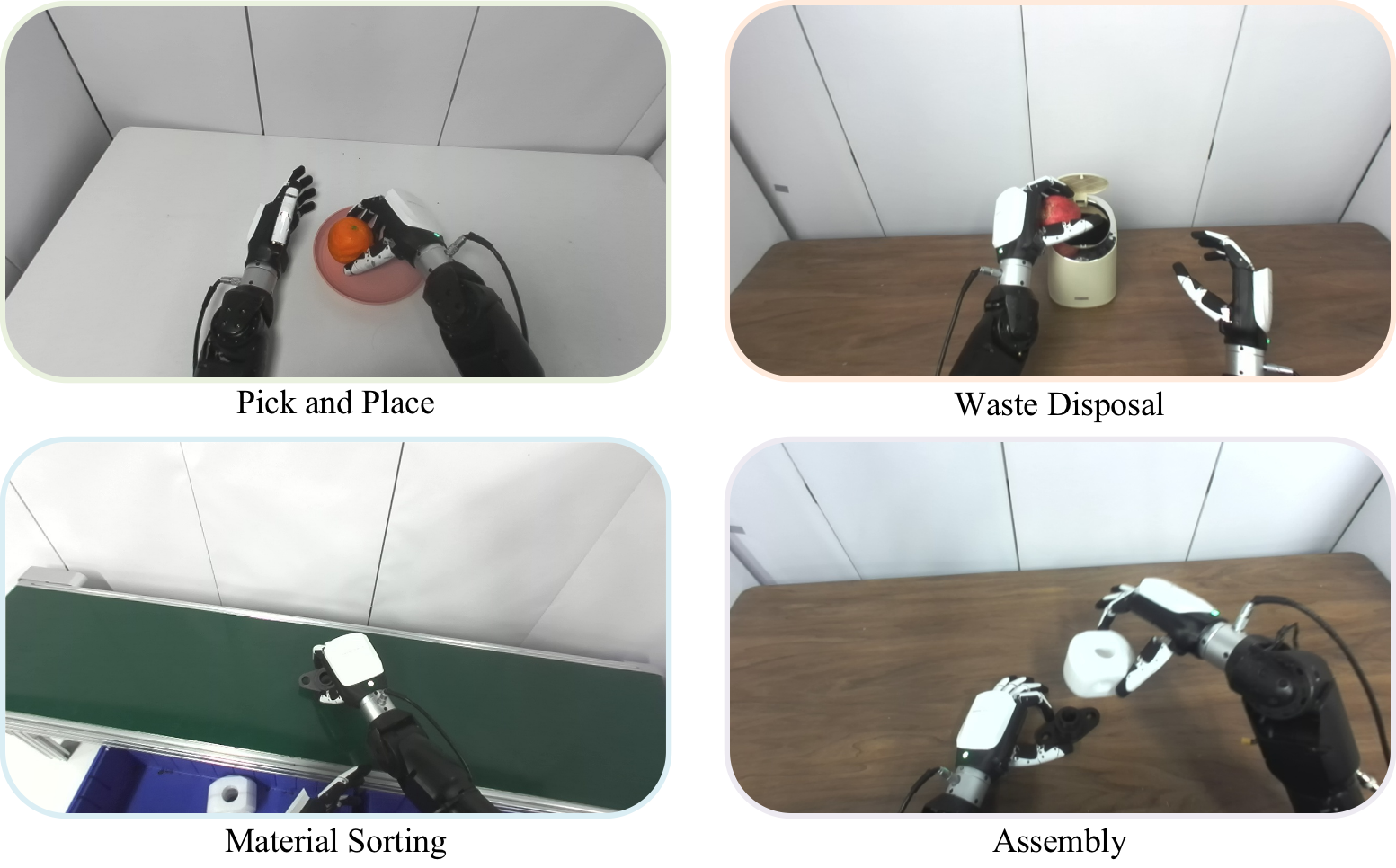

The DECO-50 dataset is a significant advance in benchmarking robotic dexterity, comprising four distinct scenarios and a total of 28 sub-tasks. It is built specifically to evaluate a robot’s ability to perform complex, two-handed manipulation while actively using tactile sensing. Rather than isolated simple actions, DECO-50 poses tasks that demand precise coordination, force control, and adaptability, reflecting real-world activities such as assembly, tool use, and object rearrangement. The tasks are deliberately hard enough to expose the limitations of current control algorithms, and the dataset provides a standardized platform for measuring progress in tactile-augmented manipulation.

The dataset’s difficulty is intentional. Its sub-tasks target specific weaknesses in current systems, such as grasp planning, in-hand manipulation, and responding to unexpected contact forces. Performance on DECO-50 therefore serves as a stringent benchmark that shows where robotic control must improve to handle real-world tasks robustly and precisely. That difficulty is not a flaw but the dataset’s defining characteristic, and it is meant to act as a catalyst for innovation in robotic manipulation.

Rigorous testing on the DECO-50 dataset reveals a marked performance increase when the DECO system is enhanced with a Tactile Adapter. Specifically, the integration of tactile sensing enables substantially improved results compared to standard robotic control approaches across the dataset’s diverse challenges. Notable gains are demonstrated in complex tasks, such as Stage 3 of the Waste Disposal scenario, where the system exhibits enhanced precision and adaptability. This suggests that tactile feedback is crucial for achieving robust bimanual manipulation, allowing robots to better interpret object properties and refine their actions in dynamic environments, ultimately pushing the boundaries of robotic dexterity.

The release of the DECO-50 dataset gives the robotics community a large resource for developing and evaluating bimanual dexterous manipulation skills. Comprising over 5 million video frames and 8,000 successful task trajectories, the collection supports rigorous testing of robotic control algorithms in complex scenarios. As a benchmark for tactile-augmented dexterity, DECO-50 quantifies how existing systems perform and provides a common reference point for measuring new ones. This encourages work toward more robust and versatile robots that can reliably perform intricate manipulation tasks across a range of industries and environments.
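A back-of-the-envelope reading of these figures helps put them in scale. It uses the 5 million frames and 8,000 trajectories quoted here, together with the 50 hours of data cited in the introduction, and treats all three as exact. It also assumes each frame is one synchronized time step. If frames are counted per camera, the per-step rate would be lower, and if the 50 hours include unsuccessful attempts, the per-trajectory duration below is an overestimate.

```latex
\begin{aligned}
\text{frame rate} &\approx \frac{5\times10^{6}\ \text{frames}}{50 \times 3600\ \text{s}}
  = \frac{5\times10^{6}}{1.8\times10^{5}\ \text{s}} \approx 27.8\ \text{frames/s} \\
\text{frames per trajectory} &\approx \frac{5\times10^{6}}{8000} = 625 \\
\text{duration per trajectory} &\approx \frac{1.8\times10^{5}\ \text{s}}{8000} = 22.5\ \text{s}
\end{aligned}
```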

The development of DECO highlights a crucial tenet of robust system design: interconnectedness. Just as a single failing component can disrupt an entire organism, DECO’s decoupled multimodal approach recognizes that effective bimanual manipulation isn’t solely a matter of vision or proprioception, but the synergistic fusion of these inputs alongside tactile sensing. Vinton Cerf aptly observed, “Technology is meant to make life easier, not more complex.” DECO embodies this principle by streamlining the integration of diverse sensory data, ultimately simplifying the challenge of dexterous robotic control and promoting a more holistic understanding of manipulation tasks – mirroring the elegance that emerges from clarity and well-defined structure.

What Lies Ahead?

The pursuit of truly dexterous manipulation inevitably reveals the brittleness inherent in systems assembled from isolated components. DECO’s decoupling of modalities represents a step towards addressing this, yet the architecture still treats perception as a pipeline to action, rather than an interwoven, continuously refined understanding of the environment. Scalable dexterity won’t emerge from ever-larger datasets, but from a fundamental shift in how information is represented – a move away from feature vectors and towards systems that internalize constraints and affordances.

The DECO-50 dataset, while a valuable contribution, is also a reminder that any fixed benchmark captures only part of real-world contact. Contact is messy and unpredictable, and it often violates the assumptions built into models and simulated environments. Future work must prioritize data efficiency (learning from fewer, more representative examples) and focus on developing robust generalization capabilities. The challenge isn’t simply to perceive contact but to anticipate it, building a predictive model of interaction that allows the system to navigate uncertainty.

Ultimately, the question isn’t whether a robot can perform a specific manipulation task, but whether it can adapt to any task within its physical capabilities. This demands a move beyond task-specific training and towards systems that learn a general representation of manipulation primitives – a foundational ‘understanding’ of how objects interact, independent of the specific goal. The elegance of a solution will be measured not by its complexity, but by its simplicity – the fewest assumptions necessary to achieve robust, adaptable behavior.

Original article: https://arxiv.org/pdf/2602.05513.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-08 09:04