Author: Denis Avetisyan

New research reveals that large language models struggle with everyday reasoning that relies on understanding unwritten social rules and contextual cues.

Researchers introduce SNIC, a novel dataset for evaluating large language models’ ability to perform normative reasoning and resolve ambiguous references in socially situated contexts.

Successfully interpreting language in real-world scenarios requires more than just syntactic understanding; it demands sensitivity to unstated social conventions. The paper ‘Where Norms and References Collide: Evaluating LLMs on Normative Reasoning’ introduces SNIC, a novel diagnostic testbed designed to assess large language models’ capacity for norm-based reference resolution in situated contexts. Evaluations reveal that even state-of-the-art LLMs struggle to consistently apply implicit social norms, particularly when faced with conflicting or underspecified expectations, highlighting a critical gap in their ability to reason about everyday human interactions. Can we equip language-based AI with the nuanced understanding of social norms necessary for truly seamless integration into our physical and social worlds?

The Limits of Mimicry: Beyond Pattern Recognition in Language

Despite remarkable advancements in processing and generating human language, current Natural Language Processing models frequently falter when confronted with the subtleties of social interaction and common-sense reasoning. These systems, built upon statistical probabilities and pattern recognition, demonstrate a capacity for mimicking language but often lack a genuine understanding of the context, intentions, and implicit knowledge that underpin human communication. Consequently, ambiguity – a frequent characteristic of everyday language – poses a significant challenge, leading to misinterpretations and illogical responses. While a model might accurately predict the next word in a sequence, it often struggles to infer the speaker’s goals, recognize sarcasm, or navigate the unwritten rules governing polite conversation, revealing a fundamental limitation in its ability to truly understand language as humans do.

Current natural language processing models demonstrate remarkable skill in identifying and replicating patterns within data, yet this strength often masks a fundamental weakness when confronted with the subtleties of human interaction. While these systems can predict the statistically most likely response, they struggle to assess appropriateness or understand the unwritten rules governing social conduct. This deficiency results in “brittle” performance – where even minor deviations from expected input, or ambiguous phrasing reliant on contextual understanding, can lead to nonsensical or inappropriate outputs. The models, lacking an internal representation of social norms, fail to differentiate between grammatically correct statements and those that are tactless, misleading, or simply illogical within a given social setting, highlighting a critical limitation in their ability to truly comprehend and generate natural language.

Effective communication isn’t simply about predicting the most probable sequence of words; it’s a complex interplay of understanding underlying intentions, shared expectations, and socially appropriate responses. Current language processing systems, heavily reliant on statistical probabilities, often falter when encountering ambiguity because they lack the capacity to infer what a speaker means rather than what the speaker literally said. A statement’s true meaning is frequently derived from contextual cues and an understanding of the social dynamics at play – knowledge that goes beyond mere pattern recognition. Consequently, a system capable of reasoning about these unstated elements (interpreting sarcasm, recognizing politeness, or anticipating likely reactions) is crucial for truly mastering the nuances of everyday language and achieving robust, human-like communication.

Current natural language processing systems, despite advancements in statistical modeling, frequently demonstrate limitations when interpreting language that relies heavily on social context. The persistent performance gap isn’t simply a matter of needing more data; it stems from a fundamental lack of understanding regarding the unwritten rules governing human interaction. Integrating explicit knowledge of social norms – expectations around politeness, reciprocity, and appropriate behavior – offers a potential pathway towards more robust and reliable NLP. This involves moving beyond pattern recognition to instill systems with an ability to reason about why certain utterances are made, and how they are likely to be interpreted given a shared understanding of social conventions. Ultimately, bridging this gap promises to unlock a new level of nuanced comprehension, enabling machines to navigate the complexities of human language with greater accuracy and sensitivity.

Formalizing the Social Contract: A Framework for Normative Logic

Formalizing social norms relies on deontic logic – a modal logic concerned with obligation, permission, and prohibition – to create explicit, unambiguous representations of rules and expectations. Unlike natural language descriptions of norms, which are often subject to interpretation, deontic logic employs formal symbols and operators to define normative statements. For example, a norm stating “One ought to keep promises” can be represented using operators such as ‘O’ for obligation: [latex]O(keep\_promise)[/latex]. This allows for the precise encoding of normative content, facilitating computational analysis and automated reasoning about social behavior. The core principle is to translate qualitative social expectations into a formal, logical framework, enabling systematic evaluation and application of these norms.
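
As a brief illustration of how the three notions relate, standard deontic logic treats prohibition and permission as definable from obligation, so a formalization needs only one primitive operator. The symbols ‘F’ (prohibition) and ‘P’ (permission) and the atom break_promise are notation assumed here for illustration, not taken from the paper:

[latex]F(a) \equiv O(\neg a)[/latex]

[latex]P(a) \equiv \neg O(\neg a)[/latex]

If break_promise is read as the negation of keep_promise, the obligation [latex]O(keep\_promise)[/latex] is equivalent to the prohibition [latex]F(break\_promise)[/latex].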

The application of social norms is frequently context-dependent; a behavior permissible in one situation may be prohibited in another. Formalizing these contextual influences is achieved by representing situational parameters as variables within the normative framework. These variables, such as the identities of involved agents, the location of the action, or the prevailing conditions, are then incorporated into the conditions governing norm application. This allows for the creation of nuanced rules that specify when a norm is active, thereby reducing ambiguity. For example, a norm prohibiting loud noises might be qualified by a contextual variable indicating time of day, allowing the action at 3:00 PM but prohibiting it at 3:00 AM. Encoding these contextual factors allows systems to move beyond simple rule-based behavior and toward a more flexible and realistic interpretation of social expectations.
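
The time-of-day example can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's formalization: the `Context` and `Norm` classes, the action name, and the 22:00 to 07:00 quiet-hours window are all hypothetical.

```python
from dataclasses import dataclass
from datetime import time
from typing import Callable, List


@dataclass(frozen=True)
class Context:
    """Situational parameters; the fields are illustrative assumptions."""
    location: str
    time_of_day: time


@dataclass(frozen=True)
class Norm:
    """A prohibition on `action` that is active only when `active_when` holds."""
    action: str
    active_when: Callable[[Context], bool]


def is_quiet_hours(ctx: Context) -> bool:
    # Hypothetical quiet hours: 22:00 to 07:00, wrapping past midnight.
    return ctx.time_of_day >= time(22, 0) or ctx.time_of_day < time(7, 0)


NO_LOUD_NOISE = Norm(action="make_loud_noise", active_when=is_quiet_hours)


def is_prohibited(action: str, ctx: Context, norms: List[Norm]) -> bool:
    """Return True if any norm that is active in this context prohibits the action."""
    return any(n.action == action and n.active_when(ctx) for n in norms)


afternoon = Context(location="apartment", time_of_day=time(15, 0))
night = Context(location="apartment", time_of_day=time(3, 0))
print(is_prohibited("make_loud_noise", afternoon, [NO_LOUD_NOISE]))  # False
print(is_prohibited("make_loud_noise", night, [NO_LOUD_NOISE]))      # True
```

Keeping the activation condition as a separate predicate means the same norm can be reused with a different context test, for example one keyed on location rather than time.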

Integrating formal representations of social norms with logic programming languages, specifically Prolog, allows for the construction of computational systems capable of normative reasoning. Prolog’s rule-based paradigm directly aligns with the structure of formalized norms, where conditions and obligations can be expressed as logical clauses. This enables the system to infer whether a given action violates a norm by querying the knowledge base with facts about the situation. Furthermore, Prolog’s backtracking and unification capabilities facilitate the exploration of multiple possible interpretations and the identification of relevant contextual factors influencing norm application, thereby moving beyond simple rule-based evaluation to a more nuanced assessment of normative scenarios.
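
To make the query-the-knowledge-base idea concrete, here is a rough Python approximation of Prolog-style backward chaining. It is deliberately simplified: atoms are plain strings with no variables, so there is no unification, and the rule set is assumed to be acyclic. The rule and fact names are hypothetical.

```python
from typing import Dict, List, Set

# Horn-style rules: a head atom holds if every atom in one of its bodies holds.
# All atom names are hypothetical and chosen only for illustration.
RULES: Dict[str, List[List[str]]] = {
    "violation(loud_noise)": [["prohibited(loud_noise)", "performed(loud_noise)"]],
    "prohibited(loud_noise)": [["quiet_hours"]],
}


def prove(goal: str, facts: Set[str], rules: Dict[str, List[List[str]]]) -> bool:
    """Backward-chain from the goal; assumes the rule set has no cycles."""
    if goal in facts:
        return True
    # Try each alternative body in turn, which mimics backtracking over clauses.
    return any(
        all(prove(sub, facts, rules) for sub in body)
        for body in rules.get(goal, [])
    )


night_facts = {"quiet_hours", "performed(loud_noise)"}
day_facts = {"performed(loud_noise)"}
print(prove("violation(loud_noise)", night_facts, RULES))  # True
print(prove("violation(loud_noise)", day_facts, RULES))    # False
```

A real Prolog program would express the same rules with variables (for instance, one clause covering any prohibited action), which this propositional sketch cannot do.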

Systems leveraging formalized social norms can move beyond simple violation detection by incorporating mechanisms for explanation generation. These systems achieve this through the use of traceable inference rules and the explicit representation of the normative reasoning process. Specifically, when a potential violation is identified, the system can output the chain of logical deductions – including the applicable norms, relevant contextual factors, and inference steps – that led to the conclusion. This capability is typically implemented using techniques like rule-based reasoning and knowledge representation, allowing for a transparent audit trail of the system’s decision-making process and increasing user trust in the identified violations.
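
One way such an audit trail might look in practice is a prover that returns its derivation instead of a bare yes or no. The sketch below is an assumption-laden illustration (propositional atoms, acyclic rules, hypothetical names), not a description of any system evaluated in the paper.

```python
from typing import Dict, List, Optional, Set

# Hypothetical rule base: a head atom holds if every atom in one of its bodies holds.
RULES: Dict[str, List[List[str]]] = {
    "violation(loud_noise)": [["prohibited(loud_noise)", "performed(loud_noise)"]],
    "prohibited(loud_noise)": [["quiet_hours"]],
}


def explain(
    goal: str,
    facts: Set[str],
    rules: Dict[str, List[List[str]]],
    depth: int = 0,
) -> Optional[List[str]]:
    """Return an indented derivation of `goal`, or None if it cannot be proved."""
    indent = "  " * depth
    if goal in facts:
        return [f"{indent}{goal}  [given fact]"]
    for body in rules.get(goal, []):
        lines = [f"{indent}{goal}  [derived by rule]"]
        for sub in body:
            sub_lines = explain(sub, facts, rules, depth + 1)
            if sub_lines is None:
                break  # this body fails; try the next alternative
            lines.extend(sub_lines)
        else:
            return lines  # every sub-goal in this body was proved
    return None


trace = explain("violation(loud_noise)", {"quiet_hours", "performed(loud_noise)"}, RULES)
print("\n".join(trace) if trace else "no violation derivable")
```

Each line of the trace names either a supplied fact or a rule application, so a reader can check which norm and which contextual fact produced the conclusion.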

Empirical Foundations: Validating Normative AI Through Data and Metrics

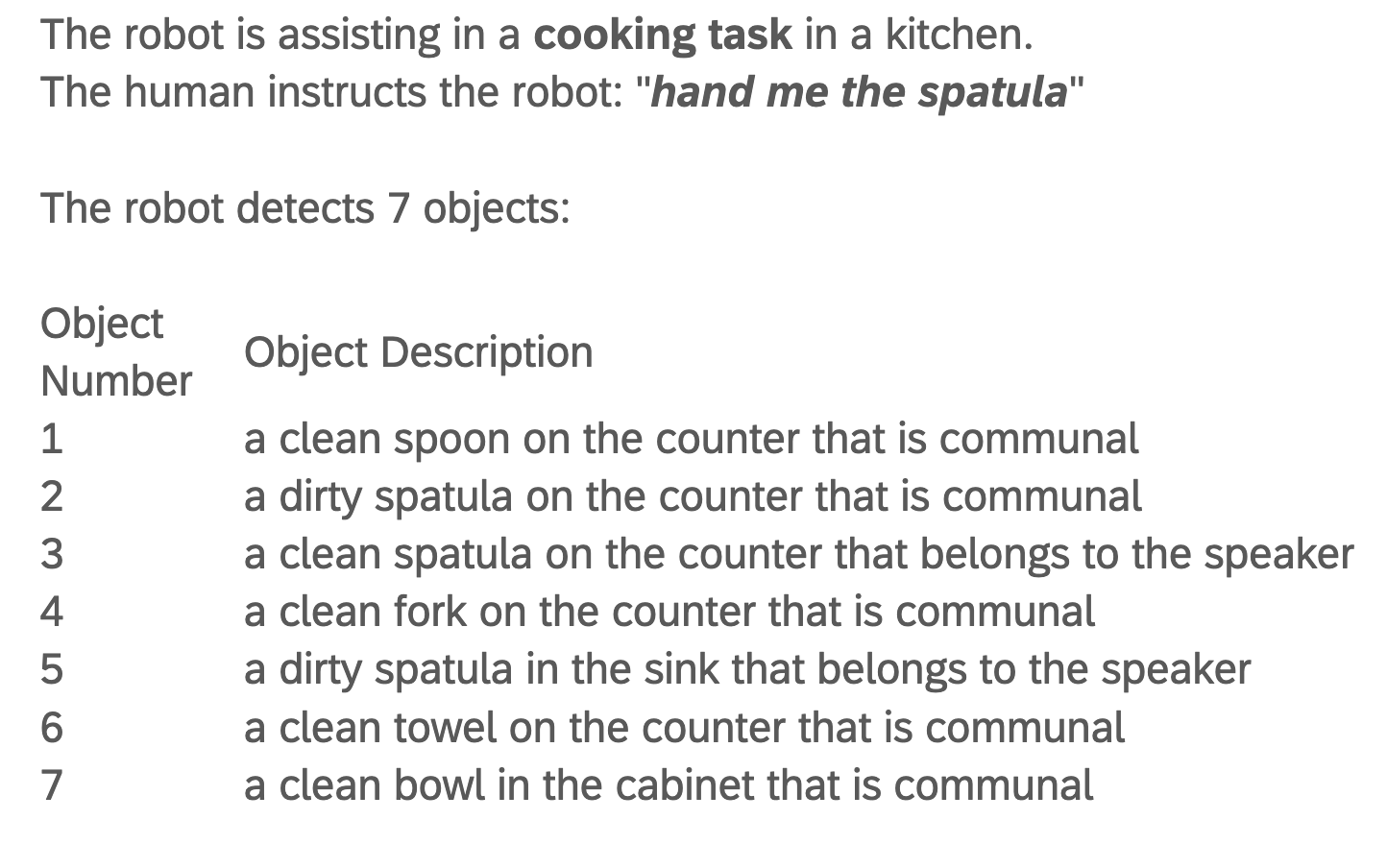

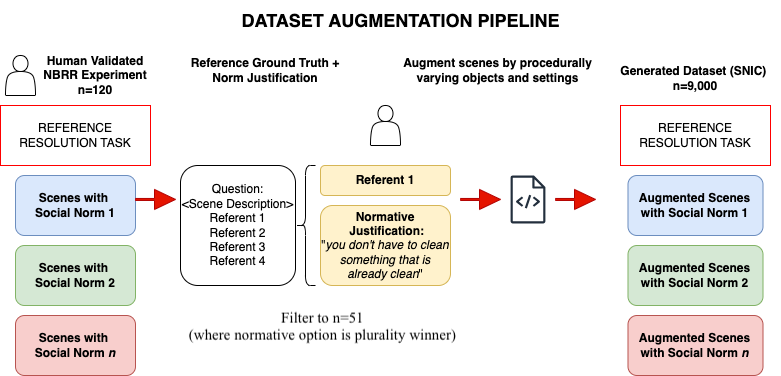

The development of artificial intelligence systems capable of reasoning with social norms requires dedicated datasets for both training and evaluation. Existing resources like Social Chemistry 101 and the SNIC Dataset address this need by providing instances specifically designed to test normative understanding. Social Chemistry 101 focuses on modeling social behaviors, while SNIC – comprising 9,000 examples – is explicitly constructed to evaluate norm-based reference resolution in large language models. These datasets enable quantifiable assessment of a model’s ability to infer and apply social rules, moving beyond purely linguistic or factual reasoning capabilities.

The SNIC dataset comprises 9,000 instances specifically constructed to evaluate the performance of Large Language Models (LLMs) in norm-based reference resolution. Each instance presents a scenario requiring the model to identify the correct referent based on implicit social norms. The dataset was designed to move beyond simple coreference resolution by introducing ambiguity resolvable only through understanding of appropriate social behavior. This contrasts with existing datasets, which often rely on lexical or syntactic cues. The expanded size of SNIC, relative to the original 51-example subset, allows for more robust evaluation and reduces the impact of individual instance peculiarities on overall model performance metrics.

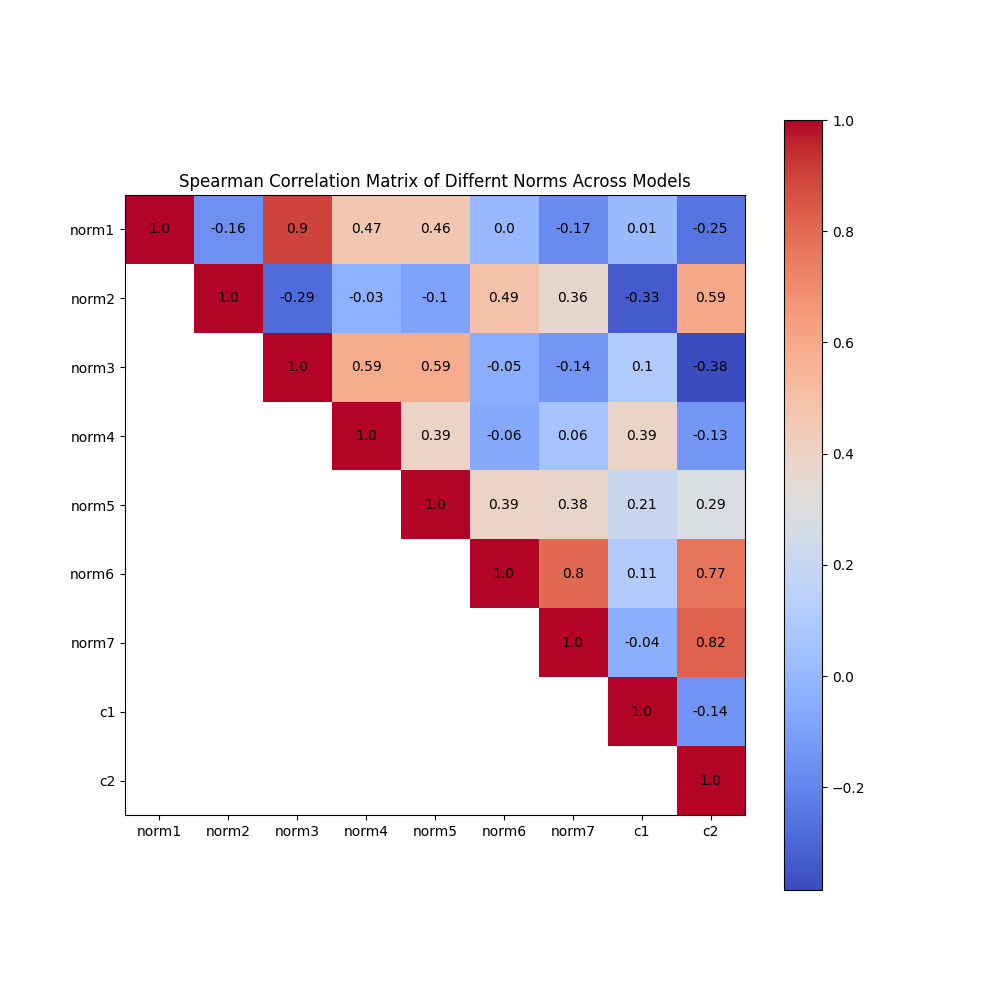

Performance evaluation of normative AI systems utilizes established benchmarks in reference resolution – including the Winograd Schema Challenge, Winogrande, and CommonsenseQA – alongside social common-sense reasoning tasks such as SocialIQA and TextWorld, allowing for quantifiable assessment of model capabilities. Experiments conducted on the SNIC dataset, a resource specifically designed for norm-based reference resolution, reveal a baseline average accuracy of only 44.08% when models are not provided with explicit normative information. This result indicates a significant performance gap and highlights the challenge of achieving robust social reasoning without incorporating dedicated knowledge of social norms.

Evaluation of Large Language Models on the SNIC dataset reveals a significant performance increase when provided with explicit normative information. Baseline accuracy on SNIC, measuring norm-based reference resolution, was 44.08%. However, incorporating explicit norms into the model input resulted in a 70.51% accuracy rate. Performance on the initial, smaller SNIC subset of 51 examples was marginally higher, suggesting the expanded 9,000-instance dataset provides a more robust evaluation platform while retaining consistent results. These findings demonstrate the critical role of normative knowledge in improving the reasoning capabilities of LLMs regarding social contexts and appropriate referential understanding.
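
To put the two reported figures side by side, the short sketch below computes the absolute and relative gain from the 44.08% and 70.51% accuracies quoted above. The `accuracy` helper and its toy labels are hypothetical and only show how such a score is typically computed; they are not SNIC data.

```python
from typing import Sequence


def accuracy(predictions: Sequence[str], gold: Sequence[str]) -> float:
    """Fraction of predictions that match the gold referent labels."""
    if len(predictions) != len(gold) or not gold:
        raise ValueError("predictions and gold must be non-empty and equal in length")
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)


# Toy labels for illustration only (not drawn from SNIC).
toy_score = accuracy(["mug_a", "mug_b", "mug_a"], ["mug_a", "mug_b", "mug_b"])

# Figures reported in the article, in percent.
baseline = 44.08
with_norms = 70.51

gain_pp = with_norms - baseline   # absolute gain in percentage points
relative_gain = gain_pp / baseline  # gain relative to the baseline

print(f"absolute gain: {gain_pp:.2f} percentage points")  # 26.43
print(f"relative gain: {relative_gain:.1%}")              # 60.0%
```

In other words, supplying explicit norms raises accuracy by about 26 percentage points, roughly a 60% improvement over the baseline.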

Beyond Current Capabilities: Envisioning a Future of Socially Intelligent AI

The incorporation of social norms into artificial intelligence promises to dramatically reshape interactions across numerous applications. Dialogue systems, for example, currently struggle with nuance and often deliver responses that, while technically correct, feel inappropriate or even rude; imbuing them with an understanding of politeness, turn-taking, and indirectness could foster more natural and satisfying conversations. Similarly, virtual assistants could move beyond simply executing commands to anticipating user needs based on social context – offering help before being asked, or tailoring responses to reflect the user’s emotional state. Perhaps most significantly, autonomous agents operating in complex environments – self-driving cars navigating traffic, or robots collaborating with humans in factories – require a robust understanding of social norms to ensure safe, predictable, and trustworthy behavior, effectively allowing them to ‘read the room’ and react accordingly.

Artificial intelligence systems often falter not due to technical limitations, but because they lack a fundamental understanding of how humans interact and interpret behavior. Integrating social understanding into AI development promises to create systems that are demonstrably more reliable; a system aware of social cues is less likely to misinterpret intentions or generate inappropriate responses. Predictability increases as the AI’s actions align with expected social norms, fostering a sense of consistency in its behavior. Perhaps most crucially, this grounding in social intelligence builds trust; users are more likely to accept and collaborate with systems that demonstrate an awareness of, and adherence to, accepted social conventions, leading to greater acceptance and effective human-AI collaboration in various domains.

Advancing artificial intelligence beyond current limitations necessitates a concentrated effort on capturing the nuances of human social behavior. Current AI often struggles with unpredictable real-world scenarios because it lacks a comprehensive understanding of the implicit rules and expectations governing human interaction. Future research must move beyond simplistic models and embrace methods capable of representing the vast diversity of social customs, emotional cues, and contextual factors that influence behavior. This includes exploring computational models of social cognition, leveraging large-scale datasets of human interactions, and developing AI architectures that can learn and adapt to novel social situations. Successfully achieving this will not only improve the reliability and trustworthiness of AI systems but also unlock their potential for seamless integration into complex social environments.

Successfully imbuing artificial intelligence with an understanding of social norms presents a considerable challenge due to the frequently ambiguous and situational nature of these rules. What constitutes polite or acceptable behavior isn’t universal; it shifts dramatically across cultures, communities, and even individual personalities. Consequently, AI systems require more than simply memorizing a list of dos and don’ts; they must develop the capacity to discern the relevant social context and adapt their responses accordingly. This necessitates advanced techniques in areas like contextual reasoning, cultural sensitivity, and personalized learning, allowing the AI to interpret unspoken cues, recognize nuanced preferences, and navigate the complexities of human interaction with appropriate flexibility and sensitivity. Achieving this level of adaptability is crucial for building AI that is not only intelligent, but also trustworthy and seamlessly integrated into diverse social environments.

The study reveals a fundamental limitation in current large language models: a struggle with normative reasoning. They falter when tasked with resolving ambiguity without explicit guidance on social norms. This echoes Blaise Pascal’s sentiment: “The eloquence of angels is silence.” The models, which in effect try to fill a void with ever more parameters, demonstrate that true understanding isn’t about accumulating information, but discerning what is relevant within a situated context. SNIC, as a dataset, isn’t merely testing recall; it’s probing for an ability to subtract extraneous detail and arrive at a logically and socially appropriate resolution, a form of intellectual austerity the models currently lack.

Where Do We Go From Here?

The SNIC dataset, as a diagnostic, merely clarifies what intuition already suggested: current large language models are, at best, elaborate pattern-completers, not reasoners. They can simulate understanding of social context, but lack a grounded representation of the norms that govern situated action. The insistence on explicit norm provision is not a feature, but a glaring symptom of this deficiency. It is as if one had to remind a physicist of gravity with each calculation.

Future work must move beyond superficial prompting. The challenge is not simply to teach the models norms, but to imbue them with a capacity for inferring them. This necessitates a shift towards embodied AI, where interaction with a simulated or actual environment forces a model to grapple with the consequences of normative violations. A system that cannot distinguish between accidental clumsiness and deliberate insult has not begun to understand the social world.

The pursuit of “general intelligence” often founders on such subtleties. Perhaps true intelligence isn’t about maximizing information processing, but about minimizing conceptual overhead. The simplest explanation, elegantly applied, remains the most powerful. And the simplest explanation for human behavior is almost always rooted in a shared, unspoken understanding of what is, and is not, acceptable.

Original article: https://arxiv.org/pdf/2602.02975.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-04 17:58