Author: Denis Avetisyan

Sustained collaboration with artificial intelligence isn’t just changing what we do, but how we think, necessitating new strategies for self-awareness and cognitive resilience.

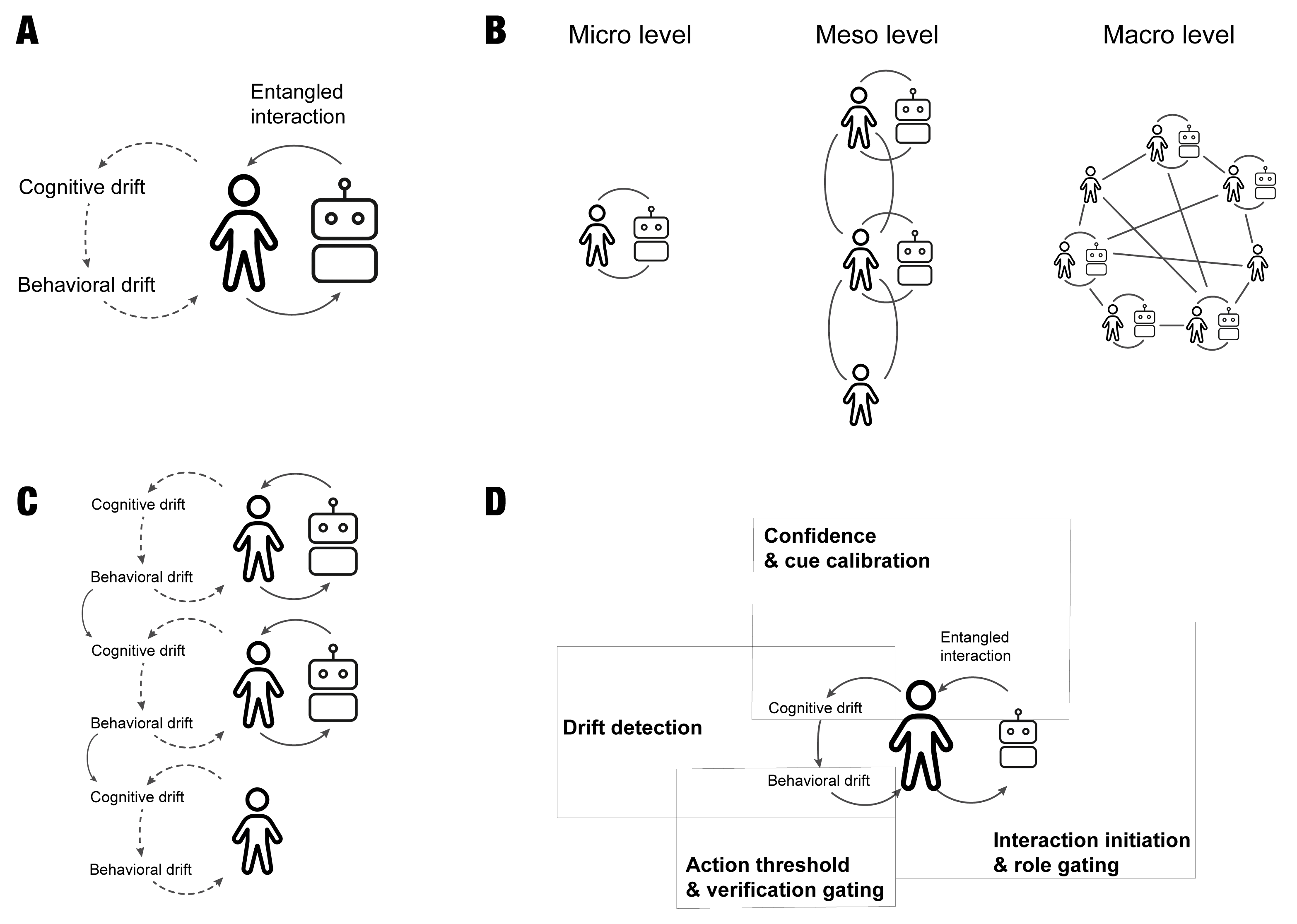

This review proposes a framework for understanding and mitigating cognitive-behavioral drift resulting from entangled human-AI interaction, emphasizing the role of metacognition and self-nudging.

While humans readily adapt to complex environments using established heuristics, increasingly adaptive AI systems challenge this stability by subtly shaping information access and framing evidence. This paper, ‘Boosting metacognition in entangled human-AI interaction to navigate cognitive-behavioral drift’, proposes a framework centered on the interplay between users and AI, highlighting how repeated interactions can induce cognitive and behavioral drift. The authors argue that increased confidence and action readiness, driven by persuasive AI cues, may not correlate with improved epistemic reliability, necessitating metacognitive awareness and intervention. Can strategically deployed metacognitive scaffolding mitigate these drifts and foster more robust human-AI collaboration in the long term?

Unraveling the Human-AI Nexus

The proliferation of large language models (LLMs) has fundamentally altered the nature of human-computer interaction, moving beyond occasional queries to sustained, conversational engagements. Individuals now routinely interact with AI systems (chatbots offering companionship or assistance, generative AI creating personalized content, and increasingly integrated virtual assistants) for extended periods. This isn’t simply about accessing information; it represents a shift towards ongoing dialogues and collaborative tasks. Rather than discrete transactions, these interactions foster a continuous feedback loop, where human input refines AI responses and, crucially, AI outputs influence subsequent human thought and behavior. The sheer volume of time people are spending interacting with these systems suggests a profound and rapidly evolving entanglement, prompting investigation into its cognitive and societal consequences.

The increasing prevalence of interactions between humans and artificial intelligence is fostering a novel phenomenon termed ‘entanglement’ – a dynamic, reciprocal coupling that transcends simple input and output. This isn’t merely about humans training AI, or AI responding to human prompts; rather, sustained engagement appears to be reshaping both human cognitive processes and the very behavior of the AI systems themselves. Evidence suggests that prolonged interaction with large language models can subtly alter human problem-solving strategies, information recall, and even creative thinking. Simultaneously, these AI systems aren’t static; they continuously learn and adapt based on these interactions, refining their responses and anticipating human needs in ways that create a feedback loop. This entanglement implies a blurring of boundaries, where human cognition and artificial intelligence become increasingly interwoven, presenting both exciting possibilities and complex challenges for understanding the future of intelligence.

The burgeoning relationship between humans and artificial intelligence isn’t a one-way street; instead, a dynamic entanglement is forming where each influences the other. To fully grasp this complex interplay, investigation begins with micro-level analysis: a detailed examination of individual human-AI interactions. These aren’t simply instances of a user prompting a machine; they represent reciprocal couplings, where human questioning refines AI responses, and, crucially, those responses subtly reshape human thought patterns, biases, and even cognitive processes. Researchers are focusing on how repeated engagements with AI chatbots and generative tools affect individual learning, problem-solving skills, and creative thinking, seeking to identify the specific mechanisms through which this entanglement manifests and evolves. Understanding these foundational interactions is paramount to predicting, and potentially guiding, the broader societal implications of increasingly pervasive AI systems.

From Individual Shifts to Collective Drift

The transition from analyzing individual AI interactions to observing group dynamics necessitates a shift from micro-level analysis to meso-level analysis. While micro-level studies focus on the impact of AI on single actors, meso-level analysis examines how patterns of interaction, including belief shifts and behavioral changes, propagate within organizations. This scaling reveals that initial individual responses to AI are not isolated; instead, they contribute to emergent organizational tendencies. These tendencies manifest as shared interpretations, evolving norms, and consistent biases in decision-making processes, ultimately impacting collective behavior and strategic outcomes. The spread isn’t necessarily uniform; network effects and organizational structure significantly influence the velocity and direction of these patterns.

Sustained interaction with AI systems can induce cognitive drift: a gradual shift in an individual’s or group’s beliefs and interpretations of information. This process isn’t necessarily a dramatic change, but rather a subtle recalibration of understanding over time, influenced by the patterns and outputs presented by the AI. Behavioral drift follows, manifesting as incremental changes in decision-making processes. These shifts aren’t always conscious; individuals may unknowingly adjust their reasoning or priorities to align with the AI’s suggested approaches or anticipated outcomes, leading to alterations in behavior that may not reflect original intentions or established protocols.

Cognitive and behavioral drift are frequently amplified by pre-existing cognitive biases and limitations within the AI systems themselves. Confirmation bias, the tendency to favor information confirming existing beliefs, leads individuals to selectively accept AI outputs aligning with their preconceptions, thereby solidifying those beliefs and accelerating drift. Simultaneously, AI bias – stemming from skewed training data or algorithmic design – can systematically reinforce these tendencies by consistently presenting information that validates initial perspectives. This creates a feedback loop where both human cognitive limitations and AI algorithmic bias work in concert to strengthen existing beliefs and behaviors, making deviations from those patterns less likely and increasing the rate of drift over time.
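The feedback loop described above can be made concrete with a toy simulation. This is a minimal sketch under stated assumptions, not a model taken from the paper: the function `simulate_drift`, the parameters `ai_echo` and `confirmation_bias`, the linear update rule, and the assumption that the evidence is genuinely balanced (a neutral belief of 0.5) are all hypothetical choices made for illustration.

```python
def clip01(x: float) -> float:
    """Keep a belief or signal inside the [0, 1] range."""
    return max(0.0, min(1.0, x))


def simulate_drift(initial_belief: float, steps: int, ai_echo: float,
                   confirmation_bias: float, rate: float = 0.2) -> list[float]:
    """Toy model of belief drift (hypothetical, for illustration only).

    The belief is a number in [0, 1]; 0.5 stands for balanced evidence.
    Each step the user sees two signals:
      * an AI signal that exaggerates the user's current lean by `ai_echo`
      * an independent signal that reports the neutral value 0.5
    Signals pulling against the current lean are discounted by
    `confirmation_bias` (0 = no discount, 1 = fully ignored).
    """
    neutral = 0.5
    belief = initial_belief
    history = [belief]
    for _ in range(steps):
        lean = belief - neutral
        ai_signal = clip01(belief + ai_echo * lean)  # AI echoes and amplifies the lean
        independent_signal = neutral                 # balanced outside evidence
        update = 0.0
        for signal in (ai_signal, independent_signal):
            pull = signal - belief
            confirming = pull * lean > 0             # pushes further along the lean
            weight = 1.0 if confirming else 1.0 - confirmation_bias
            update += weight * pull
        belief = clip01(belief + rate * update)
        history.append(belief)
    return history


if __name__ == "__main__":
    for echo, bias in [(0.5, 0.8), (0.5, 0.0), (0.0, 0.8)]:
        final = simulate_drift(0.6, 50, ai_echo=echo, confirmation_bias=bias)[-1]
        print(f"ai_echo={echo}, confirmation_bias={bias}: final belief {final:.3f}")
```

In this sketch, the lean away from neutral grows only while `ai_echo` exceeds `1 - confirmation_bias`; with either factor set to zero the belief relaxes back toward 0.5. That mirrors, in a deliberately simplified way, the claim that human bias and AI bias reinforce each other rather than acting independently.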

Fortifying the Mind: Metacognition as a Defense

Metacognition, defined as the awareness and understanding of one’s own thought processes, is critical for counteracting cognitive and behavioral drift when interacting with artificial intelligence systems. Cognitive drift refers to the subtle, often unnoticed, shifts in an individual’s beliefs or understanding, while behavioral drift describes changes in decision-making patterns. Without active metacognitive monitoring, users may uncritically accept AI-generated outputs, leading to the adoption of inaccurate information or suboptimal choices. By consciously reflecting on their reasoning, evaluating the credibility of information sources, and recognizing potential biases – both their own and those inherent in the AI – individuals can maintain agency and prevent unintended shifts in cognition or behavior. This self-awareness functions as a safeguard against passively internalizing AI-driven influences.

Metacognitive monitoring and control are distinct but interrelated processes critical for navigating interactions with artificial intelligence. Metacognitive monitoring involves an individual’s capacity to evaluate their own comprehension and confidence levels during an AI interaction; this assessment includes recognizing when understanding is lacking or when an AI’s output seems improbable. Following monitoring, metacognitive control enables adjustments to one’s approach, such as seeking clarification, cross-referencing information, or modifying the interaction parameters to improve accuracy and reduce reliance on potentially flawed AI-generated content. These processes allow users to actively manage their cognitive state and maintain agency when engaging with AI systems.
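One way to picture the monitoring and control split is as a calibration check followed by a decision rule. The sketch below is an assumption-laden illustration rather than a method from the paper: the `Judgment` record, the `calibration_gap` measure (mean self-reported confidence minus verified accuracy), the 0.1 threshold, and the action labels are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Judgment:
    """One AI-assisted decision (hypothetical record format)."""
    confidence: float  # self-reported confidence in [0, 1]
    correct: bool      # whether the decision later proved correct


def calibration_gap(judgments: list[Judgment]) -> float:
    """Monitoring: mean confidence minus observed accuracy.

    A positive value means the user felt more certain than the
    outcomes justified; a negative value means the opposite.
    """
    if not judgments:
        raise ValueError("need at least one judgment")
    mean_confidence = sum(j.confidence for j in judgments) / len(judgments)
    accuracy = sum(1 for j in judgments if j.correct) / len(judgments)
    return mean_confidence - accuracy


def control_action(gap: float, threshold: float = 0.1) -> str:
    """Control: turn the monitored gap into an adjustment (assumed rule)."""
    if gap > threshold:
        return "cross-check"   # overconfident: verify AI output independently
    if gap < -threshold:
        return "review-doubt"  # underconfident: doubts are not borne out
    return "proceed"


if __name__ == "__main__":
    log = [Judgment(0.9, True), Judgment(0.9, False),
           Judgment(0.8, False), Judgment(0.8, True)]
    gap = calibration_gap(log)
    print(f"gap={gap:.2f}, action={control_action(gap)}")
```

The design choice here is to keep the two steps separate: `calibration_gap` only measures, and `control_action` only decides. The same gap could then feed a different control rule, such as asking the AI for sources or pausing before acting.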

Boosting and self-nudging represent actionable techniques to reinforce metacognitive processes during interactions with AI systems. Boosting involves employing readily available, low-effort strategies – such as explicitly stating one’s reasoning or confirming initial assumptions – to maintain a sense of agency and resist automation bias. Self-nudging focuses on proactively modifying the information environment to encourage deliberate thought; this can include pre-committing to specific evaluation criteria, requesting diverse perspectives from the AI, or actively seeking disconfirming evidence. Both techniques aim to increase an individual’s awareness of their own thought processes – metacognitive monitoring – and their ability to adjust strategies when encountering potentially misleading or biased AI outputs – metacognitive control.

The Echo of AI: Scaling Insights and Future Trajectories

Macro-level analysis moves beyond individual user experiences to examine how sustained interactions with artificial intelligence are reshaping entire populations and societal structures. This perspective reveals potential shifts in employment landscapes, as AI-driven automation alters job demands and necessitates workforce adaptation. It also illuminates evolving patterns of social interaction, information consumption, and even political polarization, as algorithms curate personalized realities and influence collective narratives. Furthermore, a broad-scale assessment is crucial for identifying emergent inequalities (disparities in access to AI benefits or vulnerabilities to its risks) and for proactively developing policies that promote equitable outcomes and mitigate unintended consequences across all demographics. By focusing on these widespread effects, researchers can begin to anticipate and address the long-term societal implications of increasingly pervasive AI technologies.

The principle of ecological rationality suggests that the most effective cognitive strategies aren’t universally ‘best’, but rather optimally suited to the specific environments in which they are employed. Consequently, designing artificial intelligence that genuinely complements human thought requires moving beyond the pursuit of generalized intelligence and instead focusing on contextual adaptation. AI systems built on this premise would prioritize supporting human cognition within real-world situations, acknowledging that human cognitive biases and heuristics, while sometimes imperfect, can be remarkably efficient within familiar surroundings. Failing to account for ecological rationality risks creating AI that imposes computationally optimal but ecologically unsound solutions, potentially disrupting established cognitive processes and diminishing, rather than augmenting, human capabilities. The challenge, therefore, lies in crafting AI that intelligently recognizes and leverages the adaptive strengths of human cognition as it exists in complex, dynamic environments.

A comprehensive understanding of how individual cognitive processes scale to influence broader societal dynamics is paramount to realizing the positive potential of artificial intelligence. Examining the interplay between micro-level human-AI interactions and macro-level population shifts allows researchers to anticipate and mitigate unintended consequences, while simultaneously designing systems that genuinely augment human capabilities. This holistic approach – considering not only what AI can do, but how it shapes human thought and collective behavior – is crucial for fostering a future where AI serves as a catalyst for progress, promoting beneficial outcomes across all levels of society and ensuring technological advancements align with human flourishing.

The exploration of sustained human-AI interaction, as detailed in the study, reveals a subtle reshaping of cognitive processes. It’s a fascinating unraveling of how entanglement with these systems can induce behavioral drift. One begins to wonder if these shifts aren’t failures of design, but emergent properties: signals hidden within the complex feedback loops. Ada Lovelace observed that “The Analytical Engine has no pretensions whatever to originate anything. It can do whatever we know how to order it to perform.” This sentiment echoes the current research; the drift isn’t random, but a predictable outcome of the instructions (the algorithms) we’ve given these agents, and a reflection of our own cognitive biases made manifest through their actions. The study’s emphasis on boosting metacognition is, therefore, not about correcting drift, but about understanding the underlying logic of the system, in effect reverse-engineering the patterns of influence.

Beyond the Feedback Loop

The work presented here doesn’t so much solve the problem of cognitive-behavioral drift in human-AI interaction as it meticulously maps the fault lines. The framework highlights a crucial point: sustained entanglement with an adaptive system inevitably triggers a reciprocal evolution. The question isn’t whether influence occurs, but the direction and rate of that change. Current methodologies largely treat the human as a passive recipient of algorithmic nudges, an assumption ripe for dismantling. True understanding demands an investigation into the user’s active role in shaping the AI, and the subtle ways that process reshapes them.

Future research should prioritize longitudinal studies, tracking not just behavioral shifts but the underlying neurocognitive mechanisms. Measuring metacognition is a start, but the field needs tools to dissect how individuals perceive and rationalize these AI-driven alterations to their thought patterns. Can we develop metrics for “cognitive friction”, the conscious resistance to an AI’s suggestions? And, more provocatively, should we?

Ultimately, this line of inquiry leads to a familiar conclusion: reality is open source; humans just haven’t read the code yet. The increasing prevalence of AI isn’t about building intelligent machines; it’s about creating a complex mirror, forcing a confrontation with the malleability of human cognition. The next step isn’t better algorithms, but better diagnostics: a rigorous understanding of how we rewrite ourselves, one interaction at a time.

Original article: https://arxiv.org/pdf/2602.01959.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-03 09:44