Author: Denis Avetisyan

New research explores how robots can move beyond pre-programmed instructions to respond dynamically to real-world events triggered by sound.

Researchers present Habitat-Echo, a simulation platform, and a hierarchical approach to enable sound-triggered robotic manipulation and multi-modal learning.

Current mobile manipulation research largely confines agents to passive execution of predefined instructions, hindering true autonomy in dynamic environments. This work, ‘From Instruction to Event: Sound-Triggered Mobile Manipulation’, introduces a paradigm shift, enabling agents to actively perceive and react to sound-emitting objects without explicit command. We present Habitat-Echo, a data platform integrating acoustic rendering with physical interaction, and a hierarchical baseline demonstrating successful autonomous response to auditory events, even isolating primary sound sources amidst interference. Could this approach unlock more robust and adaptable robotic systems capable of navigating and interacting with complex, real-world scenarios?

Beyond Sensory Reliance: The Ascendance of Auditory AI

The prevailing approach to embodied artificial intelligence has historically prioritized visual processing, creating systems heavily reliant on cameras and image analysis. This emphasis, while yielding progress in controlled environments, overlooks the substantial and often more reliable information contained within the auditory domain. Sound offers a 360-degree perception of surroundings, functions effectively in low-light or obscured conditions, and can convey crucial data about object properties – such as size, material, and velocity – that are difficult to ascertain visually. Consequently, a significant limitation of current robotics is its reduced ability to navigate and interact effectively in dynamic, real-world settings where visual input may be incomplete, unreliable, or simply unavailable; a reliance on vision alone hinders truly robust and adaptable artificial intelligence.

The over-reliance on visual input in contemporary robotics presents significant limitations when operating within dynamic, real-world environments. Obstructions – from dense fog and poor lighting to the simple act of a human walking between a robot and its target – can render vision-based systems ineffective, causing navigation failures or incomplete task execution. Furthermore, visual data requires substantial processing power, creating delays that hinder responsiveness, particularly crucial in time-sensitive applications. Consequently, robots lacking alternative sensory modalities struggle to maintain consistent performance beyond highly controlled, visually ideal conditions, impacting their utility in unpredictable scenarios such as search and rescue, disaster response, or even everyday human-robot collaboration.

A new approach to robotics prioritizes acoustic signals as the primary driver of interaction, representing a significant departure from the current visual dominance in embodied artificial intelligence. This shift enables robots to perceive and react to their surroundings with greater reliability, particularly in situations where visual input is compromised, such as low-light conditions or obstructed views. By focusing on sound, these systems can identify objects, locate speakers, and even anticipate actions based on auditory cues, fostering a more natural and intuitive exchange between humans and robots. The result is a heightened level of robustness and adaptability, allowing robots to navigate complex, real-world scenarios with increased confidence and efficiency, ultimately paving the way for more seamless and effective human-robot collaboration.

Simulating Reality: Habitat-Echo and the Physics of Sound

Habitat-Echo integrates advanced acoustic rendering into the Habitat simulation platform by moving beyond simple point-source audio to model sound propagation within virtual environments. This is achieved through the implementation of ray tracing and acoustic modeling techniques that simulate how sound waves interact with surfaces and objects. The system supports the generation of realistic sound fields, accounting for factors such as distance attenuation, reflections, and occlusion. Specifically, Habitat-Echo enables the simulation of complex auditory scenes, allowing for the creation of environments where sound behaves in a physically plausible manner, enhancing the realism of the simulation for both visual and auditory perception.

Habitat-Echo employs Room Impulse Responses (RIRs) as a core component of its acoustic modeling. RIRs capture the characteristics of sound propagation in a given environment, detailing how a sound reflects off surfaces before reaching a listener. By convolving an audio source with an appropriate RIR, the system simulates realistic sound propagation, including effects like reverberation, reflections, and spatial localization. This technique accounts for the geometry and material properties of the simulated space, enabling accurate sound spatialization and a physically plausible auditory environment. The system supports pre-recorded RIRs as well as real-time RIR generation, allowing for dynamic acoustic environments.
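As a rough illustration of the convolution step described above, the sketch below applies a toy RIR to a short dry signal. It is not Habitat-Echo's implementation: the function name `convolve` and all sample values are made up for the example, and plain Python lists are used only to keep it dependency-free (a practical renderer would more likely use an FFT-based routine).

```python
def convolve(dry, rir):
    """Direct-form convolution: every source sample launches a scaled copy of the RIR."""
    out = [0.0] * (len(dry) + len(rir) - 1)
    for i, sample in enumerate(dry):
        for j, tap in enumerate(rir):
            out[i + j] += sample * tap
    return out


# Toy RIR (hypothetical values): direct sound, then two weaker reflections.
rir = [1.0, 0.0, 0.0, 0.5, 0.0, 0.25]

# Toy dry source (hypothetical values): a two-sample pulse.
dry = [1.0, -1.0]

# What the listener hears: the pulse, followed by quieter delayed copies of it.
wet = convolve(dry, rir)
print(wet)
```

Each non-zero tap in the RIR produces one delayed, attenuated copy of the source, which is how a single impulse response can encode both reverberation and the relative timing of reflections.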

The implementation of physically-based acoustic rendering within Habitat-Echo facilitates the development of tasks requiring agents to respond to auditory stimuli. These tasks can range from sound source localization and identification to navigation based on acoustic cues, and can incorporate complex scenarios with reverberation, occlusion, and multiple sound events. Agent training leverages this realistic auditory environment to improve performance in tasks such as identifying specific sounds within noisy environments, responding to emergency signals, or following verbal instructions, thereby enabling the development of more robust and adaptable artificial intelligence systems capable of operating in complex, real-world scenarios.

Decomposition and Action: A Hierarchical Planning Framework

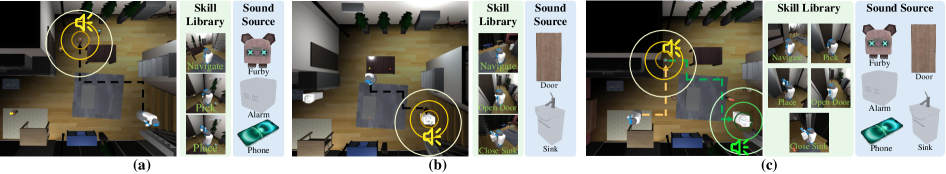

The system architecture utilizes a Hierarchical Task Planner (HTP) to address complex task execution. This HTP integrates an Omni-modal Large Language Model (Omni-LLM) as its central reasoning engine, enabling the decomposition of high-level goals into a series of sequential sub-tasks. The Omni-LLM processes multi-modal input, specifically acoustic events, to understand the task context and generate a plan represented as a chain of skills. This approach differs from direct skill invocation by introducing an intermediate reasoning step, allowing the system to adapt to novel situations and generalize across different task instances. The HTP effectively bridges the gap between abstract goals and concrete actions, facilitating robust and flexible task completion.

The system’s Omni-LLM functions as a task decomposition engine, processing auditory input to initiate action sequences. Upon detecting an acoustic event, the LLM analyzes the sound and correlates it with a high-level goal. This goal is then broken down into a series of discrete, executable steps represented as skills. The LLM generates an ordered sequence of these skills, forming a plan to achieve the identified goal. This skill sequence serves as input for the low-level policy models, dictating the specific actions the agent should perform in response to the initial auditory trigger and guiding the completion of the task.
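The flow from acoustic event to skill chain to low-level execution can be sketched as follows. This is only an outline under stated assumptions: the lookup table stands in for the Omni-LLM, and every name here (`SoundEvent`, `plan_skills`, `respond`, the sound label, and the skill names) is hypothetical rather than taken from the paper.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SoundEvent:
    label: str      # hypothetical class label for what was heard
    source_id: str  # hypothetical identifier of the emitting object


def plan_skills(event: SoundEvent) -> List[str]:
    """Placeholder for the Omni-LLM: map a sound event to an ordered skill chain."""
    plans = {
        "alarm_clock": ["navigate_to_source", "pick", "navigate_to_receptacle", "place"],
    }
    return plans.get(event.label, [])


def respond(event: SoundEvent, policies: Dict[str, Callable[[], bool]]) -> bool:
    """Run the planned chain in order; stop at the first skill that fails."""
    chain = plan_skills(event)
    if not chain:
        return False  # unrecognized sound: take no action
    for skill in chain:
        if not policies[skill]():
            return False
    return True


# Dummy policies that always succeed and record the order in which they ran.
log: List[str] = []


def make_policy(name: str) -> Callable[[], bool]:
    def policy() -> bool:
        log.append(name)
        return True
    return policy


policies = {name: make_policy(name) for name in
            ["navigate_to_source", "pick", "navigate_to_receptacle", "place"]}
ok = respond(SoundEvent("alarm_clock", "clock_01"), policies)
print(ok, log)
```

Keeping the planner and the policies behind separate interfaces mirrors the hierarchy described above: the planner only emits skill names, and each policy only needs to know how to carry out its own skill.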

Low-level Policy Models function as the execution component of the system, translating the skill chain generated by the Hierarchical Task Planner into concrete actions. These models receive discrete skill commands, such as “pick up object” or “place object”, and control the agent’s actuators to perform those skills in a robotic environment. The loop is reactive: a sound event triggers planning, and the Policy Models then execute the resulting skill chain to complete the sound-triggered task. The architecture therefore depends on the Policy Models reliably performing each skill in the pre-defined Skill Library.

Evaluation of the hierarchical task planner based on Qwen2.5-Omni-7B yielded a 27.48% success rate on the SonicStow task, which requires the agent to stow specific objects in response to audio cues. Performance on the SonicInteract task, involving more complex interactions with objects triggered by sound, was 19.72%. These success rates represent the overall ability of the system to correctly interpret audio, plan a sequence of actions, and execute those actions to achieve the designated task goals within the simulated environment.

The system’s operational foundation is a pre-defined Skill Library, comprising a set of discrete action primitives. Performance metrics indicate robust individual skill execution; the Pick Skill demonstrates an 88.73% success rate, while the Place Skill achieves a 66.66% success rate. These values represent the percentage of attempts in which the respective skill successfully completes its designated action, and are calculated across a comprehensive test dataset. The reliance on pre-defined, high-performing skills contributes to the overall system reliability and reduces the complexity of real-time decision-making.
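To put the per-skill figures in context, a back-of-the-envelope calculation shows how quickly success rates compound when skills are chained. The sketch assumes, purely for illustration, that a task consists of one pick followed by one place and that the two outcomes are independent; the paper is not quoted here as making either assumption.

```python
pick_success = 0.8873   # Pick Skill success rate reported above
place_success = 0.6666  # Place Skill success rate reported above

# Under the independence assumption, a pick-then-place chain succeeds
# only when both skills succeed.
chain_ceiling = pick_success * place_success
print(f"{chain_ceiling:.2%}")  # prints 59.15%
```

Under these assumptions, manipulation alone would cap a pick-and-place episode at roughly 59%. The reported 27.48% end-to-end rate on SonicStow sits well below that, which suggests that a sizable share of failures may arise in other stages such as interpreting the audio, planning, or navigation.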

Beyond Single Cues: Navigating Acoustic Complexity

The system’s capacity for complex auditory processing is demonstrated through tasks demanding what is termed Bi-Sonic Manipulation. These scenarios present an agent with multiple, sequential sound sources, each requiring a distinct response. Successfully navigating such challenges necessitates not only the accurate localization of each sound but also a prioritization strategy to determine the order of interaction. The agent must effectively discern which sound demands immediate attention, and then execute the appropriate action before addressing subsequent auditory cues – a process mirroring the cognitive demands of real-world environments filled with competing stimuli. This ability to manage and respond to multiple sound sources in sequence highlights a significant step towards creating agents capable of robust and adaptive behavior in complex acoustic landscapes.

The ability to pinpoint the location of sound sources is fundamental to navigating complex acoustic environments. Accurate sound localization enables an agent to discern the origin of each auditory stimulus, which is crucial for prioritizing responses and initiating appropriate actions. This process isn’t simply about detecting that a sound exists, but rather determining where it’s coming from in three-dimensional space – a feat complicated by echoes, reverberations, and the presence of multiple competing sounds. Without robust localization, an agent risks misinterpreting cues, responding to phantom sources, or failing to identify critical signals amidst background noise, ultimately hindering its ability to perform sound-triggered manipulations or interact effectively with its surroundings. The precision of this spatial awareness directly impacts the success rate of complex tasks requiring sequential attention to multiple sound events.

Acoustic interference presents a considerable hurdle for agents relying on auditory input, as overlapping sound waves can mask or distort crucial signals. This phenomenon demands more than simple sound detection; successful navigation of complex soundscapes requires sophisticated prioritization strategies. The system must effectively filter competing auditory information, discerning relevant sounds from noise and determining which source requires immediate attention. This isn’t merely about identifying all sounds, but rather intelligently focusing on the most important one at any given moment – a process akin to the ‘cocktail party effect’ observed in human hearing. Without such prioritization, the agent risks misinterpreting commands or failing to respond to critical stimuli, hindering its ability to perform complex, sound-triggered manipulations.
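One very simple prioritization rule, shown here only to make the idea concrete, is to treat the loudest source as the primary one. Both that rule and the assumption that the sources are already available as separate signals are simplifications introduced for this sketch. The agent described in the article hears a mixture, and its actual criterion for what counts as "primary" is not specified here. The names `rms`, `primary_source`, and the example sources are hypothetical.

```python
import math


def rms(samples):
    """Root-mean-square level of a signal, a common proxy for loudness."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def primary_source(sources):
    """Return the name of the source with the highest RMS level."""
    return max(sources, key=lambda name: rms(sources[name]))


# Hypothetical, already-separated sources with toy sample values.
sources = {
    "kettle": [0.8, -0.8, 0.8, -0.8],
    "radio": [0.1, -0.1, 0.1, -0.1],
}
target = primary_source(sources)
print(target)  # prints kettle
```

A loudness rule like this fails whenever the important sound is the quieter one, which is part of why the article frames prioritization as a reasoning problem rather than a signal-level one.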

Initial trials with the Bi-Sonic Manipulation task revealed a success rate of roughly 20-30% in identifying and interacting with the primary sound source, signifying a foundational capability in complex auditory environments. This performance, while preliminary, demonstrates the framework’s potential to discern and prioritize between competing acoustic signals, a critical step toward managing multiple sound-triggered actions. Although challenges remain in consistently achieving high accuracy across both sound sources, these early results establish a promising benchmark for future development and refinement of the system’s sound localization and prioritization algorithms, paving the way for more robust and reliable multi-source acoustic manipulation.

The developed framework demonstrates proficiency in complex, sound-triggered manipulations through validation on tasks such as SonicStow and SonicInteract. SonicStow challenges the system to locate and stow objects based on auditory cues, requiring precise sound localization and motor control. Meanwhile, SonicInteract necessitates dynamic responses to varying sounds, prompting the system to perform specific actions, such as pushing or rotating an object, in reaction to different acoustic signals. Successful execution of both tasks highlights the framework’s capacity to not merely detect sounds, but to integrate auditory information into a cohesive plan for physical interaction, representing a significant step toward more adaptable and responsive robotic systems.

The pursuit of robotic autonomy, as detailed in this work, necessitates moving beyond pre-programmed instructions. The system elegantly demonstrates a shift towards event-driven action, a responsiveness to the environment signaled by acoustic cues. This aligns perfectly with Vinton Cerf’s observation: “The internet treats everyone the same.” The Habitat-Echo platform, enabling sound-triggered manipulation, embodies this principle; the agent reacts to stimuli irrespective of its initial state, mirroring the internet’s non-discriminatory data transmission. Abstractions age, principles don’t. This research prioritizes the fundamental principle of reactive behavior over complex, rigid programming, a move toward truly adaptable embodied AI.

The Simplest Echo

The presented work, while a necessary articulation of sound-triggered manipulation, merely establishes a foundation. The complexity inherent in translating acoustic events into robust, generalizable action remains largely unaddressed. Current approaches, even within the Habitat-Echo environment, rely on pre-defined task schemas. True autonomy demands a move beyond this: a system that doesn’t simply react to sound, but interprets it within a broader, self-maintained world model. The goal shouldn’t be to catalog every possible sound-action pairing, but to cultivate a capacity for compositional generalization.

A significant limitation lies in the fidelity of the acoustic simulation. While Habitat-Echo provides a valuable testbed, the gulf between simulated acoustics and the messy reality of sound propagation remains considerable. Future work must prioritize realistic audio rendering, including reverberation, occlusion, and the effects of material surfaces. More critically, the system must learn to disambiguate overlapping sounds – the natural state of any environment. A single, clean trigger is a laboratory fiction.

Ultimately, the pursuit of sound-triggered manipulation is not about building robots that hear instructions. It’s about building systems capable of opportunistic action – of recognizing, in the ambient noise, the possibility of meaningful interaction. The most fruitful path forward likely involves minimizing explicit instruction and maximizing the agent’s capacity to discover affordances through self-directed exploration. Less telling, more listening.

Original article: https://arxiv.org/pdf/2601.21667.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-30 09:38