Author: Denis Avetisyan

This review systematically explores the design landscape for artificial intelligence systems intended to enhance and interact with live musical performance.

The paper presents a comprehensive design space, built by analyzing existing live music agents, that identifies key dimensions and opportunities for innovation at the intersection of music technology, human-computer interaction, and artificial intelligence.

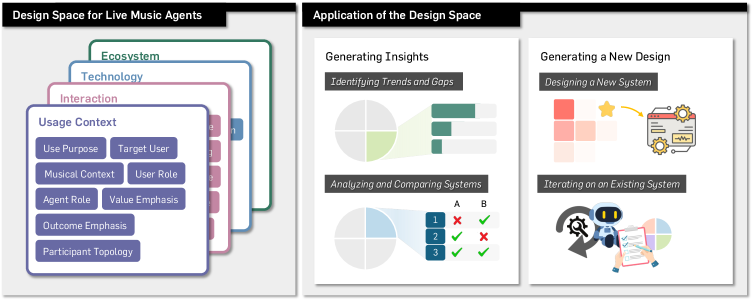

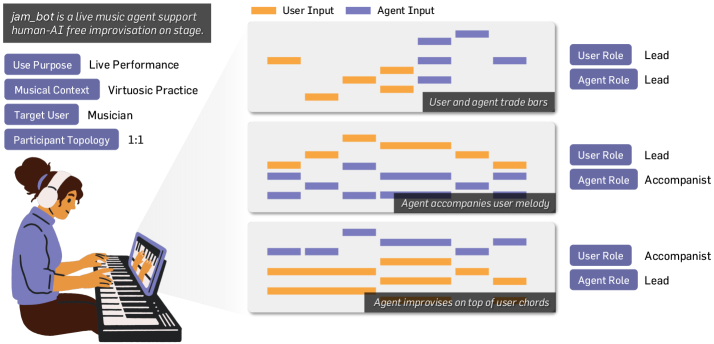

Despite decades of interdisciplinary research into supporting real-time musical interaction, the field lacks a unified understanding of existing approaches. This paper, ‘A Design Space for Live Music Agents’, addresses this gap by systematically analyzing 184 systems to map the current landscape of intelligent agents for live music performance. The resulting design space categorizes these systems across dimensions of usage, interaction, technology, and ecosystem, revealing key trends and opportunities for innovation. How might this structured overview facilitate more effective collaboration and accelerate the development of truly creative human-AI musical partnerships?

Deconstructing the Musical Dialogue

Historically, the creation of music has followed a largely linear path, distinctly dividing roles between the composer who conceives the work, the performer who interprets it, and the audience who receives it. This separation, while enabling complex compositions, inherently restricts the potential for immediate, reciprocal exchange. The composer’s vision is typically fixed before performance, leaving limited space for the performer to improvise or respond to the audience’s energy, and even less for the audience to directly influence the musical direction. Consequently, the dynamic interplay characteristic of many folk traditions or jam sessions – where music evolves in real-time through collective improvisation – is often absent in more formal musical settings. This traditional model, though deeply ingrained, presents a challenge for those seeking to create genuinely interactive and responsive musical experiences that blur the lines between creator and receiver.

Despite the proliferation of digital audio workstations and virtual instruments, a critical gap remains in replicating the subtleties of live musical performance. Current tools often introduce latency, a delay between input and output, which disrupts the immediate feedback loop essential for improvisation and ensemble interplay. Beyond timing, these systems frequently struggle with expressive control: capturing the dynamic variations in timbre, articulation, and phrasing that define a human performer proves challenging. While software can meticulously reproduce pre-programmed sounds, translating the unpredictable nuances of a live performance (a breathy vocal inflection, a slight pressure change on a string, subtle shifts in tempo reflecting emotional intent) requires a level of sensitivity and responsiveness that many existing platforms have not yet achieved, which hinders truly interactive and fluid musical experiences.
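One unavoidable contributor to latency in software audio is buffering: audio is processed in blocks, and a block of N frames at sample rate f_s takes N / f_s seconds to fill. The sketch below computes that figure. The function names are hypothetical, and the round-trip estimate assumes exactly one input and one output buffer while ignoring converter, driver, and processing overheads, so measured latency in a real setup will be higher.

```python
def buffer_latency_ms(buffer_size: int, sample_rate: int) -> float:
    """Milliseconds needed to fill one buffer of `buffer_size` frames."""
    return 1000.0 * buffer_size / sample_rate


def round_trip_latency_ms(buffer_size: int, sample_rate: int, n_buffers: int = 2) -> float:
    """Rough lower bound on input-to-output delay.

    Assumption: one input buffer plus one output buffer (n_buffers=2);
    converter, driver, and processing overheads are ignored.
    """
    return n_buffers * buffer_latency_ms(buffer_size, sample_rate)


if __name__ == "__main__":
    # Compare common buffer sizes at a 48 kHz sample rate.
    for size in (64, 256, 1024):
        print(f"{size:>5} frames @ 48 kHz: "
              f"{buffer_latency_ms(size, 48_000):.2f} ms per buffer, "
              f"~{round_trip_latency_ms(size, 48_000):.2f} ms round trip")
```

At 48 kHz, a 256-frame buffer alone adds about 5.33 ms, or roughly 10.67 ms round trip under this simplified model. Smaller buffers reduce the delay but require more frequent processing callbacks, which raises the risk of audio dropouts on a loaded CPU.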

![The limited presence of live coding (3.3%) and extended-reality (1.1%) interfaces in current systems highlights significant opportunities for developing innovative live music agents that leverage code-based collaboration and immersive spatial interactions.](https://arxiv.org/html/2602.05064v1/x13.png)

Rewriting the Score: Live Music Agents as Emergent Systems

Live music agents signify a departure from traditional music production and performance workflows, focusing on systems engineered for real-time musical composition and delivery. These systems prioritize immediacy, enabling musical material to be generated and modified during a performance rather than being pre-recorded or fully composed beforehand. This shift necessitates architectures capable of handling continuous audio streams, responding to performer input with minimal latency, and adapting to dynamic performance contexts. The core principle is the facilitation of spontaneous musical ideas and their immediate translation into audible output, representing a move towards a more fluid and interactive musical experience.

Live music agents respond in real time to both performer input and audience feedback. They gather this input through methods such as audio analysis, motion tracking, and network data processing, then generate corresponding outputs, such as modified audio, visual effects, or altered musical arrangements, with minimal latency. Because this processing runs continuously, an agent can adjust performance parameters as conditions evolve, creating a responsive and interactive musical environment. This capability distinguishes these agents from pre-programmed systems and makes room for improvisation and for variation from one performance to the next.
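Audio analysis in such systems typically runs block by block on the incoming stream. As a minimal sketch of that pattern, the code below flags blocks whose RMS energy jumps sharply relative to the previous block: a crude onset detector that marks the moments at which an agent could schedule a response. The detector, its thresholds (`ratio`, `floor`), and all names are illustrative assumptions rather than a method taken from the surveyed systems; more robust detectors, such as spectral-flux methods, are common in practice.

```python
import math
from typing import Iterable, List


def rms(block: List[float]) -> float:
    """Root-mean-square energy of one block of samples (0.0 for an empty block)."""
    return math.sqrt(sum(x * x for x in block) / len(block)) if block else 0.0


def detect_onsets(blocks: Iterable[List[float]], ratio: float = 2.0,
                  floor: float = 1e-3) -> List[int]:
    """Return indices of blocks whose RMS exceeds `ratio` times the previous block's.

    `floor` keeps near-silent blocks from producing spurious onsets. Both
    thresholds are illustrative, not values from any surveyed system.
    """
    onsets = []
    prev = 0.0
    for i, block in enumerate(blocks):
        level = rms(block)
        if level > ratio * max(prev, floor):
            onsets.append(i)  # an agent would trigger its response here
        prev = level
    return onsets
```

For example, feeding blocks of silence, a loud attack, a sustained tone, silence, and a second attack yields onsets at the two attacks only, because the sustained block does not exceed twice the level of the block before it.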

A comprehensive analysis of 184 existing live music agent systems was conducted to identify common architectures, functionalities, and limitations. This research categorized systems by interaction modality (performer-to-system, audience-to-system, and system-to-system) and further by core technical approach, including algorithmic composition, reactive sound design, and generative visual accompaniment. The resulting framework details key parameters for system evaluation (latency, scalability, and creative control) and provides a taxonomy of design choices to facilitate future innovation and interoperability within the field. This structured overview aims to move beyond ad-hoc development and enable a more systematic approach to building and deploying live music agent technologies.

From Symbols to Sound: Decoding the Agent’s Core

Symbolic music formats, most notably MIDI, offer a discrete and standardized way to represent musical parameters such as pitch, duration, velocity, and timing. Whereas an audio waveform records the sound signal itself as a dense sequence of amplitude samples, symbolic data describes musical events with compact numerical codes, which enables precise control and manipulation. This representation facilitates algorithmic composition, automated performance, and detailed analysis of musical structure. Its structured nature allows for efficient storage, transmission, and editing, making it a foundational element of modern music production and computational musicology. Furthermore, because symbolic data is discrete, it lends itself to machine learning: algorithms can learn patterns from it and generate new musical content.
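To make the encoding concrete: in the MIDI 1.0 protocol, a Note On event is three bytes, a status byte (0x90 combined with a channel number from 0 to 15) followed by a note number and a velocity, each from 0 to 127. The sketch below encodes and decodes such a message and maps note numbers to equal-tempered frequencies, with MIDI note 69 as A4 = 440 Hz. The helper names are hypothetical, and running status, explicit Note Off messages, and other message types are deliberately left out.

```python
from typing import Tuple

NOTE_ON = 0x90  # upper nibble of the Note On status byte in MIDI 1.0


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Encode a 3-byte MIDI Note On message (channel 0-15, note/velocity 0-127)."""
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("MIDI value out of range")
    return bytes([NOTE_ON | channel, note, velocity])


def decode(msg: bytes) -> Tuple[str, int, int, int]:
    """Decode a 3-byte message into (kind, channel, note, velocity)."""
    status, note, velocity = msg[0], msg[1], msg[2]
    if (status & 0xF0) != NOTE_ON:
        kind = "other"
    elif velocity == 0:
        kind = "note_off"  # Note On with velocity 0 is conventionally a Note Off
    else:
        kind = "note_on"
    return kind, status & 0x0F, note, velocity


def note_to_hz(note: int, a4: float = 440.0) -> float:
    """Equal-tempered frequency, with MIDI note 69 = A4."""
    return a4 * 2 ** ((note - 69) / 12)
```

Middle C (note 60) encoded on channel 0 at velocity 100 becomes the bytes 0x90, 0x3C, 0x64, and `note_to_hz(60)` gives about 261.63 Hz.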

The agent’s internal processing converts symbolic music representations, such as MIDI data, into audible sound by generating audio waveforms. This conversion involves multiple stages, including digital signal processing that synthesizes sound from the parameters defined in the symbolic representation. The resulting waveforms are time series of sampled amplitude values; after digital-to-analog conversion, speakers transduce them into the variations in air pressure that listeners hear. The fidelity and character of these waveforms are determined by the algorithms and parameters used during conversion, which govern timbre, pitch, and dynamics.
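A deliberately minimal version of this symbolic-to-audio step renders one note as a sine wave. The mappings are assumptions chosen for clarity, not the synthesis used by any particular agent: equal temperament with A4 = 440 Hz, a linear velocity-to-amplitude map, and a 10 ms linear fade-out to avoid an audible click when the note ends. The function name `render_note` is hypothetical.

```python
import math
from typing import List


def render_note(note: int, velocity: int, duration_s: float,
                sample_rate: int = 48_000) -> List[float]:
    """Render a MIDI-style note as a list of sine-wave samples in [-1, 1].

    Assumptions (illustrative only): equal temperament with A4 = 440 Hz,
    amplitude = velocity / 127, and a 10 ms linear release ramp.
    """
    freq = 440.0 * 2 ** ((note - 69) / 12)
    amp = velocity / 127.0
    n = int(duration_s * sample_rate)
    fade = min(n, int(0.01 * sample_rate))  # number of samples in the release ramp
    samples = []
    for i in range(n):
        # Full level until the ramp starts, then fall linearly toward zero.
        env = 1.0 if i < n - fade else (n - i) / fade
        samples.append(amp * env * math.sin(2 * math.pi * freq * i / sample_rate))
    return samples
```

Swapping the sine for richer oscillators, envelopes, or sample playback is where a real system shapes timbre; pitch and loudness here come straight from the symbolic note number and velocity.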

Analysis of live music agent designs has revealed a 31-dimensional design space, parameterized by 165 discrete codes. These dimensions represent controllable aspects of agent behavior, encompassing parameters related to musical structure, sonic texture, and real-time performance characteristics. The 165 codes define specific values or configurations within those dimensions, allowing for a systematic categorization and comparison of different agent architectures. This framework enables quantitative analysis of design choices and facilitates the development of new agents with targeted performance capabilities, providing a comprehensive method for characterizing the breadth of possible live music agent designs.
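A coded design space lends itself to simple quantitative queries, such as the share of systems that use a given interface type (the kind of figure reported for live coding and extended reality). The sketch below assumes a hypothetical schema in which each system carries a set of codes per dimension; the dimension and code names and the toy data are invented for illustration and are not the paper's actual coding scheme or results.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class SystemRecord:
    """One surveyed system, coded as a set of codes per dimension (hypothetical schema)."""
    name: str
    codes: Dict[str, Set[str]] = field(default_factory=dict)


def prevalence(systems: List[SystemRecord], dimension: str) -> Dict[str, float]:
    """Percentage of systems assigned each code within one dimension."""
    counts = Counter(code for s in systems for code in s.codes.get(dimension, set()))
    total = len(systems)
    # If `systems` is empty, `counts` is empty too, so no division occurs.
    return {code: 100.0 * c / total for code, c in counts.items()}


if __name__ == "__main__":
    toy = [  # invented data, for illustration only
        SystemRecord("A", {"interface": {"live_coding"}}),
        SystemRecord("B", {"interface": {"gui"}}),
        SystemRecord("C", {"interface": {"gui", "hardware"}}),
        SystemRecord("D", {"interface": {"hardware"}}),
    ]
    print(sorted(prevalence(toy, "interface").items()))
    # [('gui', 50.0), ('hardware', 50.0), ('live_coding', 25.0)]
```

Because a system may receive several codes in one dimension, the percentages within a dimension need not sum to 100.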

![The prevalence of rule-based, stochastic, and shallow neural network approaches in live music agents has decreased since 1984, with task-specific deep neural networks (DNNs) becoming dominant after 2012 and generative AI methods emerging, though currently limited, since 2022.](https://arxiv.org/html/2602.05064v1/x11.png)

The Echo of Improvisation: Genre and the Agent’s Adaptation

Live music agents can foster musical improvisation, enabling performers to create spontaneously without relying on predetermined structures. They do so by interpreting and responding to cues from musicians in real time, acting as collaborators in composition. This facilitation need not be merely reactive: more capable agents anticipate likely musical directions and offer supporting harmonic or rhythmic frameworks that encourage exploration while maintaining coherence. The result is a fluid exchange in which performers can take risks and develop new ideas, producing unique and unrepeatable moments of artistic expression. In this dynamic, the agent acts less as a controller than as a catalyst for spontaneous creativity.

An agent’s ability to interpret and react to a performer’s individual style is often shaped by the conventions of a particular musical genre, with Jazz as a prominent example. This connection stems from the improvisational nature of genres like Jazz, where nuanced responses and stylistic mirroring are crucial for successful musical dialogue. The agent must not only recognize the performer’s choices (harmonic, melodic, rhythmic) but also anticipate likely continuations based on established genre practices. Consequently, an agent designed to collaborate with a Jazz musician requires a different knowledge base and response strategy than one intended for a Classical or Electronic music performer. Genre thus serves as a crucial contextual framework for interpreting artistic expression and enabling meaningful interaction between human and artificial intelligence.
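One classic way for an agent to anticipate continuations is a stochastic model of what tends to follow what; stochastic approaches appear among the historically common techniques noted earlier. The sketch below, a first-order Markov chain over MIDI pitches, is swapped in for illustration and is not presented as the paper's method; the function names are hypothetical. Trained on a genre-specific corpus (for example, transcribed Jazz solos rather than a single phrase), the same transition table would encode that genre's habits, which is one concrete sense in which genre shapes an agent's responses.

```python
import random
from collections import defaultdict
from typing import Dict, List, Optional


def learn_transitions(phrase: List[int]) -> Dict[int, List[int]]:
    """Record which pitch followed which in the performer's phrase (first-order Markov)."""
    table: Dict[int, List[int]] = defaultdict(list)
    for a, b in zip(phrase, phrase[1:]):
        table[a].append(b)  # duplicates are kept so frequent moves are sampled more often
    return dict(table)


def continue_phrase(table: Dict[int, List[int]], start: int, length: int,
                    rng: Optional[random.Random] = None) -> List[int]:
    """Generate up to `length` pitches by sampling observed successors.

    Generation stops early at a pitch that never had a successor.
    """
    rng = rng or random.Random()
    out = [start]
    while len(out) < length:
        successors = table.get(out[-1])
        if not successors:
            break
        out.append(rng.choice(successors))
    return out
```

Passing a seeded `random.Random` makes a continuation reproducible for testing; during performance, an unseeded generator keeps responses varied.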

The analysis of 184 distinct systems, spanning performance practices, technological implementations, and theoretical frameworks, provides a foundation for further study of musical improvisation with agents. At this scale, it surfaces recurring patterns and variables that shape agent-performer interaction and quantifies how often particular design choices occur. The resulting dataset and methodological approach are intended to encourage further exploration at the intersection of music, artificial intelligence, and cognitive science, fostering innovation in both performance and the development of intelligent musical tools. This work isn’t merely descriptive; it provides a springboard for future studies aiming to refine agent algorithms, model creative processes, and deepen understanding of the complex dynamics inherent in live musical performance.

The exploration of design spaces, as detailed in the paper, inherently involves a process of deconstruction and re-evaluation. This mirrors a core tenet of systems thinking: understanding how components interact by intentionally probing their boundaries. As Brian Kernighan famously stated, “Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.” This sentiment captures the necessity of simplicity and transparency within complex systems, a principle directly applicable to the design of live music agents. The paper’s systematic analysis aims to expose the underlying assumptions and constraints of current approaches, essentially ‘debugging’ the existing design landscape to reveal opportunities for more robust and creative AI in live music performance. It’s about acknowledging the inherent limitations and designing with those in mind, rather than attempting to mask them with complexity.

Where the Music Takes Us

The articulation of this design space for live music agents isn’t a culmination, but an invitation to deconstruction. Every exploit starts with a question, not with intent. The systematization of existing approaches reveals, perhaps predictably, the areas most rigidly defended – and thus, those ripe for subversion. Current systems largely operate within pre-defined musical constraints; the next iteration isn’t about building better improvisation engines, but about systems that actively challenge the definition of musicality itself.

A critical limitation lies in the assessment metrics. Evaluating ‘creativity’ or ‘engagement’ through conventional HCI lenses feels…circular. The agent isn’t meant to replicate human performance; it’s meant to expose the underlying rules of performance. Future work must focus on metrics that quantify unexpectedness, structural disruption, and the agent’s capacity to force a re-evaluation of musical norms in both itself and its human collaborators.
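As one possible way to quantify unexpectedness, the sketch below measures surprisal, the negative base-2 log-probability of each note transition, under a bigram model fit on earlier material; higher average surprisal means the new material is less predictable from what came before. The choice of metric, the add-one (Laplace) smoothing, and the 128-symbol vocabulary (the MIDI pitch range) are illustrative assumptions, not a metric taken from the paper, and the name `mean_surprisal` is hypothetical.

```python
import math
from collections import Counter
from typing import List


def mean_surprisal(train: List[int], test: List[int], vocab_size: int = 128) -> float:
    """Average bits of surprisal of `test` transitions under a bigram model fit on `train`.

    Add-one smoothing over `vocab_size` possible next symbols gives unseen
    transitions a small but nonzero probability, so surprisal stays finite.
    """
    pair_counts = Counter(zip(train, train[1:]))
    ctx_counts = Counter(train[:-1])  # how often each symbol appears as a context
    bits = []
    for a, b in zip(test, test[1:]):
        p = (pair_counts[(a, b)] + 1) / (ctx_counts[a] + vocab_size)
        bits.append(-math.log2(p))
    return sum(bits) / len(bits) if bits else 0.0
```

Scored against the same history, a phrase that reuses familiar moves receives lower average surprisal than one built from transitions never heard before, which gives a rough, model-dependent handle on how far an agent departs from established material.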

The true potential isn’t in automated performance, but in automated investigation. A live music agent, properly constructed, becomes a tool for reverse-engineering the very foundations of musical experience. The question, then, isn’t “can it play?” but “what does it reveal when it breaks?”

Original article: https://arxiv.org/pdf/2602.05064.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-06 10:42