Author: Denis Avetisyan

A new decentralized control framework leverages artificial intelligence to enable multi-agent systems to efficiently map complex environments.

![The study shows that AI-augmented Density-Driven Optimal Control (D2OC) improves coverage efficiency compared with mission completion without AI, as the refined sample distribution shows. The gain comes from an adaptive learning process, tracked by the decreasing loss function L.](https://arxiv.org/html/2601.21126v1/figs/loss2.png)

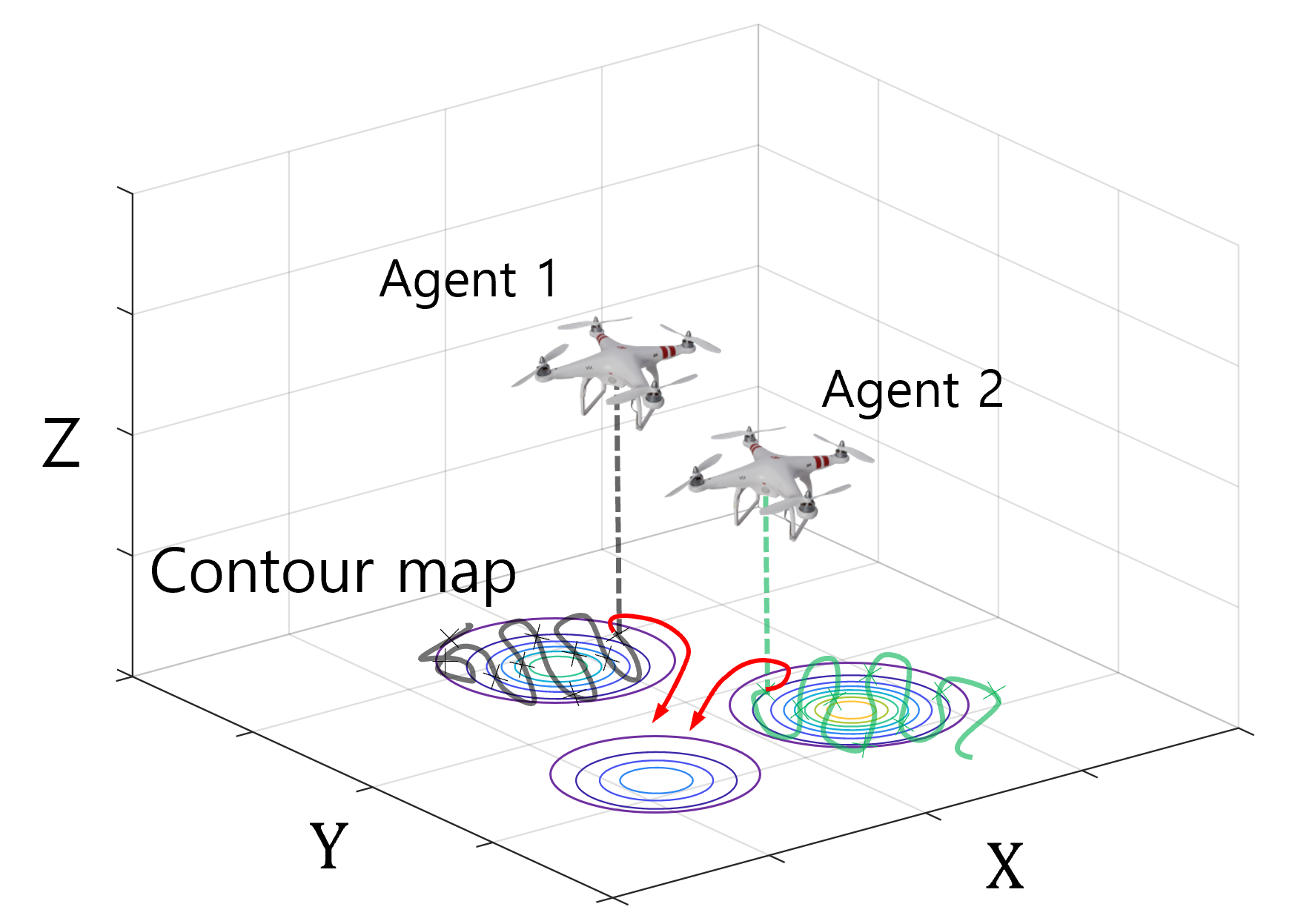

This work introduces an AI-augmented density-driven optimal control method for robust and scalable environmental mapping in multi-agent systems using Wasserstein metric-based exploration.

Effective environmental mapping with multi-agent systems is often hampered by uncertainties in sensing and communication, particularly when relying on pre-existing maps or biased priors. This paper introduces ‘AI-Augmented Density-Driven Optimal Control (D2OC) for Decentralized Environmental Mapping’, a novel framework leveraging optimal transport and machine learning to enable robust and scalable reconstruction of complex spatial distributions. By adaptively balancing exploration and exploitation via a dual multilayer perceptron module, D2OC achieves demonstrably higher-fidelity reconstructions compared to conventional decentralized approaches, with convergence rigorously proven under the Wasserstein metric. Could this AI-augmented approach unlock new capabilities for autonomous systems operating in dynamic and unpredictable environments?

Mapping the Void: Accepting Imperfect Environmental Data

Conventional environmental mapping techniques frequently assume the availability of comprehensive datasets, a premise rarely met in practice. Real-world environments are inherently dynamic, subject to unpredictable changes from weather patterns to unforeseen events, rendering complete data acquisition exceptionally difficult, if not impossible. This reliance on full coverage creates a significant limitation, as gaps in information can lead to inaccurate assessments of risk and hinder effective mitigation strategies. Consequently, methodologies that can function effectively with incomplete or fragmented data are crucial for providing timely and reliable environmental insights, particularly in scenarios where rapid response is essential. The challenge lies not in obtaining perfect information, but in intelligently interpreting what is available to build a representative and actionable understanding of the environment.

Current environmental monitoring techniques frequently encounter difficulties when data streams are disrupted or coverage is patchy, a common occurrence in remote or actively changing landscapes. Traditional approaches, reliant on complete datasets, falter when faced with intermittent signals from sensors or gaps in surveyed areas, particularly those posing significant hazards. Consequently, researchers are developing resilient strategies – including probabilistic mapping and data imputation techniques – to effectively reconstruct environmental states even with incomplete information. These methods aim to estimate conditions in unsampled regions, assess the uncertainty inherent in those estimations, and prioritize further data collection to reduce risk and improve the accuracy of hazard assessments – ultimately enabling more informed decision-making despite the challenges of real-world data limitations.

Contemporary environmental monitoring increasingly relies on autonomous systems operating in challenging conditions where consistent communication is not guaranteed. These systems must therefore prioritize intelligent exploration strategies, moving beyond simple pre-programmed routes to dynamically assess and map areas of interest. Researchers are developing algorithms that enable these agents to prioritize data collection based on uncertainty, effectively ‘asking’ where information is most needed, even with intermittent connectivity. This approach allows for the creation of increasingly accurate environmental models, despite limited communication, by focusing exploration on areas where data gaps are significant and potential hazards are likely – a crucial capability for monitoring remote, dynamic, or disaster-stricken environments.

Efficient environmental exploration is not merely desirable, but often a necessity dictated by logistical and economic realities. Complete data acquisition, involving exhaustive searches of potentially hazardous areas, quickly becomes impractical and fiscally unsustainable, particularly across large or complex terrains. Resource limitations – encompassing time, personnel, and energy expenditure – demand intelligent strategies that prioritize information gain over blanket coverage. Consequently, researchers are increasingly focused on developing algorithms and robotic systems capable of actively seeking informative data points, rather than passively collecting everything within range. This shift towards targeted exploration, driven by constraints on resources, promises to unlock more effective and scalable environmental monitoring solutions, offering a pragmatic approach to understanding dynamic and often unpredictable landscapes.

AI-Driven Exploration: A Framework for Intelligent Sampling

The AI-Augmented D2OC framework utilizes a dynamic sampling strategy where the generation of exploratory samples is not uniform but is instead modulated by the perceived criticality of different regions within the environment. This criticality is determined through an adaptive weighting mechanism which assigns higher probabilities to areas exhibiting greater potential for information gain or significant environmental features. Consequently, the system concentrates exploration efforts on these prioritized regions, improving the efficiency of data collection and reducing the time required to map and understand the environment. The weighting is adjusted iteratively based on incoming data, allowing the framework to dynamically refine its exploration strategy and focus on the most informative areas.
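The adaptive weighting described above can be sketched as weighted sampling over discrete regions, followed by a blended update of the weights. This is a minimal illustration under stated assumptions, not the paper's implementation. The region list, the criticality scores, and the `update_weights` blending rule with its `rate` parameter are all hypothetical, because the article does not give the actual update formula.

```python
import random

def normalize(weights):
    """Scale non-negative weights so they sum to 1."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must have a positive sum")
    return [w / total for w in weights]

def sample_regions(weights, n, rng):
    """Draw n region indices with probability proportional to criticality."""
    return rng.choices(range(len(weights)), weights=normalize(weights), k=n)

def update_weights(weights, info_gain, rate=0.5):
    """Blend current criticality with newly observed information gain.

    `rate` is a hypothetical smoothing factor in [0, 1]; the actual update
    rule used by D2OC is not given in the article.
    """
    return [(1 - rate) * w + rate * g for w, g in zip(weights, info_gain)]

rng = random.Random(0)
criticality = [1.0, 1.0, 4.0, 0.5]   # hypothetical: region 2 looks most critical
draws = sample_regions(criticality, 1000, rng)
counts = [draws.count(i) for i in range(len(criticality))]
print("samples per region:", counts)

# New data suggests region 2 is less informative than the prior assumed.
criticality = update_weights(criticality, info_gain=[2.0, 1.0, 0.5, 0.5])
print("updated probabilities:", normalize(criticality))
```

Sampling concentrates on the region with the highest weight, and each update shifts probability mass as evidence arrives. This is the iterative refinement the paragraph describes.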

The AI-Augmented D2OC framework incorporates a Dual Multilayer Perceptron (MLP) architecture to quantify environmental uncertainty, directing exploratory efforts towards regions expected to yield the greatest informational benefit. This MLP utilizes an AdaptiveStd loss function which, during testing, exhibited bounded oscillation between 1.4e-3 and 2.4e-3. This stability in the loss function indicates consistent adaptation of the uncertainty estimation, even under conditions of limited or intermittent communication between agents. The system’s ability to maintain this performance despite communication constraints is critical for operation in challenging and dynamic environments where continuous connectivity cannot be guaranteed.
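The article does not define the AdaptiveStd loss. One common way to let a network estimate its own uncertainty is to have one network predict the mean and a second predict the log standard deviation, then train both with a Gaussian negative log-likelihood. The sketch below assumes the dual MLP works roughly this way. The network sizes, random weights, sample location, and sensor reading are illustrative only, and no training loop is shown.

```python
import math
import random

def init_mlp(n_in, n_hidden, rng):
    """Random weights for a one-hidden-layer MLP with a scalar output."""
    w1 = [[rng.gauss(0.0, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.gauss(0.0, 0.5) for _ in range(n_hidden)]
    return w1, b1, w2, 0.0

def mlp(params, x):
    """Forward pass: tanh hidden layer followed by a linear scalar output."""
    w1, b1, w2, b2 = params
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    return sum(w * h for w, h in zip(w2, hidden)) + b2

def gaussian_nll(y, mean, log_std):
    """Negative log-likelihood of y under N(mean, exp(log_std)**2)."""
    var = math.exp(2.0 * log_std)
    return 0.5 * math.log(2.0 * math.pi * var) + (y - mean) ** 2 / (2.0 * var)

rng = random.Random(1)
mean_net = init_mlp(2, 8, rng)   # network 1: predicted field value at (x, y)
std_net = init_mlp(2, 8, rng)    # network 2: predicted log standard deviation

position = [0.3, -0.7]           # hypothetical sample location
observed = 0.9                   # hypothetical sensor reading there
mu = mlp(mean_net, position)
log_sigma = mlp(std_net, position)
loss = gaussian_nll(observed, mu, log_sigma)
uncertainty = math.exp(log_sigma)  # larger values mark more attractive exploration targets
print(f"mean={mu:.3f} std={uncertainty:.3f} loss={loss:.3f}")
```

The loss rises when the mean is wrong and the predicted spread is small. Training therefore pushes the standard-deviation network upward wherever predictions are poor, and that learned spread is what could steer agents toward informative regions.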

The AI-Augmented D2OC framework uses a virtual uncertainty mechanism to promote exploration of areas the agent network has not yet mapped. Every location receives a high initial uncertainty value before it is visited. As agents explore and gather data, they update these values from observed evidence, while unvisited regions keep their elevated virtual uncertainty. This artificially inflated uncertainty gives agents an incentive to prioritize those areas, which ensures comprehensive coverage and prevents premature convergence on regions that are already known. For these initial exploration targets, the system relies only on the absence of data, not on any measured environmental uncertainty. This matters in environments where initial sensing is limited or unreliable.
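A minimal grid-based sketch of the virtual uncertainty idea follows. The constant `VIRTUAL_UNCERTAINTY`, the `noise / sqrt(visits)` shrinkage rule, and the nearest-first tie-breaking are assumptions chosen for clarity, not details taken from the paper.

```python
VIRTUAL_UNCERTAINTY = 1.0  # hypothetical value given to every unvisited cell

class UncertaintyGrid:
    """Per-cell uncertainty map in which unvisited cells keep a high virtual value."""

    def __init__(self, width, height):
        cells = [(i, j) for i in range(width) for j in range(height)]
        self.sigma = {c: VIRTUAL_UNCERTAINTY for c in cells}
        self.visits = {c: 0 for c in cells}

    def observe(self, cell, sensor_noise=0.2):
        """Shrink uncertainty at a visited cell (hypothetical noise / sqrt(visits) rule)."""
        self.visits[cell] += 1
        self.sigma[cell] = min(VIRTUAL_UNCERTAINTY,
                               sensor_noise / self.visits[cell] ** 0.5)

    def next_target(self, position):
        """Most uncertain cell; ties go to the nearest cell (Manhattan distance)."""
        px, py = position
        return max(self.sigma, key=lambda c: (self.sigma[c],
                                              -(abs(c[0] - px) + abs(c[1] - py))))

grid = UncertaintyGrid(3, 3)
grid.observe((0, 0))
grid.observe((1, 0))
target = grid.next_target((0, 0))
print("next target:", target)  # an unvisited neighbour of the agent
```

Visited cells drop well below the virtual value, so the agent is always drawn to a cell it has not yet seen. No real uncertainty estimate is needed to make that choice.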

The AI-Augmented D2OC framework incorporates strategies for maintaining operational efficiency under conditions of intermittent agent communication. This is achieved through a decentralized architecture where each agent can independently execute exploration and data collection, reducing reliance on constant connectivity. Agents utilize locally stored data and models to make informed decisions, only requiring periodic synchronization to share accumulated knowledge and refine the global exploration strategy. This design mitigates the impact of communication failures or delays, improving the system’s robustness in environments characterized by limited bandwidth, signal obstruction, or network instability. The system’s performance is therefore less sensitive to communication constraints than centralized approaches, enabling continued operation and data acquisition even during periods of connectivity loss.
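The intermittent-communication behaviour can be illustrated with agents that keep sensing into a local map and merge maps only when a link happens to be available. This is a hypothetical sketch. The 30% link probability, the grid cells, and the newest-reading-wins merge rule are assumptions, and the real framework presumably shares richer state than raw readings.

```python
import random

class Agent:
    """Agent that senses locally and shares its map only when a link is up."""

    def __init__(self, name):
        self.name = name
        self.local_map = {}  # cell -> (timestamp, measurement)

    def sense(self, cell, t, value):
        self.local_map[cell] = (t, value)

    def merge(self, other_map):
        """Keep the newest reading per cell (ties broken by value), so merging
        is order-independent and repeating a merge changes nothing."""
        for cell, reading in other_map.items():
            if cell not in self.local_map or reading > self.local_map[cell]:
                self.local_map[cell] = reading

def try_sync(a, b, link_up):
    """Exchange maps only if the (hypothetical) communication link is available."""
    if link_up:
        a.merge(b.local_map)
        b.merge(a.local_map)
    return link_up

rng = random.Random(3)
a, b = Agent("a"), Agent("b")
syncs = 0
for t in range(6):
    a.sense((t, 0), t, 0.1 * t)  # each agent keeps working while disconnected
    b.sense((0, t), t, 0.2 * t)
    syncs += try_sync(a, b, link_up=rng.random() < 0.3)  # assumed ~30% link availability
try_sync(a, b, link_up=True)     # final rendezvous
print(f"{syncs} opportunistic syncs; shared map has {len(a.local_map)} cells")
```

The merge is commutative and idempotent, so it does not matter how often or in what order agents meet. Any pair that eventually exchanges maps ends up with the same view.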

Strategic Coverage: Prioritizing Information Over Completeness

Non-uniform coverage strategies deviate from the traditional approach of systematically mapping an environment with equal resolution across all areas. Instead, these strategies dynamically prioritize regions based on factors such as object density, anticipated robot path, or semantic importance. This prioritization is achieved through algorithms that allocate mapping resources – such as sensor time or robot movement – disproportionately to these critical areas. Consequently, the framework achieves a more efficient exploration process by rapidly building detailed models of high-interest zones while accepting a lower resolution or delayed mapping of less crucial regions, reducing overall computational load and mission duration.
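One simple way to allocate resources disproportionately to critical areas is to split a fixed budget in proportion to importance scores. The sketch below uses largest-remainder rounding, so the integer shares always add up to the budget. The zone names, the scores, and the notion of "sensing minutes" are hypothetical.

```python
def allocate_budget(importance, budget):
    """Split an integer sensing budget across regions in proportion to importance.

    Largest-remainder rounding guarantees the shares sum exactly to `budget`.
    Importance values are assumed non-negative with a positive sum.
    """
    total = sum(importance)
    exact = [budget * w / total for w in importance]
    shares = [int(x) for x in exact]
    leftover = budget - sum(shares)
    # Hand out the remaining units to the largest fractional parts.
    by_remainder = sorted(range(len(exact)),
                          key=lambda i: exact[i] - shares[i], reverse=True)
    for i in by_remainder[:leftover]:
        shares[i] += 1
    return shares

# Hypothetical importance scores for four zones of a landfill site.
zones = ["gas vent", "cracked cap", "slope", "access road"]
importance = [5.0, 2.0, 1.0, 0.5]
shares = allocate_budget(importance, budget=100)
for zone, n in zip(zones, shares):
    print(f"{zone:12s} {n:3d} sensing minutes")
```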

Non-uniform coverage is achieved through several distinct methodologies. Ergodic Exploration steers agents so that the fraction of time their trajectories spend in each region matches a target density. Over the long run, high-priority regions are therefore revisited in proportion to their importance. Information-Theoretic Coverage applies information gain directly: it actively seeks out regions whose observation most reduces uncertainty in the environmental map, focusing on areas with high information density. Distributed Optimal Transport treats coverage as an optimization problem. It assigns mapping tasks to agents so as to minimize a cost function that combines coverage completeness and travel distance, which distributes mapping effort efficiently.
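Ergodic exploration is usually quantified by comparing the time-averaged statistics of a trajectory with a target density in a cosine (Fourier) basis, with low-frequency modes weighted most heavily. The 1-D sketch below computes that spectral ergodic metric on `[0, length]`. It assumes a normalized target density and equally spaced time samples. The number of modes, the grid resolution, and the example trajectories are illustrative choices, and the paper's own exploration terms may differ.

```python
import math

def basis(k, x, length):
    """Normalized cosine basis function f_k on [0, length]."""
    h_k = math.sqrt(length) if k == 0 else math.sqrt(length / 2)
    return math.cos(k * math.pi * x / length) / h_k

def trajectory_coeffs(xs, n_modes, length):
    """c_k: time average of f_k along the sampled trajectory."""
    return [sum(basis(k, x, length) for x in xs) / len(xs) for k in range(n_modes)]

def target_coeffs(density, n_modes, length, n_grid=1000):
    """phi_k: integral of density(x) * f_k(x) over [0, length], midpoint rule."""
    dx = length / n_grid
    grid = [(i + 0.5) * dx for i in range(n_grid)]
    return [sum(density(x) * basis(k, x, length) for x in grid) * dx
            for k in range(n_modes)]

def ergodic_metric(xs, density, n_modes=10, length=1.0):
    """Sum over k of (1 + k^2)^-s * (c_k - phi_k)^2, with s = 1 in one dimension."""
    c = trajectory_coeffs(xs, n_modes, length)
    phi = target_coeffs(density, n_modes, length)
    return sum((ck - pk) ** 2 / (1 + k * k) for k, (ck, pk) in enumerate(zip(c, phi)))

def uniform(x):
    return 1.0  # target density: equal interest everywhere on [0, 1]

sweep = [(i + 0.5) / 200 for i in range(200)]  # trajectory that visits [0, 1] evenly
stuck = [0.1] * 200                            # trajectory parked at x = 0.1
print(ergodic_metric(sweep, uniform), ergodic_metric(stuck, uniform))
```

An even sweep matches the uniform target almost exactly, while a trajectory parked in one spot scores far worse. An ergodic controller drives this metric down over time.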

Prioritization of mapping efforts towards critical areas within an environment demonstrably reduces the computational time and resource expenditure required for accurate model creation. This efficiency stems from concentrating data acquisition on regions of higher importance – for example, areas with increased feature density or those directly relevant to task objectives. By strategically allocating mapping resources, the framework minimizes redundant data collection in less significant zones, leading to a faster build time for the environmental model and a reduction in required processing power, memory usage, and overall energy consumption. The resulting model achieves comparable accuracy to uniformly mapped environments while requiring fewer resources.

The integration of Ergodic Exploration, Information-Theoretic Coverage, and Distributed Optimal Transport provides a resilient mapping solution suitable for varied environments. Ergodic Exploration ensures complete coverage over time, while Information-Theoretic Coverage prioritizes areas that reduce uncertainty in the environmental model most efficiently. Distributed Optimal Transport then facilitates coordinated mapping across multiple agents or sensors, minimizing redundant data collection. This combined approach allows the system to adapt to changes in the environment, such as the introduction of new obstacles or dynamic features, by dynamically adjusting coverage priorities and sensor allocation, resulting in a consistently accurate and up-to-date environmental model even in complex and evolving scenarios.
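Distributed optimal transport ultimately decides which agent takes which part of the mapping effort. In one dimension, with as many agents as target points and a convex cost such as squared distance, the optimal assignment is simply to pair both sets in sorted order. The sketch below assumes that simplified 1-D, centralized setting; D2OC itself is decentralized and works over a spatial map. It also checks the result against brute force. All positions are hypothetical.

```python
from itertools import permutations

def match_agents_1d(agent_positions, target_points):
    """Assign each agent one target point, minimizing total |x - y|**p (p >= 1).

    In one dimension, pairing agents and targets in sorted order is optimal
    for any convex cost of the distance, so no general solver is needed.
    """
    if len(agent_positions) != len(target_points):
        raise ValueError("need equal numbers of agents and target points")
    agents_by_position = sorted(range(len(agent_positions)),
                                key=agent_positions.__getitem__)
    assignment = [0.0] * len(agent_positions)
    for agent, target in zip(agents_by_position, sorted(target_points)):
        assignment[agent] = target
    return assignment

def transport_cost(agents, assignment, p=2):
    """Total cost of moving each agent to its assigned point."""
    return sum(abs(a - t) ** p for a, t in zip(agents, assignment))

agents = [0.9, 0.1, 0.5]    # hypothetical agent positions along a transect
targets = [0.2, 0.8, 0.45]  # hypothetical high-priority sample locations
plan = match_agents_1d(agents, targets)
best = min(transport_cost(agents, perm) for perm in permutations(targets))
print(plan, transport_cost(agents, plan), best)
```

In two or more dimensions the sorted-order shortcut no longer holds, and a general assignment or optimal transport solver is needed.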

Beyond the Map: Real-World Impact and Future Resilience

The AI-Augmented Density-Driven Optimal Control (D2OC) framework represents a significant advance in mapping technology, especially when information is incomplete or communication networks are unreliable. Traditional methods struggle with sparse or intermittent data. D2OC instead uses learned uncertainty estimates to direct agents toward gaps in the map and to maintain map accuracy. Because each agent plans from its own local data and synchronizes only periodically, D2OC can build a coherent map even when individual sensors suffer temporary outages or provide limited observations. This robustness lets the system operate in dynamic, unpredictable environments where conventional mapping techniques falter, offering greater reliability and precision in critical applications.

The AI-Augmented D2OC framework has proven effective in a practical application: mapping landfill methane plumes, a significant environmental and safety concern. Simulations reveal the system not only accurately delineates these hazardous areas, enabling targeted mitigation efforts, but also outperforms traditional decentralized mapping techniques. This superiority is quantified by a demonstrably lower steady-state Wasserstein distance – a metric indicating improved convergence and higher fidelity in the resulting maps. Essentially, the framework creates a more precise and reliable representation of the plume’s extent, facilitating informed decision-making regarding gas capture, ventilation, and ultimately, risk reduction for nearby communities and the environment.
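The Wasserstein distance used to score these reconstructions measures how far the agents' sample distribution lies from the target distribution. For two small, equally sized, equally weighted point clouds, it can be computed exactly by searching over permutations, because an optimal transport plan between such measures can always be taken to be a one-to-one matching. This brute-force sketch assumes p = 2 and uses hypothetical plume samples. Practical code would use a linear-assignment or optimal transport solver instead of enumerating permutations.

```python
import itertools
import math

def wasserstein_empirical(xs, ys, p=2):
    """Exact W_p between two equal-size, equally weighted 2-D point clouds.

    Searches every one-to-one matching, so it is only practical for small n.
    """
    n = len(xs)
    if n != len(ys) or n == 0:
        raise ValueError("point clouds must be non-empty and the same size")
    best = min(
        sum(math.dist(xs[i], ys[j]) ** p for i, j in enumerate(perm))
        for perm in itertools.permutations(range(n))
    )
    return (best / n) ** (1 / p)

plume = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]  # hypothetical target samples
shifted = [(x + 0.5, y) for x, y in plume]                # same layout, offset by 0.5 in x
clustered = [(0.5, 0.5)] * 4                              # agent samples bunched at the centre
print(wasserstein_empirical(plume, shifted), wasserstein_empirical(plume, clustered))
```

The offset samples that preserve the plume's layout score 0.5. The clustered samples score about 0.707, which matches the intuition that a lower steady-state value means a more faithful map.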

Further development of the AI-Augmented D2OC framework prioritizes a transition towards dynamic, real-world application. Researchers intend to integrate the system with continuously updating sensor networks, allowing for real-time mapping and hazard assessment. This will move beyond static map creation to an adaptive process where the framework learns from environmental feedback – adjusting exploration strategies and refining map accuracy based on incoming data. Such an approach promises not only improved mapping fidelity in complex and changing environments, but also the potential for predictive modeling, anticipating plume movement or identifying emerging hazards before they escalate, ultimately enhancing the system’s utility for proactive environmental management and public safety.

Accurate and timely mapping is increasingly vital for navigating complex environmental issues and safeguarding communities. Traditional mapping methods often struggle with the scale and dynamism of real-world challenges, hindering effective responses to events like pollution dispersal, natural disasters, and infrastructure failures. The demand for robust mapping techniques extends beyond mere visualization; it necessitates systems capable of operating with incomplete data, adapting to changing conditions, and providing actionable insights for mitigation and prevention. Consequently, advancements in this field are not simply technological improvements, but essential components of a proactive approach to environmental stewardship and public safety, enabling more informed decision-making and ultimately, more resilient communities.

The pursuit of elegant solutions in multi-agent systems, as presented in this work on AI-augmented decentralized control, invariably leads to unforeseen complications. The promise of reconstructing complex spatial distributions with improved efficiency feels… familiar. Nathan Myhrvold once observed, “Software is a gas; it expands to fill its container.” Similarly, these ‘revolutionary’ frameworks, designed to balance exploration and exploitation, will eventually expand to accommodate every edge case production can throw at them. The Wasserstein metric may offer an optimal transport solution on paper, but the real world tends to favor pragmatic compromises over theoretical perfection. It’s a beautiful algorithm, until it encounters its first sensor failure.

So, What Breaks First?

This AI-augmented dance with Wasserstein metrics and decentralized control is, predictably, not a panacea. The presented framework certainly shifts some parameters – promises of improved efficiency and robustness are always attractive. But let’s be clear: production will find the edge cases. The real challenge isn’t reconstructing spatial distributions; it’s reconstructing them reliably when faced with sensor drift, communication loss, and agents actively attempting to deceive the system – because they always will. The adaptive exploration/exploitation balance, while elegant in simulation, will likely require constant recalibration in any real-world deployment.

The inevitable next step, of course, isn’t more sophisticated machine learning. It’s a deeper dive into the limitations of optimal transport itself. How does this approach scale with dimensionality? What are the computational bottlenecks when dealing with genuinely complex, dynamic environments? The field will likely circle back to older, ‘less elegant’ methods – the ones discarded for failing to meet theoretical ideals – simply because they’re more forgiving of real-world noise. Everything new is old again, just renamed and still broken.

Ultimately, this work highlights a familiar truth: a beautiful algorithm is merely a well-defined failure mode waiting to happen. The question isn’t whether this framework works, but when it breaks, and what the cost of that breakage will be. Expect a flurry of papers addressing these practical concerns – and then, inevitably, a new ‘revolutionary’ approach that promises to solve all the problems, only to introduce a fresh set of them.

Original article: https://arxiv.org/pdf/2601.21126.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-01 01:58