Swarm Intelligence Takes Flight: AI-Powered Indoor Exploration

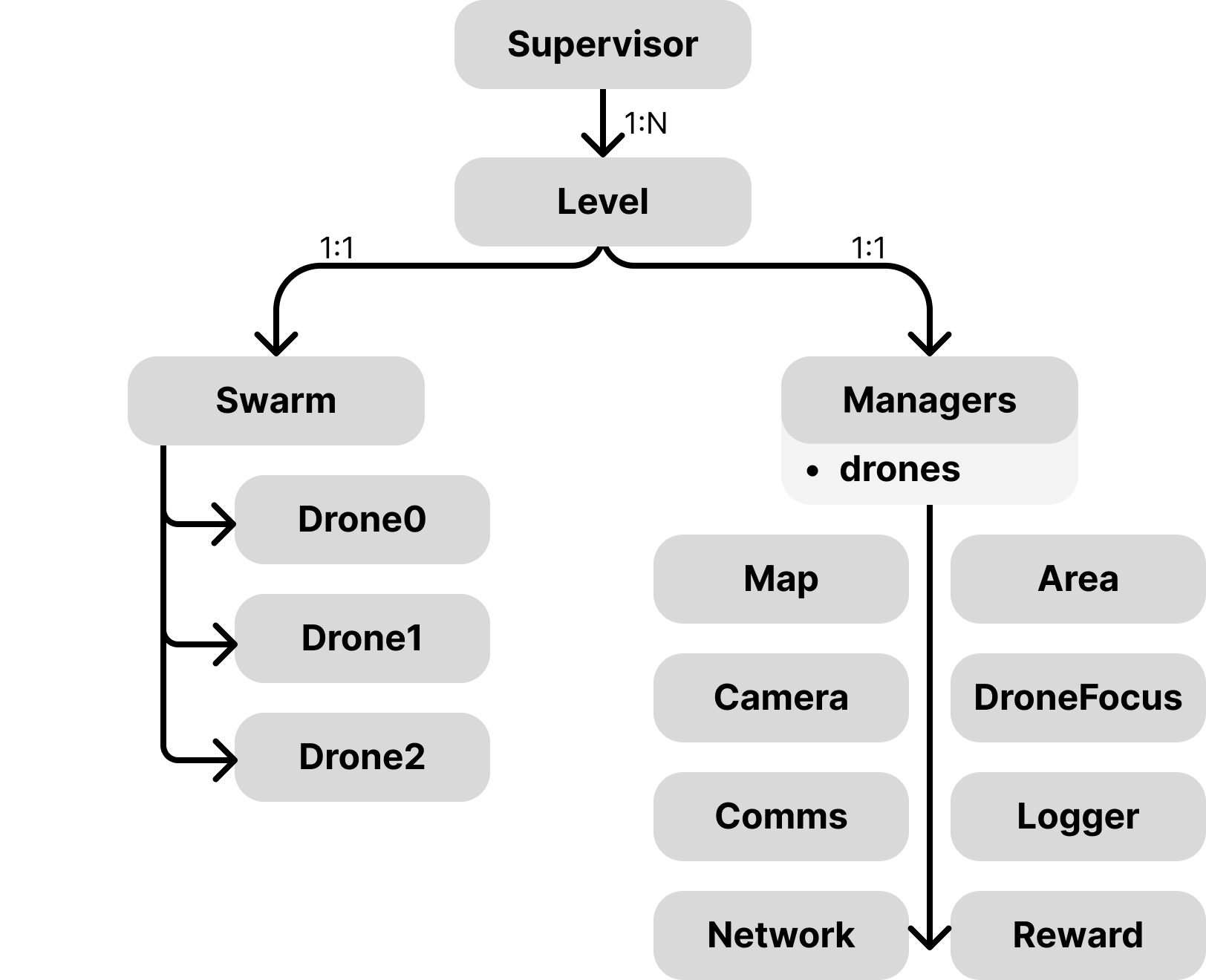

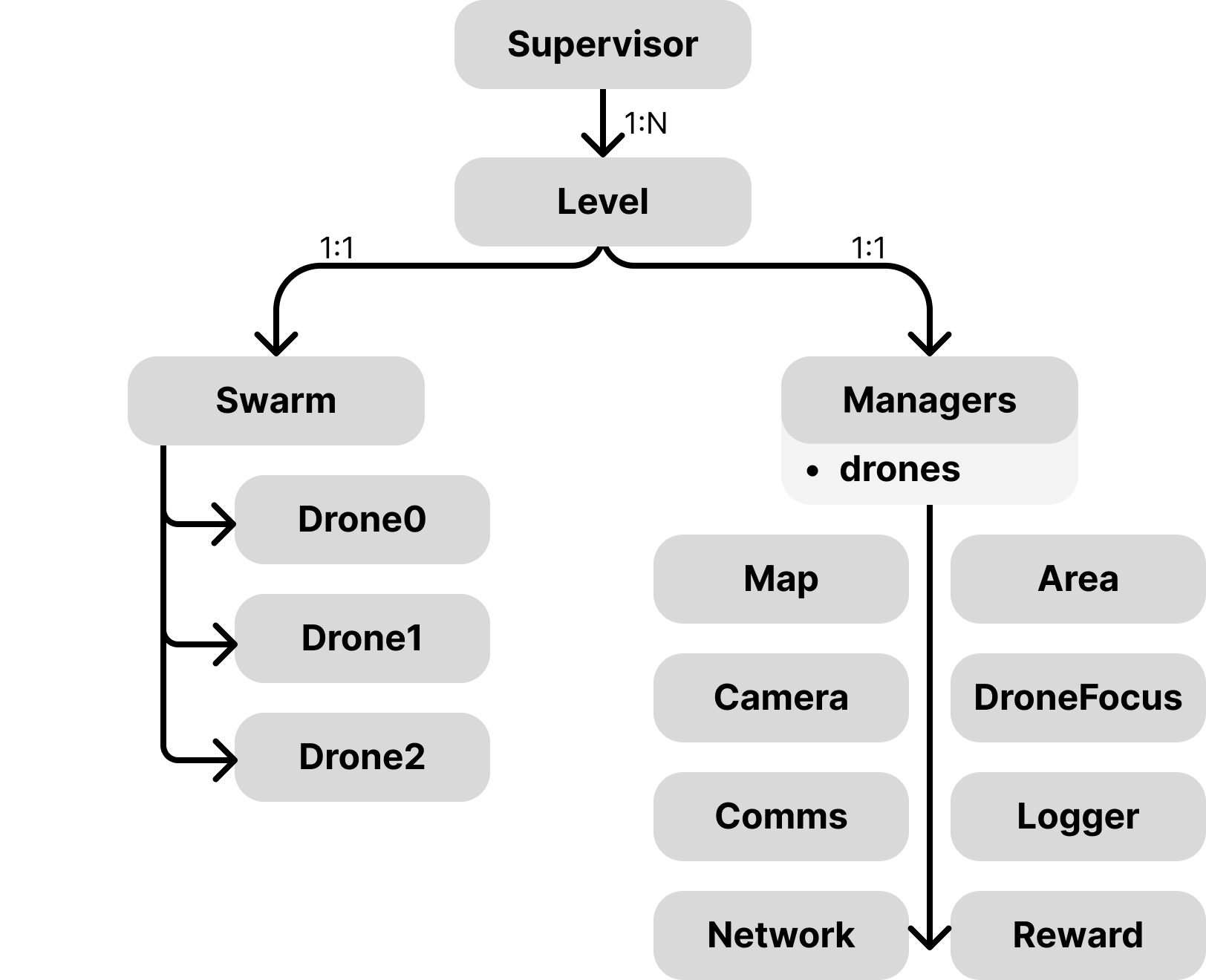

This research details a novel framework for training teams of autonomous agents to map and navigate complex indoor spaces.

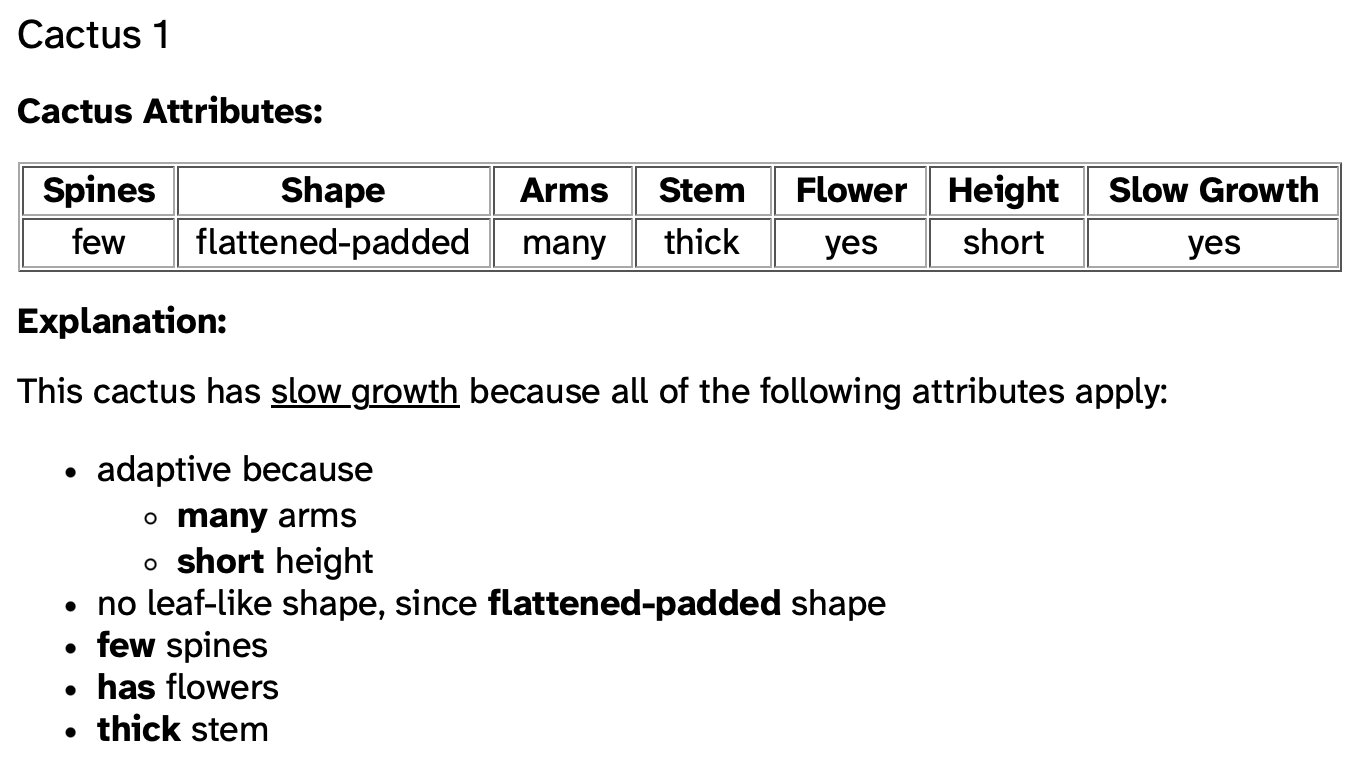

New research shows that stripping away irrelevant details from symbolic AI explanations can significantly improve how easily people grasp complex systems.

New research reveals that large language models struggle with everyday reasoning that relies on understanding unwritten social rules and contextual cues.

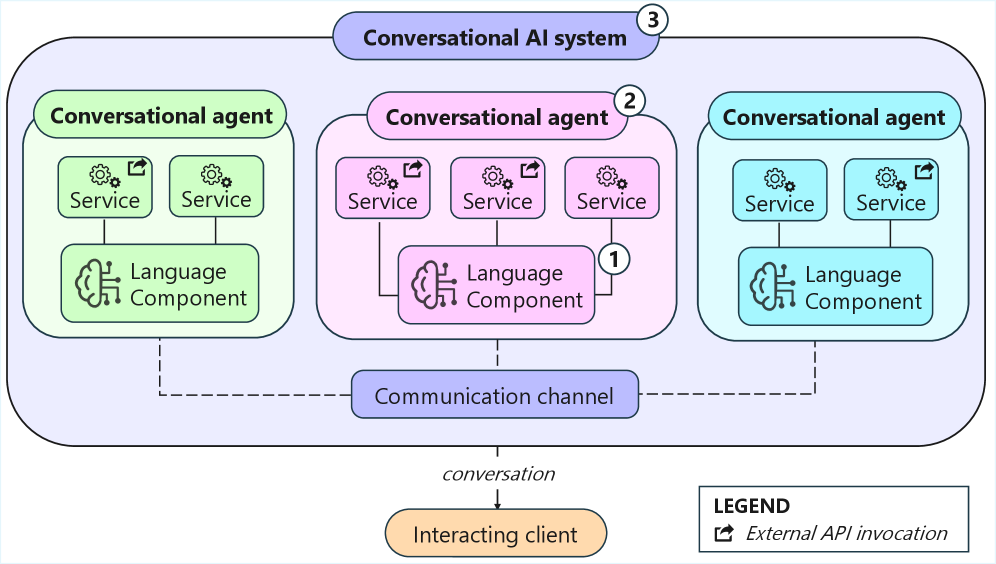

As conversational AI systems become increasingly complex, ensuring their reliability requires a shift towards systematic and automated quality assurance.

This tutorial offers a unified approach to integrating reasoning capabilities into information retrieval, moving beyond simple pattern matching to address complex information needs.

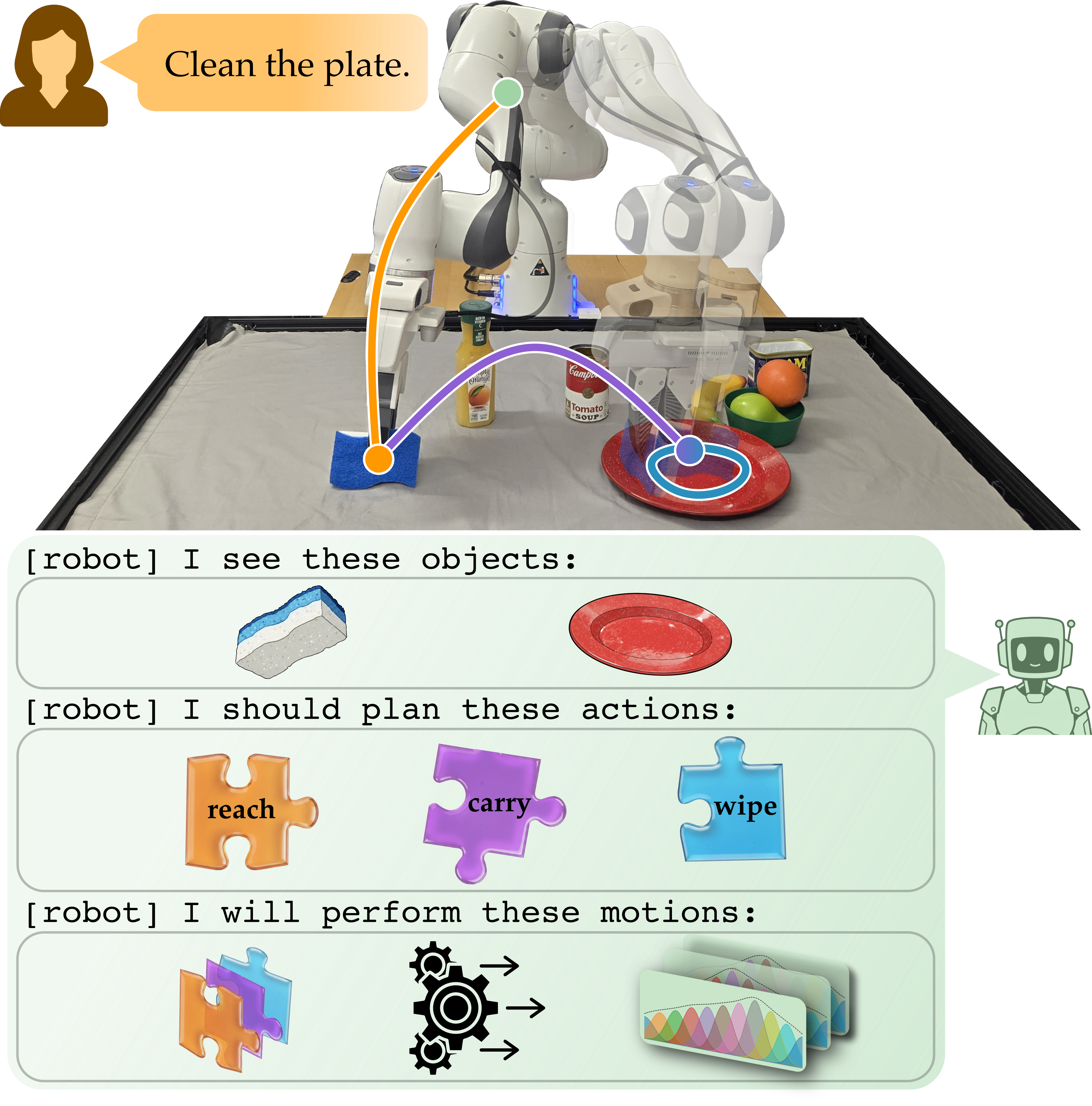

A new framework bridges the gap between natural language and robotic action, allowing robots to perform complex tasks based on spoken instructions.

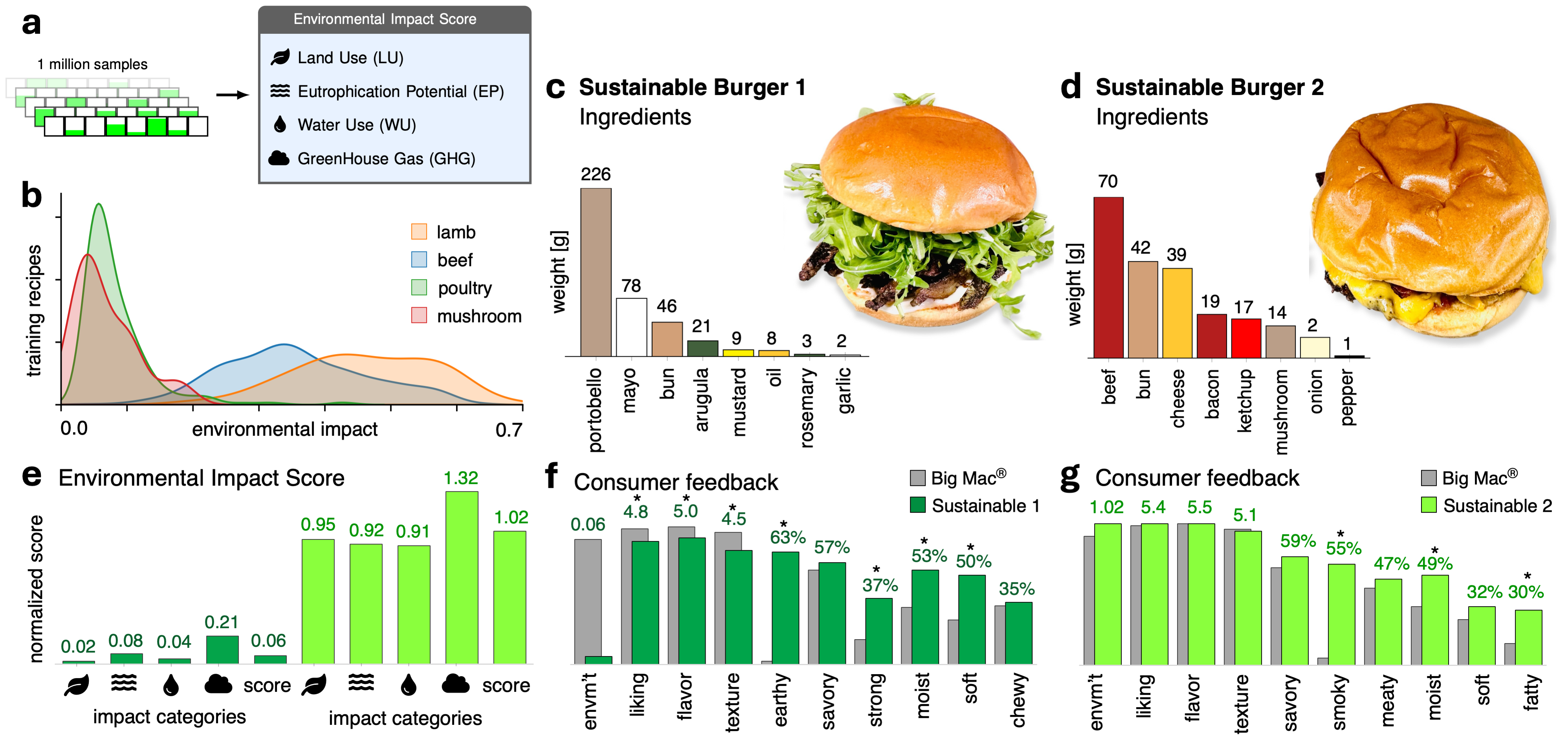

New research shows artificial intelligence can generate burger recipes that satisfy human palates while prioritizing sustainability and nutrition.

![The framework synthesizes complex problem-solving capabilities through a three-stage process (skill acquisition from diverse data, agentic supervised fine-tuning that mirrors expert reasoning with dynamic skill pruning, and multi-granularity reinforcement learning), guided by a structured reasoning flow [latex]Draft \to Check \to Refine \to Finalize[/latex] and curriculum-based skill distribution, to generate challenging problems for downstream solver training.](https://arxiv.org/html/2602.03279v1/x3.png)

Researchers have developed a new method for creating increasingly complex reasoning problems to better train artificial intelligence systems.

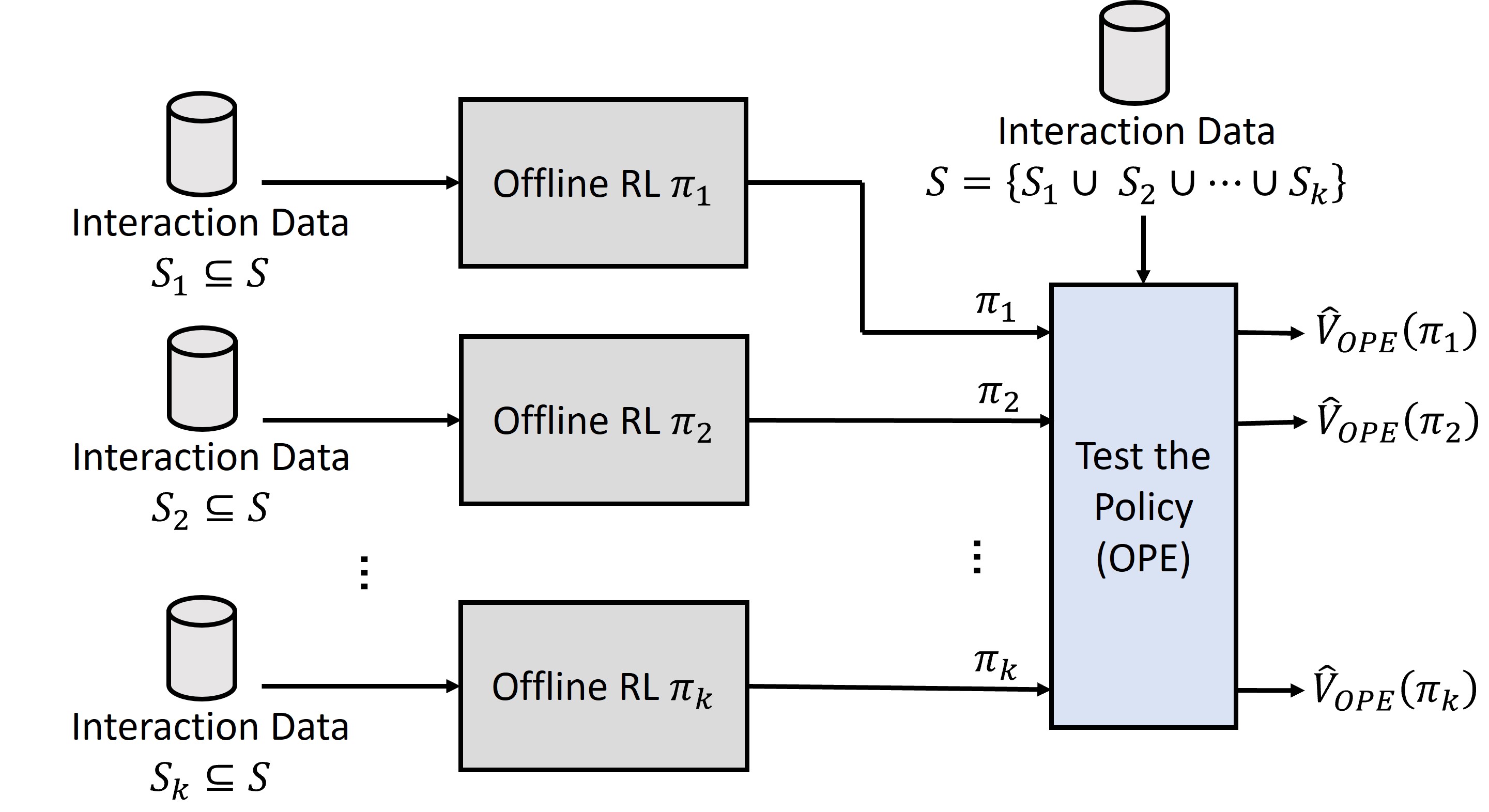

New research demonstrates how leveraging human physiological data and off-policy evaluation can significantly improve the performance and usability of reinforcement learning agents in collaborative robotic systems.

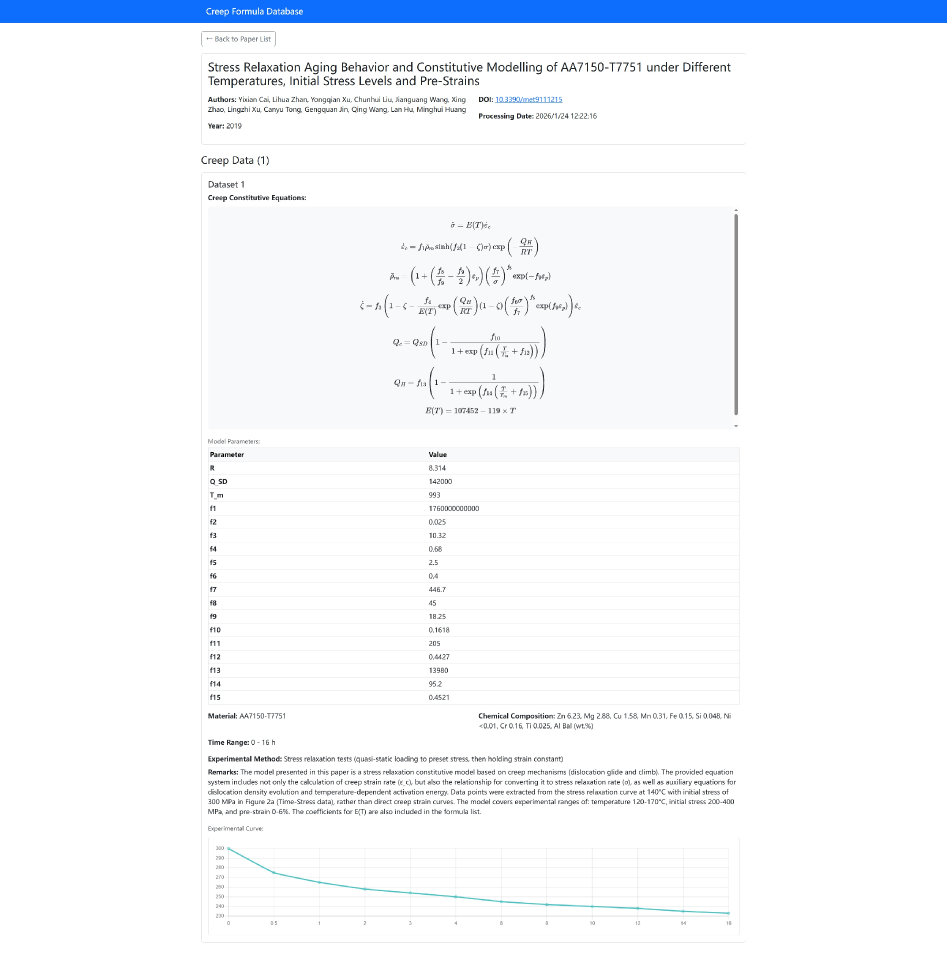

A new framework uses intelligent software to automatically build comprehensive databases from scientific papers, accelerating materials science research.