Robots That Talk to Each Other Explore Further

New research demonstrates how coordinating movement with radio signal strength allows teams of robots to map complex environments more efficiently.

![The replication of the box generator, denoted as [latex] g_{box} [/latex], confirms the consistency and robustness of the foundational system established in Figure 1.](https://arxiv.org/html/2602.12270v1/box.png)

As generative AI reshapes creative landscapes, a fundamental challenge arises: how do we define and protect ownership in a world of algorithmically derived art?

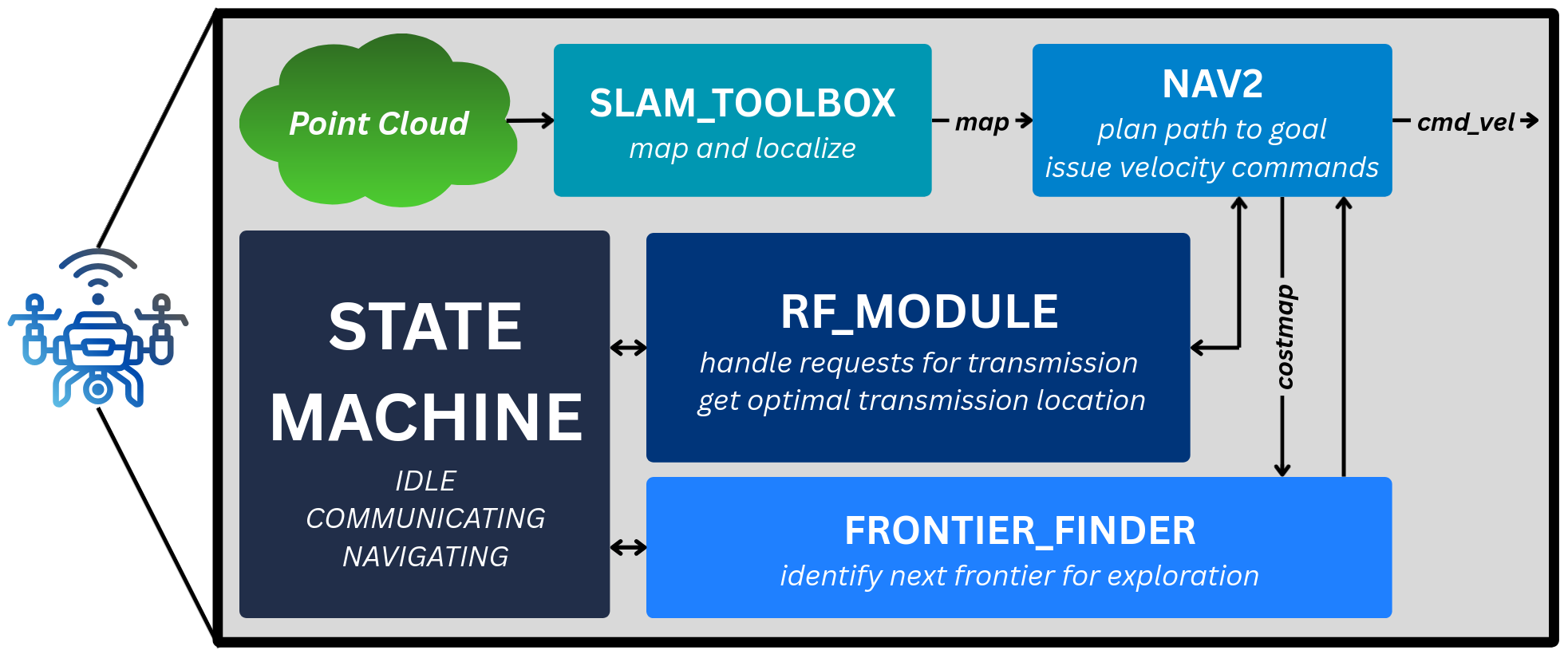

A new framework enables robots to acquire complex manipulation skills simply by observing human videos, offering a low-cost path to advanced automation.

![Descriptor ranking, assessed via ARFS scores and predicated on the maximum bond-projected force constant [latex] max\_pfc [/latex], demonstrates a clear distinction between descriptor groups originating from structural and compositional analyses (those derived using “MATMINER”) and those extracted from “LOBSTER” calculation data, highlighting differing predictive capabilities in characterizing material properties.](https://arxiv.org/html/2602.12109v1/Figs/arfs_imp_max_pfc.png)

A new critical assessment reveals how incorporating quantum-chemical bonding descriptors significantly improves machine learning predictions of key materials properties.

Researchers have developed a new framework that empowers agents to ‘imagine’ potential scenarios, dramatically improving their ability to understand complex human-object interactions.

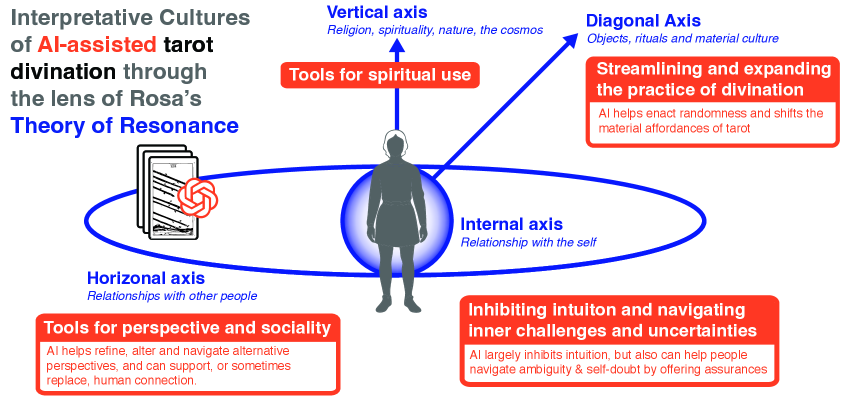

A new study examines how tarot practitioners are integrating artificial intelligence into their readings, transforming the practice of divination and the search for personal meaning.

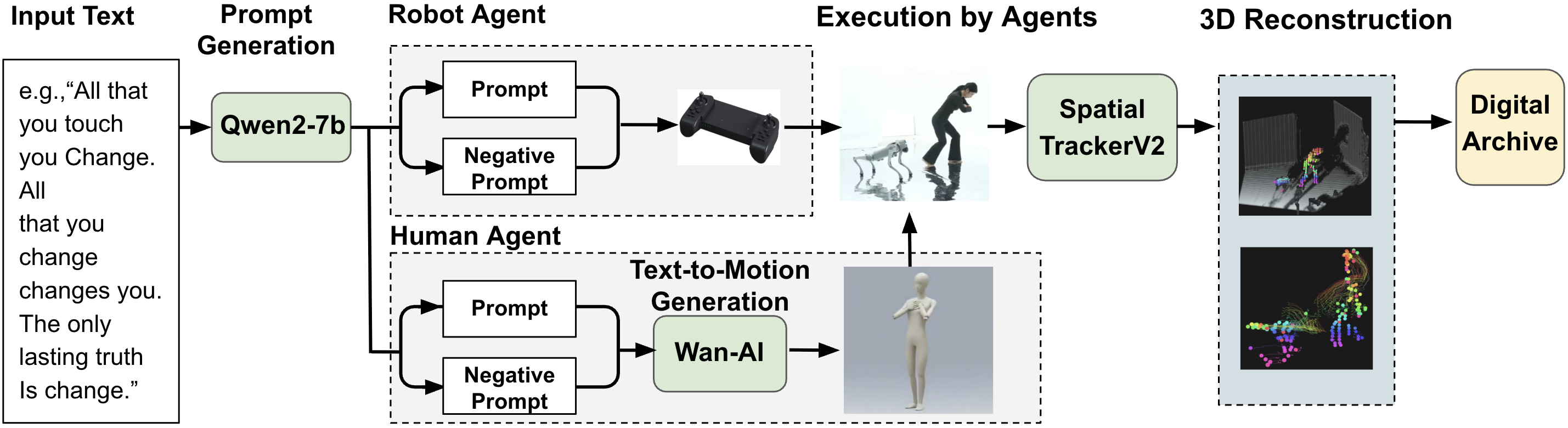

A new framework, ReTracing, explores how generative AI systems encode and perpetuate biases through the choreography of human, robotic, and virtual interactions.

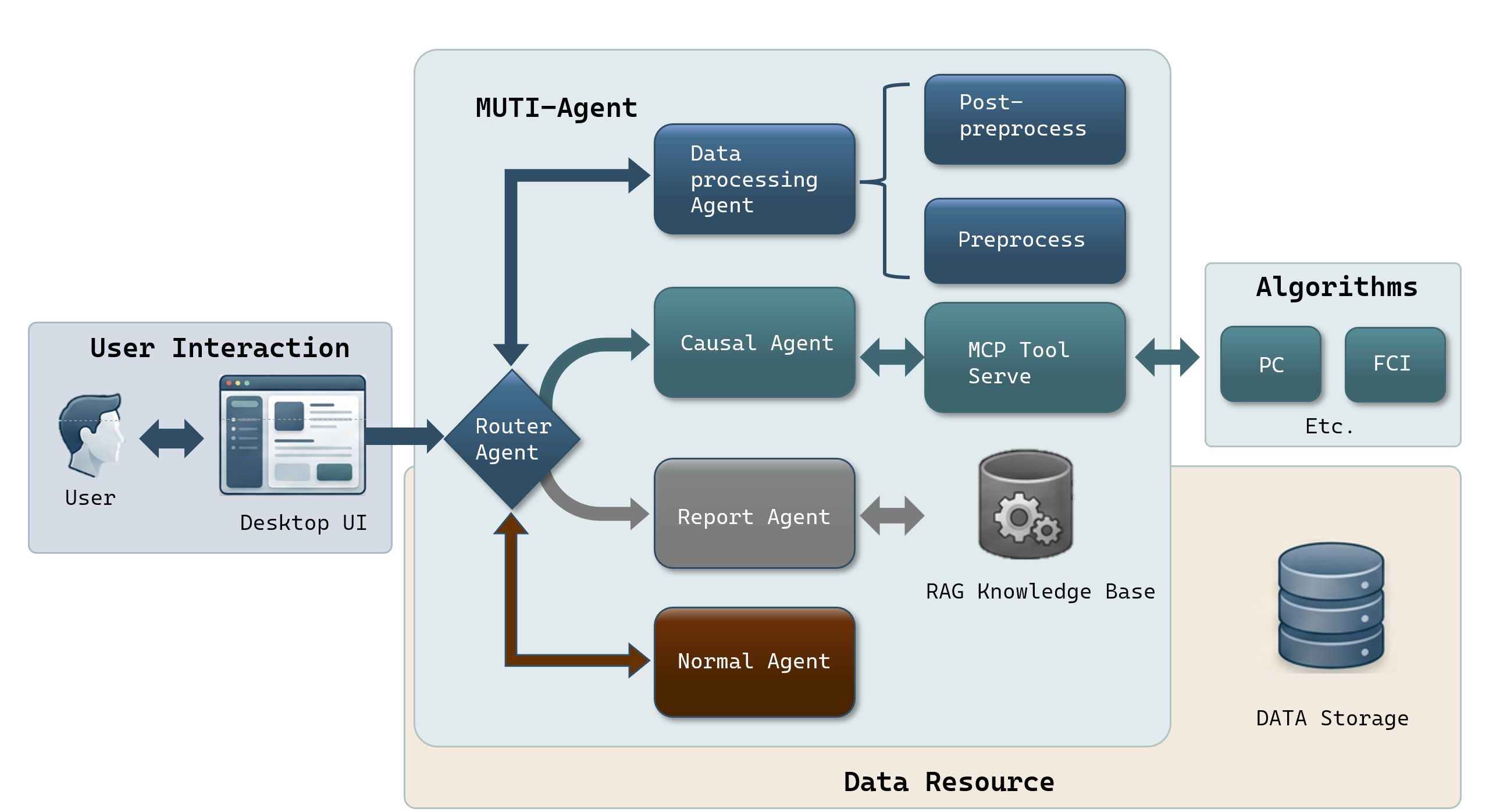

Researchers can now leverage a new multi-agent system that uses natural language to simplify and accelerate the complex process of causal inference.

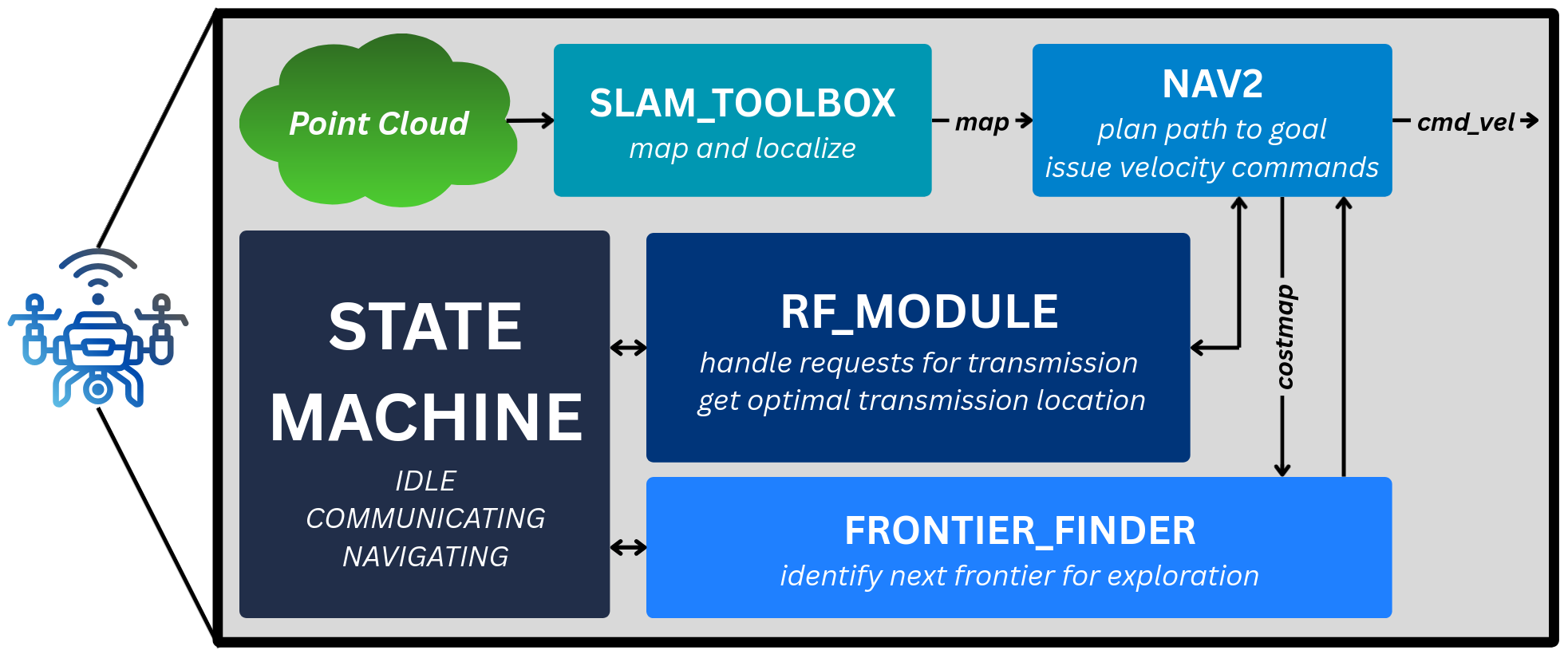

This review explores how the next generation of wireless technology will unlock a new era of capabilities for robotics, enabling safer, more intelligent, and collaborative machines.

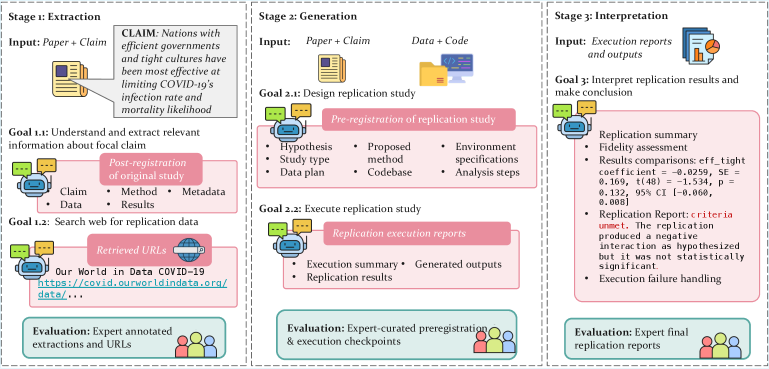

A new benchmark assesses whether large language model agents can reliably reproduce findings in the social and behavioral sciences, revealing critical hurdles beyond just code execution.