Robots That Work Together: The Rise of Multi-Agent Systems

![A multi-agent planning system dissects user instructions and visual scenes to orchestrate robotic action through a collaborative architecture comprising activation, planning, and monitoring agents, each refined via supervised fine-tuning on datasets [latex]L_1[/latex] and [latex]L_2[/latex] derived from the VIKI benchmark.](https://arxiv.org/html/2601.18733v1/Figure/MAS_Plan.png)

A new benchmark challenge is pushing the boundaries of collaborative robotics, demanding increasingly sophisticated coordination and adaptability from teams of diverse machines.

Artificial intelligence is rapidly reshaping innovation, forcing a critical re-evaluation of intellectual property frameworks worldwide.

Researchers have developed a new framework that allows humanoid robots to seamlessly combine movement and manipulation, even on challenging and unpredictable terrain.

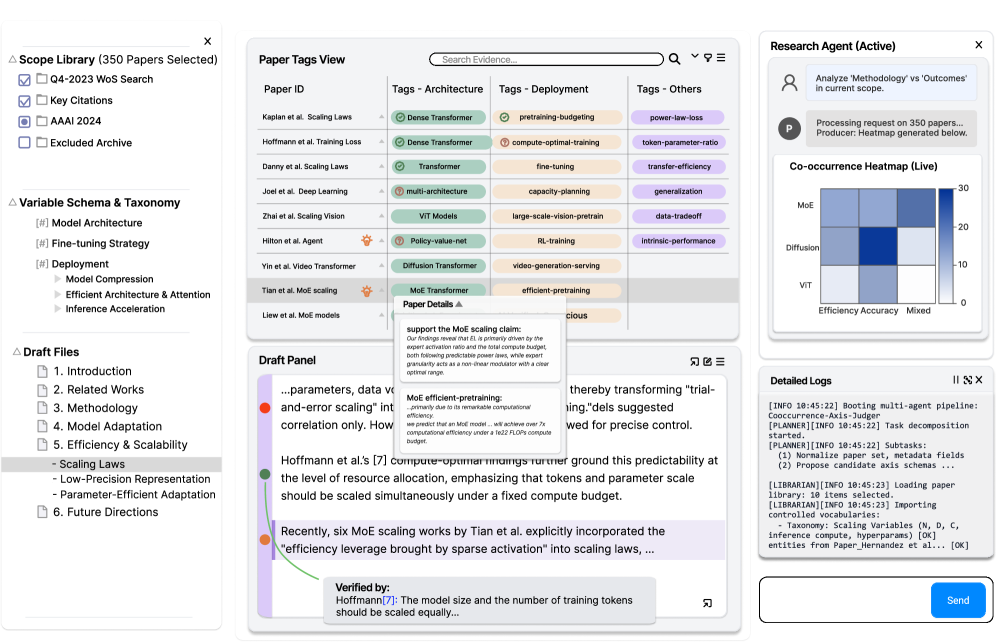

New research introduces a system that uses the latest reinforcement learning techniques to train artificial intelligence not just to find scientific papers but to understand them and answer complex questions about them.

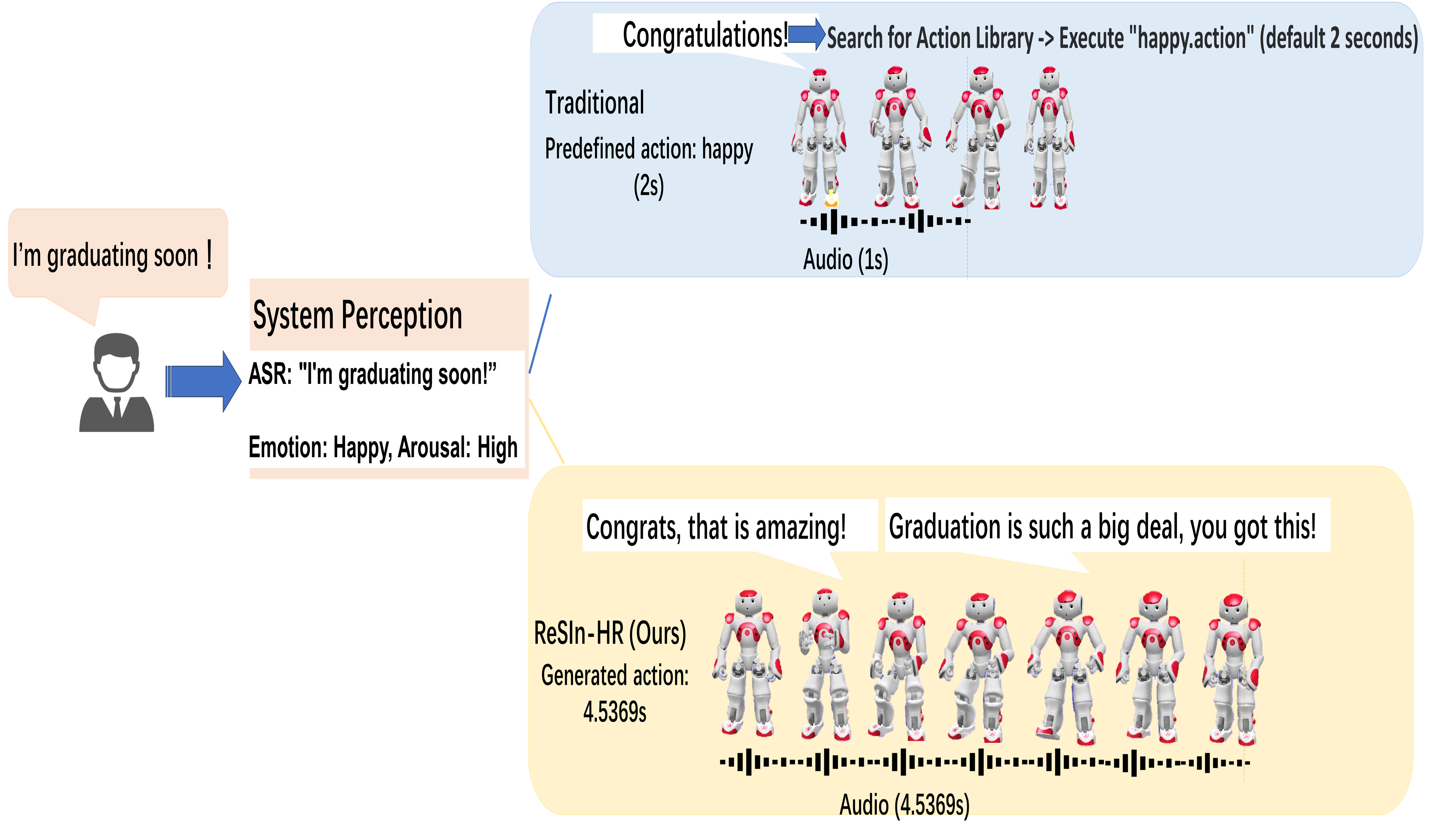

Researchers have developed a new framework enabling humanoid robots to dynamically generate gestures that align with spoken emotion, creating more natural and engaging interactions.

Researchers are using AI not to produce research but to rigorously verify existing claims and uncover hidden assumptions in systematic reviews.

This review explores how artificial intelligence is enabling self-driving laboratories to accelerate research in the complex world of soft materials.

Artificial intelligence is rapidly changing how businesses identify potential trademark conflicts, offering both opportunities and challenges for a historically manual process.

A new framework categorizes how humans and robots can dynamically collaborate on construction projects, paving the way for more flexible and efficient building processes.

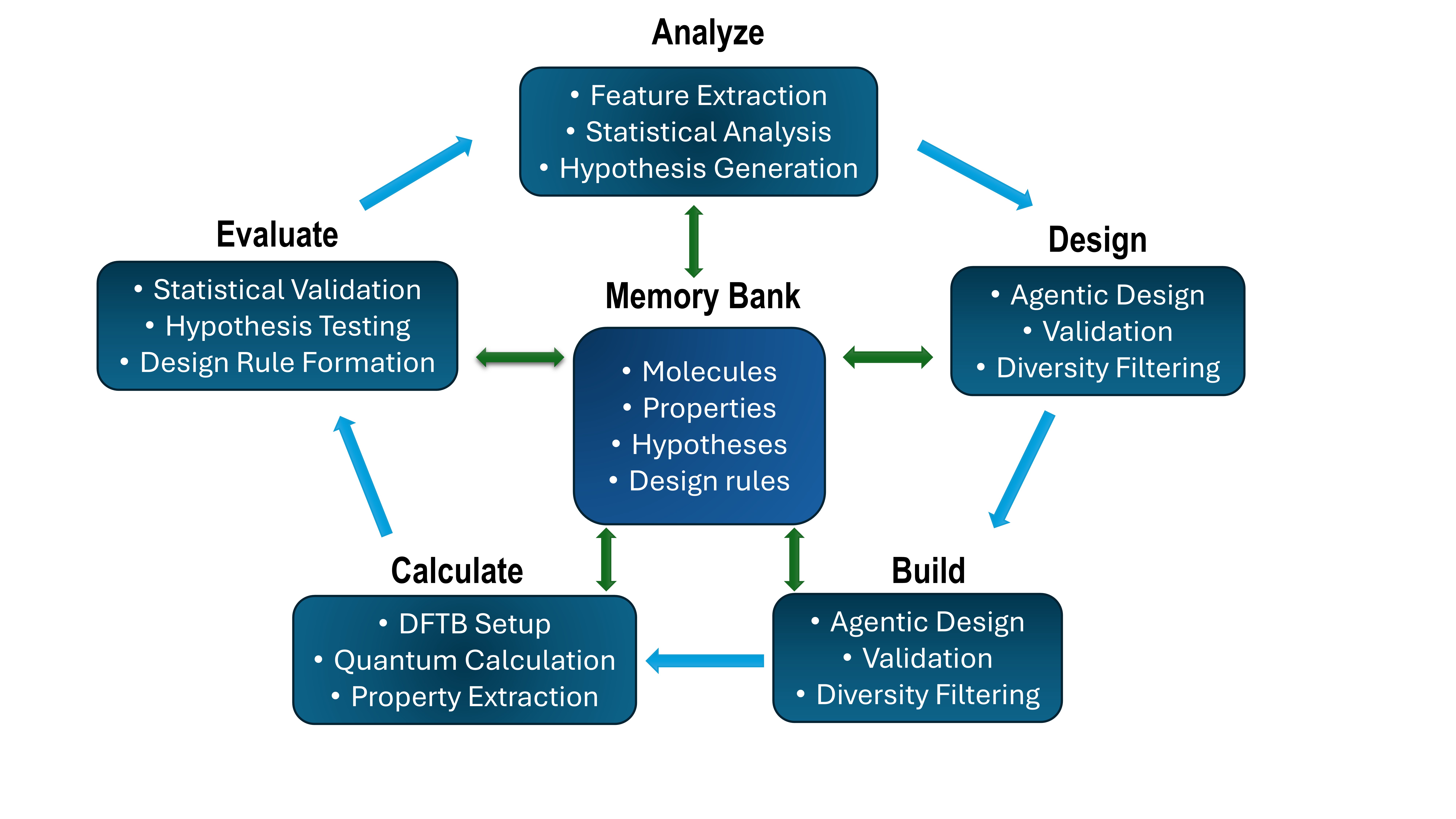

Researchers have created an AI system capable of independently discovering the principles governing the design of organic photocatalysts for efficient hydrogen production.