Author: Denis Avetisyan

As AI agents increasingly collaborate, researchers are shifting focus from simply observing emergent behavior to establishing a science for understanding and maximizing the benefits of teamwork.

This review proposes a framework for evaluating multi-agent systems by quantifying ‘collaboration gain’ and attributing performance to specific design factors.

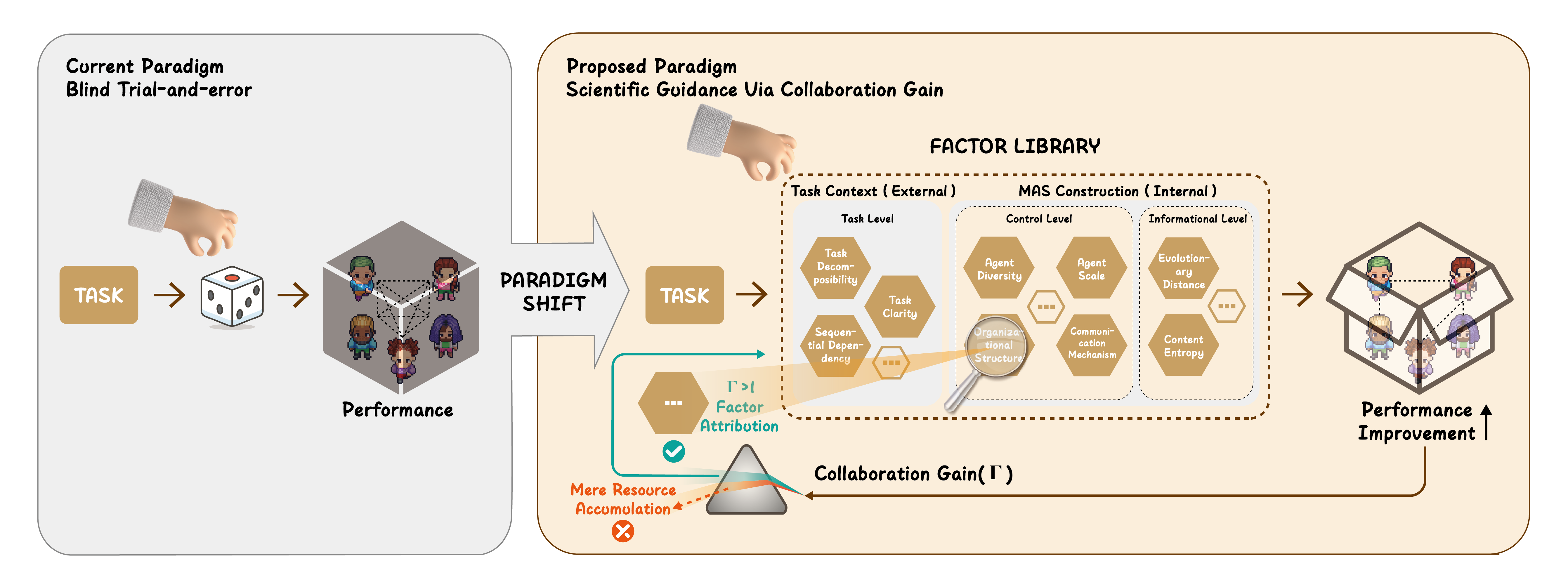

Despite recent advances in LLM-powered multi-agent systems demonstrating impressive capabilities across complex domains, progress remains hampered by a reliance on empirical trial-and-error rather than principled scientific investigation, a challenge addressed in ‘Towards a Science of Collective AI: LLM-based Multi-Agent Systems Need a Transition from Blind Trial-and-Error to Rigorous Science’. This work advocates a shift towards a design science, introducing a framework centered on quantifying ‘collaboration gain’ Γ, which isolates performance improvements directly attributable to agent interaction, and on systematically attributing that gain to specific design factors. By constructing a comprehensive factor library and establishing Γ as a standard metric, can we finally move beyond ad-hoc experimentation and establish a truly rigorous science of collective AI?

The Perils of Empiricism in Multi-Agent System Design

The creation of effective Multi-Agent Systems (MAS) is frequently hampered by a reliance on what is essentially blind trial-and-error. Developers often iterate through countless configurations of agent behaviors and interaction rules, hoping to stumble upon a solution that yields desired collective outcomes. This process isn’t simply time-consuming; it demands substantial computational resources for simulation and evaluation. Each iteration represents an experimental attempt, lacking the guiding principles of a systematic methodology. Consequently, progress can be incremental and unpredictable, with little assurance that improvements stem from genuine synergistic behavior rather than chance occurrences or exhaustive searching of the parameter space. The absence of a more principled approach dramatically increases the cost and complexity of MAS development, particularly as systems grow in scale and sophistication.

The promise of Multi-Agent Systems often hinges on achieving [latex]\Gamma > 1[/latex], a state where collective intelligence generates outcomes exceeding the sum of individual contributions. However, current development practices frequently stumble due to an incomplete grasp of the factors influencing this synergistic effect. While agents may appear to collaborate, the observed benefits could simply stem from the aggregation of resources – a scenario lacking true collective problem-solving. A systematic understanding of how agent diversity, communication protocols, environmental dynamics, and individual learning strategies interact is crucial. Without pinpointing these contributing elements, developers struggle to intentionally cultivate genuine collective intelligence, instead relying on chance encounters and inefficient experimentation, ultimately limiting the potential of MAS to address complex challenges.

Distinguishing true collaborative synergy from simple resource aggregation presents a significant challenge in multi-agent system development. Often, observed performance improvements are merely the result of combining individual agent capabilities – a situation where the whole is equal to the sum of its parts. Genuine collaboration, by contrast, is indicated by a collaboration gain [latex]\Gamma > 1[/latex], a synergistic effect in which the combined performance exceeds individual contributions. Without a defined methodology to isolate and measure this gain, it becomes exceedingly difficult to determine whether observed improvements stem from actual teamwork or simply from the accumulation of resources. The source of increased efficacy is then easily misattributed, which hinders the development of truly intelligent and effective multi-agent systems.

![The collaboration gain Γ quantifies whether multi-agent system (MAS) performance exceeds the sum of single-agent system (SAS) performances under equivalent computational budgets, with [latex]\Gamma > 1[/latex] indicating true collaborative benefit beyond simple resource accumulation.](https://arxiv.org/html/2602.05289v1/section3.jpg)

Scientific Guidance: A Framework for Systemic Improvement

Scientific Guidance utilizes Factor Attribution to systematically deconstruct Multi-Agent System (MAS) performance into quantifiable contributions from individual factors. This methodology moves beyond holistic performance evaluation by identifying which variables exert the most significant influence on key performance indicators. Factor Attribution involves statistically isolating the impact of each factor – encompassing agent characteristics, environmental parameters, and interaction protocols – through techniques such as sensitivity analysis and variance decomposition. The resulting data allows developers to prioritize optimization efforts, focusing on factors with the highest leverage over system behavior and enabling targeted interventions to improve overall MAS performance. As a simplified, first-order (linear) approximation, the effect of changes in factors [latex] x_i [/latex] on the system output [latex] Y [/latex] can be written as [latex] \Delta Y = \sum_{i=1}^{n} \frac{\partial Y}{\partial x_i} \Delta x_i [/latex]; this form ignores interactions between factors.
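
To make the linear approximation concrete, here is a minimal Python sketch that estimates each factor's first-order contribution with central finite differences. The function `first_order_attribution`, the factor names `num_agents` and `msg_rounds`, and the response surface `toy_score` are all hypothetical illustrations, not taken from the paper.

```python
from typing import Callable, Dict

Factors = Dict[str, float]


def first_order_attribution(
    f: Callable[[Factors], float],
    baseline: Factors,
    changed: Factors,
    eps: float = 1e-6,
) -> Factors:
    """Approximate each factor's contribution as (dY/dx_i) * delta_x_i,
    with the partial derivative taken at the baseline configuration."""
    contributions: Factors = {}
    for name, x0 in baseline.items():
        up = dict(baseline)
        up[name] = x0 + eps
        down = dict(baseline)
        down[name] = x0 - eps
        slope = (f(up) - f(down)) / (2 * eps)  # central difference
        contributions[name] = slope * (changed[name] - x0)
    return contributions


def toy_score(x: Factors) -> float:
    # Hypothetical response surface with an interaction term (assumption).
    return (0.5 * x["num_agents"] + 2.0 * x["msg_rounds"]
            - 0.1 * x["num_agents"] * x["msg_rounds"])


baseline = {"num_agents": 2.0, "msg_rounds": 1.0}
changed = {"num_agents": 3.0, "msg_rounds": 2.0}

contrib = first_order_attribution(toy_score, baseline, changed)
actual_delta = toy_score(changed) - toy_score(baseline)
# What the linear model cannot explain comes from the interaction term.
residual = actual_delta - sum(contrib.values())
```

In this toy case the linear terms account for roughly 0.4 and 1.8 of the change, while the true change is about 2.1. The small negative residual is the interaction effect that a purely first-order attribution misses, which is one reason variance decomposition or similar techniques are used alongside sensitivity analysis.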

The Collaboration Gain Metric, denoted as Γ, provides a quantitative assessment of Multi-Agent System (MAS) performance relative to a single, equivalent agent. Γ is calculated as the ratio of MAS performance to the performance of a Single-Agent System performing the same task with equivalent resource allocation. A value of [latex]\Gamma > 1[/latex] indicates a demonstrable benefit from collaboration, exceeding what could be achieved through simple resource scaling. This metric specifically isolates the gains attributable to agent interaction and coordination, preventing misattribution of performance improvements solely to increased computational resources. The metric allows for objective comparison and validation of MAS designs by distinguishing genuine collaborative advantages from improvements derived solely from parallelization or increased capacity.
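
The ratio described above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the paper's implementation: the `RunResult` type, the use of token counts as the resource budget, and the 5% budget tolerance are all hypothetical choices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunResult:
    score: float       # task performance, higher is better (assumed)
    tokens_used: int   # proxy for the computational budget (assumed)


def collaboration_gain(
    mas: RunResult, sas: RunResult, budget_tolerance: float = 0.05
) -> float:
    """Return Gamma = MAS score / SAS score, refusing to compare runs
    whose budgets differ by more than the given relative tolerance."""
    if sas.score <= 0:
        raise ValueError("single-agent score must be positive")
    if abs(mas.tokens_used - sas.tokens_used) > budget_tolerance * sas.tokens_used:
        raise ValueError("budgets are not comparable; Gamma would be misleading")
    return mas.score / sas.score


mas_run = RunResult(score=0.72, tokens_used=10_000)
sas_run = RunResult(score=0.60, tokens_used=10_000)
gamma = collaboration_gain(mas_run, sas_run)  # about 1.2, so Gamma > 1
```

The budget check is the important design choice here: without it, a multi-agent run that simply spends more tokens could report a gain that reflects resource scaling rather than collaboration.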

The Factor Library is a systematically organized collection of variables identified as having a quantifiable impact on Multi-Agent System (MAS) performance. This repository includes both intrinsic factors – characteristics of individual agents such as learning rate or communication bandwidth – and extrinsic factors relating to the environment or inter-agent relationships, such as resource distribution or network topology. Each factor is associated with metadata detailing its data type, measurement units, and potential range of values. The Factor Library facilitates targeted optimization by allowing developers to isolate and manipulate specific variables, enabling a data-driven approach to MAS improvement and reducing the complexity of system-wide tuning. It supports both experimental analysis and model-based prediction of MAS behavior under varying conditions.
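
One way to picture such a repository is as a small typed registry. The sketch below is a hypothetical data structure, not the paper's schema: the `Factor` fields mirror the metadata mentioned above (data type, units, value range), and the example factors and their ranges are invented for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Factor:
    name: str
    kind: str                        # "intrinsic" or "extrinsic"
    dtype: type                      # expected Python type of the value
    unit: str                        # measurement unit
    value_range: Tuple[float, float]

    def validate(self, value) -> bool:
        """Check that a value has the declared type and lies in range."""
        lo, hi = self.value_range
        return isinstance(value, self.dtype) and lo <= value <= hi


class FactorLibrary:
    def __init__(self) -> None:
        self._factors: Dict[str, Factor] = {}

    def register(self, factor: Factor) -> None:
        if factor.name in self._factors:
            raise ValueError(f"factor already registered: {factor.name}")
        self._factors[factor.name] = factor

    def get(self, name: str) -> Factor:
        return self._factors[name]

    def by_kind(self, kind: str) -> List[str]:
        return sorted(f.name for f in self._factors.values() if f.kind == kind)


library = FactorLibrary()
# Example entries; names, units, and ranges are illustrative assumptions.
library.register(Factor("learning_rate", "intrinsic", float, "dimensionless", (0.0, 1.0)))
library.register(Factor("communication_bandwidth", "intrinsic", int, "messages/round", (0, 1000)))
library.register(Factor("network_degree", "extrinsic", int, "edges/agent", (0, 64)))
```

Keeping the metadata next to each factor lets an experiment harness reject out-of-range configurations before any costly run is launched.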

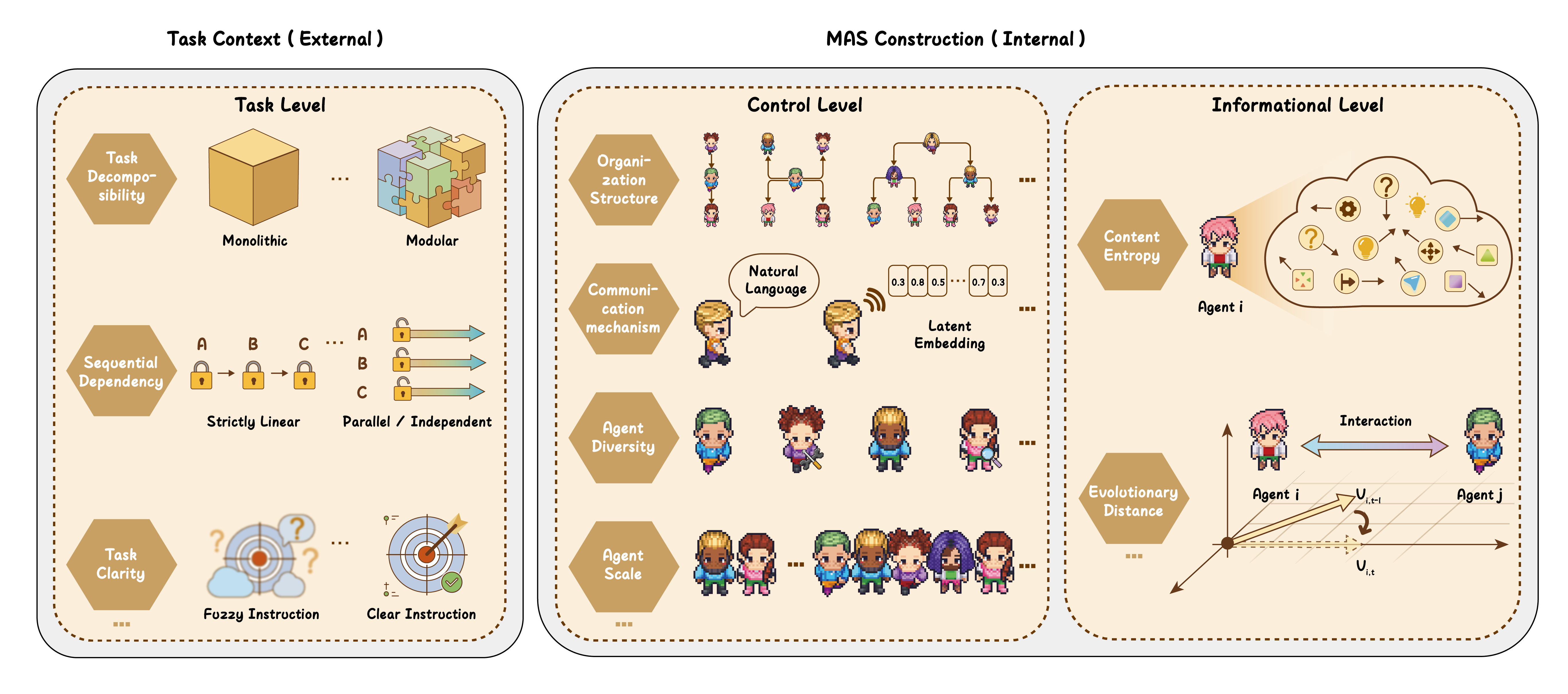

Deconstructing Performance: Control and Information Dynamics

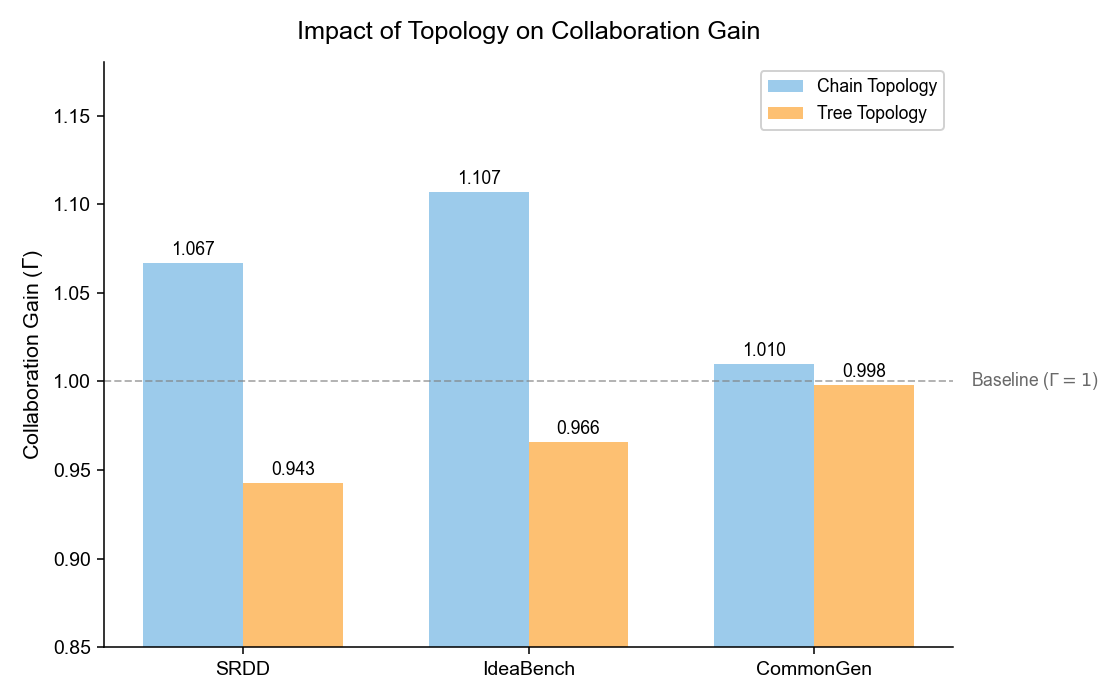

The Factor Library utilizes ‘Control Level Factors’ to characterize static elements influencing multi-agent system (MAS) performance. These factors represent pre-execution design choices that establish the system’s foundational architecture. Specifically, ‘Organizational Structure’ defines the agent relationships and hierarchical arrangements within the MAS, while ‘Communication Mechanisms’ detail the protocols and channels used for inter-agent messaging. These elements are considered ‘control’ factors as they are directly configurable by the system designer and remain relatively constant throughout execution, providing a baseline for anticipated performance characteristics. Analysis of these factors allows for the identification of architectural bottlenecks and potential improvements before deployment.

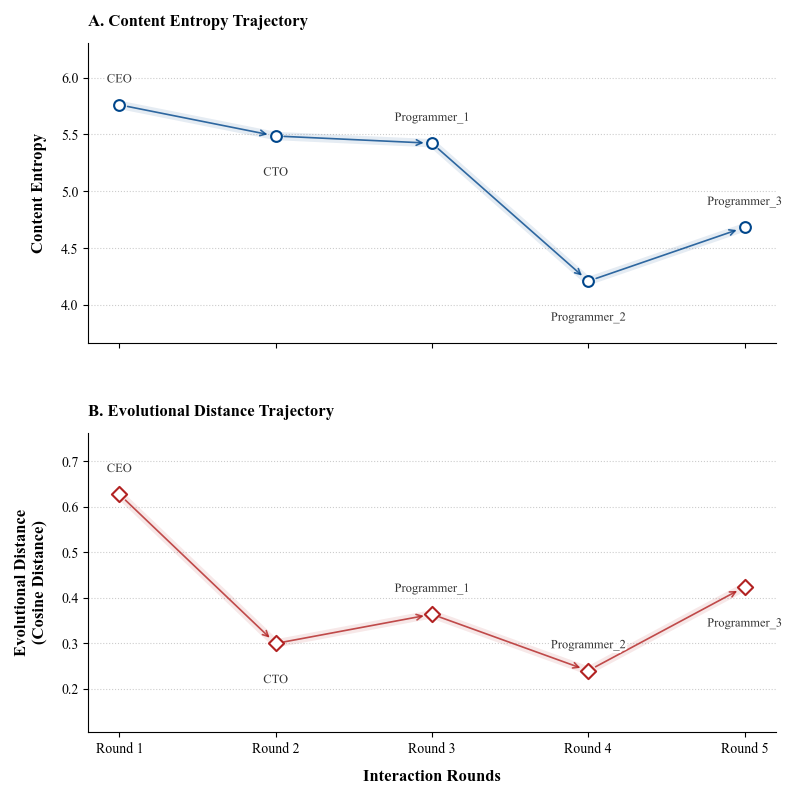

Information Level Factors within Multi-Agent Systems (MAS) assess performance based on the characteristics of information exchanged during system execution. Specifically, ‘Content Entropy’ quantifies the unpredictability or randomness of the information content itself; higher entropy indicates greater diversity and potentially increased exploration, while lower entropy suggests more focused, predictable communication. ‘Evolutionary Distance’ measures the degree of divergence between agent strategies or behaviors over time; a larger distance signifies greater adaptation and potentially novel solutions, but also the risk of instability or conflict. These factors are not static architectural components, but rather dynamic metrics reflecting the ongoing interactions and adaptations of agents as they operate within the system.
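
This article does not give the paper's exact definition of Content Entropy. As a rough illustration, the sketch below assumes it can be approximated by the Shannon entropy of the token distribution across exchanged messages, using naive whitespace tokenization. Both the function name `content_entropy` and that tokenization are assumptions.

```python
import math
from collections import Counter
from typing import Iterable


def content_entropy(messages: Iterable[str]) -> float:
    """Shannon entropy (in bits) of the whitespace-token distribution
    over all messages; 0.0 for empty input."""
    tokens = [tok for msg in messages for tok in msg.lower().split()]
    if not tokens:
        return 0.0
    counts = Counter(tokens)
    total = len(tokens)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


repetitive = ["ok ok", "ok ok"]          # one token type only
diverse = ["plan search", "code review"]  # four equally likely tokens

low = content_entropy(repetitive)   # no uncertainty about the next token
high = content_entropy(diverse)     # uniform over 4 tokens, i.e. log2(4) bits
```

Under this assumed definition, agents that echo one another produce low entropy, while agents that contribute distinct content produce higher entropy.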

Maximizing performance in Multi-Agent Systems (MAS) necessitates analyzing the interaction between static architectural configurations and the dynamic behavior of agents during runtime. ‘Control Level Factors’, representing pre-defined organizational structures and communication protocols, establish a foundational operating environment. However, the efficacy of these presets is directly influenced by ‘Information Level Factors’ – characteristics of the information exchanged, such as its unpredictability (‘Content Entropy’) and the degree to which agent strategies diverge over time (‘Evolutionary Distance’). A disconnect between the architectural baseline and the information landscape can lead to inefficiencies, while alignment facilitates optimized agent coordination and system-level performance. Therefore, effective MAS design and optimization require a holistic assessment of this interplay, rather than focusing solely on either architectural presets or dynamic execution in isolation.

Task Characteristics and Their Influence on System Efficiency

The efficiency of multi-agent systems is profoundly shaped by the degree to which a given task can be broken down into independent components. When a task exhibits high decomposability, individual agents can address distinct subtasks concurrently, maximizing parallel processing and overall system throughput. This contrasts sharply with tasks requiring sequential completion, where the performance of one agent directly bottlenecks the progress of others. Research indicates that systems designed to exploit task decomposability demonstrate significantly reduced completion times and improved resource utilization, as agents operate with greater autonomy and reduced interdependency. Conversely, poorly decomposed tasks force agents into competition for shared resources or necessitate complex coordination mechanisms, diminishing the benefits of a distributed approach and potentially negating any performance gains from the multi-agent architecture.

The degree to which tasks must be completed in a specific sequence profoundly affects the efficiency of multi-agent systems. When substantial sequential dependency exists – meaning one task must precede another – opportunities for parallel processing diminish, creating bottlenecks and limiting overall system throughput. This constraint forces agents to operate more serially, reducing the benefits of distributed problem-solving. Conversely, tasks with minimal sequential dependency allow agents to work concurrently, leveraging the power of collaboration and significantly accelerating completion times. Consequently, the architecture of a task and the degree to which it can be broken down into independent, concurrently executable components is a critical design consideration for maximizing the potential of any multi-agent system.
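
A standard way to reason about decomposability and sequential dependency, used here as an illustration and not drawn from the paper, is to model subtasks as a dependency graph and compare total work with the length of the longest dependency chain (the critical path). The subtask names and durations below are hypothetical.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Dict, List, Tuple


def work_and_span(
    durations: Dict[str, float], deps: Dict[str, List[str]]
) -> Tuple[float, float]:
    """Return (total work, critical-path length) for a task graph.
    `deps` maps each task to the tasks that must finish before it starts."""
    graph = {task: deps.get(task, []) for task in durations}
    finish: Dict[str, float] = {}
    for task in TopologicalSorter(graph).static_order():
        start = max((finish[d] for d in graph[task]), default=0.0)
        finish[task] = start + durations[task]
    return sum(durations.values()), max(finish.values(), default=0.0)


# Hypothetical subtasks: searching and coding can run in parallel after planning.
durations = {"plan": 1.0, "search": 3.0, "code": 4.0, "review": 2.0}
deps = {"search": ["plan"], "code": ["plan"], "review": ["search", "code"]}

work, span = work_and_span(durations, deps)
max_speedup = work / span  # upper bound with unlimited agents, ignoring coordination cost
```

Here the work is 10 time units and the critical path is 7, so parallel agents cannot speed the task up by more than a factor of 10/7. A fully sequential chain has a span equal to its work, leaving no room for parallel gains.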

Task clarity stands as a cornerstone of successful multi-agent systems, directly influencing an agent’s ability to contribute meaningfully to a collective goal. When goals and requirements are ambiguously defined, agents expend valuable resources interpreting directives rather than executing them, leading to inefficiencies and potential conflicts. A well-defined task provides each agent with a clear understanding of its role, expected outcomes, and the criteria for success, thereby minimizing miscommunication and redundant effort. This precise articulation of objectives facilitates streamlined coordination, enabling agents to effectively share information, allocate resources, and synchronize their actions. Consequently, systems characterized by high task clarity demonstrate superior performance, increased robustness, and a greater capacity to adapt to dynamic environments – ultimately achieving optimal results through focused, collaborative effort.

The Future of Collective Intelligence: LLMs and Beyond

The advent of Large Language Models (LLMs) represents a significant leap forward in the development of truly autonomous agents within Multi-Agent Systems (MAS). These models, trained on massive datasets, possess an unprecedented ability to understand and generate human-like text, enabling agents to not only perceive their environment through language but also to formulate plans and execute tasks with a degree of sophistication previously unattainable. Unlike traditional rule-based systems, LLMs allow for flexible, context-aware decision-making, empowering agents to adapt to dynamic situations and collaborate effectively with both other agents and human users. This capability moves beyond simple reactivity towards proactive problem-solving, where agents can decompose complex goals into manageable steps, reason about potential outcomes, and adjust their strategies accordingly – fundamentally reshaping the potential of MAS across diverse applications.

The integration of Large Language Models represents a significant leap towards creating truly adaptable agents within Multi-Agent Systems. These models move beyond pre-programmed responses, enabling agents to analyze complex situations and dynamically adjust their strategies based on evolving circumstances. This isn’t simply about reacting to stimuli; agents powered by LLMs can anticipate potential challenges, coordinate actions with peers to achieve shared objectives, and even learn from past experiences to refine their decision-making processes. The capacity for nuanced understanding and flexible behavior allows these agents to navigate unpredictable environments and collaborate effectively, exceeding the limitations of traditionally scripted autonomous entities and paving the way for more robust and efficient collective intelligence.

The convergence of Large Language Models and the ‘Scientific Guidance’ framework represents a substantial leap forward for Multi-Agent Systems. This integrated approach doesn’t merely enhance existing capabilities, but unlocks previously unattainable levels of performance and efficiency, even when scaled to systems encompassing millions of agents. Demonstrated results reveal significant reductions in computational cost – specifically, token usage – ranging from 28.1% to 72.8%. This substantial decrease suggests a pathway toward more sustainable and scalable intelligent systems, allowing for increasingly complex problem-solving and coordination without prohibitive resource demands. The framework effectively guides the LLM-powered agents, optimizing their communication and task execution, thereby maximizing collective intelligence and minimizing redundant processes.

The pursuit of collective intelligence through Large Language Model-based Multi-Agent Systems demands a departure from haphazard experimentation. This work champions a rigorous, scientific approach, one that meticulously dissects performance gains arising from agent interaction. It echoes the maxim commonly attributed to Aristotle: “The whole is more than the sum of its parts.” Indeed, simply assembling agents isn’t enough; the true value lies in understanding how their collaboration generates emergent behavior and quantifying the ‘collaboration gain’ – a crucial step towards building robust and predictable systems. A focus on factor attribution, as outlined in the paper, ensures that design choices are not merely intuitive, but demonstrably impactful on the collective’s overall efficacy.

Beyond Trial and Error

The pursuit of collective intelligence via Large Language Model-based multi-agent systems has, until now, largely resembled a sophisticated form of trial and error. This work suggests a necessary, if belated, shift towards rigorous scientific construction. The emphasis on ‘collaboration gain’ – isolating the benefit of interaction, rather than simply observing emergent behavior – is crucial. However, true scalability does not reside in increased computational power, but in clarity of design. The field must move beyond simply demonstrating emergent abilities and begin to systematically dissect the factors that enable them.

A key limitation remains the inherent complexity of these systems. Attributing performance is a start, but a complete understanding requires a holistic view. Each agent, each interaction, is a component of a larger ecosystem, and modifying one element inevitably ripples through the whole. Future work must prioritize developing methods to model these systemic effects, acknowledging that optimization of individual components does not guarantee optimization of the collective.

The challenge, ultimately, is not to build more complex agents, but to understand the fundamental principles that govern their interaction. Only then can one hope to construct truly scalable and robust collective intelligence – systems where elegant simplicity, not brute force, dictates performance.

Original article: https://arxiv.org/pdf/2602.05289.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-06 20:10