Author: Denis Avetisyan

This review examines how generative AI is changing legal fact verification, focusing on the crucial balance between automation and maintaining professional expertise.

Research explores the integration of generative AI into legal workflows, emphasizing accountability, transparency, and effective human-computer interaction.

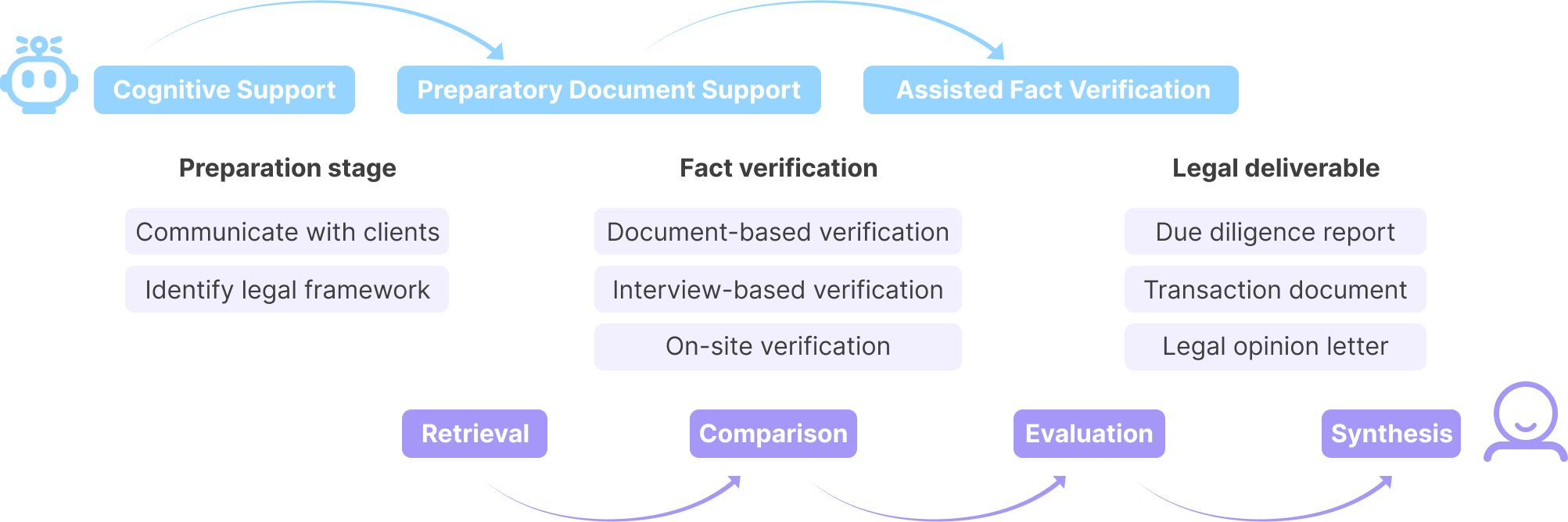

While legal practice increasingly relies on automation, fact verification, a critical task, remains largely unexamined in the age of generative AI. The research paper “Reimagining Legal Fact Verification with GenAI: Toward Effective Human-AI Collaboration” investigates how legal professionals perceive and anticipate integrating GenAI into their workflows, revealing cautious optimism tempered by concerns about accuracy, liability, and transparency. Our findings, based on interviews with 18 lawyers, highlight the need for auditable AI systems that prioritize professional judgment and accountability rather than simply maximizing efficiency. This raises a central design question: how can human-AI collaboration balance the potential of GenAI with the ethical and legal responsibilities inherent in legal fact verification?

The Evolving Landscape of Legal Truth

The modern legal landscape is characterized by an explosion of data, as professionals increasingly turn to digital records, social media, and expansive databases to build cases and support arguments. This reliance, however, introduces significant challenges to fact verification; the sheer volume of information makes comprehensive review impractical, and the potential for misinformation or biased data is substantial. Establishing the veracity of evidence is no longer simply a matter of meticulous document examination, but rather a complex process of data authentication, source evaluation, and pattern recognition. Consequently, legal teams must navigate not only the content of information, but also its origin, context, and potential for manipulation, demanding new tools and strategies to ensure factual accuracy and maintain the integrity of legal proceedings.

The foundations of legal proceedings have long rested on meticulous document review and due diligence, yet these long-trusted methods are increasingly strained by the volume and complexity of modern information. A comprehensive review that was once feasible now poses a significant logistical hurdle, demanding extensive attorney and paralegal time. More critically, this manual process is inherently susceptible to human error: crucial details can be overlooked, patterns missed, and biases unconsciously applied. Even minor inaccuracies can significantly affect case outcomes, leading to unfavorable rulings, protracted litigation, and an erosion of trust in the legal system. These limitations are prompting a critical reevaluation of fact verification practices within the legal profession.

Contemporary legal disputes increasingly hinge on intricate factual scenarios, often involving massive datasets and nuanced interpretations of evidence. Standard document review and due diligence are insufficient when arguments are built on layers of data, technical analyses, or specialized knowledge. A more rigorous and efficient approach, one that combines advanced analytical tools with systematic verification processes, is now critical for establishing solid factual foundations. Such an approach strengthens legal arguments and reduces the risk of errors stemming from human oversight, supporting more just and accurate outcomes in an increasingly data-driven legal landscape.

The Bedrock of Legal Reasoning: Fact and Verification

Legal reasoning depends on accurate factual foundations, which makes effective fact verification a critical component of legal practice. The strength of any legal argument, whether presented in pleadings, motions, or at trial, depends directly on the reliability of the underlying facts. Establishing those facts requires systematic investigation and corroboration using primary and secondary sources. Failure to verify factual claims adequately can lead to erroneous conclusions, flawed legal strategies, and adverse outcomes for clients. Legal professionals must therefore treat robust fact verification as an integral part of their analytical and representational duties.

Within the fact verification workflow, information verification is the systematic evaluation of data sources to establish their authenticity, accuracy, and relevance. This process includes cross-referencing information against multiple independent sources, assessing the credibility of those sources (considering author expertise, publication bias, and potential conflicts of interest), and corroborating evidence with primary documentation whenever possible. Techniques range from simple source comparison to advanced methods such as reverse image searching, metadata analysis, and forensic document examination. Effective information verification minimizes the risk of basing legal arguments on inaccurate or fabricated data, strengthening the factual foundations on which legal decisions rest.
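To make the cross-referencing step more concrete, here is a minimal Python sketch. The `SourceReport` record and the `corroborate` helper are hypothetical illustrations, not part of the paper or any legal tool. Reports that share an `origin` are counted once, so syndicated copies of a single story do not inflate corroboration, and disagreeing values are flagged. As an assumption of this sketch only, a claim counts as corroborated when at least two independent origins agree and at least one report is primary documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceReport:
    """One source's statement about a single fact (hypothetical structure)."""
    source_id: str    # identifier of the document or page
    origin: str       # who ultimately produced the information
    is_primary: bool  # True for primary documentation (records, filings)
    value: str        # the value this source reports for the fact


def corroborate(reports, min_independent=2):
    """Return (status, origins) for one factual claim.

    status is one of "unverified", "conflicting", "needs_review", "corroborated".
    Sources sharing an origin are collapsed into one independent origin.
    """
    origins_by_value = {}
    for r in reports:
        origins_by_value.setdefault(r.value, set()).add(r.origin)

    if not origins_by_value:
        return "unverified", set()
    if len(origins_by_value) > 1:
        # Sources disagree: a human must resolve the conflict.
        return "conflicting", set()

    origins = next(iter(origins_by_value.values()))
    has_primary = any(r.is_primary for r in reports)
    if len(origins) >= min_independent and has_primary:
        return "corroborated", origins
    return "needs_review", origins


# Example: two news items from the same wire service plus a county record.
reports = [
    SourceReport("deed-2019", "county-recorder", True, "2019-03-14"),
    SourceReport("news-a", "wire-service", False, "2019-03-14"),
    SourceReport("news-b", "wire-service", False, "2019-03-14"),
]
status, origins = corroborate(reports)
print(status, sorted(origins))
```

Without the county record, the two news items collapse into a single origin and the claim drops to `needs_review`. This is the kind of independence check that distinguishes real corroboration from repetition.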

The strength of any legal argument rests on the accuracy and completeness of its underlying facts; a flawed factual basis compromises the validity of any legal reasoning applied to it. Thorough factual investigation is therefore not merely a preliminary step but a critical determinant of both a case's persuasiveness and the accountability of the legal professionals involved. Errors or omissions in factual development can lead to incorrect legal conclusions, unjust outcomes, and potential malpractice claims. The thoroughness of factual investigation thus bears directly on the reliability of legal proceedings and the responsible practice of law.

The Algorithmic Paradox: AI and Legal Accountability

The increasing integration of AI-generated content into legal workflows – including document drafting, legal research, and predictive analysis – introduces substantial accountability challenges. AI models, trained on potentially biased datasets, can perpetuate and amplify existing societal biases, leading to inaccurate or unfair legal outcomes. Establishing responsibility for errors within AI-generated content is complex; current legal frameworks struggle to assign liability to developers, users, or the AI itself. The probabilistic nature of many AI systems means outputs are not always deterministic or reliably attributable to specific inputs, further obscuring the causal chain necessary for legal accountability. Verification of AI-generated legal content requires significant human effort to identify and correct inaccuracies, potentially negating efficiency gains and raising concerns about the overall reliability of these systems in high-stakes legal contexts.

AI model opacity, often referred to as the “black box” problem, poses a fundamental challenge to legal accountability due to the requirement for epistemic transparency in judicial and administrative processes. Legal proceedings necessitate a clear understanding of the reasoning behind any evidence or conclusion presented; however, many AI models, particularly complex neural networks, operate in ways that are difficult, if not impossible, for humans to fully decipher. This lack of interpretability hinders the ability to scrutinize the AI’s reasoning, assess potential biases in its training data, or verify the accuracy of its conclusions, directly conflicting with the legal principle that judgments must be based on understandable and verifiable evidence. Consequently, reliance on opaque AI systems can undermine due process and the ability to effectively challenge evidence derived from those systems.

Current development trajectories for AI systems applied to legal contexts often prioritize metrics such as efficiency and predictive accuracy. However, the research discussed here indicates a need to re-evaluate these priorities and shift toward systems that demonstrably make their reasoning transparent. This includes designing AI that supports, rather than replaces, human legal expertise, allowing practitioners to review and validate AI-generated outputs. Robust human oversight is essential because it lets practitioners identify and correct inaccuracies or biases in algorithmic outputs, ensuring that AI is applied responsibly and accountably within the legal field.

The pursuit of integrating Generative AI into legal fact verification, as detailed in the research, echoes a fundamental truth about all complex systems: they are perpetually evolving through a series of adjustments. This mirrors Alan Turing’s observation: “There is no limit to what can be achieved if it is not forbidden.” The article highlights the necessity of ‘epistemic transparency’ in these AI systems – a system’s ability to demonstrate how it arrived at a conclusion. Like any aging structure, these systems require continuous assessment and refinement to ensure accountability and maintain professional expertise. The research posits that AI should support legal professionals, not replace them, and this collaborative approach acknowledges that errors are inevitable steps toward a more mature and reliable system. Ultimately, the goal isn’t to eliminate fallibility, but to build systems capable of learning from, and gracefully accommodating, the passage of time and the accumulation of experience.

What’s Next?

The integration of Generative AI into legal fact verification, as this work demonstrates, isn’t simply a matter of efficiency gains. It’s a temporal negotiation. Each automated step, each suggested connection, introduces a new layer of potential decay – a drift from the original intent of legal truth-seeking. The crucial question isn’t whether AI can verify facts, but how gracefully the system ages as it accumulates these automated assertions. Every bug in these systems isn’t a technical error; it’s a moment of truth in the timeline, revealing the inherent fragility of algorithmic certainty.

Future research must move beyond assessing performance metrics and focus on the archaeology of these systems. Understanding how AI’s ‘knowledge’ evolves – which precedents are favored, which are subtly discarded – is paramount. Technical debt in this context isn’t merely a development shortcut; it’s the past’s mortgage paid by the present, a slow erosion of epistemic integrity. The emphasis should shift toward systems that not only report findings but also meticulously document their reasoning – a complete lineage of assertions, allowing for retrospective analysis and correction.
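One way to picture such a lineage is as an append-only ledger of assertions. The Python sketch below is a hypothetical illustration and not the design proposed in the paper. Each `Assertion` records who made it (a model or a human reviewer), its rationale, what it was derived from, and which earlier assertion it corrects. `AssertionLedger`, the `"model:"`/`"human:"` actor prefixes, and all identifiers are invented for this example. Corrections are added as new entries instead of overwriting old ones, so a reviewer can find the current version of a claim and also trace everything it depends on.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Assertion:
    aid: str                          # unique id of this assertion
    text: str                         # the factual claim being made
    actor: str                        # hypothetical convention: "model:<name>" or "human:<name>"
    rationale: str                    # why the actor made this claim
    derived_from: tuple = ()          # ids of assertions or source documents relied on
    supersedes: Optional[str] = None  # id of an earlier assertion this one corrects


class AssertionLedger:
    """Append-only record: corrections are new entries, never edits."""

    def __init__(self):
        self._entries = {}
        self._superseded_by = {}

    def add(self, a: Assertion) -> None:
        if a.aid in self._entries:
            raise ValueError(f"duplicate assertion id: {a.aid}")
        if a.supersedes is not None:
            if a.supersedes not in self._entries:
                raise ValueError(f"cannot correct unknown assertion: {a.supersedes}")
            if a.supersedes in self._superseded_by:
                raise ValueError(f"assertion already corrected: {a.supersedes}")
            self._superseded_by[a.supersedes] = a.aid
        self._entries[a.aid] = a

    def current(self, aid: str) -> Assertion:
        """Follow the correction chain to the latest version of a claim."""
        while aid in self._superseded_by:
            aid = self._superseded_by[aid]
        return self._entries[aid]

    def lineage(self, aid: str) -> list:
        """Return ids of recorded assertions this claim depends on, depth-first.

        Ids not in the ledger (e.g. exhibit names) are treated as external
        source documents and are not included in the result.
        """
        seen, order, stack = set(), [], [aid]
        while stack:
            node = stack.pop()
            if node in seen or node not in self._entries:
                continue
            seen.add(node)
            order.append(node)
            entry = self._entries[node]
            stack.extend(entry.derived_from)
            if entry.supersedes:
                stack.append(entry.supersedes)
        return order


# Example: a human reviewer corrects a model's misread date.
ledger = AssertionLedger()
ledger.add(Assertion("a1", "Contract signed 2019-03-14", "model:draft",
                     "Extracted from exhibit 4", derived_from=("exhibit-4",)))
ledger.add(Assertion("a2", "Contract signed 2019-03-15", "human:reviewer",
                     "Exhibit 4 stamp reads 03-15; model misread it",
                     derived_from=("exhibit-4",), supersedes="a1"))
ledger.add(Assertion("a3", "Claim filed within limitation period", "model:draft",
                     "Relies on the corrected signing date", derived_from=("a2",)))
print(ledger.current("a1").text)
print(ledger.lineage("a3"))
```

Because the superseded entry `a1` remains in the ledger, a later audit can see both the model's original error and the human correction. That retrospective view is what the passage above calls for.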

Ultimately, the longevity of these systems depends on acknowledging their inherent impermanence. The goal isn't to build flawless AI, but to create tools that support a continuous process of verification, correction, and refinement: a kind of legal palimpsest, where layers of automated and human judgment coexist, each revealing and correcting the traces of what came before.

Original article: https://arxiv.org/pdf/2602.06305.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-09 15:44