Author: Denis Avetisyan

A new framework merges the power of large language models with structured knowledge to unlock creative design possibilities.

![Generative ontologies transcend descriptive vocabularies by establishing constraints that enable large language models to function as active grammars for design creation, ensuring validity through a formalized system, a principle akin to establishing that [latex] \forall x \in V : \text{ontology}(x) \implies \text{validity}(x) [/latex], where [latex]V[/latex] represents the vocabulary and validity is guaranteed by the ontological framework.](https://arxiv.org/html/2602.05636v1/figures/fig-defining-gen-ontology.png)

This review introduces Generative Ontology, a method for combining ontologies and language models to generate valid and novel designs, demonstrated in the context of tabletop game design.

While large language models excel at fluent generation, they often lack the structural validity required for complex design tasks, and traditional ontologies struggle with novelty. The paper "Generative Ontology: When Structured Knowledge Learns to Create" introduces a framework that synthesizes the strengths of both approaches by encoding domain knowledge as executable schemas that constrain LLM outputs. The authors demonstrate this through GameGrammar, a multi-agent pipeline capable of generating complete tabletop game designs from thematic prompts while ensuring coherence between mechanisms and components. Could this approach, grounded in structured knowledge yet fueled by creative generation, unlock similarly robust and imaginative outputs in domains beyond games, such as music composition or software architecture?

The Paradox of Fluency: Structural Integrity in Generative Models

While Large Language Models demonstrate remarkable proficiency in generating text that sounds natural and grammatically correct, a phenomenon termed ‘Structural Hallucination’ reveals a critical limitation. These models can produce outputs that, though fluent at the sentence level, lack overall coherence and logical consistency when viewed as a complete structure – be it a story, argument, or plan. Essentially, the model prioritizes local plausibility – making each sentence individually sensible – over global integrity, potentially leading to narratives that meander, arguments that contradict themselves, or instructions that fail to achieve a desired outcome. This disconnect between linguistic fluency and structural soundness presents a significant challenge in deploying LLMs for tasks demanding complex, multi-step reasoning or consistent world-building, and highlights the need for innovative approaches to enforce and evaluate global coherence in generated content.

The crafting of compelling game experiences has long been the domain of human designers, individuals who intuitively understand how players perceive challenges, navigate narratives, and react to emergent systems. This expertise isn’t simply about logical problem-solving; it involves a nuanced grasp of psychology, aesthetics, and the unpredictable nature of human engagement. Designers build cohesive worlds not through rigid rules alone, but by anticipating player behavior and subtly guiding them through carefully constructed sequences of discovery and reward. Replicating this intuitive process algorithmically proves exceptionally difficult, as current methods struggle to capture the subtle interplay between player agency and environmental storytelling that defines a truly immersive and satisfying game. The challenge lies not just in generating content, but in ensuring that content feels intentionally designed, contributing to a unified and meaningful experience – a feat that currently demands the uniquely human capacity for empathetic prediction and artistic vision.

Formalizing Creativity: A Foundation in Ontological Structure

Generative Ontology is a methodology that integrates explicitly represented ontological knowledge with Large Language Models (LLMs) to produce outputs within a specific domain. This approach moves beyond purely statistical LLM generation by grounding the process in formalized knowledge structures, enabling the creation of content that is not only syntactically correct but also semantically valid and contextually relevant. By encoding domain-specific concepts, relationships, and constraints as ontological data, the system guides the LLM’s output, fostering both creativity and adherence to predefined rules and logical consistency. The intended outcome is a system capable of generating novel and meaningful outputs that are demonstrably ‘correct’ with respect to the established knowledge base.

The Tabletop Game Ontology serves as the foundational knowledge representation for generative processes by providing a standardized vocabulary for dissecting games into their constituent parts. This ontology decomposes games into core elements such as agents, actions, objects, rules, states, and victory conditions, defining relationships between these elements. By explicitly defining these components and their interdependencies, the ontology enables the LLM to generate game content – including scenarios, characters, and mechanics – that adheres to established structural principles and maintains internal consistency. The vocabulary is not limited to specific game genres, allowing for application across a broad range of tabletop game designs, and facilitates the creation of both novel and coherent game elements.
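
To make the idea of a vocabulary with typed relationships concrete, the sketch below encodes the element types listed above and checks whether a proposed relationship is declared. It is a minimal illustration only: the relation names (`performs`, `manipulates`, and so on) and the `is_permitted` helper are assumptions made for this example, not the ontology published with the paper.

```python
from dataclasses import dataclass

# Concept names follow the element list in the paragraph above.
CONCEPTS = {"Agent", "Action", "Object", "Rule", "State", "VictoryCondition"}


@dataclass(frozen=True)
class Relation:
    name: str
    source: str  # concept the relation starts from
    target: str  # concept the relation points to


# Assumed relationships, for illustration only.
RELATIONS = [
    Relation("performs", "Agent", "Action"),
    Relation("manipulates", "Action", "Object"),
    Relation("constrains", "Rule", "Action"),
    Relation("changes", "Action", "State"),
    Relation("evaluates", "VictoryCondition", "State"),
]


def is_permitted(source_type: str, relation: str, target_type: str) -> bool:
    """Return True if the ontology declares this typed relationship."""
    return any(
        r.name == relation and r.source == source_type and r.target == target_type
        for r in RELATIONS
    )


print(is_permitted("Agent", "performs", "Action"))   # declared above
print(is_permitted("Object", "performs", "Action"))  # not declared
```

A generator constrained this way can propose any combination it likes, but only combinations that match a declared relation are accepted.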

The application of Whitehead’s metaphysics to this generative framework centers on the principle of linking abstract ‘forms’ with ‘actual entities’. In this context, abstract forms represent generalized concepts within the defined domain – for example, ‘quest’ or ‘character ability’ – while concrete instances are specific realizations of those concepts – a particular quest titled ‘The Lost Artifact’ or a character ability called ‘Fireball’. By establishing these relationships within the ontological schema, the system moves beyond simply generating syntactically correct outputs; it ensures that generated content is grounded in meaningful, domain-specific concepts and their concrete manifestations, thereby fostering creative generation that remains structurally coherent and logically valid.

An Ontological Schema functions as a formalized representation of domain-specific knowledge, utilizing a structured vocabulary and defined relationships between concepts. This schema is implemented as a set of rules and constraints governing the permissible combinations and attributes of elements within the domain. For Large Language Model (LLM) generation, the schema serves as a guiding framework, directing the LLM to produce outputs that conform to the established knowledge structure. By enforcing these structural constraints, the schema ensures the validity of generated content, preventing the creation of illogical or nonsensical outputs and maintaining consistency with the defined domain.

![This Game Ontology, represented as an OntoUML class diagram, structures a game's domain through aggregates of [latex]\text{Player}[/latex], [latex]\text{Mechanism}[/latex], and [latex]\text{Component}[/latex] connected by typed relationships to enforce a generative framework.](https://arxiv.org/html/2602.05636v1/figures/fig1-game-ontology-ontouml.png)

Decomposition and Agency: A Modular Approach to Game Creation

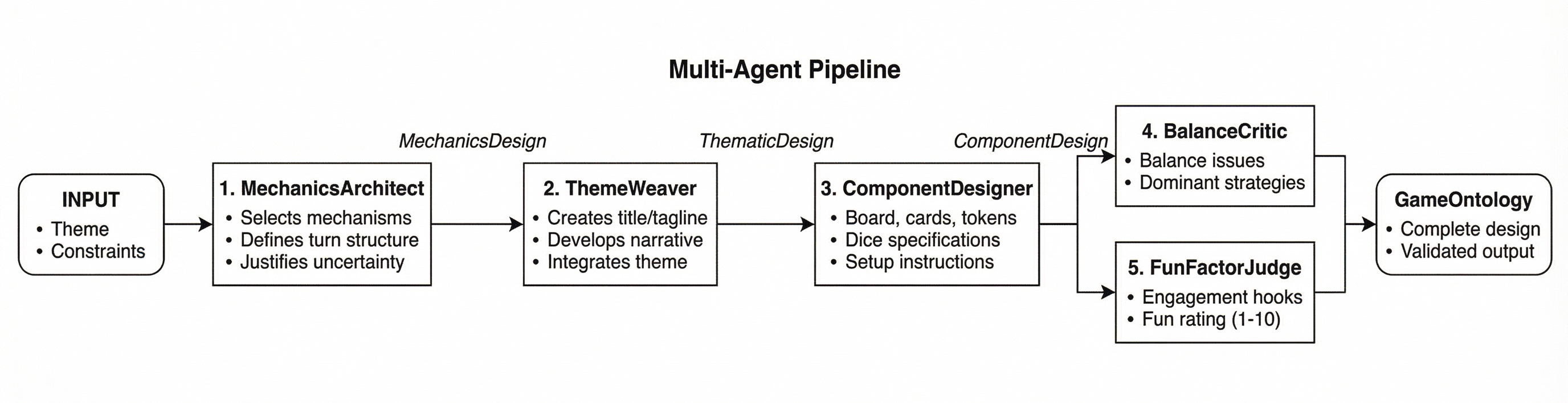

The core of our game design methodology is a Multi-Agent System comprised of three specialized agents. The ‘Component’ agent focuses on generating the physical and conceptual elements of the game, such as cards, tokens, and boards. The ‘Mechanism’ agent defines the rules and procedures governing gameplay, including action selection, resource management, and scoring. Finally, the ‘Player Dynamics’ agent models player behavior, considering factors like strategic choices, interaction patterns, and potential motivations. This modular approach allows each agent to concentrate on its specific area of expertise, contributing to a more comprehensive and coherent game design process.

Decomposing the game design process into specialized agents – handling components, mechanisms, and player dynamics independently – enables focused content generation for each element. This modular approach simplifies the overall design task by reducing the complexity of coordinating interrelated features simultaneously. By isolating these aspects, the system minimizes conflicts and inconsistencies that frequently arise in holistic game generation. This decomposition also facilitates structural coherence; each agent operates within its defined scope, ensuring generated content aligns with the pre-defined ontological schema and contributes logically to the overall game structure, rather than introducing extraneous or conflicting elements.
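
The decomposition can be sketched as a chain of functions that each add one part of a shared design record. Everything here is hypothetical: the agent bodies are stubs standing in for LLM calls, and the ordering (mechanisms, then components, then player dynamics) is an assumption chosen for the example rather than something the source specifies.

```python
from typing import Any, Callable, Dict, List

Design = Dict[str, Any]
Agent = Callable[[Design], Design]


def mechanism_agent(design: Design) -> Design:
    # Stub: a real agent would prompt an LLM with the theme.
    return {**design, "mechanisms": ["worker_placement"]}


def component_agent(design: Design) -> Design:
    # Stub: derive components from the mechanisms chosen so far.
    needed = {"worker_placement": ["worker_token", "board"]}
    components = [c for m in design.get("mechanisms", []) for c in needed.get(m, [])]
    return {**design, "components": components}


def player_dynamics_agent(design: Design) -> Design:
    # Stub: a real agent would model interaction patterns.
    return {**design, "player_dynamics": {"interaction": "competitive"}}


def run_pipeline(theme: str, agents: List[Agent]) -> Design:
    """Pass one design record through each agent in turn."""
    design: Design = {"theme": theme}
    for agent in agents:
        design = agent(design)
    return design


design = run_pipeline(
    "deep-sea salvage",
    [mechanism_agent, component_agent, player_dynamics_agent],
)
print(design)
```

Because each agent only reads what earlier agents wrote and adds its own key, conflicts are confined to the hand-off points, which is where schema checks can be placed.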

Retrieval-Augmented Generation (RAG) is implemented to enhance agent performance by grounding generated content in existing board game knowledge. Specifically, the system queries the BoardGameGeek (BGG) database – a comprehensive repository of board game rules, components, themes, and player reviews – to retrieve relevant information. This retrieved data is then incorporated as context during the generation process, informing agent decision-making in areas such as component design, mechanism definition, and player dynamic modeling. The use of RAG allows the agents to draw upon a substantial corpus of existing game design principles and patterns, promoting novelty while maintaining a foundation in established practices and providing contextual inspiration for creative outputs.
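
The retrieval step can be illustrated with a small, self-contained stand-in. The sketch below ranks a toy corpus by bag-of-words cosine similarity and prepends the best matches to a prompt; it does not use learned embeddings or a vector store, and the corpus entries, `retrieve`, and `build_prompt` are made up for this example.

```python
import math
from collections import Counter

# Toy corpus with made-up entries; a real system would retrieve from BGG-derived data.
CORPUS = {
    "game_a": "players place workers to gather resources and build a harbor",
    "game_b": "cooperative deck building against a spreading plague",
    "game_c": "bid in auctions for shipwreck salvage rights and resources",
}


def vectorize(text: str) -> Counter:
    """Turn text into a bag-of-words term-count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 2) -> list:
    """Return the names of the k corpus entries most similar to the query."""
    q = vectorize(query)
    ranked = sorted(
        CORPUS, key=lambda name: cosine(q, vectorize(CORPUS[name])), reverse=True
    )
    return ranked[:k]


def build_prompt(theme: str) -> str:
    """Prepend retrieved descriptions as context for the generating agent."""
    context = "\n".join(f"- {CORPUS[name]}" for name in retrieve(theme))
    return f"Reference games:\n{context}\n\nDesign a game about: {theme}"


print(build_prompt("shipwreck salvage auctions"))
```

Swapping `vectorize` and `cosine` for an embedding model and a vector database changes the quality of the matches, not the shape of the pipeline.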

The implementation utilizes a Pydantic BaseModel to enforce a pre-defined Ontological Schema during game component generation. This schema defines the expected structure and data types for each element of the game, such as the properties of a card, the parameters of a rule, or the attributes of a player. By validating generated outputs against this model, the system ensures structural coherence and prevents the creation of invalid or illogical game components. The Pydantic library provides data validation and settings management, allowing for automatic type checking and ensuring that all generated elements conform to the established ontological framework, thereby facilitating downstream processing and integration.
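
As a rough illustration of schema enforcement, the sketch below validates a model's JSON output against Pydantic models. The field names, the allowed component kinds, and the `parse_design` helper are assumptions made for this example; the schema used in the paper is not reproduced here.

```python
import json
from typing import List, Literal, Optional

from pydantic import BaseModel, Field, ValidationError


class Component(BaseModel):
    name: str
    kind: Literal["card", "token", "board", "die"]  # assumed vocabulary
    quantity: int = Field(ge=1)


class GameDesign(BaseModel):
    title: str
    min_players: int = Field(ge=1)
    max_players: int = Field(le=8)
    components: List[Component]


def parse_design(raw_json: str) -> Optional[GameDesign]:
    """Return a validated GameDesign, or None if the output breaks the schema."""
    try:
        return GameDesign(**json.loads(raw_json))
    except (ValidationError, json.JSONDecodeError, TypeError):
        return None


good = json.dumps({
    "title": "Salvage", "min_players": 2, "max_players": 4,
    "components": [{"name": "Diver", "kind": "token", "quantity": 4}],
})
bad = json.dumps({
    "title": "Salvage", "min_players": 2, "max_players": 4,
    "components": [{"name": "Diver", "kind": "hologram", "quantity": 0}],
})

print(parse_design(good))
print(parse_design(bad))
```

The second payload is rejected twice over: `hologram` is outside the allowed kinds and a quantity of zero violates the `ge=1` bound.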

Ensuring Structural Validity: Constraints and Verification

Validation Functions within the framework operate as essential control mechanisms, verifying the structural integrity of generated game elements before integration. These functions systematically assess whether proposed combinations of game components adhere to pre-defined rules and relationships, effectively preventing the creation of logically inconsistent or non-functional designs. This gatekeeping process involves checking data types, permissible values, and interdependencies between elements, ensuring that all generated content conforms to the established ontological constraints of the game system. By enforcing these structural rules, Validation Functions contribute to the overall robustness and reliability of the generated game designs.
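
A validation function of this kind might look like the following sketch, which checks one interdependency: every mechanism must have the component kinds it relies on. The `REQUIRES` table and the mechanism names are hypothetical, chosen only to show the pattern.

```python
from typing import Dict, List, Set

# Assumed dependency table: which component kinds each mechanism needs.
REQUIRES: Dict[str, Set[str]] = {
    "deck_building": {"card"},
    "worker_placement": {"token", "board"},
    "dice_rolling": {"die"},
}


def validate_design(mechanisms: List[str], component_kinds: Set[str]) -> List[str]:
    """Return human-readable violations; an empty list means the design passes."""
    errors = []
    for mechanism in mechanisms:
        if mechanism not in REQUIRES:
            errors.append(f"unknown mechanism: {mechanism}")
            continue
        missing = REQUIRES[mechanism] - component_kinds
        if missing:
            errors.append(f"{mechanism} is missing components: {sorted(missing)}")
    return errors


print(validate_design(["deck_building"], {"card"}))
print(validate_design(["worker_placement"], {"token"}))
```

Returning a list of messages rather than a boolean lets the caller report every violation at once, or feed them back to the generator.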

DSPy facilitates the definition of ‘ontological contracts’ through a declarative language that specifies permissible combinations and relationships between game elements. These contracts are implemented as validation functions which are automatically integrated into the generation pipeline. This allows the framework to constrain the search space during output generation, ensuring that proposed game designs adhere to pre-defined structural rules. The system provides tools for both defining these rules – specifying, for example, that a ‘sword’ must have a ‘hilt’ and a ‘blade’ – and for enforcing them during the generation process, effectively guiding the model towards structurally valid and coherent outputs without sacrificing creative freedom.

The implementation of validation functions within the DSPy framework directly addresses the problem of ‘Structural Hallucination’ – the generation of game designs containing logically inconsistent or non-functional elements. By enforcing predefined ontological contracts, these functions assess generated outputs for structural coherence before integration into the game design. This process reduces the incidence of invalid game states, ensuring generated designs are more reliably playable and reducing the need for manual correction or redesign. The result is a significant improvement in the efficiency of game development and a decrease in the potential for runtime errors caused by structurally flawed content.
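
One way to picture how a contract gates generation is a generate, validate, retry loop in which violations are fed back to the generator. The sketch below is plain Python and deliberately does not use DSPy's API; `generate_with_contract` and both stub functions are hypothetical, with the stub generator standing in for an LLM call.

```python
from typing import Callable, List, Optional, Tuple

Design = dict


def generate_with_contract(
    generate: Callable[[str, List[str]], Design],
    validate: Callable[[Design], List[str]],
    prompt: str,
    max_attempts: int = 3,
) -> Tuple[Optional[Design], int]:
    """Retry generation, passing violations back, until the contract holds."""
    feedback: List[str] = []
    for attempt in range(1, max_attempts + 1):
        candidate = generate(prompt, feedback)
        feedback = validate(candidate)
        if not feedback:
            return candidate, attempt
    return None, max_attempts


def stub_generate(prompt: str, feedback: List[str]) -> Design:
    # Stand-in for an LLM: the first draft omits the die, the retry repairs it.
    design = {"mechanisms": ["dice_rolling"], "components": ["card"]}
    if feedback:
        design["components"].append("die")
    return design


def stub_validate(design: Design) -> List[str]:
    if "dice_rolling" in design["mechanisms"] and "die" not in design["components"]:
        return ["dice_rolling requires a die component"]
    return []


result, attempts = generate_with_contract(stub_generate, stub_validate, "a pirate game")
print(result, attempts)
```

The loop returns `None` after `max_attempts` failures, so a design that never satisfies the contract is dropped rather than passed downstream.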

![The knowledge system utilizes a Retriever Service to query a corpus of 2,000 board games stored in ChromaDB (via [latex]384[/latex]-dimensional embeddings) or a SQLite database, enabling both semantic and structured information retrieval.](https://arxiv.org/html/2602.05636v1/figures/fig-knowledge-system.png)

Towards Automated Creativity and Beyond: A New Paradigm

The advent of automated game design represents a paradigm shift in how interactive experiences are created, offering the potential to dramatically accelerate innovation and reduce associated development costs. By leveraging artificial intelligence, designers can offload repetitive tasks – such as level generation, character balancing, and rule creation – allowing them to focus on higher-level creative direction and refinement. This isn’t simply about replacing human designers; it’s about augmenting their capabilities, enabling rapid prototyping and exploration of a far wider design space than previously feasible. The framework facilitates iterative design cycles, quickly generating variations and allowing for data-driven assessment of player engagement, ultimately streamlining the path from concept to compelling gameplay. This increased efficiency translates into faster time-to-market and the capacity to experiment with bolder, more innovative game mechanics.

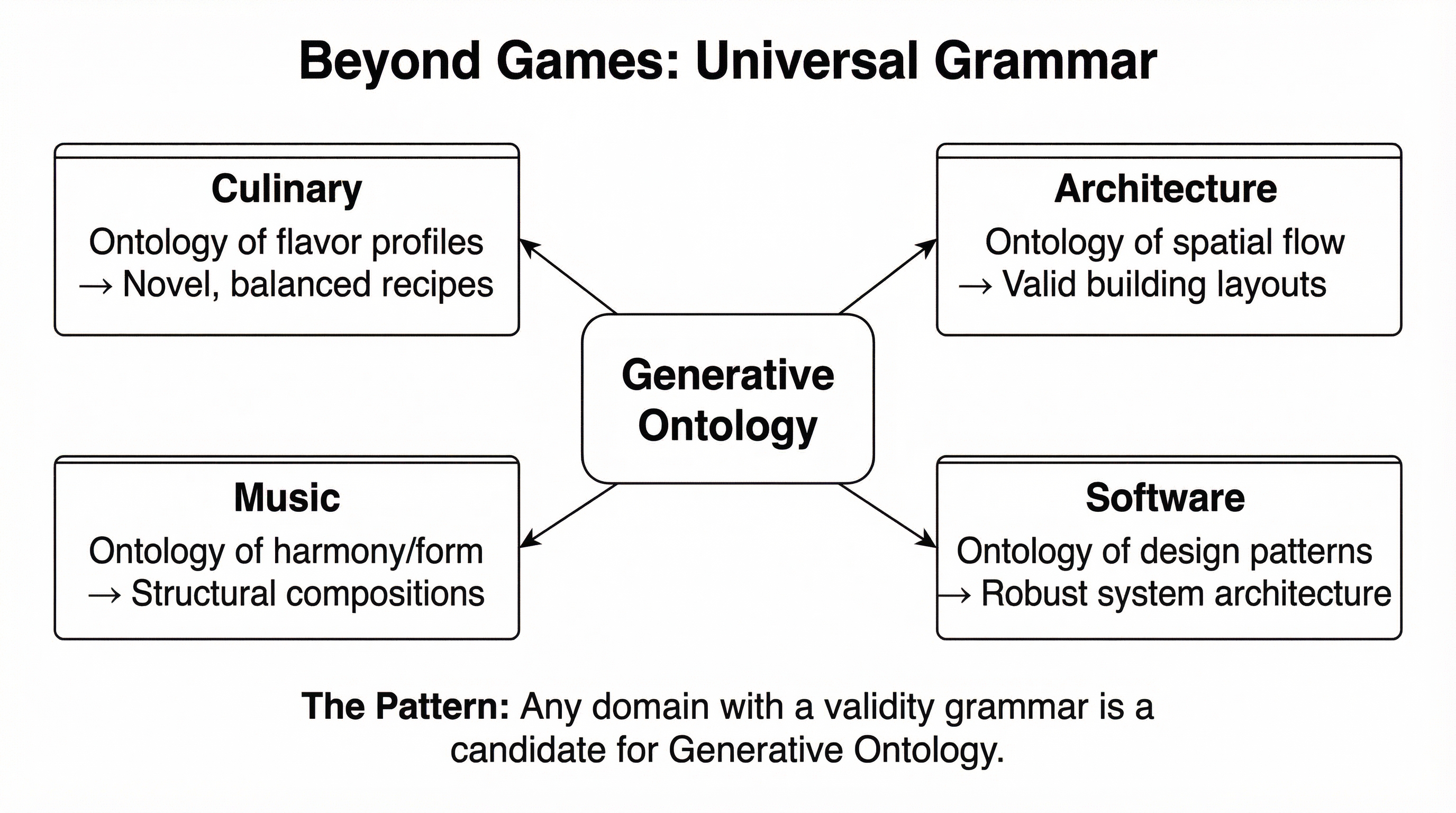

The innovative framework driving automated game design extends far beyond entertainment applications. Its core principles – defining constraints, generating variations within those constraints, and evaluating outcomes based on defined metrics – represent a broadly applicable approach to structured creativity. This methodology can be readily adapted to fields like architectural design, where algorithms could generate building layouts optimizing for space, light, and material usage, or even in scientific hypothesis generation, where the system could propose novel experimental setups based on existing data and theoretical frameworks. By formalizing the creative process, the system offers a pathway to accelerate innovation and explore a wider range of possibilities than traditional methods might allow, effectively serving as a powerful tool for problem-solving across diverse disciplines.

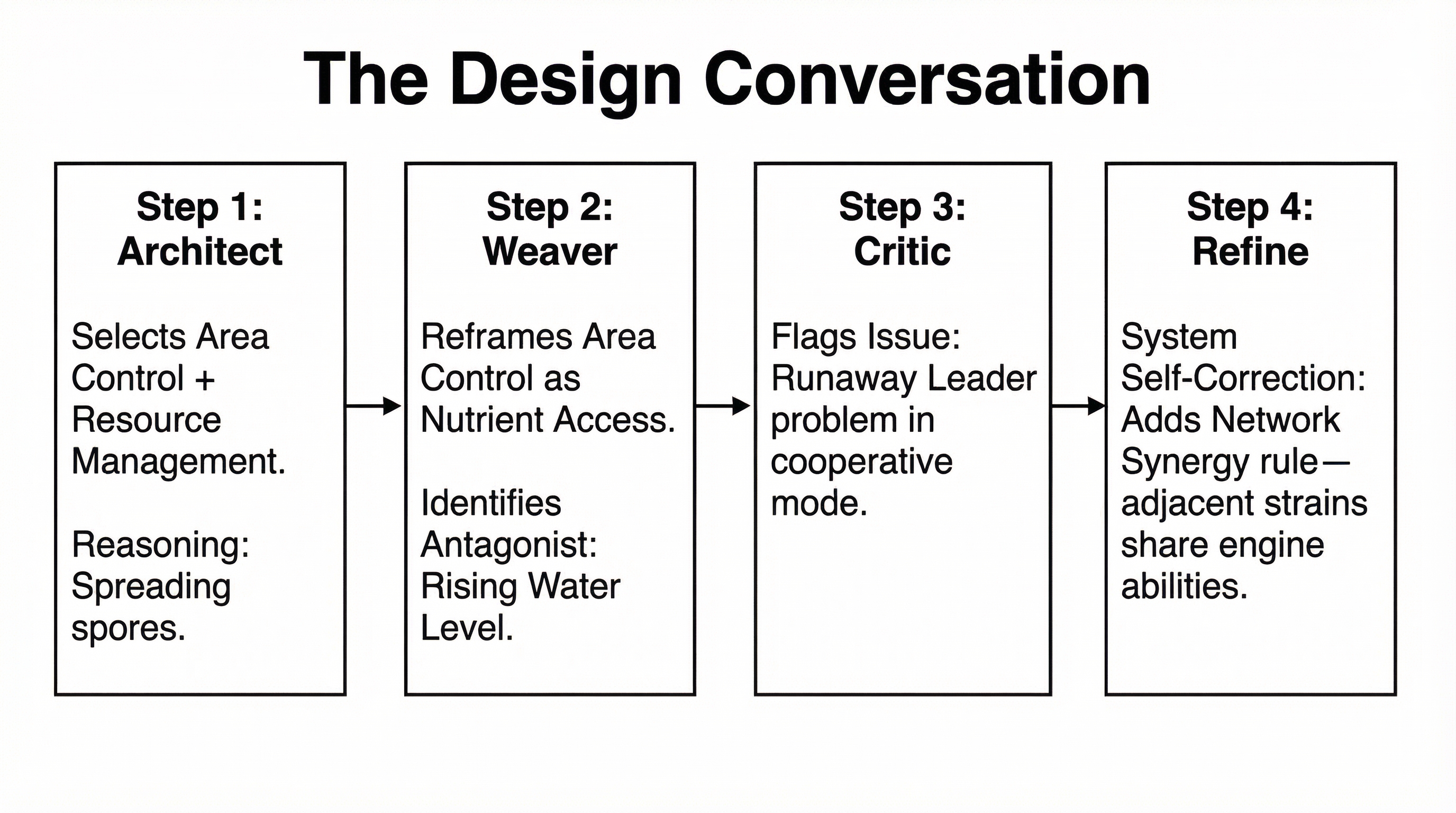

Current research is centered on fostering collaborative design capabilities within the artificial intelligence agents. The aim is to move beyond individual design generation towards a system where multiple agents can iteratively build upon each other’s ideas, refining game mechanics and levels through a process akin to co-creation. This collaborative refinement is anticipated to yield designs exhibiting a level of complexity and nuance currently unattainable, leading to emergent gameplay experiences: unpredictable, dynamic scenarios arising from the interaction of complex systems. By enabling agents to not only generate initial designs but also to critique, adapt, and synthesize those of others, developers envision a future where truly engaging and replayable games are born from the synergistic interplay of artificial intelligence.

Recent evaluations of automatically generated game designs reveal a surprisingly high level of player engagement, as quantified by a ‘Fun Factor Judge Rating’ of 7 out of 10. This metric, assessed by experienced game reviewers, indicates the generated designs successfully incorporate key elements of compelling gameplay – notably tension, replayability, and overall enjoyment. The results suggest the framework isn’t simply producing structurally sound game levels, but designs that actively hold a player’s interest and encourage continued interaction. This achievement is particularly noteworthy given the designs originate from an automated process, demonstrating the potential for artificial intelligence to contribute meaningfully to creative fields beyond mere procedural content generation.

The pursuit of Generative Ontology, as detailed in this work, echoes a fundamental principle of algorithmic construction. It isn’t simply about producing a solution, but ensuring the generated designs adhere to a logically sound framework – a provable validity, much like a mathematical theorem. As Robert Tarjan once stated, “Complexity is not a bug, it’s a feature of reality.” This resonates with the challenge of representing rich game design spaces – the inherent complexity demands a robust ontological structure to guide the generative process. Without such a foundation, the Large Language Model’s creativity, while impressive, risks producing outputs that, while novel, lack internal consistency or fail to meet the defined constraints of the design space. The framework, therefore, attempts to tame complexity, not by eliminating it, but by structuring it for verifiable generation.

What Lies Ahead?

The presented work, while demonstrating a functional synthesis of symbolic and subsymbolic reasoning, merely scratches the surface of a profound challenge: the formalization of creativity itself. The assertion that designs are ‘valid’ relies, presently, on heuristic evaluation. A truly robust Generative Ontology demands a formal, provable notion of design optimality – a metric independent of human judgment, and demonstrable via asymptotic analysis of the generated solution space. Current validation methods, while sufficient for initial exploration, lack the rigor expected of a mathematical framework.

Future investigation must address the inherent limitations of relying on Large Language Models as oracles of semantic correctness. These models, trained on statistical correlations, are susceptible to generating outputs that appear valid but lack genuine grounding in the ontological constraints. A critical path involves developing methods to verify the internal consistency of generated designs – to prove, rather than merely test, that they adhere to the specified axioms and invariants. The exploration of alternative knowledge representation formalisms, beyond current ontology languages, may prove necessary.

Ultimately, the ambition of automating creativity necessitates a re-evaluation of what constitutes ‘novelty’. The generation of permutations of existing concepts, however cleverly orchestrated, is not creation. A truly generative system must exhibit the capacity to synthesize concepts beyond its training data – a feat that requires not merely statistical prowess, but a demonstrable capacity for logical inference and abstract reasoning. The pursuit of such a system will undoubtedly reveal the limits of current computational paradigms, and potentially necessitate a rethinking of the very foundations of artificial intelligence.

Original article: https://arxiv.org/pdf/2602.05636.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-08 14:09