Author: Denis Avetisyan

A new system intelligently designs how multiple AI agents collaborate to generate code, achieving better results with fewer resources.

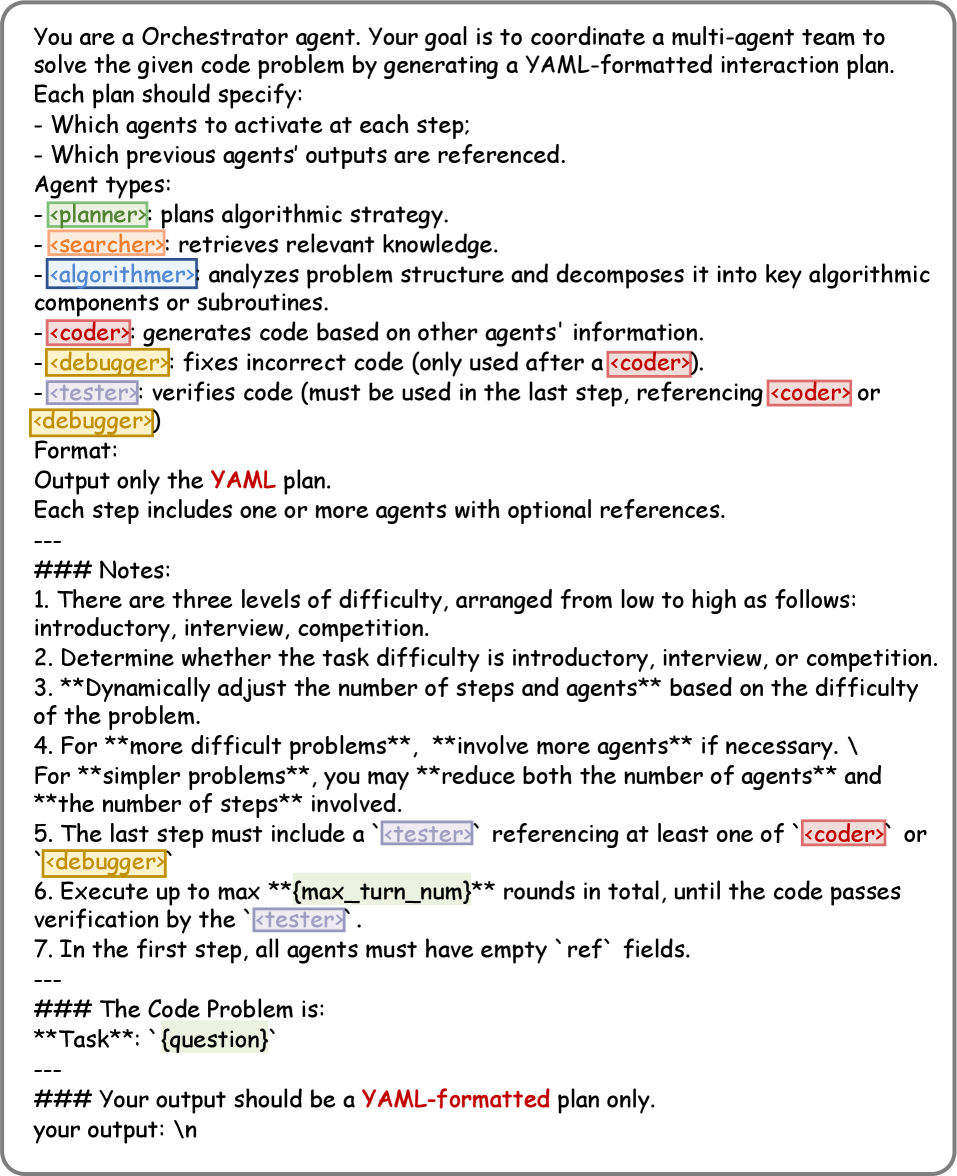

![AgentConductor addresses complex code problems through a three-stage process: initial supervised fine-tuning (SFT) on varied network topologies instills structural understanding in the base Qwen-2.5-Instruct-3B language model, followed by reinforcement learning with GRPO to create a task-specific orchestrator that adapts network density to task difficulty, and culminating in dynamic, multi-turn topology generation for end-to-end problem solving.](https://arxiv.org/html/2602.17100v1/x3.png)

AgentConductor leverages reinforcement learning to optimize interaction topologies for multi-agent systems tackling competition-level code generation tasks.

While large language model-driven multi-agent systems show promise for complex tasks like code generation, their reliance on static interaction topologies limits adaptability and efficiency. This paper introduces ‘AgentConductor: Topology Evolution for Multi-Agent Competition-Level Code Generation’, a reinforcement learning-optimized system that dynamically generates task-adapted interaction topologies for LLM agents. By inferring agent roles and task difficulty, AgentConductor constructs density-aware directed acyclic graphs, achieving state-of-the-art accuracy with significant reductions in both communication overhead and token cost. Could this approach to dynamic topology optimization unlock even greater potential for collaborative problem-solving in complex AI systems?

The Illusion of Intelligence: Why Sequential Processing Fails

Contemporary code generation models frequently struggle with intricate problems that demand sophisticated reasoning and strategic planning. They typically generate code in a single left-to-right pass, token by token, which is inadequate for tasks that require a view of the overall objective and of the dependencies between code segments. This linear approach limits a model's ability to anticipate later requirements, backtrack from errors, or explore alternative solutions, so the result may be syntactically correct but semantically flawed or inefficient. Human programmers work differently: they formulate plans, decompose complex tasks into manageable sub-problems, and refine their approach based on intermediate results. As a consequence, current models often falter on anything beyond straightforward algorithmic implementation, which leaves a clear gap between their capabilities and the demands of real-world software development.

Scale alone does not remove these limits. Increasing model size and compute does not consistently yield proportional gains, and it runs into bottlenecks in memory and training time. These models also adapt poorly: a system trained on one type of coding problem may struggle on a task that calls for a different approach or unfamiliar libraries. This rigidity reflects a reliance on patterns learned during training and a limited capacity to generalize across coding scenarios, which hinders flexible and robust code synthesis in real-world applications.

The underlying deficiency is that these models cannot reassess and modify their problem-solving strategy in response to the outcomes of intermediate steps. Human programmers continually evaluate progress and change tactics; a model that proceeds linearly keeps going even when its early steps reveal a suboptimal path. Performance therefore degrades on tasks that demand dynamic planning, such as complex algorithm design or debugging, because the model stays committed to a flawed strategy despite accumulating evidence that it should change course. Without this kind of reflective reasoning, the model cannot capitalize on early insights to navigate the solution space efficiently.

![The APPS evaluation demonstrates that increasing graph sparsity [latex]\mathcal{S}_{\text{complex}}[/latex] improves performance and reduces the number of tokens required for code generation, outperforming representative baselines.](https://arxiv.org/html/2602.17100v1/x4.png)

AgentConductor: A Distributed System – Because Monoliths Always Fail

AgentConductor addresses the shortcomings of conventional code generation techniques – namely, limited scalability, difficulty adapting to complex requirements, and a lack of robust error handling – by implementing a multi-agent system. Traditional methods often rely on monolithic models or sequential pipelines, hindering parallelization and iterative refinement. This system, conversely, distributes the code generation process across multiple specialized agents, each contributing unique capabilities. The agents collaborate to decompose problems, generate code fragments, and integrate them into a complete solution. This distributed architecture facilitates greater modularity, allowing for easier maintenance, extension, and adaptation to evolving project needs. Furthermore, the agent-based approach enables parallel execution, potentially reducing overall generation time and improving resource utilization.
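A minimal sketch of the parallelism argument, assuming Python's standard `graphlib` and `asyncio` modules: every agent whose inputs are ready is launched concurrently, wave by wave. The dependency graph is hypothetical (it reuses the agent role names introduced later in this article, with retrieval and planning assumed independent), and `run_agent` is a placeholder for a model call, not part of AgentConductor.

```python
import asyncio
from graphlib import TopologicalSorter

# Hypothetical dependency graph: each agent maps to the agents whose output it needs.
# Retrieval and planning are assumed to depend only on the problem statement.
DEPENDENCIES = {
    "algorithmic": {"retrieval", "planning"},
    "coding": {"algorithmic"},
    "debugging": {"coding"},
    "testing": {"debugging"},
}

async def run_agent(name: str) -> str:
    """Stand-in for an LLM call; a real agent would await a model API here."""
    await asyncio.sleep(0.01)
    return f"{name} done"

async def run_in_waves(deps: dict[str, set[str]]) -> list[list[str]]:
    """Launch every agent whose dependencies are finished concurrently, wave by wave."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    waves: list[list[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        await asyncio.gather(*(run_agent(name) for name in ready))
        sorter.done(*ready)
        waves.append(ready)
    return waves

waves = asyncio.run(run_in_waves(DEPENDENCIES))
print(waves)
```

Only the first wave runs two agents in parallel here; wider graphs expose more concurrency, which is where the potential reduction in generation time comes from.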

The OrchestratorAgent is the central control mechanism of AgentConductor: it configures how the specialized agents communicate and collaborate on each coding task. Instead of a fixed interaction pattern, it assesses the current problem-solving state and adjusts the network topology, that is, which agents exchange messages with which. Agents with distinct expertise, such as code synthesis, debugging, or testing, can therefore be engaged selectively and reconfigured throughout the generation process, which allocates resources more efficiently and improves problem-solving capability.
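The multi-turn behaviour can be sketched as a control loop. This is a simplification under stated assumptions: a fixed, hypothetical escalation ladder (`LADDER`) stands in for the RL-trained orchestrator, which in AgentConductor generates topologies itself, and `execute`/`fake_execute` are placeholders for running the agents and tests. The loop ignores the feedback text; a learned orchestrator would condition its next topology on it.

```python
from dataclasses import dataclass, field

@dataclass
class Topology:
    """Directed (sender, receiver) edges between agent roles."""
    edges: list[tuple[str, str]] = field(default_factory=list)

# Hypothetical escalation ladder standing in for the learned orchestrator policy:
# try a sparse topology first and move to denser ones only if a turn fails.
LADDER = [
    Topology([("planning", "coding")]),
    Topology([("planning", "coding"), ("coding", "testing")]),
    Topology([("retrieval", "planning"), ("planning", "algorithmic"),
              ("algorithmic", "coding"), ("coding", "debugging"),
              ("debugging", "testing")]),
]

def orchestrate(execute, max_turns: int = 3):
    """Multi-turn loop: run one topology per turn until `execute` reports success.

    `execute` is a placeholder that runs the agents plus the unit tests and
    returns (passed, feedback). Returns a per-turn log.
    """
    history = []
    for turn, topology in enumerate(LADDER[:max_turns], start=1):
        passed, feedback = execute(topology)
        history.append((turn, len(topology.edges), passed, feedback))
        if passed:
            break
    return history

def fake_execute(topology: Topology):
    """Toy executor: pretend the task is solved only once a debugging agent is present."""
    roles = {role for edge in topology.edges for role in edge}
    solved = "debugging" in roles
    return solved, "all tests pass" if solved else "hidden tests failed"

history = orchestrate(fake_execute)
for entry in history:
    print(entry)
```

Starting sparse and densifying only on failure is one way to keep easy problems cheap; whether AgentConductor's learned policy behaves this way turn by turn is not stated here.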

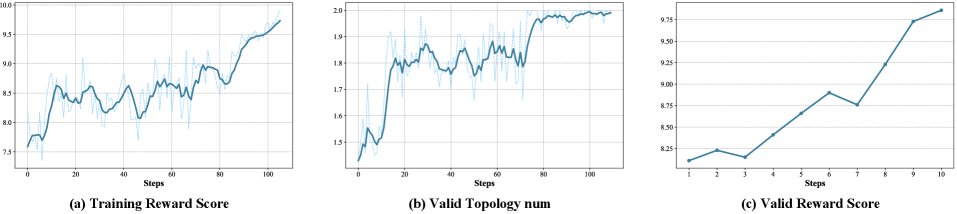

AgentConductor employs reinforcement learning to dynamically adjust the communication pathways between its constituent agents. The system defines interaction topology as the pattern of agent-to-agent communication, and uses a reward function to incentivize configurations that improve code generation performance. Specifically, the reinforcement learning algorithm learns to select optimal connection strategies – determining which agents should directly interact with each other – based on the current task and the expertise of each agent. This allows for efficient resource allocation, directing specialized agents to relevant sub-problems and minimizing redundant computation. The learning process iteratively refines the interaction topology, enabling the system to adapt to varying problem complexities and agent capabilities, ultimately improving the quality and speed of code generation.
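The figure caption names GRPO as the reinforcement learning algorithm. Its distinctive step is the group-relative advantage: sample several topologies for the same problem, score each, and normalize every reward against its own group's mean and standard deviation, so no learned value function is needed. The sketch shows only that step (the full GRPO objective also has a clipped policy ratio and a KL penalty); the rewards are hypothetical, and the use of population standard deviation plus `eps` is an assumption, since implementations differ.

```python
import statistics

def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantages: each sample's reward normalized within its own group.

    eps keeps the division finite when every sample in the group scored the same.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; some implementations use the sample std
    return [(r - mean) / (std + eps) for r in rewards]

# Hypothetical rewards for four topologies sampled for the same problem.
rewards = [1.0, 0.0, 0.5, 0.5]
advantages = group_relative_advantages(rewards)
print([round(a, 3) for a in advantages])
```

Topologies that beat their group's average get positive advantages and are reinforced; when all samples score equally, every advantage is zero and the group contributes no learning signal.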

Dynamic Interaction Topologies: Because Static Solutions Are a Fantasy

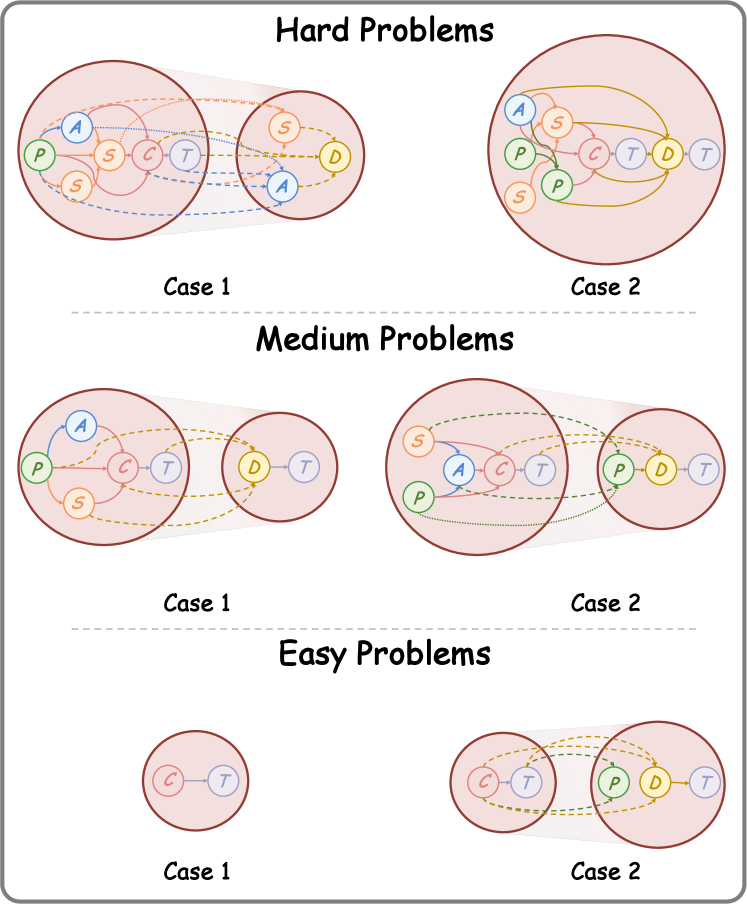

AgentConductor uses DynamicTopologyEvolution to modify inter-agent communication pathways during problem solving. Instead of a static communication graph, the connections between the specialized agents (RetrievalAgent, PlanningAgent, AlgorithmicAgent, CodingAgent, DebuggingAgent, and TestingAgent) are adjusted for each problem, driven by characteristics such as its assessed difficulty and underlying structural complexity. The aim is to optimize information flow: keep communication overhead low while ensuring the agents can still collaborate effectively. In effect, the system decides which agents need to exchange information directly and builds a problem-specific communication network.
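AgentConductor's topologies are directed acyclic graphs, so any generated topology needs an execution order and must be rejected if it contains a cycle. A minimal sketch using Kahn's algorithm follows; the edge lists are hypothetical examples over the article's agent roles. In this sketch, a debug-and-retest loop cannot live inside one graph and would have to be unrolled across turns.

```python
from collections import deque
from typing import Optional

def topological_order(edges: list[tuple[str, str]]) -> Optional[list[str]]:
    """Kahn's algorithm: an execution order for the agents, or None if the graph has a cycle."""
    nodes = sorted({node for edge in edges for node in edge})
    indegree = {node: 0 for node in nodes}
    successors: dict[str, list[str]] = {node: [] for node in nodes}
    for src, dst in edges:
        successors[src].append(dst)
        indegree[dst] += 1
    queue = deque(node for node in nodes if indegree[node] == 0)
    order: list[str] = []
    while queue:
        node = queue.popleft()
        order.append(node)
        for nxt in successors[node]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                queue.append(nxt)
    # Nodes never reached lie on or downstream of a cycle, so the topology is not a DAG.
    return order if len(order) == len(nodes) else None

# Hypothetical problem-specific topologies.
easy = [("planning", "coding"), ("coding", "testing")]
looping = [("coding", "debugging"), ("debugging", "testing"), ("testing", "coding")]
print(topological_order(easy))
print(topological_order(looping))
```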

The GraphComplexityReward function quantifies the suitability of a given agent communication topology based on both its efficiency and the problem’s inherent complexity. This reward is calculated by considering the number of communication links – representing overhead – alongside a metric reflecting the problem’s structural requirements, such as the dependencies between subtasks or the necessary information flow. A higher reward indicates a topology that effectively balances minimizing communication cost with maintaining sufficient connectivity for optimal problem solving; the function penalizes both overly sparse topologies that limit information exchange and excessively dense topologies that introduce unnecessary communication overhead, thereby guiding the reinforcement learning process towards efficient collaborative strategies.
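The article does not give the GraphComplexityReward formula. The sketch below is one plausible shape under explicit assumptions: density is measured against the n(n-1)/2 edges a DAG on n agents can hold, each difficulty level has a hypothetical target density (`TARGET_DENSITY`), and the penalty is the absolute gap, which punishes sparse and dense graphs symmetrically. `graph_complexity_reward` is named after the article's term but is not the paper's definition.

```python
# Hypothetical target densities per difficulty level (illustrative, not from the paper).
TARGET_DENSITY = {"easy": 0.2, "medium": 0.4, "hard": 0.6}

def dag_density(num_agents: int, num_edges: int) -> float:
    """Edges used as a fraction of the n*(n-1)/2 edges a DAG on n agents can hold."""
    if num_agents < 2:
        return 0.0
    return num_edges / (num_agents * (num_agents - 1) / 2)

def graph_complexity_reward(passed: bool, num_agents: int, num_edges: int,
                            difficulty: str, weight: float = 0.5) -> float:
    """Correctness reward minus a penalty for straying from the target density.

    The absolute gap penalizes overly sparse and overly dense graphs alike.
    """
    correctness = 1.0 if passed else 0.0
    gap = abs(dag_density(num_agents, num_edges) - TARGET_DENSITY[difficulty])
    return correctness - weight * gap

# Same easy problem, solved by a lean 2-edge graph and by a dense 8-edge graph.
print(graph_complexity_reward(True, 5, 2, "easy"))
print(graph_complexity_reward(True, 5, 8, "easy"))
```

Both graphs solve the problem, but the dense one earns less, which is the pressure toward efficient topologies the paragraph describes.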

AgentConductor utilizes a suite of specialized agents – RetrievalAgent, PlanningAgent, AlgorithmicAgent, CodingAgent, DebuggingAgent, and TestingAgent – each contributing a distinct function to the problem-solving process. The RetrievalAgent focuses on sourcing relevant information, while the PlanningAgent develops a high-level strategy. The AlgorithmicAgent translates the plan into concrete algorithmic steps, which are then implemented by the CodingAgent. Following code generation, the DebuggingAgent identifies and rectifies errors, and finally, the TestingAgent verifies the solution’s correctness. These agents operate within a dynamically adjusted communication framework, allowing for flexible collaboration based on the specific demands of the coding problem at hand.
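To make "who talks to whom" concrete, the sketch below runs the agent roles in dependency order with Python's `graphlib` and gives each agent only the outputs of its direct predecessors. The stub agents and the linear edge list (which follows the order described above) are hypothetical; real agents would prompt an LLM with their inbox.

```python
from graphlib import TopologicalSorter

def make_stub(role: str):
    """Placeholder agent: a real one would prompt an LLM with the task and its inbox."""
    def agent(task: str, inbox: dict[str, str]) -> str:
        seen = ",".join(sorted(inbox)) or "none"
        return f"{role}(saw: {seen})"
    return agent

def run_topology(task: str, edges: list[tuple[str, str]]) -> dict[str, str]:
    """Run agents in dependency order; each sees only its direct predecessors' outputs."""
    predecessors: dict[str, set[str]] = {}
    for src, dst in edges:
        predecessors.setdefault(dst, set()).add(src)
        predecessors.setdefault(src, set())
    outputs: dict[str, str] = {}
    for role in TopologicalSorter(predecessors).static_order():
        inbox = {p: outputs[p] for p in predecessors[role]}
        outputs[role] = make_stub(role)(task, inbox)
    return outputs

edges = [("retrieval", "planning"), ("planning", "algorithmic"),
         ("algorithmic", "coding"), ("coding", "debugging"),
         ("debugging", "testing")]
outputs = run_topology("two-sum", edges)
print(outputs["testing"])
```

Adding an edge such as `("planning", "coding")` would let the CodingAgent read the plan directly, which is exactly the kind of choice the topology encodes.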

Supervised Fine-Tuning (SFT) is implemented as a prerequisite to reinforcement learning within AgentConductor, utilizing Chain-of-Thought (CoT) prompting to establish a baseline for valid topology generation. This process involves training the model on a dataset of problem instances and corresponding optimal or near-optimal agent communication topologies. CoT prompting guides the model to not only predict the topology but also to articulate the reasoning behind its choices, improving the reliability and interpretability of the generated structures. The resulting SFT model provides a strong initialization for subsequent reinforcement learning, significantly reducing the exploration space and accelerating convergence by ensuring the agent begins with a capacity to produce syntactically and logically sound communication pathways between specialized agents.
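One practical use of "syntactically and logically sound" is a validity check on the model's output, for filtering SFT examples or as a format reward during RL. The output format here (reasoning, then a `TOPOLOGY:` marker and one `src -> dst` edge per line) is a hypothetical choice, not the paper's; the checks are that every role is known, there are no self-loops, and the graph is acyclic.

```python
import re
from graphlib import CycleError, TopologicalSorter
from typing import Optional

ROLES = {"retrieval", "planning", "algorithmic", "coding", "debugging", "testing"}
# Hypothetical output format: reasoning, then "TOPOLOGY:" and one "src -> dst" edge per line.
EDGE = re.compile(r"^\s*(\w+)\s*->\s*(\w+)\s*$")

def parse_topology(completion: str) -> Optional[list[tuple[str, str]]]:
    """Return the edges if the completion is a well-formed, acyclic topology, else None."""
    if "TOPOLOGY:" not in completion:
        return None
    edges: list[tuple[str, str]] = []
    predecessors: dict[str, set[str]] = {}
    for line in completion.split("TOPOLOGY:", 1)[1].strip().splitlines():
        match = EDGE.match(line)
        if match is None:
            return None
        src, dst = match.group(1).lower(), match.group(2).lower()
        if src not in ROLES or dst not in ROLES or src == dst:
            return None
        edges.append((src, dst))
        predecessors.setdefault(dst, set()).add(src)
    if not edges:
        return None
    try:
        TopologicalSorter(predecessors).prepare()  # raises CycleError on a cycle
    except CycleError:
        return None
    return edges

good = "Short string task; no retrieval needed.\nTOPOLOGY:\nplanning -> coding\ncoding -> testing"
bad = "TOPOLOGY:\ncoding -> testing\ntesting -> coding"
print(parse_topology(good), parse_topology(bad))
```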

Performance and Scalability: Because Bragging Rights Matter (Eventually)

AgentConductor achieves state-of-the-art performance across a diverse suite of code generation benchmarks: HumanEval, MBPP, APPS, LiveCodeBench, and the competitive CodeContests. It surpasses the strongest prior method on every one of them, with margins ranging from 0.7 percentage points on MBPP to 14.6 percentage points on APPS. Because these datasets demand both functional correctness and complex problem solving, the results suggest the system could tackle real-world software development challenges and automate increasingly sophisticated coding tasks.

AgentConductor is particularly strong on code generation problems that require sequential logic and strategic planning. Conventional methods often fail to decompose such problems into manageable steps; by orchestrating a collaborative network of agents, AgentConductor handles multi-step reasoning more efficiently and accurately. It outperforms established techniques on APPS, LiveCodeBench, and CodeContests, the benchmarks that stress this kind of reasoning most.

On APPS, a broad collection of programming problems spanning several difficulty levels, AgentConductor reaches a pass@1 accuracy of 58.8%. On LiveCodeBench (v4), which collects recently published contest problems to limit training-data contamination, it reaches 46.3% pass@1. On the CodeContests competitive programming dataset it attains 38.8% pass@1, suggesting potential for automating harder, competition-style programming work.
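The article reports pass@1 without describing the sampling protocol. For reference, the standard unbiased pass@k estimator introduced with HumanEval (Chen et al., 2021) is sketched below; with one sample per problem, pass@1 reduces to the fraction of problems whose generated program passes all tests. The per-problem results in the example are hypothetical.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples generated, c of them pass every test."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)

def benchmark_score(results: list[tuple[int, int]], k: int = 1) -> float:
    """Average pass@k over problems, as a percentage."""
    return 100.0 * sum(pass_at_k(n, c, k) for n, c in results) / len(results)

# Hypothetical results for three problems, one sample each: solved, failed, solved.
print(benchmark_score([(1, 1), (1, 0), (1, 1)]))
```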

AgentConductor outperforms the existing methods it was compared against on several challenging datasets. On APPS it leads the next-best system by 14.6 percentage points, a considerable gain in problem-solving capability. On LiveCodeBench its margin over the closest competitor is 3.1 percentage points, and on the highly competitive CodeContests it is 1.1 percentage points. Together these results show that AgentConductor produces correct, functional solutions more often than the alternative methods evaluated.

On the function-level HumanEval and MBPP benchmarks, AgentConductor achieves pass@1 accuracies of 97.5% and 95.1%, respectively, ahead of the second-best system by 1.0 percentage point on HumanEval and 0.7 percentage points on MBPP. With scores this close to the ceiling the margins are necessarily small, but they indicate that the system reliably produces functional code from natural language prompts, which could enhance automated software development workflows.
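Because the margins above are percentage points, the implied second-best scores follow by subtraction, and dividing by them gives the relative improvement. The snippet uses only the numbers reported in this article; the second-best system may differ from one benchmark to the next.

```python
# AgentConductor pass@1 (%) and its reported margin (percentage points) over the second-best system.
REPORTED = {
    "APPS": (58.8, 14.6),
    "LiveCodeBench v4": (46.3, 3.1),
    "CodeContests": (38.8, 1.1),
    "HumanEval": (97.5, 1.0),
    "MBPP": (95.1, 0.7),
}

# Percentage-point margins are absolute differences, so the runner-up score is a subtraction.
runner_up = {name: round(score - margin, 1) for name, (score, margin) in REPORTED.items()}
# Relative improvement (%) = margin / runner-up score.
relative = {name: round(100 * margin / (score - margin), 1)
            for name, (score, margin) in REPORTED.items()}

for name in REPORTED:
    print(f"{name}: runner-up {runner_up[name]}%, relative gain {relative[name]}%")
```

On APPS, for example, the implied runner-up scores 44.2%, so the 14.6-point margin is roughly a one-third relative improvement, while the HumanEval and MBPP margins are about 1% in relative terms.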

AgentConductor’s efficiency comes from its DifficultyAwareTopology, a resource allocation strategy that adjusts the collaboration structure to the complexity of each coding task. Instead of treating all problems alike, it assigns richer agent networks to challenging multi-step problems and sparser ones to simpler problems. Matching the topology to each task's difficulty reduces communication overhead and token cost without sacrificing accuracy, which makes the system more practical for large-scale software development automation.
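The article does not say how DifficultyAwareTopology turns a difficulty estimate into a concrete graph. A simple stand-in, under explicit assumptions, is a per-difficulty budget on agents and edges with greedy pruning of scored candidate edges. `BUDGET`, the priorities, and `fit_to_budget` are all hypothetical; in AgentConductor the learned orchestrator produces the topology directly.

```python
# Hypothetical per-difficulty caps: (max agents, max edges). Not values from the paper.
BUDGET = {"easy": (2, 1), "medium": (4, 4), "hard": (6, 8)}

def fit_to_budget(candidates: list[tuple[float, str, str]],
                  difficulty: str) -> list[tuple[str, str]]:
    """Greedily keep the highest-priority edges that fit the difficulty's budget."""
    max_agents, max_edges = BUDGET[difficulty]
    kept: list[tuple[str, str]] = []
    agents: set[str] = set()
    for _, src, dst in sorted(candidates, reverse=True):
        grown = agents | {src, dst}
        if len(kept) >= max_edges or len(grown) > max_agents:
            continue  # this edge would exceed the budget; try the next one
        kept.append((src, dst))
        agents = grown
    return kept

# Hypothetical candidate edges scored by some upstream policy: (priority, sender, receiver).
CANDIDATES = [
    (0.9, "planning", "coding"), (0.8, "coding", "testing"),
    (0.6, "retrieval", "planning"), (0.5, "coding", "debugging"),
    (0.4, "debugging", "testing"), (0.3, "planning", "algorithmic"),
    (0.2, "algorithmic", "coding"),
]
for level in BUDGET:
    print(level, fit_to_budget(CANDIDATES, level))
```

An easy problem ends up with a single planning-to-coding link, while a hard one keeps all seven candidate edges, which is the cost asymmetry the paragraph describes.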

AgentConductor's results across diverse code generation benchmarks suggest real progress toward automating complex software development tasks. State-of-the-art results on benchmarks ranging from HumanEval to CodeContests indicate that multi-agent systems could change how software is created. By decomposing problems and allocating resources through its DifficultyAwareTopology, the system addresses limitations of traditional approaches, particularly where intricate reasoning and planning are required. This capability goes beyond simple code completion and hints at a future in which automated agents collaborate on entire software projects, reducing development time and cost and potentially unlocking innovation through broader access to coding.

The Future of Collaborative AI: Because We’re All Just Temporary Fixes

The AgentConductor framework illustrates a move away from monolithic systems toward collaboration between specialized agents. Complex problems are decomposed into smaller, more manageable tasks, each handled by an agent with its own expertise; in AgentConductor these are roles such as retrieval, planning, coding, and testing. Instead of a single model attempting everything, the agents exchange information and combine their capabilities to reach a common goal. Distributed designs of this kind can improve problem-solving efficiency and may make systems more adaptable and robust, with possible implications for fields ranging from scientific discovery and financial modeling to disaster response and personalized healthcare.

Further development of the AgentConductor approach could refine how its constituent agents interact and learn. One direction is interaction topologies beyond simple hierarchies, such as dynamic network configurations that let agents form temporary coalitions based on task demands. Reward design matters just as much: moving beyond a single global reward to multi-objective rewards that incentivize both individual agent proficiency and collective success. Reward shaping and intrinsic motivation mechanisms could encourage exploration and adaptation in complex environments, improving robustness and performance across a wider range of challenging problems.

The true potential of collaborative AI hinges on a continuously expanding ecosystem of specialized agents, each possessing unique skills and knowledge. Current research is actively exploring methods for automated agent discovery, allowing systems to identify and incorporate new capabilities without explicit human intervention. This includes developing algorithms that can assess an agent’s reliability and competence before integrating it into a collaborative workflow. Furthermore, innovative approaches to agent integration are being investigated, focusing on seamless communication and task allocation, even among agents with vastly different architectures and training data. This dynamic combination of agent expansion and automated integration promises to unlock increasingly complex problem-solving capabilities, enabling AI systems to adapt and evolve in response to novel challenges and opportunities.

Looking further ahead, frameworks like AgentConductor point toward AI that moves beyond isolated task completion to collaborative problem solving with humans. The goal would not merely be AI assisting with existing workflows, but systems that dynamically integrate human expertise and computational power to address complex, multifaceted challenges, in areas ranging from climate change and disease eradication to sustainable resource management and equitable access to education. A partnership in which AI agents and human collaborators learn and adapt together could accelerate innovation and change how humanity confronts its most critical global issues.


The pursuit of ever-more-complex multi-agent systems, as demonstrated by AgentConductor’s dynamic topology optimization, feels…familiar. It’s a beautifully intricate solution to a problem created by the last beautifully intricate solution. One recalls Edsger W. Dijkstra’s observation: “Program testing can be effectively used to reveal the presence of bugs, but it can hardly be used to prove the absence of bugs.” This paper showcases impressive gains in code generation through LLM orchestration, yet one suspects that each optimized topology introduces new, subtler failure modes. The system might reliably produce more code, but predictably, production will uncover edge cases the reinforcement learning hadn’t anticipated. It’s not a lack of progress; it’s simply that the tech debt always accrues, and one leaves notes for the digital archaeologists.

What Lies Ahead?

AgentConductor, in its pursuit of optimized LLM interaction topologies, presents a predictable elegance. The system navigates the space of possible agent arrangements with a grace that suggests, momentarily, a solution. But every abstraction dies in production. The brittleness of a reinforcement learning policy trained on curated competition-level problems will inevitably surface when it meets the chaotic influx of real-world code generation requests. The question isn’t if the optimal topology will degrade, but when, and what unforeseen edge cases will trigger its collapse.

Future work will undoubtedly focus on robustness – attempting to shield this carefully constructed system from the inevitable entropy of deployment. This will likely involve meta-learning approaches, or perhaps even systems designed to proactively detect topological decay and initiate self-repair. However, the deeper challenge lies in acknowledging that the very notion of a ‘best’ topology is transient. Problem spaces evolve, LLMs are updated, and what works today will become a performance bottleneck tomorrow.

Ultimately, AgentConductor illuminates a familiar truth: every optimization is a temporary reprieve. The pursuit of dynamic topologies is valuable, but it’s a Sisyphean task. Perhaps the real innovation won’t be in finding the perfect arrangement, but in designing systems that fail gracefully and adapt continuously, accepting that everything deployable will eventually crash and making sure that, when it does, it dies beautifully.

Original article: https://arxiv.org/pdf/2602.17100.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-22 06:24