Author: Denis Avetisyan

New research reveals that pairing teaching assistants with AI feedback tools significantly improves the quality of revisions students make to their work.

![When teaching assistants can readily adopt and refine suggestions generated by the AI feedback system FeedbackWriter, student revisions improve by an amount equivalent to moving a student from the 50th to the 70th percentile ([latex]\text{Cohen's}\ d = 0.50[/latex]). The gain is attributed to the AI’s capacity to deliver actionable feedback that fosters independent learning, a benefit exceeding that of solely human-provided feedback.](https://arxiv.org/html/2602.16820v1/img/teaser3.png)

A randomized trial demonstrates that AI-mediated feedback, delivered through the FeedbackWriter platform, results in higher-quality rubric-based feedback and improved student writing in a large undergraduate course.

Despite growing interest in using artificial intelligence to enhance educational practice, the impact of AI-mediated feedback on student writing remains largely unexplored. This research, detailed in ‘AI-Mediated Feedback Improves Student Revisions: A Randomized Trial with FeedbackWriter in a Large Undergraduate Course’, addresses that gap with a randomized controlled trial ([latex]\mathcal{N}=354[/latex]). The trial shows that giving teaching assistants (TAs) AI-generated suggestions while they write feedback significantly improves the quality of student revisions. The gains grew as TAs adopted more of these AI-supported suggestions. How might this human-AI collaboration reshape writing assessment and, more broadly, personalized learning in higher education?

The Sisyphean Task of Student Feedback

Providing effective feedback on student writing is a substantial undertaking for higher education instructors, particularly when evaluating knowledge-intensive essays. These assignments demand a comprehensive understanding and nuanced articulation of complex concepts, so they require detailed assessment beyond surface-level errors. Thoroughly analyzing each student’s work (identifying strengths, pinpointing areas for development, and formulating constructive guidance) is cognitively demanding and inherently time-consuming. Instructors must also balance giving enough detail for meaningful improvement against the practical constraints of workload and class size. This makes fostering student learning through written feedback a persistent challenge. The difficulty is amplified by the subjective nature of evaluating complex arguments and the need to tailor feedback to individual students.

Conventional feedback practices in higher education frequently suffer from inconsistencies that hinder student progress. Subjectivity in grading and commenting can lead to variations in how similar work is assessed, leaving students uncertain about expectations and areas needing refinement. Moreover, feedback often focuses on surface-level errors rather than underlying conceptual misunderstandings or higher-order thinking skills, failing to cultivate genuine independent learning. This emphasis on correction, rather than guidance, can inadvertently discourage students from taking risks or developing their own critical voice, ultimately impeding their ability to become self-regulated learners capable of continuous improvement beyond the immediate assignment.

The escalating demands on higher education instructors create a critical need for feedback mechanisms that scale with class size. As enrollments grow, the time required to provide meaningful, individualized commentary becomes unsustainable, which can lead to superficial evaluations or delayed responses. This does not merely increase instructor workload; it directly lowers the quality of feedback students receive, hindering their ability to learn from mistakes and refine their understanding of complex topics. Institutions are therefore seeking scalable solutions, such as automated feedback tools, peer review systems, and new assessment designs. The aim is to maintain educational rigor and ensure that students continue to receive constructive guidance even in large-enrollment courses.

Rubrics: A False Promise of Consistency

Rubric-based evaluation systems, while designed to ensure consistent assessment, are critically dependent on the fidelity between the rubric’s criteria and the feedback actually provided to students. A well-defined rubric establishes clear expectations, but if instructor or automated feedback does not directly address these criteria, the benefits of structured assessment are diminished. Discrepancies between rubric elements and corresponding feedback can introduce inconsistencies, reduce student understanding of performance expectations, and invalidate the rubric’s intended function as a standardized measurement tool. Therefore, consistent training and quality control are essential to guarantee alignment and maximize the reliability and validity of rubric-based evaluations.
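One simple way to audit this alignment is a rubric-coverage score: the share of rubric criteria that at least one delivered comment addresses. The minimal Python sketch below assumes each comment has already been tagged with the criterion ids it targets, either by the instructor or by a classifier. The names `Comment`, `rubric_coverage`, and `missing_criteria` are hypothetical and are not taken from FeedbackWriter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Comment:
    """One feedback comment, tagged (by assumption) with the rubric criteria it addresses."""
    text: str
    criteria: frozenset


def _addressed(comments: list) -> set:
    """Union of all criterion ids mentioned by any comment."""
    addressed = set()
    for comment in comments:
        addressed |= comment.criteria
    return addressed


def rubric_coverage(rubric: list, comments: list) -> float:
    """Fraction of rubric criteria addressed by at least one comment.

    Tags that are not part of the rubric are ignored.
    """
    if not rubric:
        raise ValueError("rubric must contain at least one criterion")
    addressed = _addressed(comments)
    return sum(1 for criterion in rubric if criterion in addressed) / len(rubric)


def missing_criteria(rubric: list, comments: list) -> list:
    """Rubric criteria that no comment addresses, in rubric order."""
    addressed = _addressed(comments)
    return [criterion for criterion in rubric if criterion not in addressed]


rubric = ["thesis", "evidence", "organization", "mechanics"]
comments = [
    Comment("Sharpen your central claim.", frozenset({"thesis"})),
    Comment("Cite a source for this claim.", frozenset({"evidence", "thesis"})),
]
print(rubric_coverage(rubric, comments))   # 0.5
print(missing_criteria(rubric, comments))  # ['organization', 'mechanics']
```

A low score, or a non-empty list of missing criteria, flags a feedback set that has drifted away from the rubric it is supposed to apply.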

AI-mediated feedback systems are being developed to automate parts of the evaluation process, with the goal of reducing the time demands on instructors and increasing efficiency. These systems apply natural language processing and machine learning techniques to student submissions, identify areas for improvement, and generate feedback statements. Automation targets include identifying grammatical errors, assessing argument structure, and checking adherence to assignment guidelines. Full automation remains a long-term goal. Current implementations instead assist instructors by pre-populating feedback templates or flagging submissions that need detailed review. This streamlines the evaluation workflow and potentially allows more students to receive detailed guidance.

AI-mediated feedback systems address the difficulty of providing individualized guidance at scale by automatically analyzing student submissions against predefined rubrics. In this study, students who received AI-augmented feedback made significantly better revisions. The effect size was Cohen’s d = 0.50, a medium effect by Cohen’s conventional benchmarks. Under a normal approximation, this corresponds to moving a typical student from the 50th percentile to roughly the 70th, a meaningful increase in the effectiveness of the revision process with AI assistance.
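The step from d = 0.50 to "roughly the 70th percentile" assumes normally distributed scores with equal variance in both groups. Under that assumption, the average treated student sits at the Φ(d) quantile of the control group, and Φ(0.5) ≈ 0.69. The minimal Python sketch below uses the common pooled-standard-deviation definition of d; the paper may compute its effect size differently.

```python
from statistics import NormalDist, mean, stdev


def cohens_d(treatment: list, control: list) -> float:
    """Standardized mean difference using the pooled sample standard deviation."""
    n1, n2 = len(treatment), len(control)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    s1, s2 = stdev(treatment), stdev(control)
    pooled_sd = (((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2)) ** 0.5
    if pooled_sd == 0:
        raise ValueError("pooled standard deviation is zero")
    return (mean(treatment) - mean(control)) / pooled_sd


def percentile_of_average_treated(d: float) -> float:
    """Percentile of the control distribution reached by the average treated
    student, assuming normal scores with equal variance: 100 * Phi(d)."""
    return 100 * NormalDist().cdf(d)


print(f"{percentile_of_average_treated(0.50):.1f}")  # 69.1
```

The exact figure is closer to the 69th percentile. "50th to 70th" is the usual rounded statement of a half-standard-deviation shift.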

![AI-mediated feedback from FeedbackWriter significantly increases both the length of responses and the extent of rubric coverage compared to the baseline ([latex]p < 0.001[/latex]).](https://arxiv.org/html/2602.16820v1/img/feedback_coverage_count.png)

FeedbackWriter: Another Tool in a Broken System

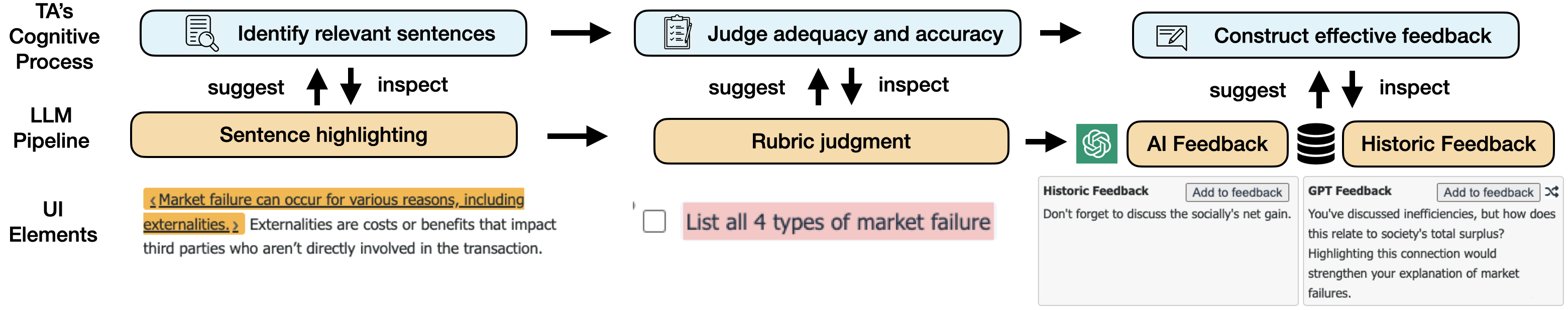

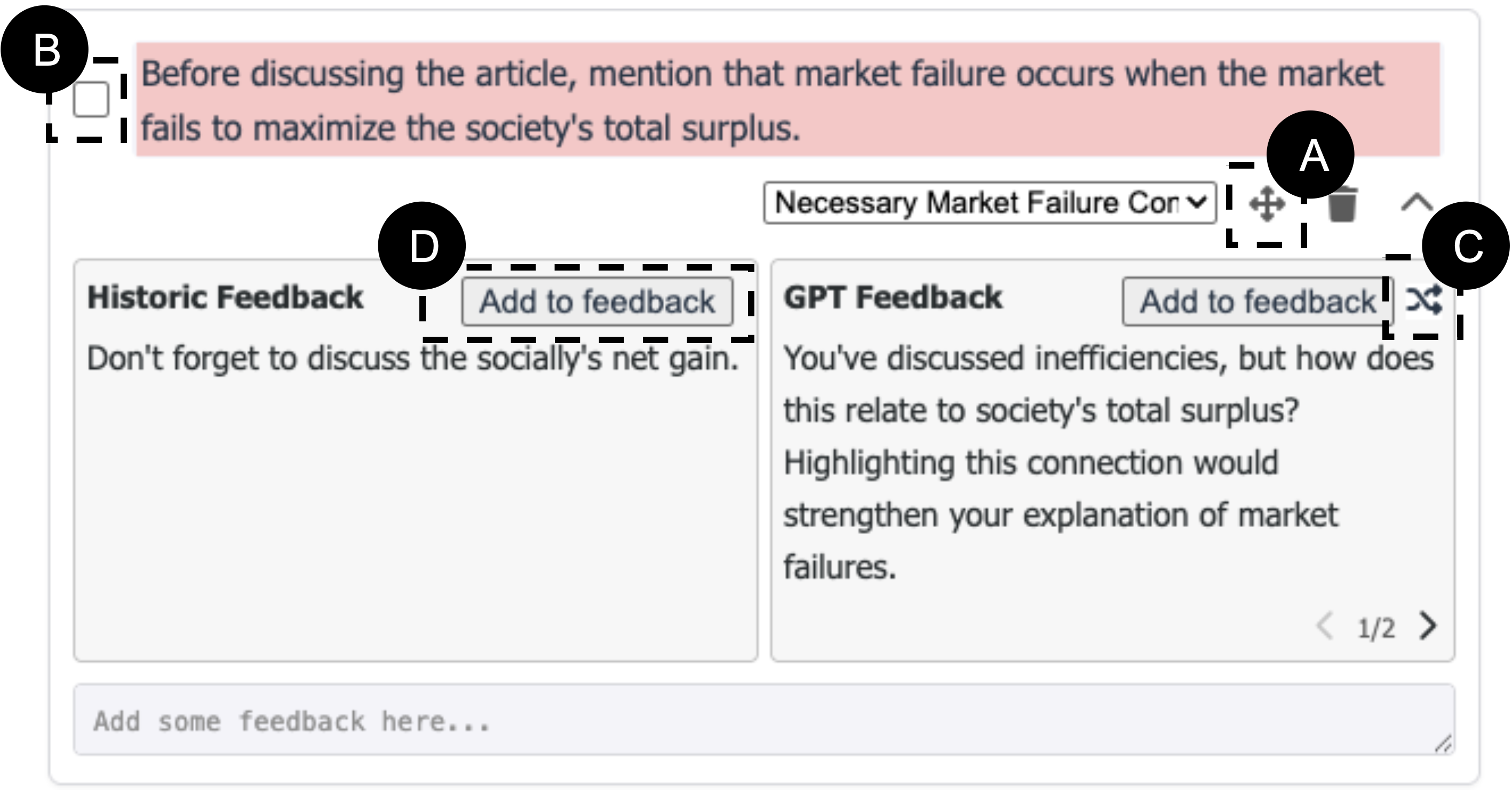

FeedbackWriter is a system designed to facilitate AI-mediated feedback on student submissions. The system functions by generating AI-driven suggestions for improvement directly within student work, which are then presented to instructors for review. This allows instructors to accept, modify, or reject the AI suggestions before they are delivered to students. The core functionality centers around providing instructors with a tool to augment, rather than replace, their feedback process, aiming to increase efficiency and consistency in evaluating student work. The system supports integration with existing learning management systems and is designed to handle a variety of assignment types and rubric criteria.
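The accept/modify/reject loop can be pictured as a small state model in which nothing reaches the student until an instructor has reviewed it. The Python sketch below illustrates that rule under those assumptions. It is not FeedbackWriter’s actual code, and `Decision`, `Suggestion`, and `deliverable` are illustrative names.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    ACCEPT = "accept"
    MODIFY = "modify"
    REJECT = "reject"


@dataclass
class Suggestion:
    """An AI-generated comment awaiting instructor review (hypothetical model)."""
    criterion: str
    ai_text: str
    decision: Optional[Decision] = None
    final_text: Optional[str] = None  # what the student will see, if anything

    def review(self, decision: Decision, edited_text: Optional[str] = None) -> None:
        """Record the instructor's decision and the resulting student-facing text."""
        if decision is Decision.MODIFY:
            if not edited_text:
                raise ValueError("MODIFY requires the instructor's edited text")
            self.final_text = edited_text
        elif decision is Decision.ACCEPT:
            self.final_text = self.ai_text
        else:  # REJECT: nothing is delivered
            self.final_text = None
        self.decision = decision


def deliverable(suggestions: list) -> list:
    """Comments released to the student: reviewed and not rejected.

    Unreviewed suggestions still have final_text=None, so they are held back too.
    """
    return [s.final_text for s in suggestions if s.final_text is not None]


a = Suggestion("evidence", "Add a citation for this claim.")
b = Suggestion("thesis", "State your thesis earlier.")
c = Suggestion("mechanics", "Fix the comma splice.")
a.review(Decision.ACCEPT)
b.review(Decision.MODIFY, "Move your thesis into the first paragraph.")
c.review(Decision.REJECT)
print(deliverable([a, b, c]))
```

Making delivery depend on an explicit review step keeps the instructor, not the model, as the final author of what students read.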

FeedbackWriter incorporates text highlighting to pinpoint specific passages in student submissions that require attention. This feature is coupled with AI-driven judgment, which analyzes the highlighted text against predefined rubric criteria to identify areas for improvement. The system doesn’t simply flag errors; it assesses the student’s work in relation to the established evaluation standards, providing a basis for targeted feedback suggestions. This process allows the AI to move beyond surface-level grammatical checks and address higher-order concerns related to argumentation, clarity, and content relevance as defined within the rubric.
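Here is a rough sketch of how highlighted passages might be checked against rubric criteria. Each highlight records character offsets and the criterion it relates to, and a pluggable `judge` decides whether the passage meets that criterion. In a system like FeedbackWriter the judge would presumably be a language-model call. Here it is any Python callable, and `cites_source_judge` is a deliberately crude placeholder so the example runs offline. All names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

# A judge takes (passage, criterion description) and returns True if the passage
# meets the criterion. A real system would presumably call a language model here.
Judge = Callable[[str, str], bool]


@dataclass(frozen=True)
class Highlight:
    start: int  # character offset into the submission (inclusive)
    end: int    # character offset (exclusive)
    criterion: str


def flag_passages(submission: str, highlights: list, rubric: dict, judge: Judge) -> list:
    """Return (criterion, passage) pairs that the judge says fall short."""
    flagged = []
    for h in highlights:
        if not 0 <= h.start < h.end <= len(submission):
            raise ValueError(f"highlight {h} lies outside the submission")
        passage = submission[h.start:h.end]
        if not judge(passage, rubric[h.criterion]):
            flagged.append((h.criterion, passage))
    return flagged


def cites_source_judge(passage: str, description: str) -> bool:
    """Placeholder heuristic that ignores the description: a passage 'cites a
    source' if it contains a parenthetical."""
    return "(" in passage and ")" in passage


text = "Photosynthesis makes glucose. Plants need light (Smith, 2020)."
rubric = {"evidence": "Claims are supported by cited sources."}
highlights = [Highlight(0, 29, "evidence"), Highlight(30, len(text), "evidence")]
print(flag_passages(text, highlights, rubric, cites_source_judge))
```

Keeping the judge separate from the highlighting logic means the rubric check can be swapped or audited without touching how passages are located.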

FeedbackWriter incorporates a historical feedback database to make AI-generated suggestions more relevant and efficient to use. The system draws on previously provided instructor comments to inform new feedback, giving instructors a pre-populated starting point for review. In an evaluation that used a large language model (LLM) to assess quality, students who received feedback produced with this AI-mediated approach achieved a 5% higher quality score on their final drafts than students who received solely human-provided feedback.
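The article does not say how FeedbackWriter selects relevant past comments. Assuming a simple nearest-neighbour lookup, the sketch below ranks historical (passage, comment) pairs by bag-of-words cosine similarity to a new passage. A production system would more likely use learned text embeddings. The function names and the example history are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lower-cased word counts; a deliberately simple text representation."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def similar_past_comments(passage: str, history: list, k: int = 2) -> list:
    """Return instructor comments whose original passages most resemble `passage`.

    `history` holds (past student passage, instructor comment) pairs.
    """
    query = bag_of_words(passage)
    ranked = sorted(history, key=lambda pair: cosine(query, bag_of_words(pair[0])), reverse=True)
    return [comment for _, comment in ranked[:k]]


history = [
    ("The mitochondria is the powerhouse of the cell.",
     "Explain how mitochondria produce ATP, not just what they are called."),
    ("World War I began in 1914.", "Connect the date to the causes you discussed."),
    ("Mitochondria produce energy for the cell.", "Say which molecule carries that energy."),
]
print(similar_past_comments("Mitochondria give the cell energy.", history, k=2))
```

The retrieved comments would serve only as a starting point that the instructor edits, consistent with the review-first workflow described above.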

The Illusion of Impact: Humans Still Required

Integrating AI into feedback works best when paired with instructor oversight. AI suggestion editing lets educators examine and adjust automatically generated comments, helping ensure that they are factually correct and consistent with the course’s teaching approach. This goes beyond simple automation: instructors can tailor feedback to specific student needs and assignment goals instead of relying on generic responses. By refining AI suggestions, educators keep control over the pedagogical message, so that guidance promotes deeper understanding and skill development while the feedback process becomes more efficient.
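How much an instructor changes an AI suggestion can be approximated with a character-level similarity score. The same score gives a rough adoption rate, the kind of measure that would track how often TAs keep AI-supported suggestions. This is a sketch under stated assumptions. It uses Python’s standard `difflib.SequenceMatcher`, and the 0.9 cut-off between adopted and rewritten is arbitrary, not a threshold from the study.

```python
from difflib import SequenceMatcher
from typing import Optional


def edit_similarity(ai_text: str, delivered_text: str) -> float:
    """Similarity in [0, 1]; 1.0 means the TA sent the AI text unchanged."""
    return SequenceMatcher(None, ai_text, delivered_text).ratio()


def classify_edit(ai_text: str, delivered_text: Optional[str], keep_threshold: float = 0.9) -> str:
    """Label how a TA handled one suggestion (the threshold is an assumption)."""
    if delivered_text is None:
        return "rejected"
    if edit_similarity(ai_text, delivered_text) >= keep_threshold:
        return "adopted"
    return "rewritten"


def adoption_rate(pairs: list) -> float:
    """Share of (ai_text, delivered_text) pairs labelled 'adopted'."""
    if not pairs:
        raise ValueError("no suggestions to score")
    labels = [classify_edit(ai, delivered) for ai, delivered in pairs]
    return labels.count("adopted") / len(labels)


pairs = [
    ("Add a citation here.", "Add a citation here."),
    ("Add a citation here.", "Your claim about ATP needs support; cite the lab manual."),
    ("Fix the comma splice.", None),
]
print([classify_edit(ai, d) for ai, d in pairs])  # ['adopted', 'rewritten', 'rejected']
```

Logging these labels per TA is one way to study the friction between algorithm and expertise that the closing section of this article points to.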

The study’s evaluation indicates that pairing AI with human instructors improves both the quality and the effectiveness of student feedback. The collaborative feedback scored 0.893 for actionability (whether students can tell what steps to take) and 0.926 for fostering independent learning. Both scores are higher than those achieved by solely human-generated feedback. This suggests that AI’s targeted guidance, refined by an instructor’s expertise, helps students become more self-directed learners. Rather than simply offering corrections, the feedback encourages students to analyze their own work and identify areas for growth.

The feedback system also extends to Writing-to-Learn (WTL) assignments. These assignments prioritize developing understanding and thought processes over polished final products, which poses particular challenges for automated feedback. The system shifts its focus accordingly, offering guidance on the clarity of reasoning, the strength of evidence, and the logical flow of ideas, the elements central to WTL’s pedagogical goals. This suggests the system can support a broader range of learning activities, moving beyond error detection toward deeper cognitive engagement and iterative improvement in student thinking, whatever the assignment’s final deliverable.

![AI-mediated feedback significantly improved the quality of student revisions, as evidenced by a substantial increase in scores from initial to final drafts ([latex]p<0.001^{***}[/latex]).](https://arxiv.org/html/2602.16820v1/img/histogram_quality.png)

This study fits a familiar pattern: tools intended to enhance human capability often become another layer of complexity. The research, demonstrating improved revisions through AI-mediated feedback, feels less like innovation and more like sophisticated patching. It’s a temporary reprieve from the fundamental problem of assessing subjective work. The bug tracker will inevitably fill with edge cases the algorithm misses, and the teaching assistants will spend more time refining AI suggestions than providing original thought. As Brian Kernighan observed, “Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.” The same principle applies here: striving for perfect automated feedback distracts from the messy, nuanced reality of evaluating student writing. It’s not about better feedback; it’s about managing the inevitable fallout.

What’s Next?

The demonstrated improvement in revision quality, while statistically sound, merely shifts the location of failure. The system doesn’t eliminate problematic writing; it externalizes the cost of remediation. Future iterations will inevitably reveal edge cases – the nuanced argument the AI flags as incoherent, the perfectly valid stylistic choice deemed ‘incorrect’ by the rubric-bound algorithm. Tests are, after all, a form of faith, not certainty.

The real challenge lies not in refining the AI’s suggestions, but in understanding what constitutes ‘quality’ in writing itself. This research sidesteps the question of whether the revisions move students toward genuinely better communication, or simply toward satisfying the automated expectations of a grading system. Scaling this approach demands a clear-eyed assessment of that trade-off. The potential for unintended consequences – a homogenization of voice, a reliance on algorithmic approval – feels substantial.

One suspects the most valuable data won’t emerge from the revisions themselves, but from the teaching assistants’ quiet edits after the AI has had its say. Those corrections, born of human judgment overriding automated suggestion, will chart a more honest map of the system’s limitations. It is there, in the friction between algorithm and expertise, that the true costs – and perhaps the genuine benefits – will become apparent.

Original article: https://arxiv.org/pdf/2602.16820.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-22 01:24