Author: Denis Avetisyan

A new framework leverages Bayesian optimization to efficiently refine policies within complex simulations, offering a powerful tool for addressing real-world environmental issues.

This review details a novel approach combining Bayesian optimization and Gaussian process models for policy optimization and sensitivity analysis within agent-based models.

Understanding and managing coupled human-environment systems presents a fundamental challenge due to their inherent complexity and emergent behaviors. This is addressed in ‘Environmental policy in the context of complex systems: Statistical optimization and sensitivity analysis for ABMs’, which introduces a novel statistical framework to accelerate policy optimization within computationally intensive agent-based models (ABMs). By combining Bayesian optimization with Gaussian process models, this approach efficiently identifies optimal and interpretable policies, demonstrated through a resource harvesting simulation using the classic Sugarscape model. Can this framework unlock more effective and robust environmental policies by bridging the gap between complex systems modeling and practical implementation?

The Inevitable Complexity of Emergent Systems

Many systems, from ant colonies and stock markets to the spread of disease and urban traffic patterns, display behaviors that arise not from centralized control but from the local interactions of individual components. These phenomena, known as emergent behaviors, are notoriously difficult to predict using traditional analytical methods, which often rely on simplifying assumptions of linearity and equilibrium. The sheer complexity of these interactions, in which countless individual actions combine to produce a system-wide outcome, can overwhelm conventional modeling techniques. For example, predicting the precise route a driver will take during rush hour, or anticipating a sudden shift in consumer sentiment, becomes nearly impossible when considering the multitude of influencing factors and individual choices. This unpredictability highlights the limitations of approaches that try to understand the whole by dissecting it into isolated parts, and calls for new tools capable of capturing the dynamic, decentralized nature of these complex systems.

Agent-based modeling provides a computational framework for dissecting the intricate relationships that drive collective behaviors in complex systems. Rather than relying on aggregate data or top-down equations, this approach simulates the actions of individual, autonomous agents (people, animals, or even molecules) and observes how their interactions give rise to macroscopic patterns. This bottom-up methodology is particularly valuable when analytical solutions are intractable, or when heterogeneity among individuals significantly influences system-level outcomes. By defining agent behaviors and environmental rules, researchers can explore a vast design space of ‘what-if’ scenarios, identifying critical thresholds, unintended consequences, and potential interventions with a level of nuance often inaccessible to traditional modeling techniques. The resulting simulations are less predictions than explorations of plausible futures, offering insights into the dynamics of adaptation, innovation, and resilience within the system.

Agent-Based Modeling (ABM) diverges from traditional modeling by representing a system not as a monolithic entity, but as a collection of independent, decision-making entities – the agents. Each agent operates with its own defined behaviors and rules, interacting with its environment and other agents without centralized control. This bottom-up approach allows researchers to simulate complex dynamics that arise from these interactions, revealing emergent patterns and system-level outcomes often impossible to predict through conventional methods. Consequently, ABM proves particularly valuable for exploring the potential impacts of various policies or interventions, offering a virtual testing ground to assess consequences and refine strategies before real-world implementation – from understanding the spread of infectious diseases to optimizing urban planning and forecasting economic trends.

Policy as Navigation in a Sea of Interactions

Policy Optimization within Agent-Based Models (ABMs) is the process of choosing values for policy parameters that best achieve predefined objectives. This involves defining a quantifiable outcome metric, representing the desired system behavior, and then systematically adjusting parameter values to maximize it. The core idea is to treat the ABM as a complex function whose inputs are the parameters and whose output is the outcome metric; the goal is to find the inputs that yield the highest output. This is often achieved through iterative experimentation: different parameter combinations are tested, and the results are used to refine the search for good settings. The approach is particularly valuable where analytical solutions are intractable because of the ABM's complexity and the interactions between its agents.
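To make the "ABM as a function" framing concrete, here is a minimal Python sketch. `run_abm` is a hypothetical stand-in, not the paper's Sugarscape code: a toy function with a known peak at a policy value of 0.3, plus seed-dependent noise to mimic stochastic simulation runs. `objective` averages replicate runs into one outcome metric, and `random_search` is the naive baseline that smarter optimizers are usually compared against.

```python
import random
import statistics


def run_abm(policy: float, seed: int) -> float:
    """Hypothetical stand-in for one stochastic ABM run.

    A real ABM (e.g. Sugarscape) would simulate many interacting agents here.
    This toy peaks at policy = 0.3 and adds seed-dependent noise.
    """
    rng = random.Random(seed)
    return -(policy - 0.3) ** 2 + rng.gauss(0.0, 0.01)


def objective(policy: float, n_reps: int = 10) -> float:
    """Outcome metric: mean over replicate runs with seeds 0..n_reps-1.

    Reusing the same seeds for every policy (common random numbers) makes
    policy comparisons less noisy; in this toy the noise is identical across
    policies, so it drops out of comparisons entirely.
    """
    return statistics.fmean(run_abm(policy, seed) for seed in range(n_reps))


def random_search(n_evals: int, seed: int = 0) -> tuple[float, float]:
    """Naive baseline: sample policies uniformly in [0, 1], keep the best."""
    rng = random.Random(seed)
    best_policy, best_value = 0.0, float("-inf")
    for _ in range(n_evals):
        candidate = rng.random()
        value = objective(candidate)  # with a real ABM, each call is expensive
        if value > best_value:
            best_policy, best_value = candidate, value
    return best_policy, best_value


best_policy, best_value = random_search(n_evals=50)
print(f"best policy ~ {best_policy:.3f}, objective {best_value:.4f}")
```

With a real ABM the noise would only partly cancel between policies, and every call to `run_abm` would be a full simulation, which is what makes the number of evaluations the quantity to economize.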

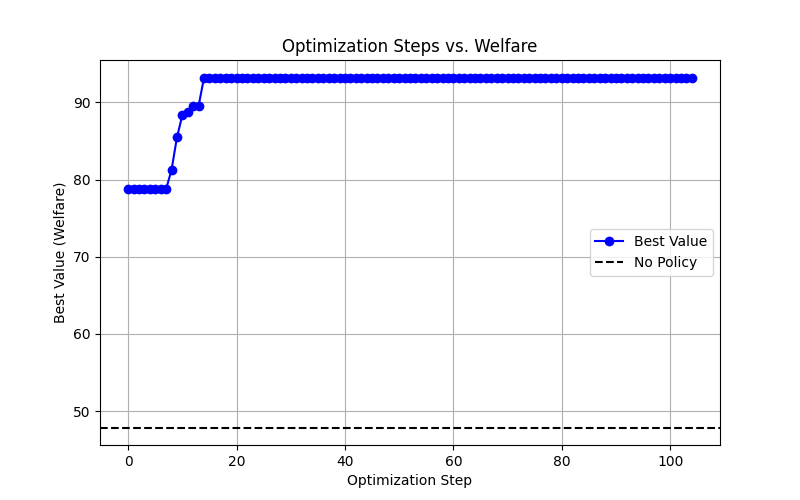

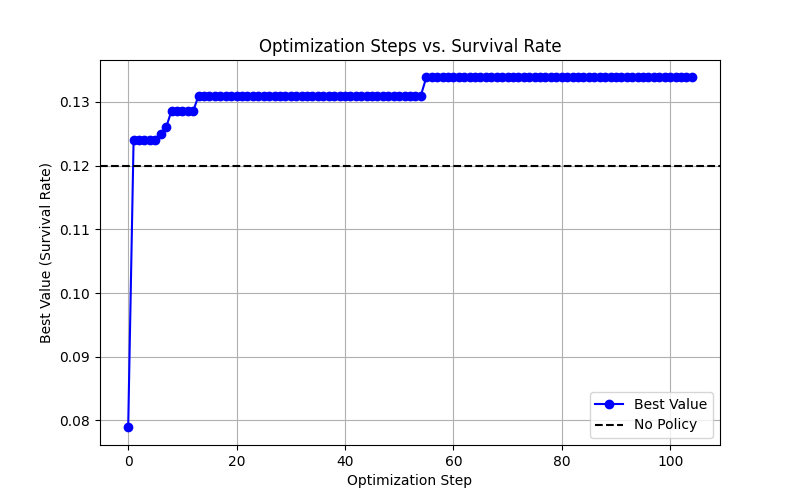

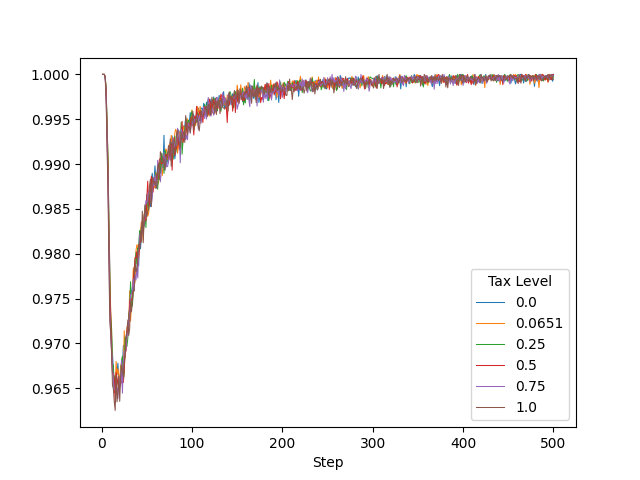

Bayesian Optimization addresses the challenge of efficiently identifying good parameter settings within Agent-Based Models (ABMs) through a sequential design strategy. The method treats the ABM as a ‘black box’: its internal mechanisms are not used, only its input-output relationship. At each step, the algorithm fits a probabilistic surrogate model (here, a Gaussian process) to the evaluations made so far and proposes the next parameter set, balancing exploration of uncertain regions against exploitation of promising ones. Empirical results indicate that this approach typically converges, meaning the best-found policy stops improving, within approximately 60 iterations, a significant reduction in computational cost compared with exhaustive or random search.
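The loop below is a compact sketch of Bayesian optimization with a Gaussian process surrogate and the expected-improvement (EI) acquisition function, written in plain Python so it has no dependencies. It is not the paper's implementation: the one-dimensional policy space, the RBF kernel with a fixed length scale of 0.15, the noise level, the candidate grid, and the iteration counts are all illustrative assumptions. A real study would more likely use an established library and fit the kernel hyperparameters to the data.

```python
import math
import random


def kernel(a: float, b: float, length: float = 0.15) -> float:
    """Squared-exponential (RBF) kernel with unit signal variance."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)


def cholesky(K):
    """Lower-triangular L with L @ L.T == K (K must be positive definite)."""
    n = len(K)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][i] = math.sqrt(K[i][i] - s)
            else:
                L[i][j] = (K[i][j] - s) / L[j][j]
    return L


def solve_lower(L, b):
    """Forward substitution: solve L x = b."""
    x = []
    for i, row in enumerate(L):
        x.append((b[i] - sum(row[k] * x[k] for k in range(i))) / row[i])
    return x


def solve_upper_t(L, b):
    """Back substitution: solve L.T x = b."""
    n = len(L)
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def gp_posterior(X, y, noise=1e-4):
    """Fit a GP with fixed hyperparameters; return predict(x) -> (mean, std)."""
    prior_mean = sum(y) / len(y)  # constant prior mean = sample mean
    K = [[kernel(a, b) + (noise if i == j else 0.0) for j, b in enumerate(X)]
         for i, a in enumerate(X)]
    L = cholesky(K)
    alpha = solve_upper_t(L, solve_lower(L, [v - prior_mean for v in y]))

    def predict(x):
        k = [kernel(x, xi) for xi in X]
        mean = prior_mean + sum(ki * ai for ki, ai in zip(k, alpha))
        v = solve_lower(L, k)
        var = max(1.0 - sum(vi * vi for vi in v), 1e-12)
        return mean, math.sqrt(var)

    return predict


def expected_improvement(mean: float, std: float, best: float) -> float:
    """EI for maximization: E[max(f(x) - best, 0)] under N(mean, std^2)."""
    z = (mean - best) / std
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (mean - best) * cdf + std * pdf


def bayes_opt(f, n_init=4, n_iter=16, seed=0):
    """Maximize f on [0, 1]: random initial design, then one EI pick per step."""
    rng = random.Random(seed)
    X = [rng.random() for _ in range(n_init)]
    y = [f(x) for x in X]
    grid = [i / 200 for i in range(201)]  # candidate policies
    for _ in range(n_iter):
        predict = gp_posterior(X, y)
        best = max(y)
        x_next = max(grid, key=lambda x: expected_improvement(*predict(x), best))
        X.append(x_next)
        y.append(f(x_next))  # in practice: one expensive ABM evaluation
    i_best = max(range(len(y)), key=y.__getitem__)
    return X[i_best], y[i_best]
```

Calling `bayes_opt(lambda x: -(x - 0.3) ** 2)` on this toy quadratic returns a best-found policy within 0.05 of the true optimum at 0.3 after 20 evaluations. The roughly 60 iterations reported in the text presumably reflect a noisier, multi-parameter problem in which each evaluation is a full ABM run.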

The computational expense of Agent-Based Modeling (ABM) often calls for efficient optimization strategies. Evaluating a single parameter set can take substantial processing time: generating the complete data for each configuration requires approximately 11.5 hours. Evaluations this long make exhaustive search impractical. Techniques like Bayesian Optimization, which minimize the number of required evaluations, are therefore well suited to ABM parameter tuning, since they can converge with a limited number of computationally expensive simulations.
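Taking the text's figure of roughly 11.5 hours per configuration at face value, a back-of-the-envelope calculation shows why exhaustive search quickly gets out of reach. The sketch assumes runs execute one after another, that one Bayesian-optimization iteration costs exactly one ABM evaluation (initial design points are ignored), and that a grid search uses a hypothetical 10 levels per policy parameter.

```python
HOURS_PER_EVAL = 11.5  # per-configuration data-generation time quoted in the text


def grid_search_hours(levels_per_param: int, n_params: int) -> float:
    """Exhaustive grid: every combination of levels is simulated once."""
    return HOURS_PER_EVAL * levels_per_param ** n_params


def bo_hours(n_iterations: int = 60) -> float:
    """Sequential BO, assuming one ABM evaluation per iteration."""
    return HOURS_PER_EVAL * n_iterations


for d in (2, 3, 4):
    print(f"{d} params: grid {grid_search_hours(10, d):>9.0f} h, BO {bo_hours():.0f} h")
```

Two parameters at 10 levels already cost 1,150 hours versus 690 for 60 BO iterations, and each extra parameter multiplies the grid cost by ten. Parallel hardware shortens wall-clock time for both, although a grid parallelizes more easily than a sequential BO loop.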

Uncovering the Hidden Levers of System Behavior

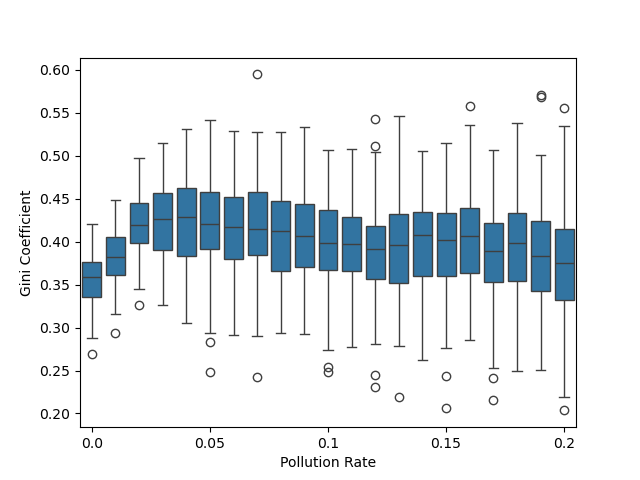

Sensitivity analysis within the Agent-Based Model (ABM) systematically assesses the impact of alterations to defined state variables on the model’s resulting behavior. This involves perturbing individual state variables – such as population size, resource availability, or environmental conditions – and observing the corresponding changes in key outcome metrics. By quantifying these relationships, sensitivity analysis reveals which state variables exert the most influence on the ABM’s overall results, allowing for targeted investigation of critical drivers and improved understanding of system dynamics. The magnitude and direction of these changes are recorded to determine the relative importance of each variable.
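A one-at-a-time (OAT) perturbation scheme is the simplest way to implement the procedure just described. The sketch perturbs each state variable by ±10% around a baseline while holding the others fixed, records the signed change in the outcome, and ranks variables by magnitude. `toy_outcome`, the variable names, and the baseline values are hypothetical placeholders for an ABM outcome metric; a real analysis would average several replicate runs per perturbation and might prefer a global, variance-based method when variables interact.

```python
BASELINE = {
    "population": 400.0,
    "resource_growth": 1.0,
    "pollution_rate": 1.0,
    "metabolism": 2.0,
}


def toy_outcome(state: dict[str, float]) -> float:
    """Hypothetical outcome metric standing in for averaged ABM runs."""
    return (10.0 * state["resource_growth"]
            - 4.0 * state["pollution_rate"] ** 2
            - 2.0 * state["metabolism"]
            + 0.1 * state["population"] / 100.0)


def oat_sensitivity(model, baseline, rel_step=0.1):
    """Perturb one variable at a time by +/- rel_step (relative), others fixed.

    Returns half the central difference: the average outcome change for a
    rel_step relative perturbation, with the sign giving the direction.
    """
    effects = {}
    for name, value in baseline.items():
        up = {**baseline, name: value * (1 + rel_step)}
        down = {**baseline, name: value * (1 - rel_step)}
        effects[name] = (model(up) - model(down)) / 2
    return effects


def rank_by_influence(effects):
    """Variable names ordered from largest to smallest absolute effect."""
    return sorted(effects, key=lambda k: abs(effects[k]), reverse=True)


effects = oat_sensitivity(toy_outcome, BASELINE)
print(rank_by_influence(effects))
```

For this toy the ranking is resource_growth, pollution_rate, metabolism, population, with pollution and metabolism lowering the outcome; that ordering is what tells a modeler where optimization effort is best spent.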

Prioritizing optimization efforts based on variable influence is a core principle of efficient model calibration. Rather than attempting to adjust all parameters within an agent-based model (ABM), focusing on those with the greatest impact on desired outcomes significantly reduces computational cost and improves the speed of convergence. This targeted approach leverages the understanding that certain state variables exert disproportionately large effects on system behavior, meaning adjustments to these variables will yield more substantial changes in model results than adjustments to less sensitive parameters. Consequently, resources can be allocated strategically, enhancing both the efficiency and the robustness of the overall optimization strategy.

Sensitivity analysis conducted within the agent-based model (ABM) demonstrated statistically significant relationships (p < 0.05) between optimal policy outcomes and specific state variables. In particular, pollution rate and agent metabolism were identified as key drivers of the efficacy of different policy choices. The optimal policy is therefore not static but contingent on the values of these state variables. Incorporating them into the policy optimization strategy improves both efficiency, by focusing computational resources on the most impactful parameters, and robustness, helping the resulting policies remain effective under varying conditions.
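The summary above does not say which statistical test produced the p < 0.05 result, so the sketch below swaps in a simple, assumption-light choice: estimate the optimal policy at several values of one state variable, then run a permutation test on the correlation between the two. `outcome` is a hypothetical noisy toy in which the best policy drifts upward with pollution; it stands in for averaged ABM runs and is not the paper's model.

```python
import math
import random
import statistics


def outcome(policy: float, pollution: float, rng: random.Random) -> float:
    """Hypothetical noisy ABM outcome whose best policy rises with pollution."""
    return -(policy - (0.2 + 0.5 * pollution)) ** 2 + rng.gauss(0.0, 0.002)


def optimal_policy(pollution: float, rng: random.Random, grid_size: int = 51) -> float:
    """Grid search over policies in [0, 1], one noisy evaluation per point."""
    grid = [i / (grid_size - 1) for i in range(grid_size)]
    return max(grid, key=lambda p: outcome(p, pollution, rng))


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def permutation_pvalue(xs, ys, n_perm=2000, seed=0):
    """Two-sided permutation test of H0: no association between xs and ys."""
    rng = random.Random(seed)
    observed = abs(pearson(xs, ys))
    shuffled = list(ys)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if abs(pearson(xs, shuffled)) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)


rng = random.Random(1)
pollution_levels = [i / 20 for i in range(21)]
best_policies = [optimal_policy(x, rng) for x in pollution_levels]
print(pearson(pollution_levels, best_policies),
      permutation_pvalue(pollution_levels, best_policies))
```

A small p-value says the optimal policy moves with the state variable far more than random shuffling would produce, which is the sense in which the optimal policy is contingent on state.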

Witnessing the Ripple Effects of Policy Decisions

The Sugarscape model demonstrates how seemingly simple policies can dramatically reshape the well-being and longevity of its agents. Policies such as ProductionCap, which limits individual resource acquisition, and TradeTax, which levies a cost on exchanges, directly influence the amount of resources available to each agent, consequently affecting their Welfare – a measure of accumulated resources – and their SurvivalRate, the proportion of agents remaining active in the simulation. These policies don’t operate in isolation; their effects ripple through the agent-based system, creating complex interactions and highlighting the interconnectedness of economic and environmental factors within a simulated society. The model allows researchers to explore how different policy levers can be adjusted to achieve desired outcomes, offering insights into the potential consequences of real-world interventions.
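The two policy levers can be pictured as small modifications to the agents' update rules. The sketch below is illustrative only: the exact semantics of ProductionCap and TradeTax in the paper's Sugarscape variant are not given here, so the per-step cap, the tax withheld from a one-way transfer (rather than Sugarscape's sugar-for-spice exchange), and the `Agent` fields are assumptions. `welfare` and `survival_rate` follow the text's definitions: accumulated resources, and the share of agents still active.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    sugar: float
    alive: bool = True


def harvest(agent: Agent, available: float, production_cap: float) -> float:
    """ProductionCap (assumed rule): gather at most production_cap per step."""
    gathered = min(available, production_cap)
    agent.sugar += gathered
    return gathered


def trade(seller: Agent, buyer: Agent, amount: float, trade_tax: float) -> float:
    """TradeTax (assumed rule): a fraction of each transfer is withheld.

    Returns the tax collected; whether it is destroyed or redistributed
    is a separate modelling choice.
    """
    amount = min(amount, seller.sugar)
    seller.sugar -= amount
    buyer.sugar += amount * (1.0 - trade_tax)
    return amount * trade_tax


def welfare(agents: list[Agent]) -> float:
    """Accumulated resources held by agents still in the simulation."""
    return sum(a.sugar for a in agents if a.alive)


def survival_rate(agents: list[Agent]) -> float:
    """Share of agents still active."""
    return sum(a.alive for a in agents) / len(agents)
```

Tightening `production_cap` or raising `trade_tax` lowers what individual agents can accumulate, which is the channel through which these levers reach Welfare and SurvivalRate.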

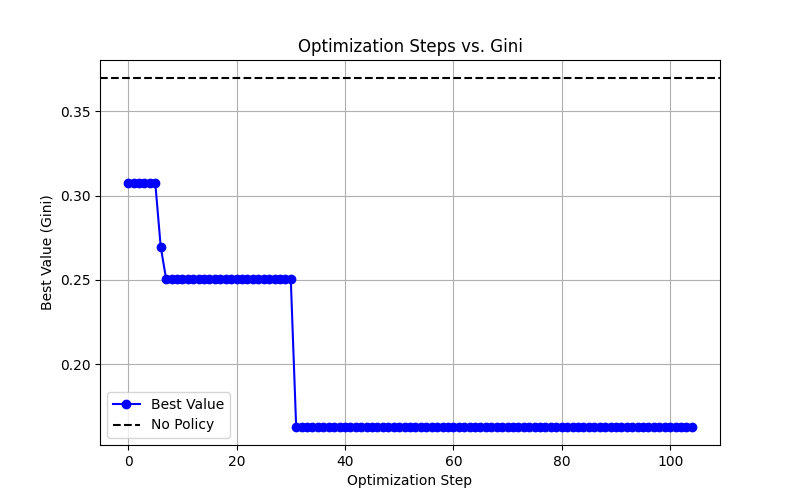

Policy evaluation within the Sugarscape model extends beyond simply maximizing aggregate welfare or survival rates; a comprehensive approach also necessitates consideration of distributional effects. Optimization algorithms can be specifically designed to balance these often-competing objectives, seeking policies that not only enhance overall system performance but also promote greater equity among agents. Metrics like the Gini coefficient, which quantifies income inequality – or, in this case, resource distribution – become crucial indicators during the optimization process. By incorporating such measures, the model aims to identify policies that minimize disparities and ensure a more just allocation of resources, moving beyond purely utilitarian outcomes to address concerns of fairness and social well-being. This allows for a nuanced assessment of policy impacts, revealing whether gains are broadly shared or concentrated among a select few.
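For reference, the Gini coefficient of agents' holdings can be computed from the values sorted in ascending order as G = 2·Σ i·x_(i) / (n·Σ x) - (n + 1)/n, with ranks i = 1..n. The sketch pairs it with a weighted score that trades mean welfare and survival against inequality; the linear form and weights of `policy_score` are hypothetical choices rather than the paper's objective, and in practice each term would first be normalized to a comparable scale.

```python
def gini(values: list[float]) -> float:
    """Gini coefficient of non-negative holdings (0 = perfect equality).

    Uses the sorted-values form 2*sum(i * x_(i)) / (n * sum(x)) - (n + 1) / n,
    with ranks i = 1..n over ascending values.
    """
    xs = sorted(values)
    n, total = len(xs), sum(xs)
    if n == 0 or total == 0:
        return 0.0
    weighted = sum(i * x for i, x in enumerate(xs, start=1))
    return 2.0 * weighted / (n * total) - (n + 1) / n


def policy_score(wealth: list[float], survival: float,
                 w_welfare: float = 1.0, w_survival: float = 1.0,
                 w_gini: float = 1.0) -> float:
    """Hypothetical scalarized objective: reward welfare and survival,
    penalize inequality. Terms should be normalized to similar scales."""
    mean_wealth = sum(wealth) / len(wealth)
    return w_welfare * mean_wealth + w_survival * survival - w_gini * gini(wealth)
```

For example, `gini([0, 0, 0, 10])` gives 0.75 (one agent holds everything among four), while equal holdings give 0.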

Evaluations within the Sugarscape model consistently show that the implemented policies, such as production caps and trade taxes, yield better outcomes than a scenario with no intervention. Notably, Bayesian Optimization achieved a 2.5-fold reduction in the Gini coefficient, a key measure of inequality, compared with purely random policy selection, indicating a marked improvement in how equitably resources are distributed. The model also goes beyond simple economic indicators by incorporating a negative externality, pollution, which makes the policy evaluation more realistic and gives a more holistic assessment of societal well-being.

Toward Simulations That Reflect the Messiness of Reality

Agent heterogeneity and initial endowment represent fundamental forces in shaping complex system dynamics. Simulations often assume uniformity, yet real-world populations exhibit significant variation in capabilities, preferences, and starting resources. This variation isn’t merely cosmetic; it profoundly influences individual behaviors and, consequently, emergent patterns at the system level. An agent’s initial endowment – the resources it possesses at the start of a simulation – dictates its opportunities and constraints, impacting its trajectory and interactions with others. Consequently, agents with differing endowments may adopt drastically different strategies, leading to uneven outcomes and potentially reinforcing existing inequalities. Recognizing and incorporating these inherent differences is therefore critical for building simulations that accurately reflect the nuances of real-world systems and for developing policies that account for diverse needs and vulnerabilities.
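A heterogeneous population is easy to generate by drawing each agent's attributes independently. The ranges below (initial endowment 5 to 25, metabolism 1 to 4, vision 1 to 6, all integers) loosely follow classic Sugarscape defaults, but treat them as assumptions rather than the paper's settings. `runway` is a hypothetical helper showing how endowment and metabolism jointly set how long an agent can last with no income, one concrete way that unequal starting points shape trajectories.

```python
import random
from dataclasses import dataclass


@dataclass
class Agent:
    endowment: int   # initial sugar holdings
    metabolism: int  # sugar burned per step
    vision: int      # how many cells ahead the agent can see


def make_population(n: int, seed: int = 0,
                    endowment=(5, 25), metabolism=(1, 4),
                    vision=(1, 6)) -> list[Agent]:
    """Draw heterogeneous agents; each attribute is uniform over an integer range."""
    rng = random.Random(seed)
    return [Agent(rng.randint(*endowment), rng.randint(*metabolism),
                  rng.randint(*vision))
            for _ in range(n)]


def runway(agent: Agent) -> float:
    """Steps an agent could survive with no harvest at all."""
    return agent.endowment / agent.metabolism


population = make_population(1000)
runways = sorted(runway(a) for a in population)
print(f"runway range: {runways[0]:.2f} to {runways[-1]:.2f} steps")
```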

Simulations often treat agents as abstract entities with unlimited resources, yet biological systems are fundamentally constrained by metabolic rates – the speed at which organisms consume energy and materials. Incorporating metabolism into agent-based models introduces a critical layer of realism, forcing agents to actively manage resources to survive and reproduce. This necessitates trade-offs between activities like foraging, reproduction, and defense, mirroring the pressures faced by living organisms. Consequently, the simulation captures emergent behaviors driven by resource scarcity and energy expenditure, such as competition for limited supplies, the evolution of efficient resource utilization strategies, and population dynamics reflecting birth and death rates tied to energy balance. By acknowledging these biophysical limitations, the model generates more plausible scenarios and allows for the exploration of how metabolic constraints shape complex systems.
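The survival pressure created by metabolism can be seen in a stripped-down loop with no spatial grid: each step, every living agent gathers a random harvest, burns its metabolism, and dies once its stock reaches zero. The uniform harvest distribution and the death rule are simplifying assumptions for illustration, not the paper's Sugarscape dynamics.

```python
import random


def simulate_survival(endowments, metabolisms, steps, mean_harvest, seed=0):
    """Toy metabolism loop returning the fraction of agents still alive.

    Each step a living agent gathers Uniform(0, 2 * mean_harvest) sugar, then
    burns its metabolism; it dies once its stock reaches zero or below.
    """
    rng = random.Random(seed)
    stock = [float(e) for e in endowments]
    alive = [True] * len(stock)
    for _ in range(steps):
        for i, m in enumerate(metabolisms):
            if alive[i]:
                stock[i] += rng.uniform(0.0, 2.0 * mean_harvest) - m
                if stock[i] <= 0:
                    alive[i] = False
    return sum(alive) / len(alive)


low = simulate_survival([10] * 100, [1] * 100, steps=50, mean_harvest=1.5)
high = simulate_survival([10] * 100, [4] * 100, steps=50, mean_harvest=1.5)
print(f"survival with metabolism 1: {low:.2f}, with metabolism 4: {high:.2f}")
```

With the same endowment and environment, agents whose metabolism exceeds the best possible harvest are guaranteed to die out, while low-metabolism agents drift upward and almost all survive.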

The development of effective policies often relies on simplified models, yet real-world populations exhibit significant heterogeneity in attributes like initial resources and metabolic rates. Integrating these factors into the optimization framework, the very process used to design those policies, allows for a more nuanced evaluation of potential outcomes. Agent-based simulations that account for varied endowments and consumption rates reveal how policies perform not just on average, but across diverse demographic groups. This approach moves beyond simply maximizing overall welfare, enabling policies designed specifically to mitigate inequalities and to remain robust under varying conditions. Consequently, solutions generated through this integrated framework are more likely to be resilient and equitable, addressing the needs of a wider spectrum of individuals within the simulated environment and offering a pathway toward more just and sustainable outcomes.
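The idea of judging policies across groups rather than on average can be sketched as a max-min rule: split agents into endowment-quantile groups, score each policy by its worst-off group, and compare that choice with the one the plain average would make. Worst-group scoring is one assumed way to encode "performance across groups", not necessarily the paper's, and the policies "A" and "B" with their outcome lists are made-up illustrative numbers.

```python
def group_means(endowments, outcomes, n_groups=2):
    """Mean outcome within endowment-quantile groups, poorest group first.

    Assumes len(endowments) >= n_groups so no group is empty.
    """
    order = sorted(range(len(endowments)), key=lambda i: endowments[i])
    n = len(order)
    means = []
    for g in range(n_groups):
        members = order[g * n // n_groups:(g + 1) * n // n_groups]
        means.append(sum(outcomes[i] for i in members) / len(members))
    return means


def pick_policy(endowments, outcomes_by_policy, robust=True):
    """Choose the policy with the best worst-group mean (robust=True)
    or the best overall mean (robust=False)."""
    def score(name):
        outcomes = outcomes_by_policy[name]
        if robust:
            return min(group_means(endowments, outcomes))
        return sum(outcomes) / len(outcomes)
    return max(outcomes_by_policy, key=score)


endowments = [1, 2, 3, 4]
outcomes_by_policy = {"A": [0, 0, 10, 10], "B": [3, 3, 4, 4]}
print(pick_policy(endowments, outcomes_by_policy, robust=False))
print(pick_policy(endowments, outcomes_by_policy, robust=True))
```

Policy "A" wins on average (5.0 versus 3.5) but leaves the poorer half with nothing, so the worst-group rule prefers "B"; this is the kind of trade-off an average-only objective hides.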

The pursuit of optimal environmental policies, as explored within the framework of agent-based modeling, reveals a familiar irony. The study diligently seeks to refine interventions within a simulated Sugarscape, yet echoes a timeless truth: every attempt to impose order upon a complex system introduces new vulnerabilities. As René Descartes observed, “Cogito, ergo sum” (I think, therefore I am). This seemingly simple declaration speaks to the very heart of systems design. The act of modeling, of defining agents and their interactions, is itself an assertion of existence, a creation that, like all creations, is subject to entropy. The framework detailed here, with its Bayesian optimization and sensitivity analysis, is not a solution but a temporary alignment of forces, a compromise frozen in time. Dependencies will shift, unforeseen consequences will emerge, and the ‘optimal’ policy will inevitably require recalibration, a constant reminder that systems aren’t built; they grow, and often in unexpected directions.

What’s Next?

The pursuit of optimized policy within agent-based models, as demonstrated by this work, isn’t a quest for control, but a carefully constructed delay of inevitable divergence. The framework offered – Bayesian optimization coupled with Gaussian processes – merely refines the instruments with which one postpones chaos, not abolishes it. It clarifies, with statistical rigor, the pathways to temporary stability, knowing full well that each optimized parameter is, in effect, a seed for future fragility. There are no best practices – only survivors; those policies which, by accident or design, persist longest against the background radiation of unforeseen consequences.

The true limitation isn’t computational cost, nor model fidelity, but the fundamental assumption that a ‘good’ policy can be defined in the first place. Such definitions are transient, anchored to specific initial conditions and a limited understanding of the system’s attractor landscape. Future work must embrace uncertainty not as a nuisance to be minimized, but as the defining characteristic of complex systems. Exploration of policy robustness – its capacity to degrade gracefully under perturbation – will prove far more valuable than the pursuit of peak performance in idealized scenarios.

Ultimately, order is just cache between two outages. The focus will inevitably shift from optimization – a search for the optimal trajectory – to adaptation. Agent-based models, therefore, should be less concerned with predicting the future, and more with simulating the capacity of systems to absorb, and even benefit from, unexpected shocks. The goal isn’t to engineer resilience, but to cultivate it.

Original article: https://arxiv.org/pdf/2602.17079.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-21 15:30