Author: Denis Avetisyan

New research demonstrates a method for transferring grasp planning from traditional rigid robot grippers to the more versatile, but challenging, domain of soft robotics.

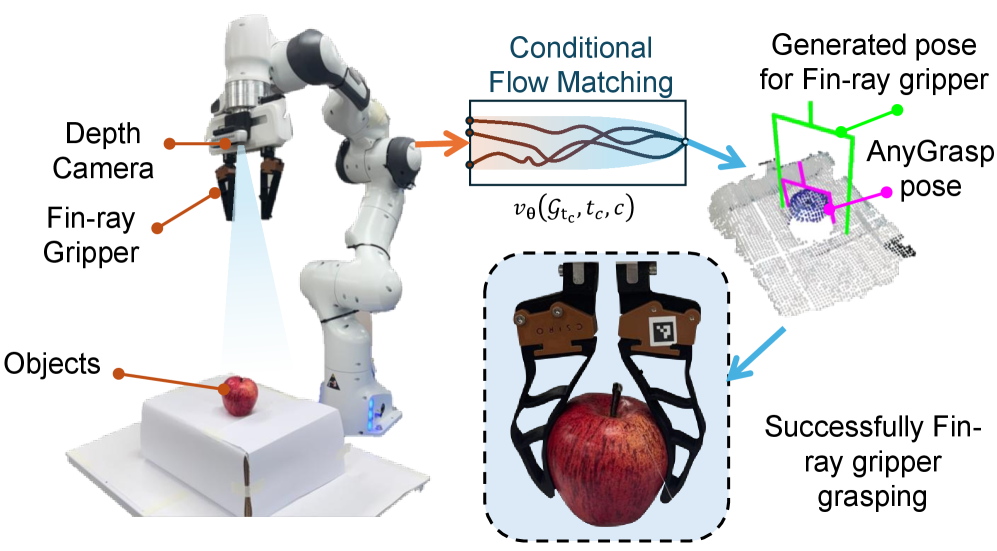

![The Conditional Flow Matching (CFM) framework synthesizes grasps by learning a continuous transformation, guided by a conditional velocity field [latex]\mathbf{v}_{\theta}[/latex], from initial rigid poses generated by AnyGrasp [latex]\mathcal{G}_{\text{Anygrasp}}[/latex] to target soft gripper poses [latex]\mathcal{G}_{\text{CFM}}[/latex], a process parameterized by a feed-forward MLP and conditioned on scene geometry encoded by a U-Net autoencoder into a latent vector.](https://arxiv.org/html/2602.17110v1/x2.png)

Conditional Flow Matching enables the effective adaptation of grasp poses for soft Fin-ray grippers, improving robustness and manipulation capabilities.

Despite advances in robotic manipulation, a performance gap persists when transferring grasp strategies between rigid and soft grippers due to differing compliance characteristics. The paper ‘Grasp Synthesis Matching From Rigid To Soft Robot Grippers Using Conditional Flow Matching’ addresses this gap with a framework that uses Conditional Flow Matching (CFM) to map grasp poses from rigid to soft Fin-ray grippers. The approach learns a continuous transformation conditioned on object geometry, which a U-Net autoencoder encodes into a latent vector, and improves grasp success rates on both seen and unseen objects, achieving up to 46% success for unseen objects compared to 25% with baseline rigid poses. Could this data-efficient methodology pave the way for more adaptable and scalable soft robotic manipulation systems?
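To make the idea of a learned "continuous transformation" concrete, here is a minimal sketch of the two pieces a flow-matching setup usually needs: a training target and an integrator. It assumes the common straight-line probability path, which the summary above does not confirm is the one used in the paper. The 6-D pose vectors, the function names, and the `oracle_field` stand-in for the trained MLP are all hypothetical, and interpolating orientation angles linearly is a simplification.

```python
def cfm_training_pair(rigid_pose, soft_pose, t):
    """Return the point on the straight-line path at time t and the
    velocity the network would be trained to predict at that point."""
    x_t = [(1.0 - t) * r + t * s for r, s in zip(rigid_pose, soft_pose)]
    v_target = [s - r for r, s in zip(rigid_pose, soft_pose)]
    return x_t, v_target


def integrate(velocity_field, start_pose, condition, steps=20):
    """Euler-integrate dx/dt = v(x, t, condition) from t=0 to t=1."""
    x = list(start_pose)
    dt = 1.0 / steps
    for k in range(steps):
        t = k * dt
        v = velocity_field(x, t, condition)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


# Hypothetical poses: (x, y, z, roll, pitch, yaw). Not data from the paper.
rigid = [0.40, 0.00, 0.20, 0.0, 3.14, 0.0]
soft = [0.42, 0.01, 0.17, 0.0, 3.00, 0.1]


def oracle_field(x, t, condition):
    """Stand-in for a trained network: the exact straight-line velocity."""
    return [s - r for r, s in zip(rigid, soft)]


x_t, v = cfm_training_pair(rigid, soft, t=0.5)
mapped = integrate(oracle_field, rigid, condition=None)
```

In a real system the `condition` argument would carry the latent vector produced from the depth image, and `oracle_field` would be replaced by a network fitted to many `(x_t, t, condition) -> v_target` pairs.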

The Limits of Precision: Why Robots Struggle to Grasp the Real World

Conventional robotic grasping strategies frequently depend on meticulously detailed, three-dimensional models of objects, a practice proving increasingly problematic outside of controlled laboratory environments. These precise models demand accurate prior knowledge – dimensions, weight distribution, surface characteristics – yet the real world rarely provides such clarity. A slight deviation from the expected shape, an unanticipated texture, or even subtle changes in lighting can render these models inaccurate, causing the robot to fail to secure a stable grasp. This brittleness stems from the reliance on perfect information; a small amount of uncertainty – common in everyday settings – cascades into significant errors, hindering a robot’s ability to reliably manipulate objects it hasn’t encountered precisely as predicted. Consequently, researchers are actively exploring methods that minimize dependence on pre-defined models, favoring approaches capable of adapting to unforeseen variations and incomplete data.

Grasp planning also falters when confronted with the ambiguities of real-world perception. Conventional methods typically demand complete and accurate information about an object’s shape and pose, yet robots often operate with incomplete data – a partially obscured view, a low-resolution scan, or sensor noise distorting the perceived geometry. This incomplete sensing significantly diminishes the reliability of grasp algorithms, as the robot cannot confidently identify stable grasp points. Success rates drop because even minor inaccuracies in the perceived object model can lead to collisions or unstable grasps, a critical limitation when deploying robots in dynamic and unpredictable environments. Consequently, research is increasingly focused on techniques that can infer object properties from limited data or robustly handle uncertainty in sensor readings.

![A data validation pipeline was used to train a grasp pose transformation model by comparing initial poses generated by AnyGrasp ([latex]\mathcal{G}_{Anygrasp}[/latex], magenta) with manually adjusted, successful soft gripper poses ([latex]\mathcal{G}_{CFM}[/latex], green) obtained through executing grasps on a variety of objects and lifting them, as demonstrated in the experimental setup.](https://arxiv.org/html/2602.17110v1/x3.png)

Reconstructing Reality: Generative Models as a Path to Robust Grasping

Generative models address the challenge of reconstructing 3D geometry from partial observations, a common problem in robotic manipulation. Incomplete data, arising from sensor occlusion or noise, hinders reliable grasp planning; generative models offer a solution by probabilistically inferring the missing geometric details. These models learn a distribution over plausible object shapes given the available data, allowing the system to generate complete representations even with significant data loss. This capability is crucial for robust grasp planning as it enables the robot to identify stable and feasible grasp points on the completed geometry, increasing success rates in cluttered environments and with partially visible objects.

A U-Net autoencoder is utilized to create a condensed representation of object geometry from depth images. The U-Net architecture, comprising an encoder and decoder, processes the input depth image and compresses it into a lower-dimensional vector, termed the condition vector. This vector encapsulates the object’s shape information in a latent space. The encoder downsamples the input through convolutional layers, extracting hierarchical features, while the decoder reconstructs a representation from these features. The resulting condition vector serves as a concise and efficient descriptor of the object’s form, suitable for subsequent processing by a diffusion model to complete missing geometric details.

Diffusion models operate by progressively adding Gaussian noise to data until it becomes pure noise, then learning to reverse this process to generate new samples. This is achieved through a Markov chain of diffusion steps, with a neural network trained to predict the noise added at each step. For geometry completion, this allows the model to iteratively refine an initial, noisy completion, guided by the observed partial data, resulting in high-quality and diverse reconstructions. Compared with Generative Adversarial Networks (GANs), which are prone to mode collapse, and Variational Autoencoders (VAEs), which often produce over-smoothed samples, diffusion models tend to offer better sample diversity and fidelity, which matters for grasp planning scenarios that require multiple plausible object completions.
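The forward (noising) half of this process has a simple closed form, sketched below using only the Python standard library. The linear schedule and its endpoints (1e-4 to 0.02 over 1000 steps) are widely used defaults rather than values taken from the article, and the three-element `x0` is a hypothetical stand-in for real geometry data.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def noise_sample(x0, t, alpha_bars, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return x_t, eps


rng = random.Random(0)
betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
x0 = [0.1, -0.2, 0.3]  # hypothetical data point
x_mid, eps = noise_sample(x0, 500, alpha_bars, rng)
```

The returned `eps` is what a denoising network would be trained to predict from `x_mid` and the step index; as the step index grows, `alpha_bars` shrinks toward zero and the sample approaches pure noise.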

Synthesizing Grasps with Inferred Geometry: Bridging the Perception-Action Gap

The incorporation of generated geometric data into the grasp synthesis pipeline facilitates robotic grasp planning under conditions of incomplete sensory input. This is achieved by providing the system with a reconstructed representation of the target object, even when full observation is unavailable. The pipeline uses this geometric information to predict feasible grasp poses, effectively mitigating the challenges posed by partial observations and enabling the robot to attempt grasps based on an estimated object shape. This approach allows for grasp planning in scenarios where direct, complete visual data is obstructed or insufficient, improving the robustness of the grasping process.

The AnyGrasp methodology, originally developed for grasp planning with rigid robotic grippers, was modified to incorporate the generated geometric priors. This adaptation involved augmenting the AnyGrasp cost function with terms that penalize grasps inconsistent with the observed or inferred geometry of the target object. Specifically, the geometric information constrains the possible grasp poses, reducing the search space and increasing the likelihood of generating stable grasps. By factoring in object shape and pose, the modified AnyGrasp algorithm demonstrates improved robustness to partial observations and enhances grasp stability compared to implementations relying solely on visual or tactile feedback.
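The article does not spell out the penalty terms, so the following is only an illustration of the general pattern of subtracting a geometry-consistency penalty from a base grasp score. Nothing here is AnyGrasp's actual API or cost function: the candidate dictionaries, the nearest-point distance penalty, and the `weight` value are all assumptions chosen for the example.

```python
import math


def nearest_distance(point, cloud):
    """Distance from a 3-D point to its closest point in the cloud."""
    return min(math.dist(point, q) for q in cloud)


def penalized_score(base_score, grasp_center, cloud, weight=5.0):
    """Base score minus a penalty that grows as the grasp center
    moves away from the observed or inferred geometry (hypothetical)."""
    return base_score - weight * nearest_distance(grasp_center, cloud)


def rank_grasps(candidates, cloud, weight=5.0):
    """Sort candidates from best to worst penalized score."""
    return sorted(
        candidates,
        key=lambda c: penalized_score(c["score"], c["center"], cloud, weight),
        reverse=True,
    )


# Hypothetical scene: two surface points and two grasp candidates.
cloud = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0)]
candidates = [
    {"name": "far", "score": 0.9, "center": (0.5, 0.0, 0.0)},
    {"name": "near", "score": 0.7, "center": (0.05, 0.0, 0.0)},
]
ranked = rank_grasps(candidates, cloud)
```

In this toy scene the candidate with the higher base score is demoted because its center lies far from any surface point, which is the kind of search-space pruning the paragraph describes.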

The described system successfully extends grasp synthesis beyond rigid grippers to include soft robotic manipulators, specifically the Fin-ray Gripper. Unlike rigid grippers, soft grippers necessitate detailed contact modeling to accurately predict interactions and maintain stability during grasping. Integration of geometric priors allows for effective control of these nuanced contact dynamics, resulting in an overall grasp success rate of 38% when utilizing the Fin-ray Gripper. This demonstrates the system’s capability to adapt to the unique challenges presented by compliant, deformable grasping surfaces.

![The proposed soft gripper poses [latex]\mathcal{G}_{soft}[/latex] demonstrate improved grasp success rates when benchmarked against the original AnyGrasp poses [latex]\mathcal{G}_{Anygrasp}[/latex].](https://arxiv.org/html/2602.17110v1/x4.png)

Demonstrated Resilience: Validating the System and Charting Future Directions

Rigorous experimental validation was conducted using a Franka Emika Panda robot equipped with an Intel RealSense D415 depth camera to assess the proposed grasping methodology. This robotic platform facilitated a controlled environment for evaluating the system’s ability to perceive and manipulate objects. Through a series of tests involving both seen and unseen objects in cluttered scenes, the setup demonstrated a marked improvement in grasping success. The combination of robotic precision and depth sensing proved crucial in showcasing the efficacy of the approach, paving the way for potential real-world applications in automated manipulation and robotic systems.

The robotic grasping system was able to acquire objects even when presented with incomplete visual information, a key requirement for operation in realistic, cluttered environments. In the experiments, it reached an overall grasp success rate of 38%, compared with 15% for the baseline AnyGrasp system, a gain of 23 percentage points. The capacity to function from partial views suggests the approach could be deployed in scenarios where complete object visibility is not guaranteed.

Experimental results demonstrate a substantial improvement in robotic grasping performance when utilizing this novel approach, particularly concerning the ability to generalize to novel objects. The system achieves a 34% success rate when grasping objects it has previously encountered, a significant leap from the 6% success rate of the baseline method. Importantly, performance extends beyond memorization; the system exhibits even greater proficiency when confronted with entirely unseen objects, attaining a 46% success rate compared to the baseline’s 25%. This indicates a capacity for robust perception and adaptable grasping strategies, moving beyond simple object recognition toward true robotic dexterity.

The robotic grasping system demonstrated a remarkable ability to generalize to novel objects, particularly excelling with specific geometries. Evaluations revealed a 100% success rate when grasping previously unseen cylindrical objects, a substantial improvement over the 75% achieved by the baseline method. Furthermore, the system successfully grasped 50% of unseen rectangular objects, a significant feat considering the baseline achieved zero success. This performance suggests the approach effectively learns features crucial for stable grasping, allowing for reliable manipulation of common object shapes even in unfamiliar scenarios and hinting at a strong foundation for future advancements in robotic dexterity.
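The reported figures are easier to compare as percentage-point gains. The short sketch below does that arithmetic on the success rates quoted in this article; the dictionary keys are informal labels, not terminology from the paper.

```python
# (proposed method %, baseline %) as quoted in this article
reported = {
    "overall": (38, 15),
    "seen objects": (34, 6),
    "unseen objects": (46, 25),
    "unseen cylindrical": (100, 75),
    "unseen rectangular": (50, 0),
}

# Absolute gain in percentage points for each category
gains = {name: method - baseline for name, (method, baseline) in reported.items()}

for name, gain in gains.items():
    print(f"{name}: +{gain} percentage points")
```

Absolute gains are used rather than ratios because a relative improvement is undefined for the rectangular objects, where the baseline success rate is zero.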

Continued development centers on enhancing the robotic grasping system’s adaptability through two key avenues. Researchers aim to integrate tactile sensing, allowing the robot to ‘feel’ an object’s surface and adjust its grip for increased reliability, particularly in scenarios with varying textures or uncertain object properties. Simultaneously, exploration of more sophisticated generative models is underway; these advanced algorithms promise to move beyond current capabilities by predicting optimal grasp configurations with greater accuracy and efficiency. This combined approach – fusing sensory feedback with predictive modeling – is expected to yield a system capable of robustly grasping a wider range of objects in increasingly complex and unpredictable environments, ultimately bridging the gap towards truly versatile robotic manipulation.

The study highlights a crucial aspect of robotic systems: the interconnectedness of structure and behavior. Successfully transferring grasp poses from rigid to soft grippers via Conditional Flow Matching demonstrates that a system’s adaptability isn’t simply about adding flexibility, but understanding how that flexibility interacts with the underlying mechanics. As Barbara Liskov noted, “It’s one of the things I’ve learned – that you don’t fix things by adding features; you fix them by simplifying.” This principle resonates strongly with the research; by focusing on the fundamental relationship between gripper geometry and grasp stability – and employing a generative model like CFM to bridge the gap – the authors achieve robust manipulation, proving that elegant solutions often stem from clarity rather than complexity.

What Lies Ahead?

The demonstrated transfer of grasp syntheses, while promising, merely shifts the locus of the problem. The core difficulty isn’t generating a pose, but ensuring its realization within a complex, deformable system. The fidelity of the simulation, the model of the Fin-ray gripper itself, becomes the immediate bottleneck. Improving this model, however, invites an infinite regress – greater accuracy demands more parameters, and thus, more data to constrain them. A truly scalable solution will likely require abandoning the pursuit of perfect simulation in favor of learning directly from interaction, embracing the inherent messiness of physical systems.

Furthermore, the current approach treats the gripper as a passive executor of a pre-determined plan. Future work should explore grasp adaptation – allowing the gripper to refine its pose during contact, responding to unexpected disturbances or object properties. This necessitates a move beyond purely generative models toward systems that integrate perception and control within a unified framework. The true cost of freedom, it seems, isn’t the complexity of the hardware, but the burden of continuous, real-time recalibration.

Ultimately, the elegance of a solution will not be measured by the ingenuity of the algorithm, but by its invisibility. A successful system should simply work, adapting to unforeseen circumstances without requiring explicit programming. The challenge, then, is not to build cleverness into the gripper, but to design a structure that anticipates and accommodates the inevitable imperfections of the world.

Original article: https://arxiv.org/pdf/2602.17110.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-21 10:53