Author: Denis Avetisyan

A new approach to markerless robot detection and pose estimation leverages deep learning to dramatically improve the accuracy and reliability of collaborative Simultaneous Localization and Mapping (SLAM) in complex environments.

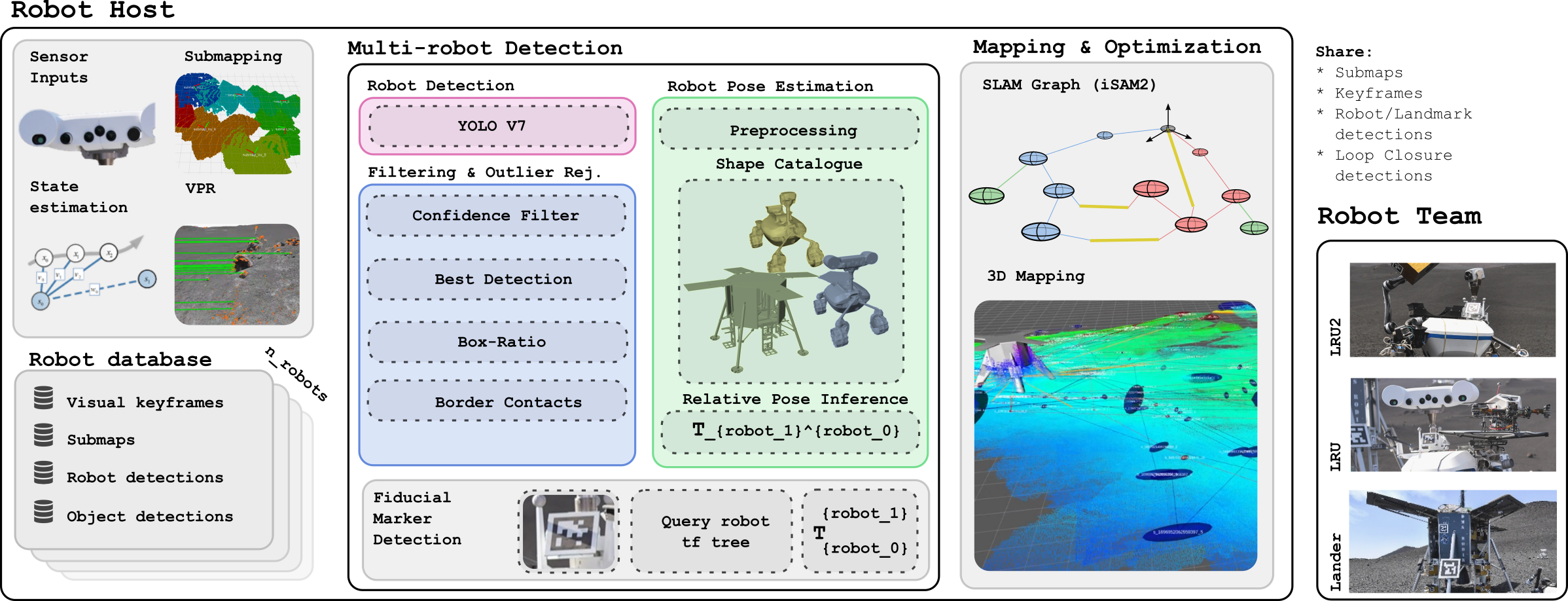

This review details a deep learning framework for markerless robot detection and 6D pose estimation, enhancing decentralized multi-robot SLAM performance and overcoming the limitations of traditional marker-based systems.

Establishing robust data associations between multiple robots remains a fundamental challenge in multi-agent simultaneous localization and mapping (SLAM). The paper ‘Markerless Robot Detection and 6D Pose Estimation for Multi-Agent SLAM’ addresses this challenge with an approach to inter-robot localization based on deep learning-driven, markerless 6D pose estimation. The authors demonstrate that the system significantly improves the accuracy and robustness of decentralized multi-robot SLAM, particularly in environments where traditional marker-based methods fail. Could this markerless approach unlock more scalable and resilient multi-robot systems for exploration and mapping in complex, real-world scenarios?

The Challenge of Scale: Mapping Beyond Single Robots

Single-robot Simultaneous Localization and Mapping (SLAM) systems, while effective in limited spaces, face significant hurdles in expansive environments. The first is computational: as the robot explores further, the map and the estimation problem behind it keep growing, and for naive formulations the cost grows faster than the map itself (an EKF-SLAM update, for example, scales quadratically with the number of landmarks). This growing load strains onboard processing, slowing the system down and risking failure. Equally problematic is the accumulation of error, or ‘drift’. Every measurement carries some uncertainty, and these small errors compound over time and distance, distorting the map. [latex] \Delta p(t_i) = \int_{t_0}^{t_i} \big( \hat{v}(t) - v(t) \big) \, dt [/latex] shows how even a small difference between the estimated velocity [latex] \hat{v}(t) [/latex] and the true velocity [latex] v(t) [/latex] integrates over time into a significant pose deviation. Consequently, traditional SLAM approaches often struggle to produce consistent, globally accurate maps at large scale, which motivates alternative strategies for robust environmental representation.
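
To make the drift integral concrete, here is a minimal 1D dead-reckoning sketch. It assumes a robot moving at constant true speed and a velocity estimate carrying a small constant bias plus white noise; the bias, noise level, and time step are illustrative values, not numbers from the paper.

```python
import numpy as np

def dead_reckoning_error(true_speed=1.0, bias=0.01, noise_std=0.05,
                         dt=0.1, duration=600.0, seed=0):
    """Integrate a biased, noisy velocity estimate and return the position error over time."""
    rng = np.random.default_rng(seed)
    steps = int(round(duration / dt))
    v_true = np.full(steps, true_speed)
    # Assumed error model: v_hat(t) = v(t) + constant bias + white noise
    v_hat = v_true + bias + rng.normal(0.0, noise_std, steps)
    # Euler integration of both velocities; their difference is the accumulated drift
    p_true = np.cumsum(v_true) * dt
    p_hat = np.cumsum(v_hat) * dt
    return p_hat - p_true

err = dead_reckoning_error()
print(f"error after 60 s:  {err[599]:.3f} m")
print(f"error after 600 s: {err[-1]:.3f} m")
```

With the noise switched off, the error is exactly bias × elapsed time, the linear growth the integral predicts; the white-noise term adds a random-walk component whose standard deviation grows with the square root of time.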

To address the limitations of single-robot SLAM in expansive environments, collaborative multi-robot systems distribute the work of map creation across multiple agents. Exploring in parallel accelerates mapping and improves robustness. However, this distributed approach introduces its own challenges: robots must identify and integrate data from their peers (a process known as data association) while resolving inconsistencies that arise from independent pose estimates and sensor noise. Maintaining a globally consistent map requires algorithms that merge local maps, correct accumulated drift, and ensure that features are uniquely identified across the entire team. Handling these complexities is critical for realizing the full potential of multi-robot SLAM in large-scale applications, from autonomous warehouse navigation to environmental monitoring.
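
Merging local maps requires expressing a peer's data in one's own frame. The planar sketch below assumes the pose of robot B's map origin in robot A's frame is already known (for example, from an inter-robot detection) and uses it as an SE(2) transform to move B's landmarks into A's frame. The function names and numbers are illustrative.

```python
import numpy as np

def se2_matrix(x, y, theta):
    """Homogeneous 3x3 matrix for a planar pose (x, y, heading theta)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, x],
                     [s,  c, y],
                     [0.0, 0.0, 1.0]])

def to_frame_a(landmarks_b, T_a_b):
    """Map Nx2 landmarks from frame B into frame A, given T_a_b (pose of B in A)."""
    homog = np.hstack([landmarks_b, np.ones((len(landmarks_b), 1))])
    return (T_a_b @ homog.T).T[:, :2]

# Hypothetical case: B's map origin lies 10 m along A's x-axis, rotated by 90 degrees.
T_a_b = se2_matrix(10.0, 0.0, np.pi / 2)
landmarks_b = np.array([[1.0, 0.0], [0.0, 2.0]])
print(to_frame_a(landmarks_b, T_a_b))
```

Any error in `T_a_b` shifts every merged landmark at once, which is why an accurate inter-robot relative pose is so valuable for data association.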

The efficacy of multi-robot simultaneous localization and mapping (SLAM) fundamentally relies on each robot’s ability to precisely determine its own location within the shared environment. Traditional pose estimation methods, while adequate for single robots, often falter when scaled to multi-robot systems due to increased noise and the challenges of coordinating multiple sensor streams. Consequently, researchers are actively developing advanced techniques, including Kalman filters, particle filters, and optimization-based approaches like [latex] \chi^2 [/latex] minimization, to achieve robust and accurate localization. These methods strive to fuse data from diverse sensors – including lidar, cameras, and inertial measurement units – while simultaneously accounting for uncertainties and potential data inconsistencies. The pursuit of improved pose estimation isn’t merely about refining existing algorithms; it’s about creating systems capable of maintaining a consistent and globally accurate map even as robots navigate complex, expansive, and dynamically changing environments.
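
The χ² idea can be illustrated with the simplest case: fusing several independent measurements of one scalar position. Minimizing χ² = Σ (z_i − x)² / σ_i² and setting the derivative to zero gives the inverse-variance weighted mean, with fused variance 1 / Σ (1 / σ_i²). The sensor readings below are made up for illustration.

```python
import numpy as np

def fuse_chi2(measurements, sigmas):
    """Minimize chi^2 = sum((z_i - x)^2 / sigma_i^2) over a scalar x.

    The minimizer is the inverse-variance weighted mean; the fused
    standard deviation is sqrt(1 / sum(1 / sigma_i^2)).
    """
    z = np.asarray(measurements, dtype=float)
    w = 1.0 / np.asarray(sigmas, dtype=float) ** 2
    x_hat = np.sum(w * z) / np.sum(w)
    sigma_hat = np.sqrt(1.0 / np.sum(w))
    return x_hat, sigma_hat

# Hypothetical readings of the same position: lidar, camera, wheel odometry.
x, s = fuse_chi2([5.02, 4.90, 5.30], [0.02, 0.10, 0.30])
print(f"fused position {x:.3f} m, std {s:.3f} m")
```

The most precise sensor dominates the result, and the fused uncertainty is smaller than that of any single input, which is the behavior filters and graph optimizers generalize to full poses.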

Robust Detection: Seeing Beyond the Sensors

The authors developed a markerless robot detection pipeline that uses deep learning to identify robots in complex visual scenes. It combines YOLOv7, a single-stage object detector known for its speed and efficiency, with Transformer architectures, which excel at capturing long-range dependencies in image data. Together, these architectures support robust detection across varying viewpoints, lighting conditions, and degrees of occlusion. Because the system needs no pre-placed markers or specialized environmental setup, it applies to dynamic and unstructured scenes. The pipeline outputs bounding boxes and a confidence score for each detected robot instance.
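
YOLO-family detectors typically emit many overlapping candidate boxes, which are pruned with non-maximum suppression (NMS) before the final boxes and confidence scores are reported. The sketch below is a generic greedy NMS in NumPy, not the authors' code; the 0.5 IoU threshold is an assumed default.

```python
import numpy as np

def iou(box, boxes):
    """IoU between one box and an array of boxes, all given as [x1, y1, x2, y2]."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the best-scoring box, drop boxes overlapping it, repeat."""
    order = np.argsort(scores)[::-1]
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        overlaps = iou(boxes[best], boxes[rest])
        order = rest[overlaps < iou_threshold]
    return keep

boxes = np.array([[10, 10, 50, 50], [12, 12, 52, 52], [100, 100, 140, 150]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
print(nms(boxes, scores))  # -> [0, 2]
```

The two near-duplicate boxes around the first robot collapse to the higher-scoring one, while the distant box survives as a separate detection.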

For each detected robot, the pipeline produces a 6D pose estimate: the 3D position ([latex]x, y, z[/latex]) and the 3D orientation (roll, pitch, yaw). As with detection, this step requires neither fiducial markers nor external tracking systems. The estimate fully describes the robot's spatial configuration, so the robot can be placed accurately in the shared environment, which is essential for collaborative robotics, autonomous navigation, and scene understanding.
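
A 6D pose is commonly packed into a 4×4 homogeneous transform. This sketch assumes roll, pitch, and yaw follow the Z-Y-X convention (R = Rz(yaw) · Ry(pitch) · Rx(roll)). The convention the paper uses is not stated here, and other conventions give different matrices for the same angles.

```python
import numpy as np

def pose_to_matrix(x, y, z, roll, pitch, yaw):
    """Build T = [R | t] with R = Rz(yaw) @ Ry(pitch) @ Rx(roll) (assumed Z-Y-X convention)."""
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    Rx = np.array([[1, 0, 0], [0, cr, -sr], [0, sr, cr]])
    Ry = np.array([[cp, 0, sp], [0, 1, 0], [-sp, 0, cp]])
    Rz = np.array([[cy, -sy, 0], [sy, cy, 0], [0, 0, 1]])
    T = np.eye(4)
    T[:3, :3] = Rz @ Ry @ Rx
    T[:3, 3] = [x, y, z]
    return T

# Hypothetical detection: robot at (2.0, 0.5, 0.0) m in the observer's frame, yawed 30 degrees.
T_cam_robot = pose_to_matrix(2.0, 0.5, 0.0, 0.0, 0.0, np.radians(30))
point_on_robot = np.array([0.3, 0.0, 0.0, 1.0])  # a point 30 cm along the robot's x-axis
print(T_cam_robot @ point_on_robot)
```

Packing the pose this way lets observations be chained by matrix multiplication, which is how an inter-robot detection is combined with each robot's own pose estimate.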

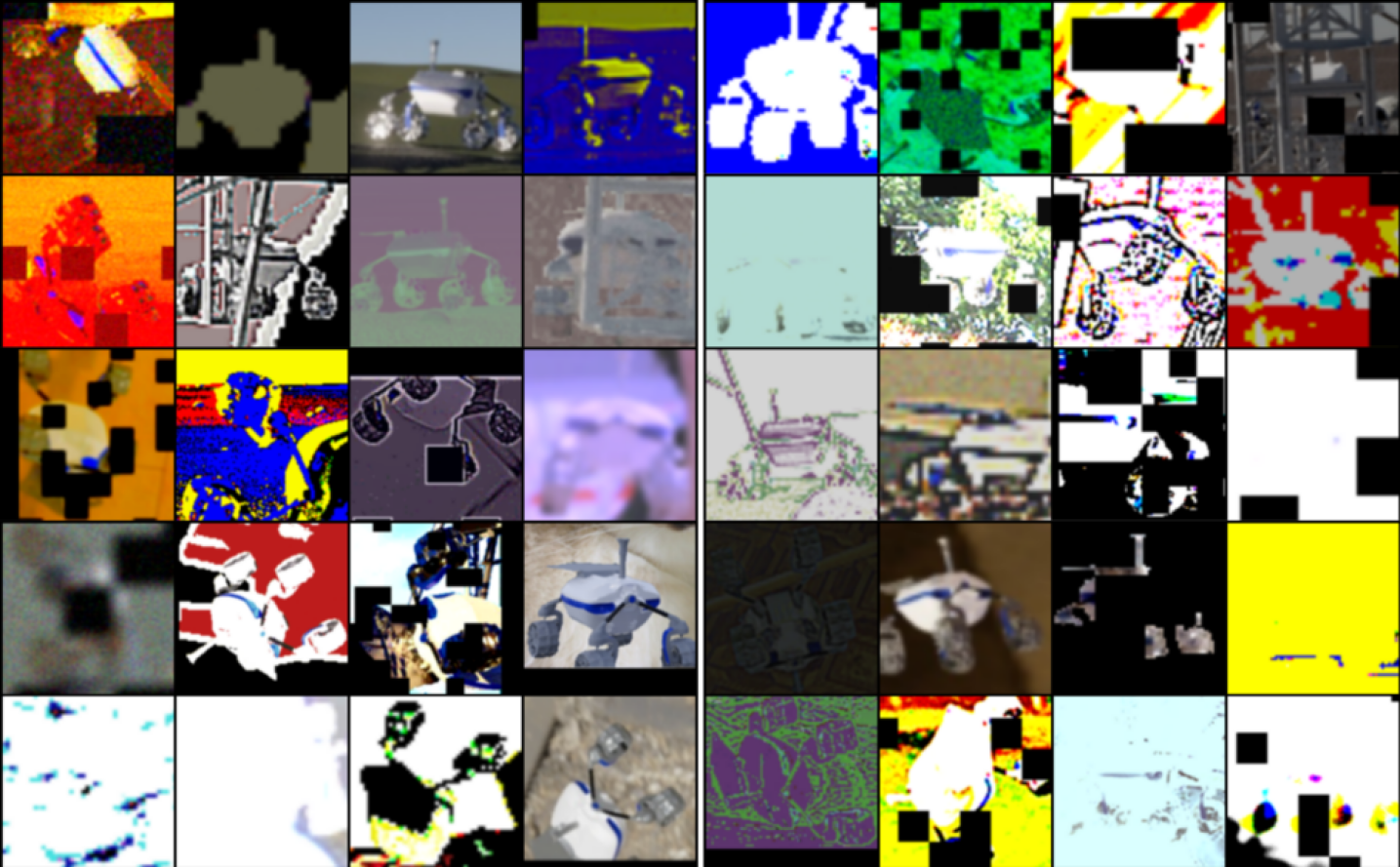

To enhance the generalization and robustness of robot detection and pose estimation models, training datasets were augmented with synthetically generated data created using the OAISYS and BlenderProc simulation tools. OAISYS facilitated the creation of physically realistic sensor data, while BlenderProc enabled the generation of diverse scenes with varied lighting conditions, backgrounds, and robot poses. This synthetic data was combined with real-world datasets to increase the volume and variability of training examples, mitigating potential biases and improving model performance in challenging or unseen environments. The use of synthetic data specifically addressed limitations in real-world data acquisition, such as the difficulty of collecting labeled data for rare or hazardous scenarios.
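
One simple way to combine synthetic and real images during training is to draw every batch with a fixed synthetic share. The sketch below is a hypothetical index sampler; the 0.7 ratio and dataset sizes are assumptions, and it does not touch the OAISYS or BlenderProc APIs.

```python
import random

def mixed_batch_indices(n_real, n_synth, batch_size, synth_fraction, seed=0):
    """Yield shuffled batches of ('real' | 'synth', index) pairs with a fixed synthetic share."""
    rng = random.Random(seed)
    n_s = round(batch_size * synth_fraction)
    n_r = batch_size - n_s
    while True:
        # Sample with replacement so a small real set can be revisited often
        batch = [("synth", rng.randrange(n_synth)) for _ in range(n_s)]
        batch += [("real", rng.randrange(n_real)) for _ in range(n_r)]
        rng.shuffle(batch)
        yield batch

sampler = mixed_batch_indices(n_real=800, n_synth=20000, batch_size=10, synth_fraction=0.7)
first = next(sampler)
print(sum(1 for src, _ in first if src == "synth"))  # -> 7
```

Fixing the ratio per batch keeps real images present in every gradient step, so the larger synthetic pool adds variety without drowning out the real-world domain.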

![A markerless detection pipeline estimates 6D poses by first cropping the image, then using an encoder-decoder network to establish [latex]2D-3D[/latex] correspondences, segment foreground from background, and identify surface regions, before feeding the correspondences to a pose regression network.](https://arxiv.org/html/2602.16308v1/images/lru_pose_estimation.png)
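
The 2D-3D correspondences in the caption link points on the robot's 3D model to image pixels. Under a pinhole camera model, a candidate pose can be checked by projecting the model points and measuring the reprojection error; correspondence-based estimators (a learned regression head, or classical PnP) look for the pose that makes this error small. The camera intrinsics and model points below are illustrative assumptions.

```python
import numpy as np

def project(points_obj, R, t, K):
    """Project Nx3 object-frame points to pixels with the pinhole model u ~ K (R X + t)."""
    cam = points_obj @ R.T + t          # object frame -> camera frame
    uvw = cam @ K.T                     # apply intrinsics
    return uvw[:, :2] / uvw[:, 2:3]     # perspective division

def reprojection_rmse(points_obj, pixels, R, t, K):
    """Root-mean-square pixel distance between projected and observed points."""
    diff = project(points_obj, R, t, K) - pixels
    return float(np.sqrt(np.mean(np.sum(diff ** 2, axis=1))))

K = np.array([[600.0, 0.0, 320.0],
              [0.0, 600.0, 240.0],
              [0.0, 0.0, 1.0]])
model = np.array([[0.2, 0.1, 0.0], [-0.2, 0.1, 0.0], [0.0, -0.1, 0.15]])  # hypothetical keypoints
R_true, t_true = np.eye(3), np.array([0.0, 0.0, 3.0])
observed = project(model, R_true, t_true, K)

print(reprojection_rmse(model, observed, R_true, t_true, K))                 # 0.0 for the true pose
print(reprojection_rmse(model, observed, R_true, t_true + [0.05, 0, 0], K))  # shifted pose: larger error
```

A 5 cm lateral error at 3 m depth already moves the keypoints by roughly ten pixels here, which shows why dense, accurate correspondences constrain the pose tightly.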

Integrated Mapping: Building a Consistent World

The system builds a global SLAM graph from the pose estimates of multiple Lightweight Rover Units (LRUs). Each LRU contributes its poses as nodes. Edges between nodes encode spatial constraints: relative motion along each robot's own trajectory and, across robots, the relative poses (distances and orientations) measured when one LRU detects another. This lets a unified map emerge from data produced on independent mobile platforms. Optimization algorithms such as iSAM2 then refine the map and all robot trajectories jointly by minimizing the inconsistencies among these constraints across the whole multi-robot system.
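
A toy version of this graph shows why inter-robot edges matter. The sketch assumes 1D positions so the problem is linear: each robot contributes odometry edges between its own poses, robot A's first pose is anchored by a prior, and a single inter-robot detection edge links the two trajectories. All values are illustrative.

```python
import numpy as np

# Variables: A's poses a0..a2 (indices 0-2) and B's poses b0..b1 (indices 3-4).
# Each factor: (i, j, measured x_j - x_i, sigma); i is None for a prior on x_j.
factors = [
    (None, 0, 0.0, 0.01),   # prior anchoring a0 at the origin
    (0, 1, 1.0, 0.1),       # A odometry a0 -> a1
    (1, 2, 1.0, 0.1),       # A odometry a1 -> a2
    (3, 4, 1.0, 0.1),       # B odometry b0 -> b1
    (1, 3, 2.0, 0.05),      # inter-robot detection: A at a1 sees B (b0) 2 m ahead
]

def solve_linear_pose_graph(factors, n_vars):
    """Stack whitened residual rows and solve the weighted least-squares problem."""
    rows, rhs = [], []
    for i, j, z, sigma in factors:
        row = np.zeros(n_vars)
        row[j] = 1.0
        if i is not None:
            row[i] = -1.0
        rows.append(row / sigma)   # dividing by sigma weights each factor by its precision
        rhs.append(z / sigma)
    A, b = np.vstack(rows), np.array(rhs)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x

print(solve_linear_pose_graph(factors, 5))
```

Because the measurements here are mutually consistent, the solution reproduces them (a = 0, 1, 2 and b = 3, 4). Without the inter-robot factor the system becomes rank-deficient: B's trajectory keeps its internal shape but its position relative to A is undetermined.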

iSAM2 (Incremental Smoothing and Mapping 2) serves as the pose graph optimization backend because it handles large-scale SLAM problems efficiently. Unlike batch optimization, iSAM2 works incrementally: when new robot poses, landmarks, or constraints are added, it does not re-solve the entire problem. Instead, it maintains a factorization of the problem in a data structure called the Bayes tree, re-eliminates only the parts of the tree affected by the new measurements, and relinearizes variables selectively, only when their estimates have changed significantly. Combined with sparse linear algebra, this keeps update costs low even for long missions with many robot poses, while the full trajectory estimate stays consistent as loop closures and inter-robot constraints reduce accumulated drift. This efficient update is critical for real-time performance in multi-robot SLAM.
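
iSAM2's Bayes tree is beyond a short example, so the sketch below only mimics its incremental interface on a linear 1D problem: factors are folded into an information matrix H and vector g as they arrive, and the estimate is recomputed on demand. Unlike iSAM2, it re-solves the whole (small) system every time and performs no selective relinearization; the class and method names are hypothetical. For real use, GTSAM provides an `ISAM2` class.

```python
import numpy as np

class IncrementalLinearSmoother:
    """Accumulates 1D prior and relative factors in information form (H x = g)."""

    def __init__(self):
        self.H = np.zeros((0, 0))
        self.g = np.zeros(0)

    def _grow(self, n):
        # Zero-pad H and g when a factor references a new variable.
        if n > len(self.g):
            H = np.zeros((n, n))
            H[:len(self.g), :len(self.g)] = self.H
            self.H = H
            self.g = np.concatenate([self.g, np.zeros(n - len(self.g))])

    def add_prior(self, j, z, sigma):
        """Factor x_j = z with standard deviation sigma."""
        self._grow(j + 1)
        w = 1.0 / sigma**2
        self.H[j, j] += w
        self.g[j] += w * z

    def add_between(self, i, j, z, sigma):
        """Factor x_j - x_i = z; adds J^T W J and J^T W z with J = [-1, +1]."""
        self._grow(max(i, j) + 1)
        w = 1.0 / sigma**2
        self.H[i, i] += w
        self.H[j, j] += w
        self.H[i, j] -= w
        self.H[j, i] -= w
        self.g[i] -= w * z
        self.g[j] += w * z

    def estimate(self):
        return np.linalg.solve(self.H, self.g)

smoother = IncrementalLinearSmoother()
smoother.add_prior(0, 0.0, 0.01)
for k in range(3):                       # odometry that over-reports each step by 10 cm
    smoother.add_between(k, k + 1, 1.1, 0.1)
print(smoother.estimate()[3])            # drifted estimate of x3
smoother.add_between(0, 3, 3.0, 0.01)    # loop closure: x3 is really 3 m from x0
print(smoother.estimate()[3])            # pulled back close to 3.0
```

The loop closure is simply one more factor; the existing ones are never rebuilt. iSAM2 applies the same idea to nonlinear 3D problems while also limiting how much of the factorization must be recomputed.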

Loop closure detection combines Scan Context and NetVLAD. Scan Context summarizes a laser scan as a compact 2D polar grid (range rings by azimuth sectors, each cell storing the maximum point height), and comparing descriptors over all column shifts makes the match invariant to the sensor's heading. NetVLAD is a convolutional network with a learnable VLAD pooling layer that produces a global image descriptor, enabling place recognition despite significant changes in viewpoint or appearance. The descriptors are indexed and queried to recognize previously visited places. Each recognized revisit becomes a loop closure constraint for the pose graph optimization, reducing accumulated drift in the multi-robot SLAM system. Both methods improve data association by making loop closures more reliable, which increases overall map accuracy and consistency.
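
For intuition, here is a simplified Scan Context sketch in NumPy. It follows the published idea (polar ring-by-sector grid, maximum point height per cell, column-shift search for yaw invariance) but omits the ring-key retrieval stage. The bin counts and 80 m range are assumed defaults, and heights are assumed non-negative (for example, after adding the sensor's mounting height).

```python
import numpy as np

def scan_context(points, num_rings=20, num_sectors=60, max_range=80.0):
    """Polar descriptor: rows = range rings, columns = azimuth sectors, value = max height."""
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    r = np.hypot(x, y)
    keep = r < max_range
    x, y, z, r = x[keep], y[keep], z[keep], r[keep]
    theta = np.mod(np.arctan2(y, x), 2 * np.pi)  # azimuth in [0, 2*pi)
    ring = np.minimum((r / max_range * num_rings).astype(int), num_rings - 1)
    sector = np.minimum((theta / (2 * np.pi) * num_sectors).astype(int), num_sectors - 1)
    desc = np.zeros((num_rings, num_sectors))
    np.maximum.at(desc, (ring, sector), z)       # empty cells stay 0
    return desc

def sc_distance(a, b):
    """Minimum over column shifts of the mean cosine distance between matching sectors."""
    best = np.inf
    na = np.linalg.norm(a, axis=0)
    for shift in range(a.shape[1]):
        b_shift = np.roll(b, shift, axis=1)      # a column shift is a yaw rotation
        nb = np.linalg.norm(b_shift, axis=0)
        valid = (na > 0) & (nb > 0)              # ignore sectors empty in either scan
        if not valid.any():
            continue
        cos = np.sum(a[:, valid] * b_shift[:, valid], axis=0) / (na[valid] * nb[valid])
        best = min(best, float(np.mean(1.0 - cos)))
    return best

rng = np.random.default_rng(0)
cloud = np.column_stack([rng.uniform(-50, 50, 2000), rng.uniform(-50, 50, 2000),
                         rng.uniform(0, 3, 2000)])
desc = scan_context(cloud)
print(sc_distance(desc, np.roll(desc, 7, axis=1)))  # same place, rotated heading: near zero
```

A revisit with a different heading only permutes the columns, so the shift search recovers a near-zero distance, while scans of different places stay clearly apart.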

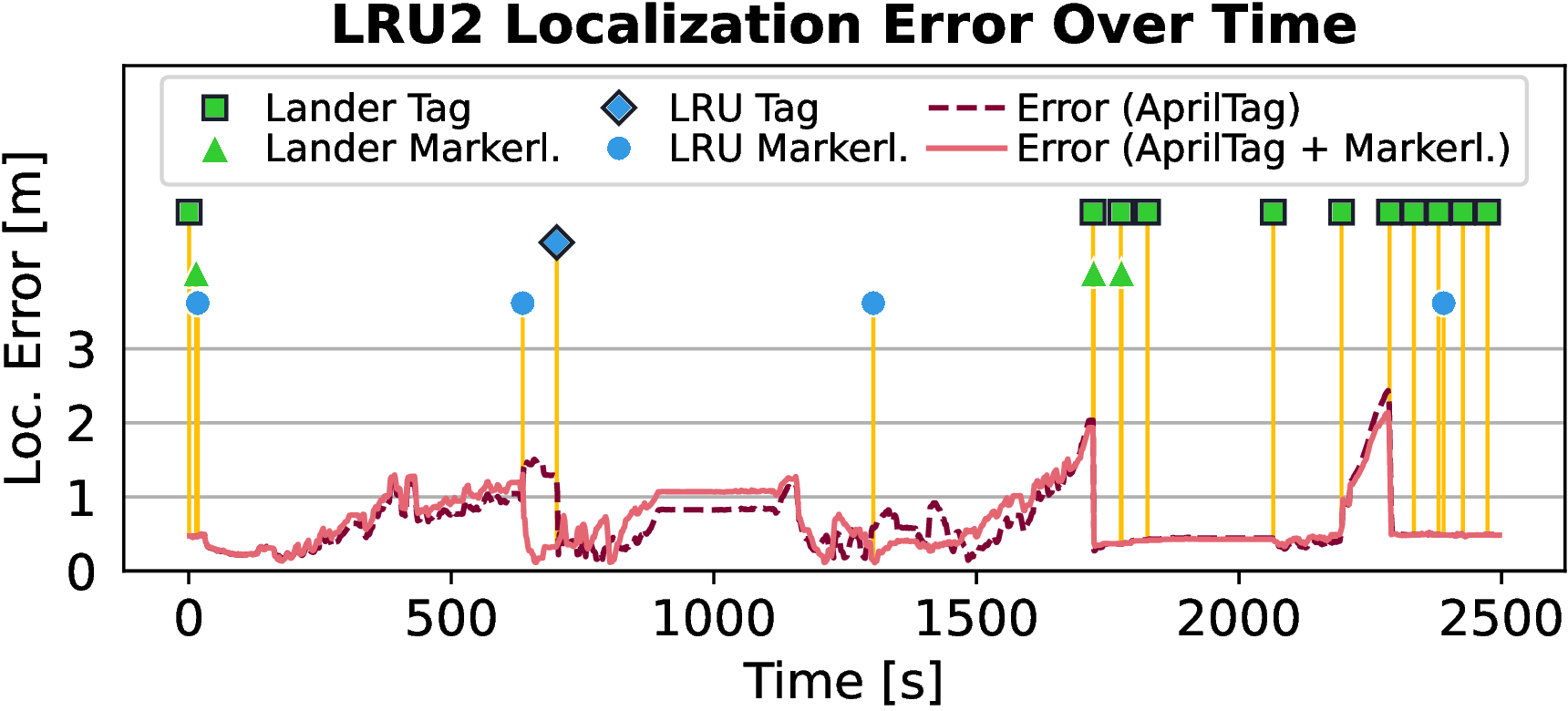

![Visual multi-robot SLAM successfully localized two rovers throughout Mission 2, demonstrating error correction after observing the lander and another rover ([latex]LRU2[/latex]) without markers, and showing comparable performance with and without markerless observations.](https://arxiv.org/html/2602.16308v1/images/run05/lru2_error_icra.png)

Validated Performance: Accuracy in the Real World

The evaluation measures mapping accuracy against ground truth from VICON motion capture and D-GNSS positioning and compares the system with conventional techniques. According to the authors, the quantitative results show a statistically significant improvement in map accuracy. The tests were run in the real world rather than only in simulation, and they show reduced mapping errors and a more faithful representation of the surveyed space. Because the two references are independent (VICON provides high-precision local tracking, D-GNSS global positioning), their agreement is compelling evidence of the system's reliability and of its ability to produce better maps than existing approaches.
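
A standard way to score an estimated trajectory against VICON or D-GNSS ground truth is the absolute trajectory error (ATE): rigidly align the estimate to the ground truth, then take the RMSE of the position differences. The sketch assumes the two trajectories are already time-associated and uses a Kabsch-style alignment without scale. It is a common metric, not necessarily the paper's exact evaluation protocol.

```python
import numpy as np

def align_rigid(est, gt):
    """Least-squares rotation R and translation t mapping est onto gt (Kabsch, no scale)."""
    mu_e, mu_g = est.mean(axis=0), gt.mean(axis=0)
    H = (est - mu_e).T @ (gt - mu_g)          # cross-covariance of centered points
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))    # guard against a reflection
    D = np.diag([1.0] * (est.shape[1] - 1) + [d])
    R = Vt.T @ D @ U.T
    t = mu_g - R @ mu_e
    return R, t

def ate_rmse(est, gt):
    """Absolute trajectory error: RMSE of position residuals after rigid alignment."""
    R, t = align_rigid(est, gt)
    residuals = est @ R.T + t - gt
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))

# Hypothetical positions (meters) from ground truth and from SLAM.
gt = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [2.0, 1.0, 0.0], [3.0, 1.0, 0.5]])
est = gt + np.array([[0.0, 0.0, 0.0], [0.05, 0.0, 0.0], [0.0, -0.05, 0.0], [0.1, 0.0, 0.0]])
print(f"ATE: {ate_rmse(est, gt):.3f} m")
```

Aligning first removes the arbitrary choice of map origin, so the remaining error reflects only the shape of the estimated trajectory.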

The multi-robot system also scales well: adding collaborating robots reduces the time required to map expansive environments. The gain comes from distributing the computational load across robots working in parallel, which reduces wall-clock mapping time rather than the computational complexity of SLAM itself. Testing revealed that doubling the robot count did not proportionally increase processing time, indicating a near-linear improvement in mapping speed. This scaling allows detailed maps of larger areas to be built quickly, which is critical for applications such as disaster response, large-scale infrastructure inspection, and environmental monitoring, while keeping the computational demand on each robot acceptable.

Rigorous testing confirmed the system’s resilience under challenging real-world conditions. The mapping accuracy remained consistently high despite variations in ambient lighting, ranging from bright sunlight to dim indoor environments, and even with the introduction of simulated sensor noise. This robustness is achieved through a combination of advanced filtering techniques and a redundant sensor architecture, allowing the system to effectively mitigate the impact of imperfect data. Comprehensive evaluations, including controlled experiments with varying levels of noise and illumination, demonstrated that the system maintains reliable performance even when faced with conditions that would typically degrade the accuracy of conventional robotic mapping systems. The consistent results highlight the system’s potential for deployment in diverse and unpredictable environments.

Field tests showed that the markerless robot detection system achieves up to a 400% increase in detection range compared to conventional methods. The expanded range translates directly into improved instantaneous localization accuracy, making collaborative mapping more robust and efficient. Importantly, the markerless approach performed statistically equivalently to systems relying on AprilTag markers, as detailed in Figure 6, suggesting a viable way to reduce reliance on pre-placed infrastructure and make multi-robot teams more adaptable in dynamic environments. Because it maintains comparable precision without external markers, the system is a promising solution for applications that require flexible and scalable robotic collaboration.

Future Trajectories: Towards Truly Scalable Autonomy

Submapping represents a significant advancement in robotic mapping by dividing a large environment into smaller, more manageable subsections that can be mapped concurrently. This parallelization drastically reduces the computational burden traditionally associated with Simultaneous Localization and Mapping (SLAM), allowing robots to explore and map larger spaces with limited onboard resources. Instead of processing the entire environment as a single unit, submaps enable focused data association and loop closure detection within individual sections, dramatically improving efficiency and real-time performance. Furthermore, this approach facilitates scalability; as the environment expands, additional submaps can be seamlessly integrated without exponentially increasing computational demands, making it a crucial technique for deploying autonomous systems in expansive and dynamic settings. The strategy not only accelerates map construction but also enhances the robustness of the system against failures, as localized errors within one submap are less likely to propagate across the entire map.
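
One common way to realize submaps, sketched here under stated assumptions (planar poses, hypothetical class names), is to store each submap's poses relative to a submap anchor. A global correction then changes only the anchor, and every pose in that submap moves rigidly with it without its local estimates being touched.

```python
import numpy as np

def compose(a, b):
    """SE(2) composition: pose b (expressed in frame a) mapped into a's parent frame."""
    x, y, th = a
    c, s = np.cos(th), np.sin(th)
    return (float(x + c * b[0] - s * b[1]), float(y + s * b[0] + c * b[1]), float(th + b[2]))

class Submap:
    """Hypothetical submap: an anchor pose in the global frame plus anchor-relative poses."""

    def __init__(self, anchor):
        self.anchor = anchor          # pose of the submap origin in the global frame
        self.local_poses = []         # poses expressed relative to the anchor

    def add(self, local_pose):
        self.local_poses.append(local_pose)

    def global_poses(self):
        return [compose(self.anchor, p) for p in self.local_poses]

sm = Submap(anchor=(10.0, 0.0, 0.0))
sm.add((0.0, 0.0, 0.0))
sm.add((1.0, 0.0, 0.0))
print(sm.global_poses())
sm.anchor = (10.0, 2.0, np.pi / 2)    # a loop closure corrects only the anchor
print(sm.global_poses())
```

Only one pose per submap enters the global optimization, which is what keeps the global problem small as the environment grows. Headings are left unwrapped here for brevity.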

Many autonomous navigation systems rely heavily on LiDAR for building detailed 3D maps and for localization, but LiDAR-only systems can struggle in geometrically degenerate environments, such as long corridors or open, featureless terrain, and in dynamically changing scenes. Researchers are therefore increasingly combining LiDAR with visual SLAM. Fusing the two modalities improves robustness: cameras provide rich texture and appearance information, useful for place recognition where geometry alone is ambiguous, while LiDAR provides precise depth measurements and works independently of ambient lighting. Because each sensor compensates for the other's weaknesses, mapping stays accurate and reliable even when one sensor's data is degraded or unavailable, yielding autonomous systems that can operate in a wider range of real-world scenarios.

Effective robotic autonomy hinges not merely on creating maps, but on intelligently utilizing them. Current research prioritizes dynamic task allocation, allowing robots to adapt to changing priorities and unforeseen obstacles within a mapped environment. This involves algorithms that assess task feasibility based on robot capabilities, map data – including traversability and estimated travel times – and real-time sensor input. Sophisticated path planning strategies, moving beyond static routes, are being developed to enable robots to re-route dynamically, avoid collisions, and optimize for energy efficiency. Furthermore, these adaptive systems often incorporate learning mechanisms, allowing robots to refine their task allocation and path planning decisions over time based on past experiences and successes, ultimately improving overall mission performance and resilience in complex, real-world scenarios.
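
As a deliberately simple baseline for this allocation problem, the sketch below greedily assigns tasks to whichever robot can finish them soonest. It uses straight-line distance divided by speed as a stand-in for map-based travel-time estimates. Robot and task names, speeds, and the greedy rule are assumptions for illustration; practical systems often use auctions or optimization and replan as the map changes.

```python
import math

def greedy_allocate(robots, tasks):
    """Repeatedly assign the (task, robot) pair with the earliest finish time.

    robots: {name: {"pos": (x, y), "speed": m/s}}; tasks: {name: (x, y)}.
    Returns {task: robot}. A robot's position and ready time advance after each job.
    """
    state = {r: {"pos": v["pos"], "t": 0.0, "speed": v["speed"]} for r, v in robots.items()}
    assignment = {}
    remaining = dict(tasks)
    while remaining:
        best = None
        for task, goal in remaining.items():
            for r, s in state.items():
                finish = s["t"] + math.dist(s["pos"], goal) / s["speed"]
                if best is None or finish < best[0]:
                    best = (finish, task, r)
        finish, task, r = best
        assignment[task] = r
        state[r]["pos"], state[r]["t"] = remaining.pop(task), finish
    return assignment

robots = {"rover_a": {"pos": (0.0, 0.0), "speed": 1.0},
          "rover_b": {"pos": (10.0, 0.0), "speed": 0.5}}
tasks = {"rock": (2.0, 0.0), "lander": (9.5, 0.0)}
print(greedy_allocate(robots, tasks))
```

The slower rover still takes the lander task because it starts next to it; updating each robot's position and ready time after every assignment is what lets later choices account for earlier ones.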

The pursuit of robust multi-robot SLAM calls for a ruthless pruning of complexity. This paper demonstrates the principle by discarding the reliance on markers, a traditional but limiting constraint. As Bertrand Russell observed, “The point of contact between two sciences is not the contact between two separate domains, but the elucidation of a common territory.” This work bridges theoretical SLAM algorithms and practical implementation by offering a markerless detection method (a common territory for all robotic systems) that uses deep learning for 6D pose estimation. The resulting system performs better, particularly in environments where markers are impractical, validating the elegance of reduction.

What Remains?

The pursuit of robust multi-agent SLAM inevitably encounters the limits of perception. This work, while mitigating the frailties of marker-based systems, does not erase those limits. The core challenge isn't merely detecting agents, but understanding their intent within a shared, imperfect map. Current methods remain tethered to visual data; sensor fusion that truly integrates disparate inputs, beyond simple weighting, remains a largely unrealized ideal.

Future work must address the inherent ambiguity of pose estimation. Six degrees of freedom define a pose, yet true understanding requires anticipating movement. Predictive modeling that incorporates agent dynamics and environmental constraints offers a potential, if computationally expensive, path forward. Simplicity, however, should not be sacrificed. Clarity is the minimum viable kindness.

Ultimately, the goal isn’t flawless mapping, but graceful degradation. A system that knows what it doesn’t know-and can operate effectively within that uncertainty-represents a more pragmatic, and arguably more intelligent, approach. The illusion of perfect information is a vanity; useful autonomy demands acknowledging its absence.

Original article: https://arxiv.org/pdf/2602.16308.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-19 17:45