Author: Denis Avetisyan

A new framework automatically generates realistic tasks for robots, dramatically improving their ability to understand and interact with the physical world.

![RoboGene cultivates a system where task generation emerges from the interplay of exploratory sampling, guided by a Least Frequently Used (LFU) strategy, and iterative refinement through self-reflection, all while anchoring performance to sustained learning from Human-in-the-Loop (HITL) feedback within a long-term memory module.](https://arxiv.org/html/2602.16444v1/x1.png)

This work introduces RoboGene, a diversity-driven agentic framework for pre-training vision-language-action models with physically grounded robotic tasks.

Acquiring diverse, real-world interaction data remains a key bottleneck in advancing general-purpose robotic manipulation. This limitation motivates the work presented in ‘RoboGene: Boosting VLA Pre-training via Diversity-Driven Agentic Framework for Real-World Task Generation’, which introduces an agentic framework for automated generation of physically plausible and diverse robotic tasks. By integrating diversity-driven sampling, self-reflection mechanisms, and human refinement, RoboGene significantly enhances the pre-training of Vision-Language-Action models and improves their real-world generalization capabilities. Could this approach unlock a new era of scalable and adaptable robotic learning, moving beyond reliance on manually curated datasets?

The Inevitable Failure of Narrow Expertise

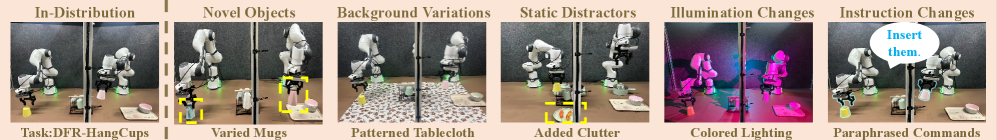

Robotic systems, despite advances in controlled environments, consistently falter when confronted with the unpredictable nature of real-world tasks. This limitation stems from a reliance on narrowly defined parameters and pre-programmed routines, creating a significant barrier to widespread adoption. Unlike human dexterity, which effortlessly adapts to unforeseen circumstances, robots often require painstaking re-programming for even slight variations in an objective – a process that is both time-consuming and inefficient. This struggle with generalization isn’t merely a matter of software; it’s a fundamental challenge in bridging the gap between a robot’s ability to perform a specific action and its capacity to understand and adapt to novel situations, ultimately hindering their usefulness beyond highly structured settings.

Conventional robotics often confines machines to executing a limited repertoire of pre-programmed actions, creating a significant obstacle to widespread adoption in dynamic environments. These systems typically demand substantial human intervention – often complete re-programming – whenever confronted with even slight deviations from their intended operational parameters. This reliance on explicitly defined skills proves particularly problematic because real-world tasks rarely conform to neat, pre-defined categories; a robot capable of grasping a specific type of mug, for instance, may struggle with a bottle or a uniquely shaped object. The inflexibility inherent in these approaches drastically limits a robot’s ability to adapt, learn, and perform reliably across the diverse and unpredictable challenges presented by everyday life, ultimately hindering its potential for truly autonomous operation.

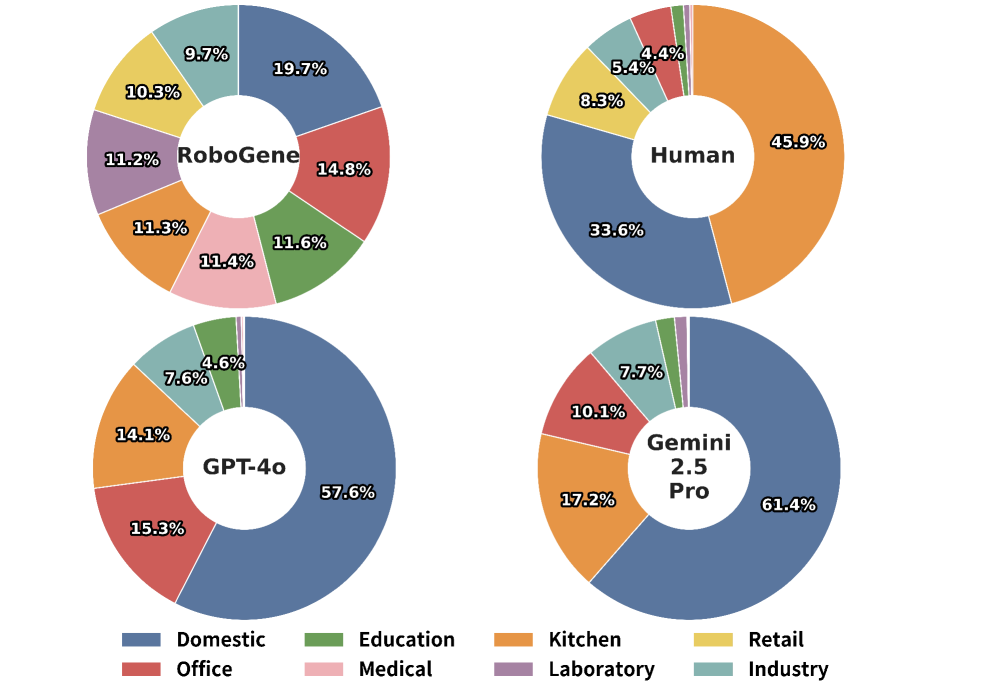

The development of truly adaptable robotic systems is hampered by a critical challenge: the need for extensive and varied training data representing a wide spectrum of possible manipulation tasks. Current methods often struggle to create enough realistic scenarios for robots to learn effectively, leading to brittle performance when faced with novel situations. To address this, researchers introduced RoboGene, a system designed to automatically generate a massive and diverse set of robotic manipulation tasks. Critically, RoboGene doesn’t just prioritize quantity; it ensures that nearly all generated tasks – achieving a remarkable 99% physical feasibility – are actually possible for a robot to execute, avoiding wasted training on unrealistic or impossible actions and accelerating the learning process towards robust, real-world applicability.

The Illusion of Control: An Agentic Framework

RoboGene operates as an agentic framework designed for the autonomous generation of robotic manipulation tasks. This is achieved through the integration of Vision-Language-Action Models, which enable the system to interpret visual input, understand natural language instructions, and translate them into executable robotic actions. Unlike traditional robotic task programming, which relies on explicit, manual specification, RoboGene dynamically creates tasks based on its internal models and environmental perception, allowing for increased flexibility and adaptability in robotic applications. The framework bridges the gap between high-level task descriptions and low-level robot control signals without requiring manual task specification; human input is limited to refinement feedback on the tasks it generates.

RoboGene represents a shift away from conventional robotic task programming which relies heavily on human-defined specifications. Historically, robotic manipulation tasks required detailed, manual creation of trajectories, grasp points, and object interactions. This process is time-consuming, requires specialized expertise, and limits adaptability. RoboGene, conversely, autonomously generates complete task definitions, including object selection, manipulation steps, and desired outcomes, eliminating the need for explicit human task specification and enabling the robot to independently formulate and execute complex procedures.

RoboGene incorporates a physical feasibility assessment module to validate generated robotic manipulation tasks against the robot’s kinematic and dynamic limitations. This assessment utilizes the robot’s forward kinematics and dynamics models to determine if a proposed task, defined by a sequence of actions, is achievable without violating joint limits, exceeding torque limits, or resulting in unstable configurations. Evaluations demonstrate a physical feasibility rate of 0.99, indicating that 99% of generated tasks are determined to be physically achievable, a statistically significant improvement over all compared baseline methods for task validation.
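To make the idea of a feasibility check concrete, here is a minimal sketch, assuming a task has already been expanded into per-step joint angles and joint torques. The names `RobotLimits` and `is_feasible`, the two-joint robot, and all numeric limits are hypothetical illustrations, not RoboGene's actual interface, and stability checking is left out.

```python
from dataclasses import dataclass


@dataclass
class RobotLimits:
    # Hypothetical per-joint limits for an illustrative robot.
    joint_min: list   # radians
    joint_max: list   # radians
    torque_max: list  # newton-metres


def is_feasible(waypoints, torques, limits):
    """Return (ok, reason) for a candidate trajectory.

    waypoints[i][j] is the angle of joint j at step i;
    torques[i][j] is the torque required at joint j at step i.
    """
    for step, (q, tau) in enumerate(zip(waypoints, torques)):
        for j, (angle, torque) in enumerate(zip(q, tau)):
            if not limits.joint_min[j] <= angle <= limits.joint_max[j]:
                return False, f"step {step}: joint {j} outside position limits"
            if abs(torque) > limits.torque_max[j]:
                return False, f"step {step}: joint {j} exceeds torque limit"
    return True, "ok"


limits = RobotLimits(joint_min=[-3.14, -2.0], joint_max=[3.14, 2.0],
                     torque_max=[40.0, 20.0])

# Two made-up candidate tasks: (joint angles per step, torques per step).
candidates = {
    "reach shelf": ([[0.0, 0.5], [1.0, 1.5]], [[5.0, 3.0], [10.0, 8.0]]),
    "lift crate": ([[0.0, 0.5], [0.2, 0.9]], [[5.0, 3.0], [55.0, 8.0]]),
}
results = {name: is_feasible(w, t, limits) for name, (w, t) in candidates.items()}

# The feasibility rate is the fraction of candidates that pass every check.
feasibility_rate = sum(ok for ok, _ in results.values()) / len(results)
```

In this toy example one of the two candidates fails on torque, so the rate is 0.5; the reported 0.99 would correspond to 99 of every 100 generated tasks passing.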

The Entropy of Exploration: Diversity and Self-Correction

RoboGene utilizes Least Frequently Used Sampling (LFUS) as a core component of its task generation process to actively increase task diversity. LFUS operates by tracking the frequency with which specific objects and skills are incorporated into generated tasks. During task creation, LFUS prioritizes the selection of objects and skills that have been used least often, effectively shifting the distribution away from commonly explored areas of the task space. This proactive approach ensures continuous exploration of underrepresented combinations, resulting in a task space currently containing 719 unique objects and 108 unique skills, and mitigating potential biases towards frequently utilized elements.
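The sampling rule described above can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not the paper's implementation: the `LFUSampler` class, the random tie-breaking, and the tiny object and skill lists are all hypothetical.

```python
import random
from collections import Counter


class LFUSampler:
    """Pick the least frequently used items, breaking ties at random."""

    def __init__(self, items, seed=None):
        self.counts = Counter({item: 0 for item in items})
        self.rng = random.Random(seed)

    def sample(self, k=1):
        # Shuffle first so items with equal counts are ordered randomly,
        # then stable-sort by usage count and take the k least used.
        pool = list(self.counts)
        self.rng.shuffle(pool)
        pool.sort(key=lambda item: self.counts[item])
        chosen = pool[:k]
        for item in chosen:
            self.counts[item] += 1
        return chosen


objects = LFUSampler(["mug", "bottle", "skewer", "sponge"], seed=0)
skills = LFUSampler(["pick", "pour", "wipe"], seed=0)

# Each task seed pairs one skill with two objects.
tasks = [(skills.sample()[0], objects.sample(2)) for _ in range(6)]
```

Because the least used items are always chosen first, usage counts can never drift more than one apart, which is what keeps rarely seen objects and skills in circulation. After the six draws here, every skill has been used twice and every object three times.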

RoboGene’s Self-Reflection Mechanism operates as a pre-execution validation process for generated tasks. This mechanism utilizes a rule-based system and constraint checking to identify potential inconsistencies, such as physically impossible object arrangements or actions requiring non-existent tools. Identified issues trigger automated corrections; for example, repositioning objects to ensure stability or substituting requested tools with available alternatives. This internal scrutiny minimizes the occurrence of failed execution attempts due to task definition errors, increasing overall system efficiency and reducing reliance on external feedback loops for basic error correction.
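As a rough illustration of rule-based checking with automated correction, the sketch below handles only the "non-existent tool" case mentioned above. The `reflect` function, the task dictionary layout, and the substitution table are assumptions made for this example and are not taken from the paper.

```python
def reflect(task, available_tools, substitutes):
    """Check a task's tool against what is available.

    Returns (corrected_task, notes). corrected_task is None when the
    task cannot be repaired with the known substitutes.
    """
    fixed = dict(task)  # work on a copy so the original task is untouched
    notes = []
    tool = task.get("tool")
    if tool is not None and tool not in available_tools:
        # Take the first listed substitute that is actually available.
        alt = next((t for t in substitutes.get(tool, []) if t in available_tools), None)
        if alt is None:
            notes.append(f"rejected: no available substitute for {tool}")
            return None, notes
        fixed["tool"] = alt
        notes.append(f"replaced {tool} with {alt}")
    return fixed, notes


available = {"spoon", "tongs"}
substitutes = {"whisk": ["fork", "spoon"]}  # hypothetical substitution table

task = {"skill": "stir", "object": "soup", "tool": "whisk"}
fixed_task, notes = reflect(task, available, substitutes)
```

Here the unavailable whisk is swapped for a spoon, while a task whose tool has no listed substitute is rejected outright instead of being sent to the robot.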

RoboGene’s Long-Term Memory Module functions as a repository for data derived from both human feedback and analyses of failed task executions. This module systematically consolidates information regarding task characteristics, environmental factors, and execution outcomes. The resulting dataset, encompassing 719 unique objects and 108 distinct skills, is then leveraged to inform and refine subsequent task generation, prioritizing the creation of feasible and diverse challenges. This iterative process of data consolidation and application enables RoboGene to adapt and improve its task generation capabilities over time, moving beyond purely random exploration.
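One simple way to picture such a memory is a store of lessons keyed by skill and object, consulted before each new task is generated. The sketch below is a hypothetical simplification: the `LongTermMemory` class, its `record` and `recall` methods, and the example lessons are invented for illustration and say nothing about how the paper actually stores or retrieves feedback.

```python
from collections import defaultdict


class LongTermMemory:
    """Store lessons from human feedback and failed executions."""

    def __init__(self):
        self._lessons = defaultdict(list)

    def record(self, skill, obj, source, lesson):
        # In this sketch, source is either "human" or "failure".
        self._lessons[(skill, obj)].append((source, lesson))

    def recall(self, skill, obj):
        """Lessons for this exact pair first, then ones sharing the skill or the object."""
        exact = list(self._lessons.get((skill, obj), []))
        related = [
            entry
            for (s, o), entries in self._lessons.items()
            if (s == skill) != (o == obj)  # exactly one of the two matches
            for entry in entries
        ]
        return exact + related


memory = LongTermMemory()
memory.record("pour", "mug", "human", "keep the mug upright")
memory.record("pour", "bottle", "failure", "cap must be removed first")
memory.record("wipe", "table", "human", "use the sponge")

hints = memory.recall("pour", "mug")
```

The recalled hints could then be attached to the next generation request, so that earlier corrections shape new tasks involving the same skill or object.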

The Inevitable Regression to the Mean: Benchmarks and Limits

RoboGene distinguishes itself through a demonstrated capacity to autonomously generate a wide range of robot tasks that are not only varied but also physically realizable. Rigorous benchmarking reveals a significant performance advantage over current state-of-the-art baselines, including the large language models GPT-4o and Gemini 2.5 Pro and the vision-language-action model π0. Across five distinct tasks, RoboGene achieves an average success rate of 48%, a notable improvement compared to baseline datasets and established methodologies. This capability suggests a substantial step forward in robotic autonomy, allowing for the creation of complex scenarios without extensive human intervention and paving the way for robots capable of adapting to dynamic and unpredictable environments.

RoboGene’s core functionality hinges on a sophisticated Vision-Language-Action Model, which bridges the gap between abstract task descriptions and concrete robotic execution. This model doesn’t simply understand commands; it interprets visual input, deciphers natural language instructions, and then translates those into a sequence of actionable commands for a robot. The system effectively decomposes complex goals into a series of low-level motor controls, allowing the robot to perceive its environment, understand the desired outcome, and autonomously plan a path to completion. This translation process is crucial, enabling RoboGene to not only generate novel tasks but also to reliably execute them in a physical setting, marking a significant advancement in robotic autonomy and task generalization.

RoboGene demonstrates a marked improvement in robotic task completion, specifically showcased by its performance on the DFR-GrillSkewers challenge. The system achieved a 30% success rate when translating generated tasks into executable robot actions, utilizing a Vision-Language-Action (VLA) policy. This outcome represents a substantial leap forward when contrasted with datasets built from human curation, which currently attain only a 5% success rate on the same task. This sixfold gap highlights RoboGene’s capacity to autonomously generate and execute complex robotic procedures with greater reliability, suggesting a pathway towards more efficient and adaptable robotic systems.

The pursuit of robust robotic systems, as demonstrated by RoboGene’s agentic task generation, echoes a fundamental truth: control is an illusion. This framework doesn’t build tasks; it cultivates a diverse ecosystem of possibilities, allowing the Vision-Language-Action model to adapt and refine itself through iterative interaction with a physically grounded environment. Vinton Cerf observed, “The Internet treats everyone the same. That’s its power.” Similarly, RoboGene’s diversity-driven approach doesn’t predefine success, but provides the conditions for emergent capabilities. Every dependency (on simulated physics, on the initial distribution of tasks) is a promise made to the past, but the system’s capacity for self-reflection allows it to renegotiate those promises over time, ultimately fixing itself through experience.

What Seeds Will Sprout?

The pursuit of automated task generation, as exemplified by RoboGene, isn’t about building a better engine for creating data. It’s about cultivating a more robust garden. Each generated task is a seed, and the diversity encouraged by this framework speaks to an understanding that monoculture – a reliance on a narrow range of training scenarios – breeds brittleness. The real challenge lies not in maximizing the quantity of seeds, but in understanding the conditions that allow for flourishing, even in unexpected soil.

Current approaches still operate under the assumption of a ‘correct’ task, a goal state to be achieved. This is a fleeting comfort. The world rarely conforms to neat definitions. Future work should embrace the inherent ambiguity of physical interaction, allowing for tasks that are ill-defined, partially achievable, or even intentionally frustrating. A system that can learn from failure, from tasks it cannot complete, will prove far more adaptable than one perpetually chasing success.

Resilience won’t be found in isolating components, striving for perfect modularity. It will emerge from the forgiveness between them: the capacity to gracefully degrade when faced with the inevitable imperfections of the physical world. The true measure of these frameworks won’t be their ability to generate tasks, but their ability to learn from the tasks that go awry.

Original article: https://arxiv.org/pdf/2602.16444.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-19 12:56