Author: Denis Avetisyan

A new agentic AI framework, GRACE, is automating the complex process of designing and simulating physics experiments, promising to accelerate scientific discovery.

GRACE autonomously designs detector configurations and evaluates experimental outcomes using Monte Carlo simulation and a knowledge graph.

Designing optimal experiments in high-energy and nuclear physics is often constrained by intuition and manual iteration, which limits the exploration of potentially superior configurations. The paper ‘GRACE: an Agentic AI for Particle Physics Experiment Design and Simulation’ introduces an agentic AI framework that autonomously proposes, simulates, and evaluates modifications to detector setups. GRACE frames experimental design as a constrained search problem governed by physical law and uses Monte Carlo simulation to evaluate and optimize candidate designs. Could this approach open new avenues for simulation-driven scientific reasoning and accelerate discovery in complex instrumentation?

The Imperative of Parameter Space Exploration

Designing a high-energy physics experiment is hard because of the sheer number of possibilities to consider. Researchers navigate a vast parameter space (particle beam energies, detector configurations, data acquisition strategies) in which even slight adjustments can change results dramatically. These parameters are not independent: accelerator capabilities, detector limitations, and fundamental physics impose intricate constraints that couple them. For instance, maximizing the signal from a rare particle decay might require a specific beam energy, but that same energy could also raise background noise, forcing careful optimization of detector shielding and trigger thresholds. This interplay calls for innovative experimental setups and also for computational tools that can explore many candidate designs and identify the most promising ones.

Historically, optimizing high-energy physics experiments has been a largely manual process that demands that physicists adjust countless parameters and iteratively refine experimental setups based on observed results. This approach has yielded valuable insights, but it struggles to keep pace with modern experiments, which generate data at unprecedented rates and involve far more intricate instrumentation. Traditional tuning becomes slow and inefficient, and the time it takes can become a significant bottleneck that delays discovery and leaves expensive experimental facilities underexploited. Researchers are therefore seeking automated, computationally efficient strategies for navigating the large parameter spaces these investigations involve.

Optimizing experimental designs in high-energy physics presents a substantial computational hurdle due to the inherent need to balance multiple, often conflicting, objectives. Researchers aren’t simply seeking a single ‘best’ configuration; instead, they must navigate a complex landscape where maximizing data yield, minimizing background noise, and adhering to strict hardware limitations are all crucial. This necessitates algorithms capable of exploring vast parameter spaces and identifying solutions that represent optimal trade-offs, rather than absolute maxima. The challenge isn’t merely one of computational power, but of developing intelligent search strategies that can efficiently sift through possibilities and converge on designs that satisfy a constellation of demanding criteria. Such approaches often leverage techniques from fields like multi-objective optimization and machine learning, allowing for automated exploration and refinement of experimental layouts to unlock the full potential of complex scientific instruments.
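Pareto dominance is one standard way to formalize "optimal trade-offs rather than absolute maxima." Under this rule, a design survives only if no other design is at least as good on every objective and strictly better on at least one. The sketch below assumes two objectives with made-up values: signal yield, which should be maximized, and background, which should be minimized. The `Design` fields and helper names are hypothetical. The code illustrates the concept and is not taken from GRACE.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Design:
    """A candidate detector configuration scored on two objectives (hypothetical fields)."""
    name: str
    signal_yield: float  # higher is better
    background: float    # lower is better


def dominates(a: Design, b: Design) -> bool:
    """True if `a` is no worse than `b` on both objectives and strictly better on one."""
    no_worse = a.signal_yield >= b.signal_yield and a.background <= b.background
    strictly_better = a.signal_yield > b.signal_yield or a.background < b.background
    return no_worse and strictly_better


def pareto_front(designs: list[Design]) -> list[Design]:
    """Keep only designs that no other design dominates (O(n^2), fine for small sets)."""
    return [d for d in designs if not any(dominates(o, d) for o in designs if o is not d)]


if __name__ == "__main__":
    candidates = [
        Design("A", signal_yield=100.0, background=10.0),
        Design("B", signal_yield=120.0, background=15.0),
        Design("C", signal_yield=90.0, background=12.0),  # dominated by A
        Design("D", signal_yield=130.0, background=30.0),
    ]
    for d in pareto_front(candidates):
        print(d.name)  # prints A, B, D (one per line)
```

None of the surviving designs is "best." Each represents a different compromise between yield and background, and choosing among them requires a further judgment.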

GRACE: An Autonomous Agent for Experimental Refinement

The GRACE framework implements an autonomous agent designed to automate aspects of experimental design within simulated environments. This agent operates by directly interacting with and manipulating parameters within the simulation, eliminating the need for constant human intervention. Unlike traditional experimental design workflows requiring manual iteration and analysis, GRACE functions as a self-directed system capable of proposing, executing, and evaluating experimental setups. The agent’s native operation within the simulation environment allows for rapid prototyping and testing of designs without the constraints and costs associated with physical experimentation, enabling a significantly accelerated research cycle.
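The paragraph above says the agent manipulates simulation parameters directly. One plausible representation treats each proposal as a structured edit applied to a copy of the baseline configuration. This is sketched under our own assumptions: the `ParameterEdit` action format, the `apply_edits` helper, and the parameter names are hypothetical and are not GRACE's interface.

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class ParameterEdit:
    """One proposed change to a simulation parameter (hypothetical action format)."""
    key: str
    value: Any
    rationale: str = ""


def apply_edits(config: dict[str, Any], edits: list[ParameterEdit]) -> dict[str, Any]:
    """Return a new configuration with the edits applied, leaving the baseline untouched.

    Unknown keys are rejected so the agent cannot silently invent parameters
    that the simulation does not understand.
    """
    updated = dict(config)
    for edit in edits:
        if edit.key not in updated:
            raise KeyError(f"unknown simulation parameter: {edit.key!r}")
        updated[edit.key] = edit.value
    return updated


if __name__ == "__main__":
    # Illustrative values only; not taken from any real detector description.
    baseline = {"n_photosensors": 30, "tpc_radius_cm": 18.0, "wls_coating": True}
    proposal = [ParameterEdit("n_photosensors", 40, "light collection looks coverage-limited")]
    candidate = apply_edits(baseline, proposal)
    print(candidate["n_photosensors"], baseline["n_photosensors"])  # 40 30
```

Keeping the baseline immutable makes each proposal cheap to discard when its simulated performance turns out worse.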

The GRACE framework employs a Knowledge Graph as its central mechanism for representing and enforcing constraints during experimental design. This graph isn’t simply a database; it’s a structured representation of relationships between physical quantities, components, and established scientific principles. Nodes within the graph represent entities – materials, forces, geometric parameters – while edges define the relationships between them, such as functional dependencies or physical limitations. By encoding domain expertise – including established physics equations and known material properties – within the Knowledge Graph, GRACE ensures that generated experimental designs adhere to physically plausible configurations and avoid scientifically invalid parameter combinations. This allows the agent to explore the design space more efficiently, focusing on solutions that are inherently viable and meaningful from a scientific perspective, rather than requiring post-hoc validation against physical laws.
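To make the idea of graph-encoded constraints concrete, here is a toy stand-in. Nodes carry allowed ranges, and edges carry rules that relate two parameters. Any configuration that violates either kind of check is rejected before simulation. The node names, limits, and the single "photosensors must fit on the end caps" rule are all hypothetical and chosen only to illustrate the mechanism. GRACE's actual knowledge graph format is not described here.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

Config = dict[str, Any]
Rule = Callable[[Config], bool]


@dataclass
class KnowledgeGraph:
    """Toy constraint graph: nodes carry allowed ranges, edges carry cross-parameter rules."""
    ranges: dict[str, tuple[float, float]] = field(default_factory=dict)
    rules: dict[tuple[str, str], tuple[str, Rule]] = field(default_factory=dict)

    def add_node(self, name: str, lo: float, hi: float) -> None:
        self.ranges[name] = (lo, hi)

    def add_edge(self, a: str, b: str, description: str, rule: Rule) -> None:
        self.rules[(a, b)] = (description, rule)

    def violations(self, config: Config) -> list[str]:
        """List violated constraints; an empty list means the design passes every check."""
        problems = [
            f"{name}={config.get(name)} outside [{lo}, {hi}]"
            for name, (lo, hi) in self.ranges.items()
            if config.get(name) is None or not lo <= config[name] <= hi
        ]
        if problems:
            # Cross-parameter rules assume every node is present and in range.
            return problems
        return [f"{a} -> {b}: {desc}" for (a, b), (desc, rule) in self.rules.items() if not rule(config)]


def build_example_graph() -> KnowledgeGraph:
    # Hypothetical nodes and limits, chosen only to illustrate the mechanism.
    kg = KnowledgeGraph()
    kg.add_node("n_photosensors", 1, 200)
    kg.add_node("sensor_area_cm2", 1.0, 100.0)
    kg.add_node("endcap_area_cm2", 100.0, 5000.0)
    kg.add_edge(
        "n_photosensors", "endcap_area_cm2",
        "photosensors must physically fit on the end caps",
        lambda c: c["n_photosensors"] * c["sensor_area_cm2"] <= c["endcap_area_cm2"],
    )
    return kg


if __name__ == "__main__":
    kg = build_example_graph()
    ok = {"n_photosensors": 40, "sensor_area_cm2": 45.0, "endcap_area_cm2": 2000.0}
    print(kg.violations(ok))                            # []
    print(len(kg.violations({**ok, "n_photosensors": 60})))  # 1 (60 * 45 cm^2 exceeds 2000 cm^2)
```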

GRACE employs Monte Carlo simulation as a core component of its iterative design process. The technique runs many simulations with randomized parameter variations to evaluate design performance statistically across a broad range of conditions. By quantifying the probability distributions of key performance indicators, GRACE can identify optimal parameter settings without relying on gradient-based optimization or manual tuning. The agent uses these results to build a probabilistic model of the design space. That model lets it explore and refine designs intelligently, automating experimental optimization and overcoming the limitations of traditional manual methods.
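A toy version of this statistical evaluation is sketched below using a deliberately simple light-collection model:

- Each event emits a Poisson-distributed number of photons.
- Each photon is detected with probability equal to geometric coverage times a quantum efficiency.
- The quantum efficiency is randomized per event to mimic varying operating conditions.

The function name, the model, and all default values are hypothetical. A real detector study would use a full particle-transport simulation.

```python
import numpy as np


def mc_light_yield(
    coverage: float,
    n_events: int = 20_000,
    photons_per_event: float = 500.0,
    qe_range: tuple[float, float] = (0.25, 0.35),
    seed: int = 0,
) -> tuple[float, float]:
    """Toy Monte Carlo estimate of detected photoelectrons per event.

    Returns (mean, standard error of the mean) over `n_events` simulated events.
    """
    rng = np.random.default_rng(seed)
    # Randomized operating condition: photosensor quantum efficiency varies event to event.
    qe = rng.uniform(qe_range[0], qe_range[1], size=n_events)
    # Number of scintillation photons emitted in each event.
    emitted = rng.poisson(photons_per_event, size=n_events)
    # Each photon is detected independently with probability coverage * qe.
    detected = rng.binomial(emitted, coverage * qe)
    mean = detected.mean()
    sem = detected.std(ddof=1) / np.sqrt(n_events)
    return float(mean), float(sem)


if __name__ == "__main__":
    for cov in (0.20, 0.27):
        mean, sem = mc_light_yield(cov)
        print(f"coverage={cov:.2f}: {mean:.1f} +/- {sem:.2f} photoelectrons/event")
```

In this toy, light yield scales linearly with coverage by construction, so it cannot reproduce geometry-dependent effects. It only shows how repeated randomized runs produce a mean and an uncertainty that an optimizer can compare between designs.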

Simulation-Driven Reasoning: Beyond Validation

Simulation-Driven Reasoning, central to GRACE’s functionality, moves beyond traditional simulation use as a simple validation step. Instead, simulation outputs directly inform and drive iterative design improvements; the results are not merely assessed for correctness, but are algorithmically processed to propose modifications to the experimental design. This creates a closed-loop system where simulated performance is continuously optimized through successive design iterations, allowing for automated exploration of the design space and identification of configurations that maximize desired outcomes. This contrasts with conventional approaches where designs are manually adjusted based on simulation feedback, offering a potentially faster and more comprehensive optimization process.
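Below is a minimal sketch of such a closed loop, assuming a greedy accept-if-better rule. The `simulate` and `propose` callables are hypothetical stand-ins. In GRACE, the proposal comes from the agent and the score from a detector simulation; here both are toy functions, and the optimum at coverage 0.6 is invented for the example.

```python
import random
from typing import Callable

Config = dict[str, float]


def closed_loop(
    baseline: Config,
    simulate: Callable[[Config], float],
    propose: Callable[[Config, random.Random], Config],
    iterations: int = 50,
    seed: int = 0,
) -> tuple[Config, float, list[float]]:
    """Simulation-in-the-loop refinement: each simulated result decides the next design.

    A proposal is kept only if its simulated score beats the current best,
    so the recorded history of best scores never decreases.
    """
    rng = random.Random(seed)
    best, best_score = dict(baseline), simulate(baseline)
    history = [best_score]
    for _ in range(iterations):
        candidate = propose(best, rng)
        score = simulate(candidate)
        if score > best_score:
            best, best_score = candidate, score
        history.append(best_score)
    return best, best_score, history


def toy_simulate(cfg: Config) -> float:
    # Hypothetical performance curve peaking at coverage 0.6.
    return 1.0 - (cfg["coverage"] - 0.6) ** 2


def toy_propose(cfg: Config, rng: random.Random) -> Config:
    # Hypothetical agent step: nudge coverage by a small random amount, clamped to [0, 1].
    step = rng.uniform(-0.05, 0.05)
    return {**cfg, "coverage": min(1.0, max(0.0, cfg["coverage"] + step))}


if __name__ == "__main__":
    best, score, history = closed_loop({"coverage": 0.3}, toy_simulate, toy_propose, iterations=200)
    print(f"best coverage {best['coverage']:.3f}, score {score:.4f}")
```

Greedy acceptance is the simplest choice. With a noisy Monte Carlo score, a more careful loop would re-run promising candidates before accepting them, so that statistical fluctuations are not mistaken for improvements.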

GRACE uses design optimization algorithms that systematically adjust experimental parameters, guided by a defined set of performance metrics. These metrics quantify key aspects of detector performance, such as light yield, background rejection efficiency, and energy resolution. They serve as objective functions, so different design configurations can be evaluated automatically and improvements identified. The optimization algorithms then modify the experimental setup (detector material, geometry, or component placement) to maximize these metrics. In the reported simulations, the resulting designs match or exceed those created manually.
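The paragraph above does not say how the individual metrics are combined into one objective. One common choice, assumed here purely for illustration, is a weighted sum of each metric normalized to a reference design. Energy resolution is inverted because a smaller value is better. The `Metrics` fields, the `objective` function, and the default weights are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metrics:
    light_yield: float           # e.g. photoelectrons per keV; higher is better
    background_rejection: float  # rejection factor; higher is better
    energy_resolution: float     # fractional sigma/E; lower is better


def objective(m: Metrics, ref: Metrics, weights: tuple[float, float, float] = (0.4, 0.4, 0.2)) -> float:
    """Weighted score relative to a reference design; equals 1.0 for the reference itself
    when the weights sum to 1."""
    w_ly, w_bg, w_res = weights
    return (
        w_ly * m.light_yield / ref.light_yield
        + w_bg * m.background_rejection / ref.background_rejection
        + w_res * ref.energy_resolution / m.energy_resolution  # inverted: smaller is better
    )


if __name__ == "__main__":
    ref = Metrics(light_yield=10.0, background_rejection=100.0, energy_resolution=0.05)
    brighter = Metrics(light_yield=20.0, background_rejection=100.0, energy_resolution=0.05)
    print(round(objective(brighter, ref), 3))  # 1.4
```

A weighted sum hides the trade-offs that a Pareto analysis keeps visible. The weights therefore encode which compromise the designer prefers, and changing them can change which design "wins."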

Simulation results indicate that GRACE produces detector designs whose performance is comparable to, and often better than, designs created by human experts. For the DarkSide-50 detector configuration, GRACE's optimization yielded a 3.05x improvement in light yield. The gain came from a 33% increase in the number of photomultiplier tubes (PMTs), a change identified through iterative simulation and optimization within the framework.
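The accompanying figure caption calls this gain disproportionately large. A quick comparison against a naive baseline shows why. Assume, purely for illustration, that light yield scales linearly with photosensor count $N$. A 33% increase in photosensors would then predict about a 1.33x gain:

[latex]\left.\frac{LY_{\text{new}}}{LY_{\text{old}}}\right|_{\text{linear}} = \frac{N_{\text{new}}}{N_{\text{old}}} = 1.33, \qquad \left.\frac{LY_{\text{new}}}{LY_{\text{old}}}\right|_{\text{simulated}} = 3.05, \qquad \frac{3.05}{1.33} \approx 2.3[/latex]

The simulated gain is roughly 2.3 times the linear expectation. That is consistent with the caption's reading that the original configuration was limited by photosensor coverage.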

Expanding the Horizon: Applications in Particle Physics

GRACE is being applied to experiments that use liquid argon time projection chamber (TPC) technology, a key approach in the search for dark matter and the study of neutrinos. Targets include DarkSide-50, designed to detect weakly interacting massive particles (WIMPs), and ProtoDUNE, a prototype for the Deep Underground Neutrino Experiment (DUNE). By simulating alternative detector configurations and signal processing strategies, GRACE helps researchers maximize these instruments' sensitivity to rare event signatures. Because the framework adapts to different detectors, it can target specific components, such as photosensor count and placement, in current and future liquid argon TPC experiments.

More generally, GRACE refines both the detector design and the data analysis pipeline of experiments searching for the universe's most elusive particles. It systematically explores detector configurations and signal processing algorithms to find arrangements that raise the probability of observing exceedingly rare events. The same search improves the separation of genuine signals from background. This lets researchers extract the most information from limited data, which is crucial for experiments probing the nature of neutrinos and dark matter.

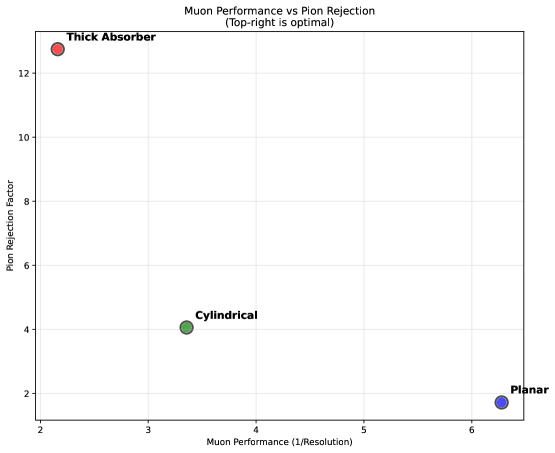

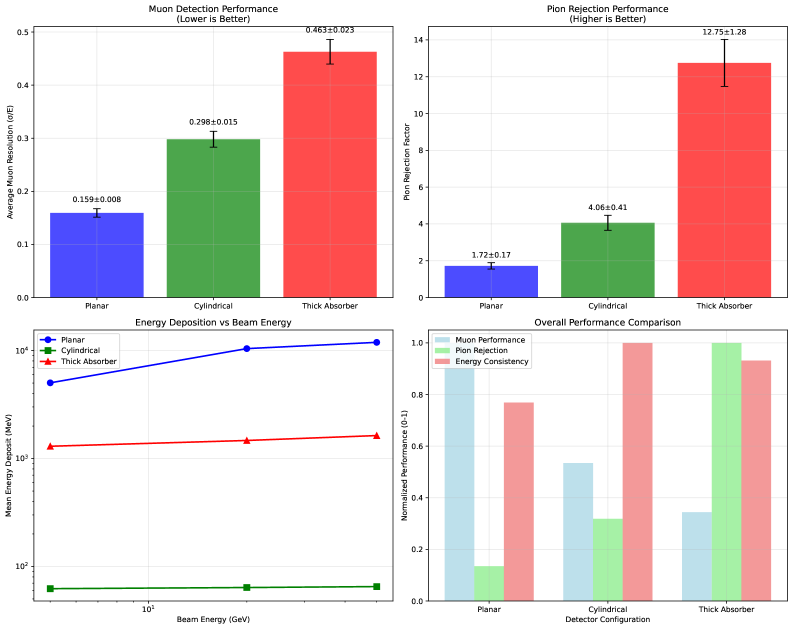

GRACE also refines individual detector components used in dark matter and neutrino searches. In the DarkSide-50 study, the optimized photosensor configuration raised photon detection efficiency by 2.02x. The framework's optimization also covers light-collection technologies such as wavelength shifters and X-ARAPUCA light traps, the photon detection technology developed for DUNE and tested in ProtoDUNE. With a thick-absorber configuration, the optimized design improved the rejection of pion backgrounds to a factor of 12.75, a 7.4x gain over a traditional planar design. These results point to GRACE's potential for current and future liquid argon TPC experiments such as ProtoDUNE and DUNE.

![Increasing the photosensor count by 33% resulted in a disproportionately large improvement in light yield [latex]3.05\times[/latex] and detection efficiency [latex]2.02\times[/latex], demonstrating that the original detector configuration was limited by photosensor coverage.](https://arxiv.org/html/2602.15039v1/x1.png)

The development of GRACE reflects a commitment to establishing verifiable foundations for artificial intelligence in scientific exploration. This pursuit aligns with Immanuel Kant's observation: “All our knowledge begins with the senses, proceeds then to the understanding, and ends with reason.” By autonomously designing and simulating particle physics experiments, GRACE does not merely process data; it tests hypotheses through a rigorous, simulation-driven process. The agentic framework sets clear boundaries for experimentation, enabling a systematic approach to knowledge discovery. Each proposed detector configuration is subject to logical evaluation, a process that mirrors the pursuit of categorical understanding. In this it echoes the mathematical rigor that provable results require.

The Path Forward

GRACE is a working demonstration, yet it mainly illuminates the chasm between algorithmic function and genuine scientific understanding. Its ability to navigate the parameter space of detector configurations, however efficient, depends fundamentally on the fidelity of the underlying Monte Carlo simulations. A perfect agent, it is not; its ‘discoveries’ are bounded by the approximations inherent in those simulations. The true test lies not in reproducing known physics but in posing questions that expose the limitations of current theoretical frameworks, a task that demands more than iterative optimization.

Future efforts must address the fragility of the knowledge graph. The system's reasoning, however elegant, remains susceptible to the biases and incompleteness of the data upon which it is built. A truly autonomous investigator requires a capacity for self-critique, the ability to identify and resolve inconsistencies within its own knowledge base. This necessitates a move beyond passive data assimilation toward active hypothesis generation and falsification: a demand for intellectual courage, if such a term can be applied to a machine.

Ultimately, the value of such systems resides not in replacing human physicists, but in forcing a rigorous re-evaluation of the scientific method itself. Can the pursuit of knowledge be fully automated? Or does the very act of discovery require a subjective element, an intuitive leap beyond the constraints of logic and computation? The answer, one suspects, will prove more philosophical than mathematical.

Original article: https://arxiv.org/pdf/2602.15039.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-18 08:19