Author: Denis Avetisyan

A new study investigates how providing reviewers with AI-generated suggestions affects their evaluation process and perceptions of intellectual contribution.

Researchers examine the interplay between human expertise and AI assistance in academic peer review, focusing on reviewer responses to AI-driven feedback.

Despite growing integration of artificial intelligence in academic research, its role in peer review remains contentious, raising questions about fairness and the value of human expertise. This study, ‘What happens when reviewers receive AI feedback in their reviews?’, investigates the first live deployment of an AI feedback tool at ICLR 2025, examining how reviewers interact with and perceive AI-generated suggestions. Findings reveal a nuanced response: reviewers acknowledge potential improvements while voicing concerns about preserving the core intellectual work of evaluation. How can we best design AI-assisted reviewing systems that enhance quality without diminishing reviewer agency and responsibility?

The Strained Foundation of Scholarly Assessment

The cornerstone of scientific validity, peer review, increasingly demands substantial cognitive resources from its participants. Each manuscript requires not only expertise in a narrow field but also dedicated time for careful evaluation of methodology, data analysis, and contextual relevance – a process that can easily overwhelm even the most seasoned researchers. This cognitive load isn’t merely a matter of time commitment; it directly impacts the quality of the assessment, potentially leading to overlooked errors or superficial critiques. Furthermore, the escalating volume of submissions, coupled with limited reviewer availability, creates a system under strain, jeopardizing the long-term sustainability of this vital process and raising concerns about maintaining rigorous standards in scientific publishing. Consequently, the very foundation of knowledge creation is challenged by the pressures placed upon those who safeguard its integrity.

The escalating volume of scientific submissions presents a fundamental challenge to the traditional peer review system. Historically reliant on manual assessment by individual experts, the process struggles to keep pace with the exponential growth of research output. This creates bottlenecks, extending review timelines and potentially compromising the depth of scrutiny each manuscript receives. While the core principle of expert evaluation remains vital, existing methods lack the scalability to efficiently manage the sheer number of papers requiring assessment. Consequently, reviewers face mounting pressure, often juggling numerous requests simultaneously, which can lead to rushed evaluations and a diminished capacity for thoroughness – ultimately impacting the reliability and integrity of published research. The current infrastructure, designed for a different era of scientific output, demands innovative solutions to ensure continued quality control without overburdening the very experts upon whom the system depends.

The increasing demands placed upon peer reviewers are demonstrably impacting the quality and timeliness of scientific assessment. A heavy workload, coupled with continually rising submission volumes, often forces reviewers to prioritize speed over thoroughness, potentially leading to superficial evaluations of research. This isn’t necessarily a matter of diminished expertise, but rather a consequence of cognitive overload; reviewers may struggle to dedicate sufficient attention to each manuscript, increasing the risk of overlooking critical flaws or nuances. Consequently, delayed feedback becomes common, hindering the progress of both accepted and rejected studies and contributing to a cycle of stress within the scientific community. This situation threatens the very foundation of rigorous scientific inquiry, as the reliability of published research depends on careful and considered peer assessment.

Recognizing the escalating demands on scientific peer reviewers, research increasingly focuses on developing assistive technologies designed to enhance, not replace, human expertise. These tools aim to alleviate cognitive burdens by automating tasks like initial plagiarism checks, identifying potential methodological flaws, or summarizing prior work relevant to the submission. Crucially, the design philosophy centers on preserving the reviewer’s critical judgment; algorithms are intended to flag areas requiring focused attention, offering supporting evidence, but never dictating a final assessment. The goal is to empower reviewers to navigate increasingly complex literature with greater efficiency and confidence, ultimately bolstering the reliability and speed of scientific discovery without sacrificing the nuanced evaluation that only a human expert can provide.

Augmenting Expertise: AI Assistance at ICLR 2025

The ICLR 2025 review process incorporated an AI Feedback Tool designed to augment the standard peer review workflow. This tool operated within the OpenReview platform, providing reviewers with immediate suggestions as they composed their assessments. The AI analyzed review text in real-time, flagging areas where feedback lacked specificity, clarity, or constructive detail. Suggestions included prompts for elaboration, identification of potentially ambiguous phrasing, and recommendations for framing critiques in a more actionable manner. The tool was not intended to replace human judgment, but rather to serve as an assistive technology, encouraging reviewers to produce more comprehensive and helpful evaluations.
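To make the idea of flagging unspecific feedback concrete, here is a minimal sketch. It is not the ICLR 2025 tool, whose internals the article does not describe; it swaps in a simple keyword heuristic. The phrase list, the anchor pattern, and the function name `flag_vague_sentences` are all assumptions made for illustration.

```python
import re

# Hypothetical list of phrases that often signal an unsupported critique.
VAGUE_PHRASES = ("not novel", "unclear", "poorly written", "not convincing")

# A sentence counts as "anchored" if it points at something concrete in the paper.
ANCHOR = re.compile(
    r"\b(section|figure|table|equation|theorem|line)\s*\d+", re.IGNORECASE
)


def flag_vague_sentences(review: str) -> list:
    """Return sentences that use a vague phrase without a concrete anchor."""
    # Naive sentence split on terminal punctuation followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", review.strip())
    flagged = []
    for sentence in sentences:
        lowered = sentence.lower()
        is_vague = any(phrase in lowered for phrase in VAGUE_PHRASES)
        if is_vague and not ANCHOR.search(sentence):
            flagged.append(sentence)
    return flagged


review_text = (
    "The method is not novel. "
    "The ablation in Table 2 is unclear because the baseline is missing. "
    "Good paper overall."
)
print(flag_vague_sentences(review_text))
```

In this sketch the second sentence is not flagged because it cites Table 2, which mirrors the article's point that the suggestions push toward specificity rather than toward a particular verdict.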

The AI Feedback Tool for ICLR 2025 was implemented directly within the OpenReview platform, leveraging its existing infrastructure for paper submission, reviewer assignment, and communication. This integration avoided the need for reviewers to access a separate application or transfer data between systems, minimizing disruption to the established review cycle. Specifically, the tool operated as a plugin within the OpenReview interface, providing suggestions and formatting assistance as reviewers composed their assessments. This approach ensured all feedback, including AI-generated suggestions and reviewer edits, remained centrally stored and readily accessible within the platform’s version control system, maintaining a complete audit trail of the review process.

The ICLR 2025 review process prioritized enhancing the quality of feedback to authors, specifically focusing on clarity, specificity, and constructive criticism. This was achieved by encouraging reviewers to move beyond general assessments and provide detailed explanations of both strengths and weaknesses within submissions. The intent was to facilitate more effective author revisions by pinpointing areas needing improvement and offering actionable suggestions. Reviewer guidance emphasized providing concrete examples to support claims, and framing critiques in a manner that encouraged positive engagement and iterative refinement of the research.

The ICLR 2025 review process incorporated automated formatting checks within the AI Feedback Tool to reduce reviewer workload. Specifically, the tool automatically assessed review text for completeness of scoring, presence of summary statements, and adherence to structured feedback guidelines. By handling these superficial, but necessary, formatting tasks, the system aimed to minimize cognitive load on reviewers, enabling them to concentrate analytical effort on the technical merit, novelty, and potential impact of the submitted research. This automation was intended to improve the quality of reviews by shifting reviewer focus from formatting concerns to substantive critique.
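The checks described above (completeness of scoring, presence of a summary, adherence to a structured template) could be sketched roughly as follows. The field names `summary`, `strengths`, `weaknesses`, `rating`, and `confidence` are assumptions; the article does not give the actual review form schema or the tool's implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical required sections of a structured review form.
REQUIRED_SECTIONS = ("summary", "strengths", "weaknesses")


@dataclass
class Review:
    sections: Dict[str, str] = field(default_factory=dict)
    rating: Optional[int] = None       # overall score, if the reviewer gave one
    confidence: Optional[int] = None   # self-reported confidence, if given


def check_format(review: Review) -> List[str]:
    """Return a list of formatting issues; an empty list means none were found."""
    issues = []
    for name in REQUIRED_SECTIONS:
        # A section that is absent or only whitespace counts as missing.
        if not review.sections.get(name, "").strip():
            issues.append(f"missing section: {name}")
    if review.rating is None:
        issues.append("missing score: rating")
    if review.confidence is None:
        issues.append("missing score: confidence")
    return issues


draft = Review(sections={"summary": "Proposes X.", "strengths": "  "}, rating=6)
print(check_format(draft))
```

The function only reports issues and never edits the review, which keeps the final wording and judgment with the reviewer.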

Preserving Agency: Human Oversight in an AI-Assisted System

The AI Feedback Tool was intentionally designed to support, not supplant, human reviewer expertise. This core principle ensured reviewers maintained complete control over the assessment process and final feedback delivered to authors. The tool functioned by providing suggestions intended to inform reviewer judgment, but all decisions regarding the validity and incorporation of these suggestions remained with the individual conducting the review. This approach prioritized the preservation of reviewer agency and aimed to leverage AI as a supportive resource rather than an autonomous evaluator.

The AI Feedback Tool was designed to function as a support system for peer reviewers, not an automated decision-maker. Reviewers maintained complete authority over all aspects of the assessment process; the tool generated suggestions intended to highlight potential areas for improvement, but these were presented as recommendations only. The final evaluation of the manuscript, the determination of its suitability for publication, and the specific feedback communicated to authors remained entirely under the control of the human reviewer, ensuring editorial oversight and preserving the nuanced judgment expected in peer review.

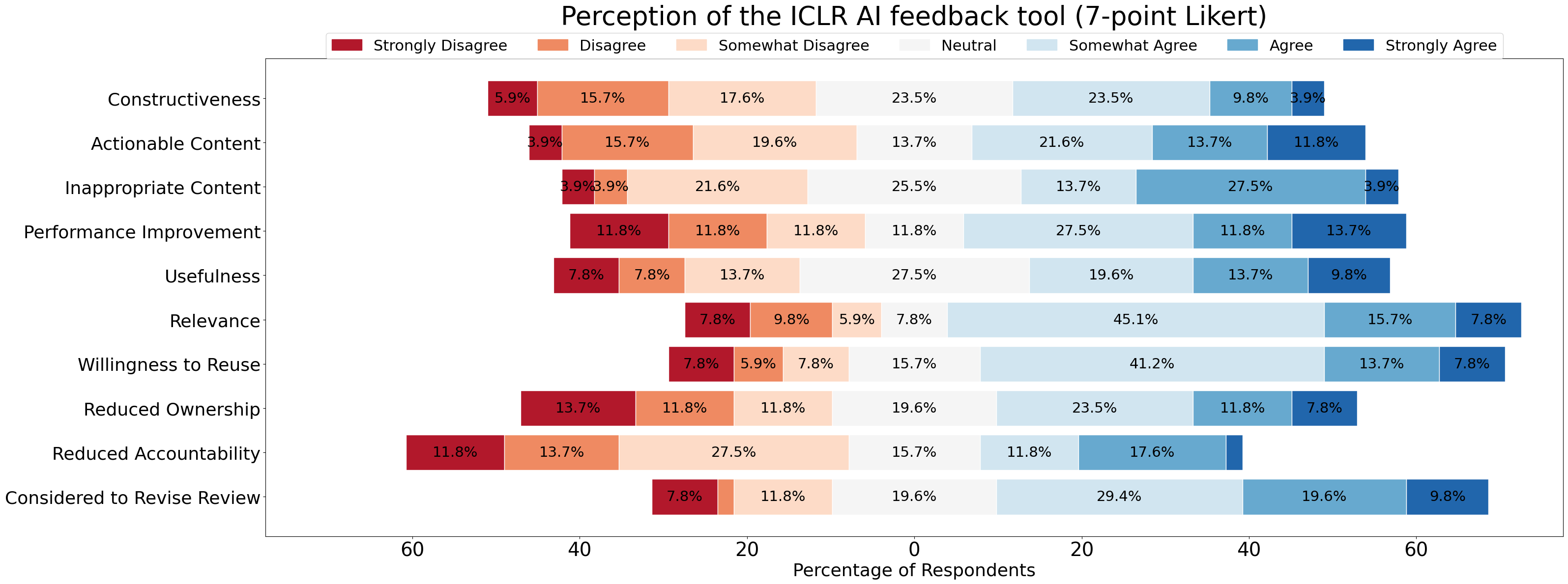

Assessment of the AI Feedback Tool’s impact on review quality involved a comparative analysis of review characteristics before and after its implementation. Data collection included a reviewer survey, achieving a 51% response rate from a total of 92 reviewers. The survey responses form the basis for the quantitative analysis of reviewer perceptions and behavioral changes following exposure to the AI-generated feedback. The results were used to determine the extent to which the tool influenced reviewer decision-making and the overall characteristics of submitted reviews.

Analysis of reviewer behavior following exposure to AI feedback indicates a discrepancy between stated intention and actual revision rates. While 78.4% of reviewers reported an intention to modify their assessments based on the AI’s suggestions, only 56.9% ultimately made edits. Reviewers agreed to a statistically significant degree that the AI feedback was relevant (p = 0.032). Furthermore, a negative correlation (-0.30) was observed between reviewer experience and the propensity to revise, suggesting that less experienced reviewers were more likely to incorporate the AI-generated suggestions into their final reviews.
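The size of the gap between intention and action follows directly from the two reported percentages. The short sketch below works through the arithmetic. The function name is hypothetical, and the follow-through ratio is only meaningful under the assumption that both percentages share the same denominator and that reviewers who edited had also stated an intention to do so, which the article does not confirm.

```python
def intention_action_gap(intended_pct: float, edited_pct: float) -> tuple:
    """Return (gap in percentage points, edited share relative to intended share)."""
    gap_points = round(intended_pct - edited_pct, 1)
    # Assumption: same denominator for both percentages (see lead-in).
    follow_through = round(edited_pct / intended_pct, 3)
    return gap_points, follow_through


gap, ratio = intention_action_gap(78.4, 56.9)
print(f"gap: {gap} percentage points, follow-through ratio: {ratio}")
```

Under those assumptions, the gap is 21.5 percentage points, and the share who edited is roughly 73% of the share who said they would.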

Towards a Sustainable and Equitable Future for Peer Review

The escalating demands of scientific publishing have placed a significant burden on peer reviewers, often leading to burnout and unsustainable workloads. Recognizing this challenge, researchers are increasingly exploring the integration of artificial intelligence to alleviate some of these pressures. AI assistance isn’t intended to replace human judgment, but rather to augment it by automating tasks such as initial manuscript screening, plagiarism detection, and even identifying potential peer reviewers with relevant expertise. By streamlining these processes, AI can free up reviewers to focus on the critical evaluation of research quality, ultimately promoting both efficiency and wellbeing. This shift allows for a more balanced distribution of effort, potentially attracting a wider range of experts to participate in peer review and ensuring the long-term viability of the scholarly assessment process.

The successful integration of artificial intelligence into peer review hinges on robust governance frameworks, and crucially, these must be implemented at the level of individual publishing venues. Such venue-led systems are essential for establishing clear guidelines regarding AI’s role, ensuring transparency in its application (how AI assists, and what decisions remain with human reviewers), and maintaining accountability for any biases or errors that may arise. Without this decentralized yet coordinated approach, the potential for unfair or opaque evaluations increases, eroding trust in the process. Effective governance also requires ongoing monitoring and adaptation, as AI technologies evolve and their impact on peer review becomes better understood, ultimately safeguarding the integrity and equity of scholarly assessment.

The implementation of artificial intelligence within the peer review process isn’t simply about automating tasks; it’s designed to elevate the overall standard of scholarly assessment and accelerate the dissemination of reliable research. By streamlining initial checks for plagiarism and adherence to guidelines, and by offering constructive feedback on clarity and methodology, AI tools free up reviewers to concentrate on the core intellectual merit of submissions. This enhanced efficiency translates to quicker turnaround times for authors, reducing delays in publishing impactful findings. Furthermore, a more thorough and consistent review process fosters greater confidence in published research, benefiting researchers across all disciplines and ultimately strengthening the foundation of scientific knowledge for the wider community. The intended outcome is a virtuous cycle: improved review quality leads to more robust research, which in turn drives further innovation and discovery.

The current scientific landscape faces an increasing challenge: a rapidly expanding volume of research demanding rigorous evaluation. Existing peer review systems, largely reliant on volunteer efforts, struggle to keep pace, creating delays and potential inequities in access to publication. This initiative addresses this critical bottleneck by proposing a scalable framework capable of handling increased submissions without compromising quality. By leveraging technological advancements, the system aims to distribute the workload more efficiently and provide support to reviewers, ultimately fostering a more inclusive and responsive evaluation process. The anticipated outcome is not simply an increase in throughput, but a fundamental shift towards a peer review system that can sustainably support the growth of scientific knowledge and ensure equitable opportunities for researchers worldwide.

The study illuminates a fascinating dynamic within the peer review process: a tension between embracing assistance and preserving intellectual ownership. This resonates with Alan Turing’s observation: “A useful version of the principle of economy is to avoid doing things just because they can be done.” The research demonstrates that while reviewers acknowledge the potential of AI to refine their assessments, they remain hesitant to cede the core evaluative work to a machine. It’s not simply about what AI can do, but about maintaining the human element crucial to rigorous academic evaluation – a point Turing subtly highlights. Infrastructure, in this case the peer review system, should evolve without rebuilding the entire block; AI offers refinement, not replacement.

Where Do We Go From Here?

The study of AI assistance in peer review reveals a predictable, if subtly unsettling, dynamic. Introducing a computational element does not simply add to the existing system; it fundamentally alters the relationships within it. The observed tension between acknowledging improvements suggested by the AI and preserving the perceived intellectual labor of evaluation highlights a core principle: modifying one part of a system triggers a domino effect. The evaluation process isn’t merely about identifying flaws; it’s a performance of expertise, and that performance is now subject to a novel form of comparison.

Future work must move beyond assessing whether AI assistance is helpful and focus on how it reshapes the very nature of scholarly judgment. What are the long-term consequences of offloading aspects of critical analysis to algorithms? Does it lead to a homogenization of thought, or does it free reviewers to focus on more nuanced, creative assessments? These questions necessitate a broader architectural view, one that considers the entire scholarly ecosystem: incentives, reputations, and the evolving definition of intellectual contribution.

Ultimately, the challenge isn’t to optimize AI for peer review, but to understand how the introduction of such tools necessitates a re-evaluation of the entire academic framework. Simplicity, clarity, and a holistic perspective will be crucial, lest we find ourselves patching a system that requires fundamental redesign.

Original article: https://arxiv.org/pdf/2602.13817.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-18 04:59