Author: Denis Avetisyan

A new simulation framework explores how teams of autonomous agents can effectively collaborate to build and sustain a Martian base.

Agent Mars demonstrates how curated communication and hierarchical control improve the efficiency and resilience of multi-agent systems in the context of multi-planetary settlement.

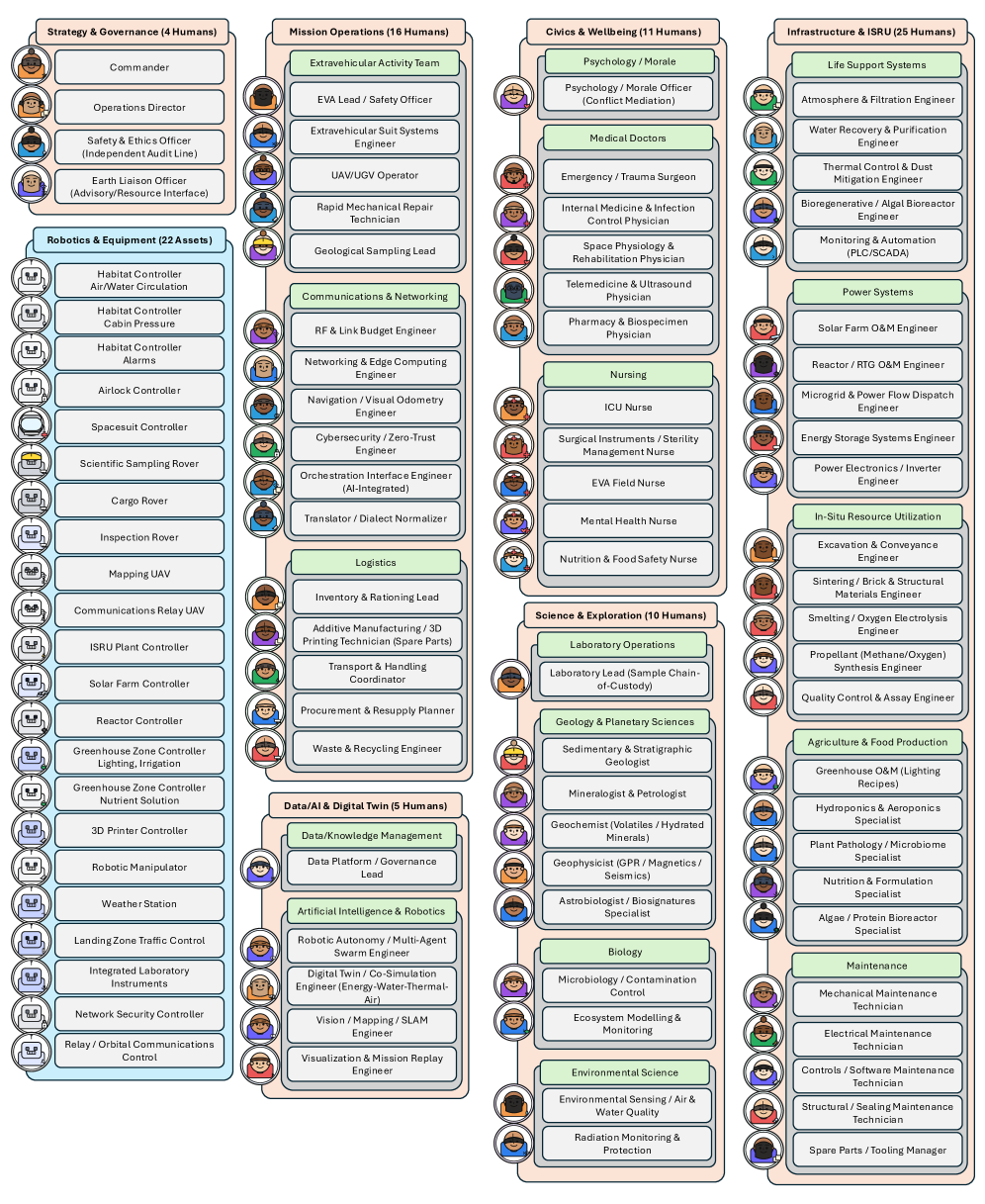

Effective coordination among specialized teams is paramount in complex, safety-critical environments, yet it becomes far harder under communication delays and resource constraints. This challenge is addressed in ‘Agent Mars: Multi-Agent Simulation for Multi-Planetary Life Exploration and Settlement’, which introduces an open-ended simulation framework for studying large-scale multi-agent systems in the context of Mars base operations. The paper reports that curated cross-layer communication and hierarchical control can significantly improve both the efficiency and resilience of these systems, as quantified by the Agent Mars Performance Index. How can these insights inform the design of robust, auditable AI systems for future space exploration and beyond?

Orchestrating Resilience: The Challenge of Martian Base Coordination

The ambition of establishing a truly self-sufficient Martian base presents a logistical challenge unlike any previous human endeavor, demanding an unprecedented level of coordination between numerous specialized agents – robotic construction crews, resource extraction systems, habitat maintenance units, scientific research teams, and life support infrastructure. This isn’t simply a matter of assigning tasks; it requires a dynamic interplay where each agent anticipates the needs of others, resolves conflicts autonomously, and adapts to unforeseen circumstances. Unlike terrestrial projects with readily available oversight, the vast distances involved preclude real-time centralized control, necessitating a system where agents operate with a degree of independence while remaining seamlessly integrated into the overall mission objectives. Success hinges on creating a complex, interwoven network of capabilities, where the failure of one component doesn’t cascade into catastrophic system-wide failure, but is instead mitigated through the resilience and adaptability of the remaining agents.

The vast distances between Earth and Mars introduce a fundamental challenge to controlling any Martian settlement: the unavoidable delay in communication, known as Earth-Mars light-time. This latency, ranging from roughly three to twenty-two minutes each way depending on planetary alignment, makes real-time centralized control from Earth impractical. Imagine a robotic arm malfunctioning during a critical construction task; by the time a signal reaches Earth for analysis and a corrective command returns, the situation could have escalated dramatically, or the window for timely intervention could have closed. This is more than an inconvenience; it is a barrier to real-time decision-making, and it calls for autonomous systems that operate effectively under long delays and limited oversight, with resilience and adaptability built directly into the Martian infrastructure.
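
The delay follows directly from distance divided by the speed of light. The short sketch below works through that arithmetic; the two distances are commonly cited round figures for closest and farthest approach and are assumptions here, not values from the paper. With these inputs the one-way delay comes out at a few minutes at best and a little over twenty minutes at worst.

```python
# Speed of light in km/s (exact by SI definition).
C_KM_PER_S = 299_792.458

# Approximate Earth-Mars distances in km; these round figures are
# assumptions for illustration, not values from the paper.
CLOSEST_KM = 54.6e6
FARTHEST_KM = 401e6


def one_way_light_time_min(distance_km: float) -> float:
    """Return the one-way signal delay in minutes for a given distance."""
    return distance_km / C_KM_PER_S / 60.0


def round_trip_min(distance_km: float) -> float:
    """Return the command-and-response delay in minutes."""
    return 2.0 * one_way_light_time_min(distance_km)


if __name__ == "__main__":
    for label, d in (("closest", CLOSEST_KM), ("farthest", FARTHEST_KM)):
        print(f"{label}: one-way {one_way_light_time_min(d):.1f} min, "
              f"round trip {round_trip_min(d):.1f} min")
```

The round-trip figure is the one that matters for supervision: it is the minimum time between an agent reporting a fault and receiving any instruction from Earth.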

Successfully establishing a permanent Martian settlement demands a paradigm shift in how complex tasks are coordinated. Given the substantial communication delays – often exceeding twenty minutes round trip – between Earth and Mars, traditional command-and-control structures become wholly impractical. Instead, the future of Mars base operations relies on decentralized multi-agent systems, where individual robotic units and habitat components possess a degree of autonomy and can negotiate solutions locally. These systems must be incredibly robust, capable of functioning reliably despite equipment failures, unforeseen environmental challenges, and limited bandwidth for data transmission. Furthermore, adaptability is paramount; the agents need to learn from experience, adjust to changing conditions, and dynamically reconfigure their strategies without requiring constant intervention from Earth. This necessitates advanced algorithms for distributed decision-making, conflict resolution, and resource allocation, allowing the Mars base to operate as a resilient and self-regulating ecosystem.

Distributed Intelligence: Harnessing the Power of Multi-Agent Reinforcement Learning

Multi-agent reinforcement learning (MARL) presents a viable methodology for constructing decentralized control systems by enabling multiple agents to learn optimal policies through interaction with a shared environment. Unlike traditional centralized approaches requiring a single controller with complete system knowledge, MARL distributes control logic across individual agents, enhancing robustness and scalability. Each agent observes a local state and acts independently, receiving rewards based on collective performance. This paradigm is particularly suited for complex systems where centralized computation is impractical or where agents possess inherently distributed sensing and actuation capabilities. The resulting systems demonstrate adaptability to dynamic conditions and potential for emergent, coordinated behaviors without explicit pre-programming of inter-agent communication or control strategies.

Multi-agent reinforcement learning algorithms such as MAPPO, QMIX, and MADDPG facilitate cooperative behavior in decentralized systems by letting agents learn through interaction with their environment and each other. These algorithms learn by trial and error rather than from pre-programmed strategies; agents explore possible actions and receive rewards or penalties based on the collective outcome. MAPPO extends proximal policy optimization to the multi-agent setting, pairing decentralized actors with a centralized value function. QMIX is value-based: a mixing network combines per-agent action values into a joint action-value function and is constrained to be monotonic in each agent’s value, so each agent’s greedy action stays consistent with the joint greedy action. MADDPG, an extension of Deep Deterministic Policy Gradient, supports both cooperative and competitive scenarios by training decentralized actors with centralized critics. The resulting policies are derived directly from the learned interactions, enabling agents to adapt to complex, dynamic environments without explicit instructions.
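
To make the QMIX idea concrete, here is a minimal sketch of monotonic value mixing. It is deliberately simplified: the mixer is linear with fixed non-negative weights, whereas QMIX itself produces the weights from a state-conditioned hypernetwork and applies a nonlinearity. All numbers and names are illustrative assumptions. The point it shows is that when the joint value never decreases as any agent's own value rises, each agent can pick its action locally and the team still lands on the jointly best action.

```python
from itertools import product

# Per-agent utilities Q_i(a_i) for two agents with three actions each.
# The numbers are made up for illustration.
agent_qs = [
    [0.2, 1.5, -0.3],   # agent 0
    [0.9, 0.1, 0.4],    # agent 1
]

# Non-negative mixing weights keep Q_tot monotonic in every Q_i.
# In QMIX these come from a state-conditioned hypernetwork; here they
# are fixed constants to keep the sketch dependency-free.
MIX_WEIGHTS = [0.7, 1.3]
MIX_BIAS = -0.5


def q_tot(joint_action):
    """Mix the chosen per-agent utilities into one joint value."""
    chosen = [agent_qs[i][a] for i, a in enumerate(joint_action)]
    return sum(w * q for w, q in zip(MIX_WEIGHTS, chosen)) + MIX_BIAS


def greedy_decentralized():
    """Each agent picks its own best action without seeing the others."""
    return tuple(max(range(len(qs)), key=qs.__getitem__) for qs in agent_qs)


def greedy_centralized():
    """Search every joint action for the highest mixed value."""
    joint_actions = product(*(range(len(qs)) for qs in agent_qs))
    return max(joint_actions, key=q_tot)


# Both selection rules agree because the mixer is monotonic.
assert greedy_decentralized() == greedy_centralized()
```

If one of the weights were negative, the two selection rules could disagree, which is why the monotonicity constraint is what permits decentralized execution.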

Effective communication in multi-agent reinforcement learning (MARL) is a significant challenge due to the non-stationarity introduced by concurrent learning agents; each agent’s policy changes during training, altering the environment from the perspective of others. This necessitates architectures that move beyond independent learning and facilitate information exchange. Approaches to address this include centralized training with decentralized execution, where agents share information during training to learn coordinated policies but act independently during deployment. Specific techniques involve using communication channels – either discrete or continuous – to transmit relevant state information or learned policies. The design of these communication protocols, including message encoding and decoding, and the selection of which agents communicate with each other, directly impacts the performance and scalability of MARL systems. Furthermore, bandwidth limitations and noisy communication channels require robust architectures capable of handling imperfect information transfer.

![A translator agent enables communication between disparate protocols by mapping domain-specific terms, such as hydrated silicates from GEO, to a standardized AI control vocabulary with a volatile signature and accompanying audit trail, represented by the function [latex]\tau: \mathcal{L}_{geo} \to \mathcal{L}_{ai}[/latex].](https://arxiv.org/html/2602.13291v1/x6.png)

Differentiable Communication: Enabling Emergent Coordination

Differentiable communication models, including CommNet, DIAL, and TarMAC, represent a paradigm shift in multi-agent system design by enabling agents to concurrently learn both task-specific control policies and the communication protocols necessary for effective collaboration. Unlike traditional approaches where communication is pre-defined, these models integrate communication channels directly into the learning process, allowing gradients to flow through the communication pathways. This end-to-end differentiability facilitates the optimization of communication strategies based on their impact on overall system performance, effectively treating communication as a trainable component rather than a fixed constraint. The architecture allows agents to dynamically adapt their messaging to maximize collective outcomes, potentially discovering emergent communication languages optimized for the specific task at hand.
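
As a rough illustration of what a learned communication channel computes, the sketch below implements a single CommNet-style round: each agent receives the mean of the other agents' hidden vectors and folds it into its own state. It shows the forward pass only, with scalar weights `w_self` and `w_comm` standing in for the learned weight matrices; those names and all values are assumptions. In a real implementation this step would be written in an autodiff framework so that gradients flow back through the averaged message.

```python
import math


def mean_of_others(hidden, i):
    """Average the hidden vectors of every agent except agent i."""
    others = [h for j, h in enumerate(hidden) if j != i]
    dim = len(hidden[i])
    return [sum(h[d] for h in others) / len(others) for d in range(dim)]


def comm_step(hidden, w_self=0.8, w_comm=0.5):
    """One communication round: h_i <- tanh(w_self*h_i + w_comm*c_i)."""
    updated = []
    for i, h in enumerate(hidden):
        c = mean_of_others(hidden, i)  # message received by agent i
        updated.append(
            [math.tanh(w_self * x + w_comm * m) for x, m in zip(h, c)]
        )
    return updated


# Three agents with 2-dimensional hidden states (illustrative values).
hidden = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.5]]
after = comm_step(hidden)
```

Because the message is a plain average, the same code works for any number of agents above one, which is part of why this family of models scales to varying team sizes.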

End-to-end training of differentiable communication models allows for the direct optimization of communication strategies as an integral part of the agent’s control policy learning process. Unlike traditional methods requiring pre-defined communication protocols, these models learn to communicate in a manner that directly maximizes collective performance on the given task. This approach bypasses the need for hand-engineering communication signals and enables agents to dynamically adapt their communication based on the evolving state of the environment and the actions of other agents. Consequently, the models can learn both what to communicate and how to communicate, resulting in more efficient and effective coordination, as demonstrated by improvements in task completion times within multi-agent simulations.

Within the Agent Mars simulation environment, differentiable communication models are undergoing development to improve coordination among specialized agent groups. A key component of this refinement is the implementation of a Translator Agent utilizing heterogeneous communication protocols, enabling effective information exchange between agents with differing capabilities or communication constraints. Performance evaluations in the ScienceExploration scenario demonstrate a reduction in task completion time of up to 51.3% when using this system, compared to traditional strict hierarchical routing methods. This improvement indicates the efficacy of learned communication strategies in complex multi-agent systems and highlights the potential of differentiable communication for optimizing collective performance.
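
The Translator Agent can be pictured as a lookup from one team's vocabulary to a shared one, with every attempt logged. The sketch below is a guess at that shape, not the paper's implementation: only the term "hydrated silicates" comes from the article, while the target labels, the second table entry, and the `Translator` class are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical GEO -> AI-control vocabulary; only "hydrated silicates"
# appears in the article, and the target labels are invented here.
GEO_TO_AI = {
    "hydrated silicates": "VOLATILE_SIGNATURE",
    "basaltic regolith": "BULK_CONSTRUCTION_FEED",
}


@dataclass
class Translator:
    """Maps domain terms to a shared vocabulary and logs every attempt."""
    table: dict
    audit_trail: list = field(default_factory=list)

    def translate(self, sender: str, term: str):
        target = self.table.get(term.lower())
        # Record both successes and misses so reviewers can audit later.
        self.audit_trail.append(
            {"sender": sender, "source": term, "target": target}
        )
        return target


translator = Translator(GEO_TO_AI)
known = translator.translate("GEO", "Hydrated silicates")
unknown = translator.translate("GEO", "perchlorate brine")
```

Logging misses as well as hits is a design choice in this sketch: an untranslatable term is often the more interesting event for whoever reviews the trail.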

![The hierarchical link control (HCLC) routing system utilizes a strict hierarchy of links [latex]\mathcal{E}_{H}[/latex] supplemented by curated shortcuts [latex]\mathcal{E}_{X}(\mathcal{W})[/latex] and, when direct links are unavailable, forwards traffic through a monitored mission hub (OPS) for auditing.](https://arxiv.org/html/2602.13291v1/x4.png)
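
The routing rule in the figure can be read as a three-way decision: use a hierarchy link if one exists, use a curated shortcut if the pair is approved, and otherwise relay through the OPS hub. The sketch below encodes that reading. The agent names, the tiny command tree, and the single approved shortcut are all hypothetical, and the paper's actual routing may differ in detail.

```python
# Hypothetical roster: child -> parent links form the strict hierarchy E_H.
PARENT = {
    "geo_rover": "science_lead",
    "science_lead": "OPS",
    "eva_crew": "ops_lead",
    "ops_lead": "OPS",
}

# Curated cross-layer shortcuts E_X(W): an allow-list of approved pairs.
SHORTCUTS = {frozenset({"geo_rover", "eva_crew"})}


def is_hierarchy_link(a: str, b: str) -> bool:
    """True when a and b are directly connected in the command tree."""
    return PARENT.get(a) == b or PARENT.get(b) == a


def route(src: str, dst: str):
    """Return (path, kind) for a message from src to dst."""
    if is_hierarchy_link(src, dst):
        return [src, dst], "hierarchy"
    if frozenset({src, dst}) in SHORTCUTS:
        return [src, dst], "shortcut"
    # No direct link: relay through the monitored hub so it can be audited.
    return [src, "OPS", dst], "hub"
```

For example, `route("geo_rover", "ops_lead")` falls through both checks and returns the three-hop path via `OPS`, while `route("eva_crew", "geo_rover")` uses the approved shortcut.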

Memory and Consensus: Building Robust and Adaptive Agent Behaviors

LLM-based agents operating in complex, dynamic environments benefit significantly from the incorporation of scenario-aware memory. This system allows agents to retain and recall pertinent details from prior interactions, effectively building a contextual understanding of ongoing situations. Rather than treating each event in isolation, the agent leverages past experiences to inform present decisions, resulting in more nuanced and effective responses. The ability to recall previous observations, actions, and their consequences enables the agent to anticipate potential outcomes, avoid repeating errors, and optimize strategies for achieving its objectives. This persistent memory function is particularly crucial in long-duration tasks or environments where conditions evolve over time, as it allows the agent to adapt and maintain performance without requiring constant re-instruction or external intervention.

Propose-Vote Consensus offers a robust method for multi-agent coordination, particularly valuable when operating under conditions of incomplete information or divergent goals. This mechanism allows agents to individually propose solutions to a given challenge, followed by a voting phase where each agent evaluates the proposals based on its own knowledge and objectives. The solution receiving the majority vote is then enacted, ensuring a collective decision even amidst uncertainty. This distributed approach bypasses the need for a central authority, increasing resilience and scalability, and is especially useful in complex scenarios where agents may possess differing perspectives or priorities. By aggregating individual assessments through a simple voting procedure, the system effectively filters out potentially flawed proposals and converges on a mutually acceptable course of action, promoting cohesive and reliable team performance.
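
A propose-then-vote round is simple enough to sketch in a few lines. The version below assumes one vote per agent and a strict-majority rule, and it returns `None` when no proposal clears that bar; the function name, the tie handling, and the example plans are assumptions rather than details from the paper.

```python
from collections import Counter


def propose_vote(proposals, preferences):
    """Pick the proposal backed by a strict majority of voters.

    proposals   -- list of candidate plans (strings)
    preferences -- dict mapping each voter to the plan it votes for
    Returns the winning plan, or None when no plan exceeds half the votes.
    """
    # Ignore votes for plans that were never proposed.
    valid = [p for p in preferences.values() if p in proposals]
    if not valid:
        return None
    plan, count = Counter(valid).most_common(1)[0]
    return plan if count > len(preferences) / 2 else None


proposals = ["seal_airlock", "reroute_power", "wait"]
votes = {
    "medic": "seal_airlock",
    "engineer": "seal_airlock",
    "geologist": "reroute_power",
    "pilot": "seal_airlock",
    "botanist": "wait",
}
decision = propose_vote(proposals, votes)
```

Here three of five agents back `seal_airlock`, so it is enacted. Returning `None` on a split vote leaves room for a fallback such as a second round or escalation up the hierarchy.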

Rigorous evaluation of multi-agent coordination necessitates quantifiable metrics, and the Agent Mars Performance Index (AMPI) was developed to assess effectiveness within complex, simulated Martian base operations. Results from these simulations reveal substantial improvements following the implementation of heterogeneous coordination protocols; failure rates in critical Emergency Response scenarios decreased dramatically from 0.10 to 0.01, and Daily Operations failures were similarly reduced from 0.05 to 0.01. Beyond reliability, these strategies demonstrably enhance efficiency; Daily Operations were completed 17.4% faster, and the challenging CommsBlackoutEVA scenario saw a 28.3% reduction in completion time when utilizing cross-layer routing, suggesting a robust and adaptable system for future exploration.
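
The failure figures above are absolute rates, and it can help to restate them as relative reductions. The snippet below does only that arithmetic on the numbers quoted in this article; it does not reproduce the AMPI itself, whose formula is not given here.

```python
def relative_reduction(before: float, after: float) -> float:
    """Fraction by which a rate fell, relative to its starting value."""
    return (before - after) / before


# Failure rates quoted in the article.
emergency = relative_reduction(0.10, 0.01)   # about 0.90
daily = relative_reduction(0.05, 0.01)       # about 0.80

print(f"Emergency Response failures fell by {emergency:.0%}")
print(f"Daily Operations failures fell by {daily:.0%}")
```

In other words, the reported change corresponds to roughly nine in ten Emergency Response failures and four in five Daily Operations failures being eliminated.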

![Integrating a distilled long-term memory component [latex]f(M^{\text{long}})[/latex] with a short-term window significantly improves state carry-over and reduces redundant re-queries compared to relying on short-term memory alone, as detailed in Table 10.](https://arxiv.org/html/2602.13291v1/x5.png)
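
The two-tier memory in the figure can be sketched as a fixed-size recent window backed by a compact long-term store. In the sketch below, "distillation" is reduced to keeping the latest value per key when an item falls out of the window; the paper's distillation function is presumably richer, and the class and method names are hypothetical.

```python
from collections import deque


class ScenarioMemory:
    """Short-term window plus a distilled long-term store (illustrative)."""

    def __init__(self, window: int = 3):
        self.short = deque(maxlen=window)   # most recent raw observations
        self.long = {}                      # key -> latest distilled fact

    def observe(self, key: str, value: str):
        # When the window is full, distill the oldest item before it drops.
        if len(self.short) == self.short.maxlen:
            old_key, old_value = self.short[0]
            self.long[old_key] = old_value
        self.short.append((key, value))

    def recall(self, key: str):
        """Check recent items first, then fall back to long-term memory."""
        for k, v in reversed(self.short):
            if k == key:
                return v
        return self.long.get(key)


mem = ScenarioMemory(window=2)
mem.observe("airlock", "sealed")
mem.observe("o2", "nominal")
mem.observe("dust", "rising")       # pushes "airlock" out of the window
airlock_state = mem.recall("airlock")
```

With a short-term window alone, the airlock state would be gone after the third observation and the agent would need to ask again; the long-term fallback is what avoids that redundant re-query.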

The work detailed in ‘Agent Mars’ underscores a fundamental principle of complex systems: structure dictates behavior. This is powerfully echoed in Robert Tarjan’s observation, “If a design feels clever, it’s probably fragile.” The simulation’s emphasis on curated communication and hierarchical control isn’t about creating a needlessly intricate system, but rather establishing a robust foundation for emergent behavior. By prioritizing clear lines of authority and information flow, the framework avoids the pitfalls of overly complex interactions, fostering resilience in the face of unforeseen challenges within the Mars base operations. The elegance lies in the simplicity of the underlying architecture, mirroring Tarjan’s preference for designs built to endure.

Future Trajectories

The Agent Mars framework, while demonstrating the value of structured communication in complex multi-agent systems, ultimately reveals a familiar truth: optimization begets new constraints. Improved efficiency through hierarchical control does not eliminate tension, but rather relocates it. The simulation clarifies that a seemingly elegant solution at one level invariably introduces novel vulnerabilities elsewhere – perhaps in the robustness of the central coordinating agents, or the adaptability of the lower tiers when faced with truly unforeseen circumstances. The system’s behavior over time will be defined by these emergent weaknesses, not the initial design specifications.

Future work must therefore move beyond simply achieving coordination, and focus on quantifying and predicting the costs of that coordination. True resilience isn’t about minimizing all failure modes, but about anticipating likely failures and building systems capable of graceful degradation. This requires integrating more sophisticated models of agent cognition, including not only task-specific expertise but also meta-cognitive awareness – the ability to assess and adapt to changing environmental demands and internal limitations.

Ultimately, the enduring challenge remains the same: architecture is the system’s behavior over time, not a diagram on paper. Agent Mars offers a valuable platform for exploring these dynamics, but the true test will be in acknowledging that every “solution” is merely a temporary truce in an ongoing negotiation with complexity.

Original article: https://arxiv.org/pdf/2602.13291.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-17 20:28