Author: Denis Avetisyan

New research demonstrates a system where robots use both visual understanding and natural language to dynamically adjust collaborative tasks, improving reliability in human-robot interaction.

A novel framework leverages vision-language models and a dual-correction mechanism for robust replanning in assistive robotics applications.

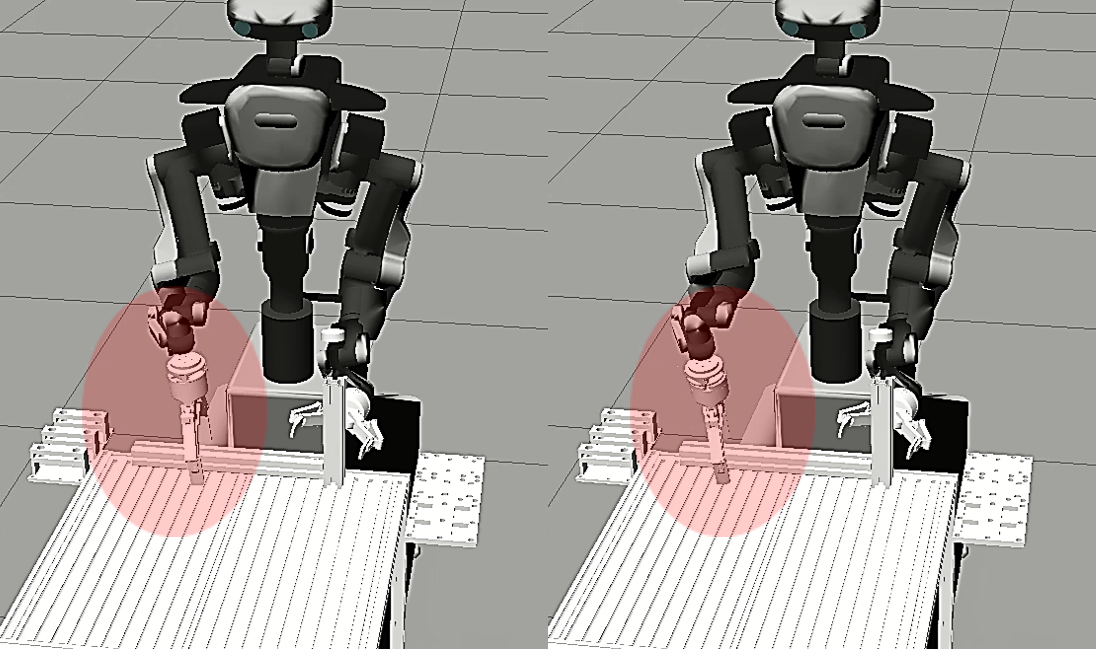

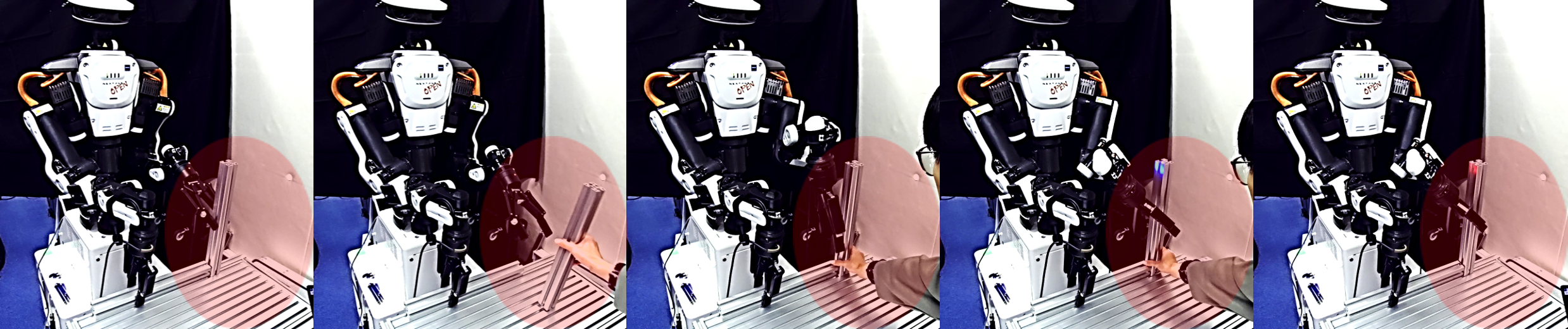

Despite advances in human-robot collaboration, reliably interpreting ambiguous, high-level instructions and ensuring physically feasible execution remains a significant challenge. This work, ‘Replanning Human-Robot Collaborative Tasks with Vision-Language Models via Semantic and Physical Dual-Correction’, introduces a novel framework that augments Vision-Language Models with a dual-correction mechanism: one stage for pre-action logical consistency and feasibility, and another for post-execution physical error recovery. Simulation and real-world experiments demonstrate improved success rates in collaborative assembly tasks, highlighting the framework’s ability to enable robust, interactive replanning. Could this approach unlock more adaptable and intuitive human-robot partnerships across a wider range of complex scenarios?

Navigating Complexity: The Rise of Collaborative Robotics

Contemporary manufacturing processes are undergoing a significant transformation, driven by an increasing need for flexibility and efficiency in the face of complex product demands and shorter production cycles. Traditional, fully automated systems often struggle to adapt to the nuanced tasks requiring human dexterity and judgment. Consequently, Human-Robot Collaboration (HRC) is rapidly becoming integral to modern facilities. This approach leverages the strengths of both humans – problem-solving, adaptability, and fine motor skills – and robots – precision, repeatability, and strength. By working in tandem, humans and robots can achieve levels of productivity and quality previously unattainable, optimizing workflows and addressing the growing demand for customized products and agile manufacturing solutions. The integration isn’t simply about robots assisting humans; it’s about creating symbiotic partnerships where each entity complements the other, redefining the factory floor and paving the way for a more responsive and resilient industrial landscape.

The increasing sophistication of Human-Robot Collaboration (HRC) is now heavily influenced by the development of Vision-Language Models (VLMs). These models offer a pathway to more adaptable robotic systems, capable of interpreting natural language instructions and visual inputs to perform complex tasks. However, current VLMs are not without significant limitations; their reliance on learned correlations, rather than a true understanding of physics and spatial reasoning, often results in unpredictable behavior. This manifests as an inability to reliably generalize to novel situations or handle unexpected obstacles, hindering the seamless integration of robots into dynamic human workspaces. While promising, the effective deployment of VLMs in HRC necessitates ongoing research to address these inherent constraints and ensure safe, reliable performance.

A significant obstacle to deploying Vision-Language Models (VLMs) in human-robot collaboration lies in their propensity to “hallucinate” – a phenomenon where the model generates robotic actions that defy physical laws or the specifics of the workspace. This isn’t a matter of simple error; rather, VLMs, trained on vast datasets of images and text, can confidently propose actions that are impossible, such as reaching beyond the robot’s articulated range, colliding with obstacles, or manipulating objects in physically unstable configurations. The core issue stems from the models’ lack of embodied understanding – they excel at correlating language with visual inputs but lack a grounded awareness of real-world mechanics and constraints. Consequently, these fabricated actions, while linguistically plausible, present a critical safety risk and necessitate robust mechanisms for validation and correction before implementation in collaborative robotic systems.

A Two-Tiered System for Robust Reliability

The proposed Replanning Framework addresses reliability concerns in Human-Robot Collaboration (HRC) by combining a preemptive and a reactive correction stage. Before a plan is executed, a pre-execution stage (the Internal Correction Model) checks the generated plan for logical inconsistencies. Once execution begins, a post-execution stage (the External Correction Model) monitors the physical world for discrepancies between the expected and actual outcome of each action. This two-layer design lets the system reject flawed plans before the robot moves and replan when an error is detected at runtime.
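The control flow described above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not the authors' implementation: `run_with_dual_correction`, its callback signatures, and the stub planner, checker, and executor are all hypothetical names introduced here, and feedback is passed around as plain strings.

```python
from typing import Callable, List, Optional

Plan = List[str]


def run_with_dual_correction(
    instruction: str,
    propose_plan: Callable[[str, Optional[str]], Plan],
    check_plan: Callable[[Plan], Optional[str]],
    execute_step: Callable[[str], Optional[str]],
    max_replans: int = 3,
) -> bool:
    """Return True once a plan passes the pre-execution check and every
    step executes without a reported discrepancy (hypothetical interface)."""
    feedback: Optional[str] = None
    for _ in range(max_replans + 1):
        plan = propose_plan(instruction, feedback)
        # Pre-execution correction: reject the plan before the robot moves.
        feedback = check_plan(plan)
        if feedback is not None:
            continue
        # Post-execution correction: stop at the first step that goes wrong.
        # Simplification: after a failure the new plan restarts from scratch.
        for step in plan:
            feedback = execute_step(step)
            if feedback is not None:
                break
        if feedback is None:
            return True
    return False


# Stub components standing in for the VLM planner, the checker, and the robot.
feedback_seen: List[Optional[str]] = []


def demo_propose(instruction: str, feedback: Optional[str]) -> Plan:
    feedback_seen.append(feedback)
    return ["hold part"] if feedback is None else ["grasp part", "hold part"]


def demo_check(plan: Plan) -> Optional[str]:
    return None if plan[0] == "grasp part" else "grasp the part before holding it"


def demo_execute(step: str) -> Optional[str]:
    return None  # pretend every physical step succeeds


result = run_with_dual_correction("fix the part", demo_propose, demo_check, demo_execute)
print(result)  # True: the second plan passes both stages
```

Passing the checker's feedback back into the planner is the design choice that matters here: a rejected plan is not simply retried, it is regenerated with the reason for rejection attached.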

The Internal Correction Model functions as a verification stage within the proposed Replanning Framework, specifically evaluating the logical coherence between the plan generated by the Vision-Language Model (VLM) and the defined Action Target. This model assesses whether the proposed sequence of actions logically fulfills the requirements of the target action, independent of physical execution. Discrepancies identified during this internal check – for example, a plan step that does not contribute to achieving the target or includes logically impossible operations – trigger a replanning request before any physical actions are initiated, thus preventing execution of flawed plans and increasing overall system reliability.
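One way to picture such a pre-execution check is a symbolic dry run of the plan. The sketch below is a stand-in and not the model used in the paper (which the article does not specify in detail): the action table `ACTIONS`, the fact names, and the function `check_plan` are all hypothetical. It walks through the plan, verifies each step's preconditions, and confirms that the final state satisfies the target.

```python
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical action model: name -> (preconditions, added facts, removed facts).
ACTIONS: Dict[str, Tuple[Set[str], Set[str], Set[str]]] = {
    "grasp_part": ({"gripper_empty"}, {"holding_part"}, {"gripper_empty"}),
    "place_in_fixture": (
        {"holding_part"},
        {"part_fixed", "gripper_empty"},
        {"holding_part"},
    ),
}


def check_plan(plan: List[str], initial: Set[str], target: Set[str]) -> Optional[str]:
    """Return None if the plan is consistent and reaches the target,
    otherwise a short reason that could be fed back to the planner."""
    state = set(initial)
    for i, name in enumerate(plan):
        if name not in ACTIONS:
            return f"step {i}: unknown action '{name}'"
        pre, add, remove = ACTIONS[name]
        missing = pre - state
        if missing:
            return f"step {i}: '{name}' needs {sorted(missing)}"
        # Apply the action's effects to the simulated state.
        state = (state - remove) | add
    unmet = target - state
    return None if not unmet else f"target not reached, missing {sorted(unmet)}"


print(check_plan(["grasp_part", "place_in_fixture"], {"gripper_empty"}, {"part_fixed"}))
print(check_plan(["place_in_fixture"], {"gripper_empty"}, {"part_fixed"}))
```

The first call returns `None` (the plan is accepted); the second returns a reason string, because the robot cannot place a part it is not holding. No physical action is needed to reach either verdict.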

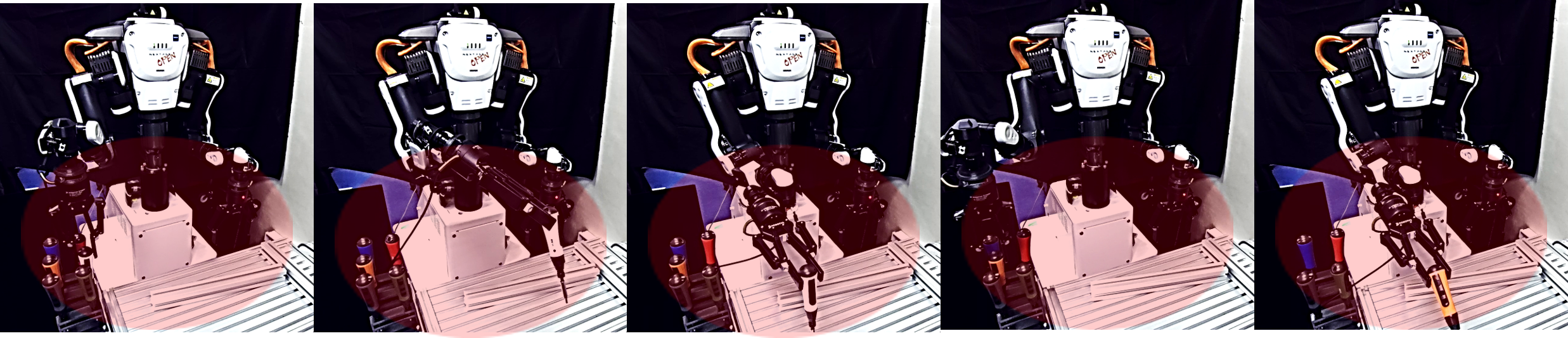

The External Correction Model functions as a real-time monitoring system during the physical execution of a robot’s plan. This model utilizes sensor data – including visual, tactile, and proprioceptive feedback – to compare the robot’s actual state and actions against the expected trajectory defined by the VLM-generated plan. Discrepancies identified through this comparison – such as a failed grasp, unexpected obstacle contact, or deviation from the intended path – immediately trigger the Replanning Framework. This initiates a revised plan generation, ensuring the robot can adapt to unforeseen circumstances and maintain task success. The model does not attempt to correct the action itself, but rather flags the issue to allow the system to replan and correct the overall strategy.
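The "flag, do not fix" behaviour can be illustrated with a small monitor that compares an expected outcome with an observed one. This is a sketch under assumptions: the function name `detect_discrepancy`, the 1 cm tolerance, and the reduction of sensor feedback to a position plus a grasp flag are illustrative choices, not details taken from the paper.

```python
import math
from typing import Optional, Sequence


def detect_discrepancy(
    expected_xyz: Sequence[float],
    observed_xyz: Sequence[float],
    gripper_closed_on_object: bool,
    tolerance_m: float = 0.01,
) -> Optional[str]:
    """Return None if the step looks fine, else a description of the problem.

    The monitor only reports; deciding what to do next is left to the planner.
    """
    if not gripper_closed_on_object:
        return "grasp failed: no object detected in gripper"
    # Euclidean distance between where the object should be and where it is.
    error = math.dist(expected_xyz, observed_xyz)
    if error > tolerance_m:
        return f"pose deviation {error:.3f} m exceeds {tolerance_m:.3f} m"
    return None


print(detect_discrepancy((0.0, 0.0, 0.0), (0.0, 0.0, 0.005), True))
print(detect_discrepancy((0.0, 0.0, 0.0), (0.03, 0.04, 0.0), True))
print(detect_discrepancy((0.0, 0.0, 0.0), (0.0, 0.0, 0.0), False))
```

Returning a description rather than a corrective motion mirrors the division of labour in the text: the monitor detects, and the replanning stage decides.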

The Dual-Correction Mechanism targets the inaccuracies, termed ‘hallucinations’, that arise in Vision-Language Model (VLM)-driven Human-Robot Collaboration (HRC) systems. In simulation, the mechanism achieved a 100% success rate in both object fixation and initial tool preparation selection. Within these simulated tasks, the system identified and corrected every flawed plan before or during execution; the result shows that VLM errors in these initial HRC steps can be caught, not that they have been eliminated in general.

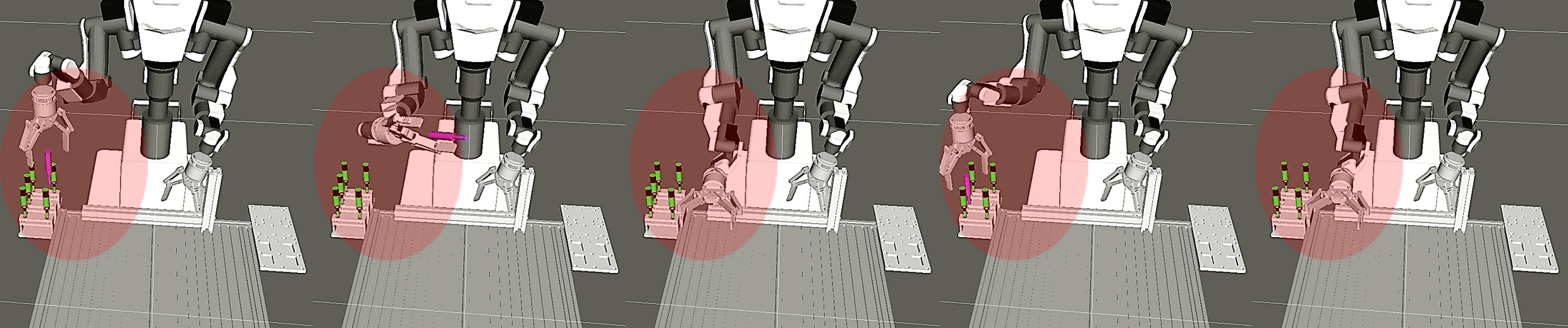

Foundational Technologies: Precision in Grasping and Motion

Robust six-degree-of-freedom (6-DoF) grasp generation is a fundamental requirement for robotic object manipulation, as it defines the initial contact configuration between the robot gripper and the target object. Tools such as GraspGen employ algorithms to compute a diverse set of stable and feasible grasps, considering factors like object geometry, gripper kinematics, and collision avoidance. The resulting grasps are evaluated based on metrics such as force closure, which ensures the grasp can resist external disturbances, and grasp wrench space, which defines the range of forces and torques the grasp can withstand. Generating a sufficient quantity of high-quality 6-DoF grasps increases the probability of successfully grasping an object, even in the presence of uncertainty or cluttered environments, and forms the basis for subsequent manipulation tasks.
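To make the force-closure criterion concrete, the sketch below tests a planar two-finger grasp with point contacts and Coulomb friction. It relies on the classical result that such a grasp is force closure when the line joining the two contacts lies strictly inside both friction cones. This is a textbook simplification and not how GraspGen evaluates 6-DoF grasps; the function name and argument layout are assumptions, and the contact normals are assumed to be unit vectors pointing into the object.

```python
import math
from typing import Sequence


def antipodal_force_closure(
    p1: Sequence[float],
    n1: Sequence[float],
    p2: Sequence[float],
    n2: Sequence[float],
    mu: float,
) -> bool:
    """Planar two-contact force-closure test.

    p1, p2: contact points; n1, n2: inward unit normals; mu: friction coefficient.
    """
    half_angle = math.atan(mu)  # half-opening angle of each friction cone
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    length = math.hypot(dx, dy)
    if length == 0.0:
        return False  # coincident contacts cannot resist torque
    ux, uy = dx / length, dy / length
    # Angle between each inward normal and the line towards the other contact.
    cos1 = max(-1.0, min(1.0, n1[0] * ux + n1[1] * uy))
    cos2 = max(-1.0, min(1.0, -(n2[0] * ux + n2[1] * uy)))
    return math.acos(cos1) < half_angle and math.acos(cos2) < half_angle


# Directly opposed fingers on a unit-width object: force closure with friction.
print(antipodal_force_closure((-1, 0), (1, 0), (1, 0), (-1, 0), mu=0.5))
# Offset contacts with low friction: the connecting line leaves the cones.
print(antipodal_force_closure((-1, 0), (1, 0), (1, 1), (-1, 0), mu=0.3))
```

A grasp generator would typically apply a test of this kind, or a richer wrench-space metric, to many candidate grasps and keep those that pass.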

Collision-free trajectory planning is a fundamental requirement for robotic systems operating in complex environments. Utilizing frameworks such as MoveIt, this process involves computing a path for the robot that avoids obstacles while efficiently reaching a desired goal state. MoveIt provides functionalities for kinematic planning, collision checking, and path optimization, enabling the robot to navigate workspaces safely and effectively. The planning process typically considers the robot’s physical dimensions, joint limits, and the geometry of the surrounding environment, generating smooth and feasible trajectories. These trajectories are then executed by the robot’s motion controllers, ensuring precise and coordinated movements without collisions.
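The collision-checking step can be illustrated without any robotics framework. The sketch below deliberately does not use MoveIt's API; it is a 2D toy under stated assumptions, in which the robot is a disc, obstacles are circles, and a path is a list of waypoints joined by straight segments. The names `segment_point_distance` and `path_is_collision_free` are introduced here for illustration.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]
Circle = Tuple[float, float, float]  # centre x, centre y, radius


def segment_point_distance(a: Point, b: Point, c: Point) -> float:
    """Shortest distance from point c to the segment a-b."""
    abx, aby = b[0] - a[0], b[1] - a[1]
    denom = abx * abx + aby * aby
    # Projection parameter of c onto the line, clamped to the segment.
    t = 0.0 if denom == 0.0 else ((c[0] - a[0]) * abx + (c[1] - a[1]) * aby) / denom
    t = max(0.0, min(1.0, t))
    return math.hypot(a[0] + t * abx - c[0], a[1] + t * aby - c[1])


def path_is_collision_free(
    path: Sequence[Point], obstacles: Sequence[Circle], robot_radius: float
) -> bool:
    """Check every segment between consecutive waypoints against every obstacle."""
    for a, b in zip(path, path[1:]):
        for ox, oy, radius in obstacles:
            if segment_point_distance(a, b, (ox, oy)) <= radius + robot_radius:
                return False
    return True


obstacles = [(1.0, 0.2, 0.2)]
straight = [(0.0, 0.0), (2.0, 0.0)]
detour = [(0.0, 0.0), (1.0, -1.0), (2.0, 0.0)]
print(path_is_collision_free(straight, obstacles, robot_radius=0.1))  # False
print(path_is_collision_free(detour, obstacles, robot_radius=0.1))    # True
```

A full planner works in the robot's joint space and checks the whole arm geometry, but the structure is the same: propose a path, test it against the environment, and search for an alternative when the test fails.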

The Replanning Framework integrates 6-DoF grasp generation and collision-free trajectory planning so that the robot can adapt to unforeseen circumstances during manipulation. In real-world testing, the framework achieved a 66.7% success rate in object fixation tasks and a 75% success rate in tool preparation with corrective selection. Both figures fall short of the 100% reported in simulation, which indicates that the framework can often identify and recover from failures during tool acquisition and positioning, while physical execution remains the harder part of the problem.

Towards Industry 5.0: A Synergy of Human Skill and Robotic Intelligence

This research aligns with the core tenets of Industry 5.0 by prioritizing a harmonious partnership between human expertise and robotic capabilities in production environments. The framework moves beyond simple automation, instead focusing on collaborative intelligence – systems designed to augment human workers, not replace them. This human-centered approach recognizes that complex manufacturing processes often require nuanced judgment, adaptability, and problem-solving skills best provided by people, while robots excel at repetitive tasks and precision execution. By fostering this synergy, the methodology aims to unlock new levels of efficiency, resilience, and innovation, paving the way for more sustainable and responsive manufacturing systems that prioritize both productivity and the well-being of the workforce.

Modern manufacturing is increasingly reliant on Human-Robot Collaboration (HRC) to navigate dynamic environments and complex tasks. Language-model-based planning methods such as SayCan, KnowNo, and TidyBot illustrate how this adaptability can be achieved. SayCan weighs what a language model proposes against what the robot can feasibly do in its current state. KnowNo enables a robot to recognize when it is uncertain about an instruction and to request human assistance. TidyBot learns a person’s preferences for where objects belong and tidies accordingly. Taken together, these methods point toward systems capable not only of executing instructions, but also of understanding their own limitations and proactively seeking guidance – a crucial step towards truly intelligent and flexible production lines. This kind of collaboration fosters a more resilient and efficient workflow, allowing manufacturers to respond rapidly to changing demands.
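The feasibility weighting attributed to SayCan can be sketched in a few lines: each candidate skill is scored by how relevant the language model judges it to be, multiplied by how likely it is to succeed in the current state, and the highest combined score wins. The skill names and numbers below are invented for illustration, and `select_skill` is a hypothetical helper, not part of any released SayCan code.

```python
from typing import Dict


def select_skill(llm_scores: Dict[str, float], affordances: Dict[str, float]) -> str:
    """Pick the skill with the highest (language relevance x feasibility) score.

    Skills without an affordance estimate are treated as infeasible (0.0).
    """
    combined = {
        skill: llm_scores[skill] * affordances.get(skill, 0.0)
        for skill in llm_scores
    }
    return max(combined, key=combined.get)


# Made-up scores: the language model prefers the sponge, but it is out of reach.
llm_scores = {"pick up sponge": 0.6, "pick up wrench": 0.3, "go to table": 0.1}
affordances = {"pick up sponge": 0.1, "pick up wrench": 0.9, "go to table": 1.0}
print(select_skill(llm_scores, affordances))  # pick up wrench
```

The multiplication is what keeps a linguistically plausible but physically infeasible action from being chosen, which is the same concern the dual-correction mechanism addresses with explicit checks.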

Frameworks designed to minimize errors and foster closer collaboration between humans and robots open up new manufacturing potential. Implementations of this approach achieved a 70% success rate for consecutive instruction following, a benchmark that reflects how reliably the system handles a sequence of instructions rather than a single command. Greater reliability of this kind streamlines production processes and allows manufacturers to tackle increasingly intricate assemblies and customized product lines with more confidence. Fewer errors translate directly into lower costs, less waste, and ultimately a more resilient and competitive manufacturing landscape.

The pursuit of robust human-robot collaboration, as detailed in this work, hinges on anticipating systemic failures. A seemingly minor misinterpretation of a task – a semantic error – or a physical obstruction can cascade into larger issues. This echoes Donald Knuth’s observation: “Premature optimization is the root of all evil.” The dual-correction mechanism proposed here isn’t simply about fixing errors; it’s about proactively building a system capable of gracefully handling inevitable imperfections. By iteratively verifying both semantic understanding and physical feasibility, the framework reduces the chance that an unnoticed semantic or physical error causes a disruptive failure, mirroring a holistic approach to system design where understanding the whole prevents localized breakdowns.

Future Directions

The presented framework, while a step toward reliable human-robot collaboration, predictably reveals the usual suspects of unresolved difficulty. The reliance on Vision-Language Models introduces the familiar fragility of systems attempting to bridge the semantic gap. If the system looks clever, it probably is fragile. Future work must address the inevitable failures in perception and interpretation, not merely by improving the models themselves, but by designing for their inherent unreliability. A truly robust system will not seek to eliminate error, but to contain it.

The dual-correction mechanism, while demonstrating improved resilience, implicitly acknowledges the limitations of purely reactive replanning. The architecture of any collaborative system dictates its behavior, and a constant cycle of correction suggests a fundamental lack of anticipatory capability. The next logical progression lies in embedding a more sophisticated understanding of physical affordances and human intent – a shift from responding to errors to preemptively avoiding them. This, of course, demands a willingness to sacrifice degrees of freedom, to constrain the robot’s actions in the service of predictable behavior.

Ultimately, the pursuit of ‘general’ collaborative intelligence feels increasingly misguided. The art of system design is, after all, the art of choosing what to sacrifice. Focusing on specific, well-defined task domains – and accepting the inherent trade-offs between flexibility and robustness – may prove a more fruitful path. It is a simple truth, elegantly obscured by the allure of broad applicability.

Original article: https://arxiv.org/pdf/2602.14551.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-17 15:15