Author: Denis Avetisyan

A new framework offers practical guidance for researchers leveraging artificial intelligence to develop software independently, prioritizing both methodological rigor and personal learning.

This paper introduces SHAPR, a human-centred practice framework for solo researchers employing AI assistance in research software development.

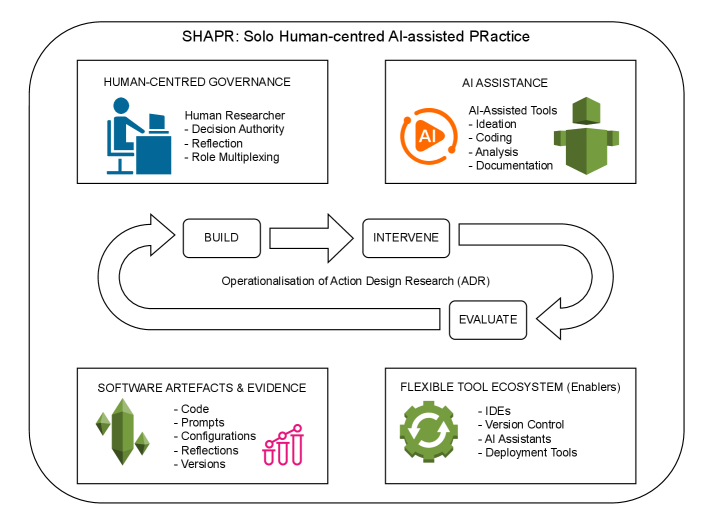

While robust research methodologies are essential for scholarly inquiry, solo researchers increasingly navigate the complexities of software development alongside emerging artificial intelligence tools. This paper addresses this challenge by introducing ‘SHAPR: A Solo Human-Centred and AI-Assisted Practice Framework for Research Software Development’, a practice-level framework designed to integrate rigorous Action Design Research principles with the realities of AI-assisted solo development. SHAPR operationalizes these principles by explicitly defining roles, artefacts, and reflective practices to sustain accountability and learning throughout the development lifecycle. How might such a framework not only enhance the quality of research software, but also better prepare future researchers for navigating the evolving landscape of Human-AI collaboration?

The Illusion of Individual Inquiry

The conventional model of scientific inquiry frequently depends on collaborative efforts involving sizable teams, a structure that, while offering benefits, introduces considerable logistical complexities. Coordinating schedules, managing data sharing, and navigating differing perspectives can significantly slow progress and increase administrative burdens. Moreover, this emphasis on collective work may inadvertently stifle the unique insights that arise from individual contemplation and focused exploration. The pressure to conform to established group norms, or the diffusion of responsibility within a team, can sometimes discourage researchers from pursuing unconventional ideas or challenging prevailing assumptions – potentially hindering genuinely novel discoveries that might emerge from a singular, dedicated perspective.

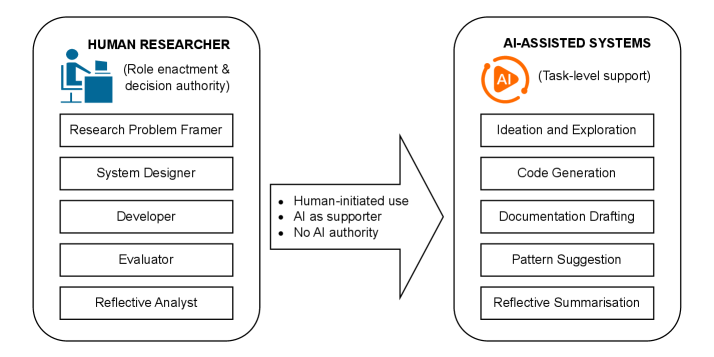

The modern solo researcher often embodies a uniquely demanding professional profile, functioning as designer, data collector, analyst, and interpreter – all within a single individual. This ‘role multiplexing’ necessitates a broad skillset, moving fluidly between conceptualization and meticulous execution, potentially stretching resources and demanding constant context-switching. While fostering independence and potentially accelerating the initial phases of inquiry, it also introduces challenges in maintaining objectivity and rigorous quality control throughout the research lifecycle. The need to simultaneously oversee every aspect of a project can inadvertently introduce bias or overlook crucial details, requiring the researcher to develop heightened self-awareness and implement robust internal checks to compensate for the absence of traditional peer review and collaborative oversight.

The demand on a solo researcher to embody multiple, traditionally separate roles (designer, data collector, analyst, and interpreter) introduces inherent complexities throughout the research process. This ‘role multiplexing’ doesn’t simply add workload; it creates potential for systemic errors. Without the natural checks and balances of a team, biases can creep into study design and data analysis, impacting the validity of findings. Maintaining consistent rigor becomes particularly challenging as the researcher transitions between these distinct functions, potentially overlooking critical details or unconsciously influencing interpretations to align with pre-conceived notions. Consequently, solo researchers must develop heightened self-awareness and employ meticulous documentation practices to mitigate these risks and ensure the trustworthiness of their work, a task that often demands considerable additional effort.

Automating the Inevitable

AI-assisted development streamlines research software creation by automating repetitive coding, testing, and debugging procedures. This automation reduces the time spent on tasks such as data cleaning, algorithm implementation, and basic error correction. Consequently, researchers can accelerate project timelines and iterate more rapidly on their designs. Specifically, tools leveraging machine learning can generate boilerplate code, suggest candidate parameters, and flag potential bugs, allowing developers to focus on core research logic and innovation rather than routine implementation details. Integrating AI into the software development lifecycle can therefore improve efficiency and reduce the burden on individual researchers or small teams.

Generative AI models are increasingly utilized in research software development to automate content creation. These models can produce functional code snippets in multiple programming languages based on natural language prompts, reducing the time required for initial implementation. Beyond code generation, they facilitate the creation of technical documentation, including API references and user guides, by analyzing code and generating descriptive text. Furthermore, generative AI can assist in the design phase of research artifacts, such as data schemas and experimental protocols, by suggesting structures and parameters based on specified research goals and existing datasets. This capability extends beyond simple automation; the models can propose novel approaches and configurations, although these require validation by the researcher.

By automating routine software development and documentation tasks with AI assistance, solo researchers can reallocate cognitive resources towards the core elements of the research process. This includes dedicating more time to precise problem formulation – clearly defining research questions and hypotheses – and to the rigorous interpretation of results, which involves critical analysis of data, identifying trends, and drawing evidence-based conclusions. This shift allows for increased analytical depth and a more nuanced understanding of findings, ultimately improving the quality and impact of research conducted by individuals without large team support.

SHAPR: A Framework for Controlled Chaos

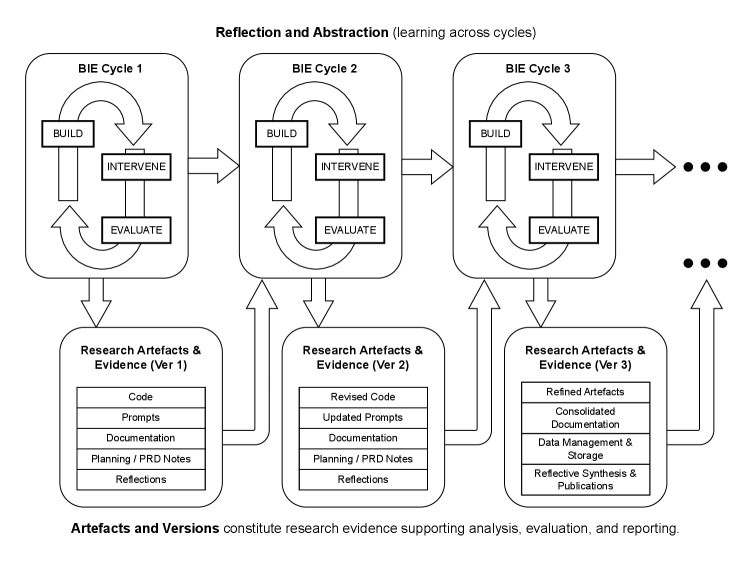

The SHAPR Framework operationalizes Action Design Research (ADR) for individual researchers integrating Artificial Intelligence (AI) into their workflows. It structures the research process into five iterative phases – Scoping, Hypothesizing, Acting, Perceptually-driven Refinement, and Reflection – each designed to build and evaluate research software. This systematic approach enables solo researchers to move beyond conceptualizing ADR principles and actively implement them through software development, focusing on both the creation of research tools and the concurrent learning derived from their application and iterative improvement. The framework’s emphasis on perceptually-driven refinement incorporates continuous evaluation of the software’s functionality and usability, fostering a cyclical process of design, implementation, and learning.

The SHAPR Framework centers on an iterative development cycle for research software, prioritizing continuous evaluation alongside construction. This process involves building a functional prototype, subjecting it to rigorous testing – potentially including user studies or automated analysis – and then incorporating the resulting insights to refine the software’s design and functionality. This cycle of build, test, and refine is repeated throughout the research process, allowing the software to evolve in response to emerging data and research questions. The emphasis on iterative development facilitates learning not only about the research topic but also about the effectiveness of the software itself as a research tool, leading to more robust and reliable findings.
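The build, test, and refine cycle described above can be sketched in a few lines of Python. This is only an illustration, not code from the SHAPR paper: the `run_cycle` function, the `Iteration` record, and the toy checks are all hypothetical names, and the prototype is reduced to a set of feature labels so the loop stays readable.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet, List

# Hypothetical stand-in for a prototype: the set of features built so far.
Prototype = FrozenSet[str]


@dataclass
class Iteration:
    """Record of one pass through the cycle."""
    number: int
    failures: List[str]  # names of the checks that failed in this pass


def run_cycle(
    prototype: Prototype,
    refine: Callable[[Prototype, List[str]], Prototype],
    checks: Dict[str, Callable[[Prototype], bool]],
    max_iterations: int = 5,
) -> List[Iteration]:
    """Test the prototype, record the outcome, refine, and repeat."""
    history: List[Iteration] = []
    for number in range(1, max_iterations + 1):
        failures = [name for name, check in checks.items() if not check(prototype)]
        history.append(Iteration(number, failures))
        if not failures:
            break  # every check passed, so stop iterating
        prototype = refine(prototype, failures)
    return history


# Toy example (assumed checks): each refinement addresses the first failure.
checks = {
    "handles_missing_values": lambda p: "handles_missing_values" in p,
    "has_usage_notes": lambda p: "has_usage_notes" in p,
}
history = run_cycle(frozenset(), lambda p, failed: p | {failed[0]}, checks)
for step in history:
    print(step.number, step.failures)
```

Keeping the full `history`, rather than only the final prototype, is the point of the sketch: the record of what failed in each pass is the raw material for the reflection the framework asks for.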

The SHAPR framework addresses key challenges in solo research involving AI-assisted software development by prioritizing methodological rigor, human accountability, and iterative learning. Specifically, it provides a structured process to move beyond ad-hoc AI integration, encouraging researchers to explicitly define research questions, design software as a research instrument, and systematically evaluate results. This approach aims to mitigate risks associated with ‘black box’ AI outputs by maintaining human oversight throughout the development lifecycle and documenting design decisions. Furthermore, SHAPR promotes continuous learning through iterative refinement of both the software and the underlying research methodology, fostering a cycle of development and evaluation that improves the validity and reliability of findings.
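One way to make "human oversight" and "documenting design decisions" concrete is a small decision log in which every AI suggestion stays open until the researcher records a verdict and a reason. The sketch below is an assumption about how such a log might look, not an artefact defined by the paper; `Decision`, `DecisionLog`, and the example suggestions are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Decision:
    """One AI suggestion and the researcher's verdict on it."""
    suggestion: str
    accepted: Optional[bool] = None  # None means not yet reviewed by the human
    rationale: str = ""


class DecisionLog:
    """Hypothetical log: a suggestion is settled only after a human signs off."""

    def __init__(self) -> None:
        self.entries: List[Decision] = []

    def propose(self, suggestion: str) -> Decision:
        """Record an AI suggestion as pending review."""
        decision = Decision(suggestion)
        self.entries.append(decision)
        return decision

    def review(self, decision: Decision, accepted: bool, rationale: str) -> None:
        """Store the human verdict; a written reason is mandatory."""
        if not rationale.strip():
            raise ValueError("a written rationale is required for every verdict")
        decision.accepted = accepted
        decision.rationale = rationale

    def unreviewed(self) -> List[Decision]:
        """Return suggestions that no human has ruled on yet."""
        return [d for d in self.entries if d.accepted is None]


log = DecisionLog()
first = log.propose("Cache parsed survey files to speed up reruns")
second = log.propose("Drop rows with missing ages")
log.review(first, accepted=True, rationale="No effect on results; reruns are faster")
print(len(log.unreviewed()))
```

Refusing an empty rationale is a deliberate design choice in this sketch: it makes silently accepting a suggestion slightly harder than thinking about it.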

The Illusion of Control

Research software, especially when developed as a complete, full-stack system, transcends mere tooling and becomes a concrete expression of a researcher’s core hypotheses and analytical frameworks. Unlike simply applying existing software, the process of creation forces explicit articulation of underlying assumptions – how data is structured, what relationships are prioritized, and the very logic by which conclusions are drawn. This tangible embodiment allows for a unique form of validation; inconsistencies between the implemented model and initial theoretical expectations become readily apparent, revealing potential flaws or prompting refinements in the research design. Consequently, the software isn’t just a means to an answer, but an integral part of the research itself, fostering a deeper, more nuanced understanding of the investigated phenomena.

The process of constructing research software isn’t merely a technical exercise, but a powerful engine for discovery itself. Actively building these systems forces researchers to explicitly define and codify their underlying assumptions, revealing inconsistencies or gaps in logic that might otherwise remain hidden within abstract theoretical frameworks. This ‘learning-by-building’ approach facilitates a deeper, more nuanced understanding of the research question, as the practical demands of implementation often expose unforeseen complexities and prompt iterative refinement of the analytical model. Through the challenges of translating theory into functioning code, researchers gain novel insights, uncover hidden relationships within the data, and ultimately strengthen the validity and robustness of their findings – often leading to unexpected avenues of inquiry and entirely new research directions.

The integration of artificial intelligence tools throughout the software development lifecycle is rapidly reshaping the capabilities of individual researchers. No longer constrained by the time-intensive demands of coding, testing, and debugging, scientists can leverage AI assistance for tasks ranging from automated code generation and documentation to intelligent error detection and performance optimization. This allows a solo researcher to effectively scale their efforts, iterating on complex analytical models and building sophisticated research software with a speed and precision previously accessible only to large teams. The result is not merely increased productivity, but a demonstrable improvement in the quality and robustness of the research itself, as AI-driven tools can identify subtle bugs and potential biases that might otherwise go unnoticed, ultimately accelerating the pace of discovery.

The SHAPR framework, despite its aspirations for methodological rigor, inevitably courts the same fate as all elegantly conceived systems. It proposes a structured approach to solo research software development with AI assistance, yet production realities will undoubtedly introduce unforeseen complexities. As a line widely attributed to Brian Kernighan puts it, “Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.” This sentiment captures the inherent tension between theoretical frameworks and the messy, unpredictable nature of real-world software creation. SHAPR, like all such attempts, will become a foundation upon which new layers of technical debt are built, a testament to the enduring gap between intention and implementation.

What’s Next?

The SHAPR framework, presented here, feels…predictable. A meticulously crafted methodology for solo developers wielding generative AI. It’s a valiant attempt to impose order on what will inevitably become chaos. One suspects the first production deployment will reveal that the ‘rigorous methodology’ section conveniently overlooked edge cases involving improperly formatted data and the inherent instability of LLM outputs. They’ll call it AI debt and raise funding for a ‘robustness layer’.

The focus on human accountability is admirable, if naive. The temptation to simply accept the AI’s suggestions, to offload cognitive burden entirely, will prove irresistible. Soon, the framework will be less about ‘human-centred’ development and more about damage control after the AI confidently introduces a critical bug. It’s a familiar pattern – the elaborate system, once a simple bash script, grows increasingly complex, masking the original intent.

Future work must address the inevitable erosion of developer skill. If the AI handles the tedious aspects of coding, what remains for the human? The framework acknowledges learning, but doesn’t account for unlearning – the slow atrophy of fundamental skills. The real challenge isn’t building software with AI, it’s preventing developers from becoming glorified prompt engineers, hopelessly reliant on a black box. And, of course, the documentation will lie again.

Original article: https://arxiv.org/pdf/2602.12443.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-16 15:51