Author: Denis Avetisyan

As generative social robots move into classrooms, careful consideration of their underlying knowledge is crucial for effective and responsible learning support.

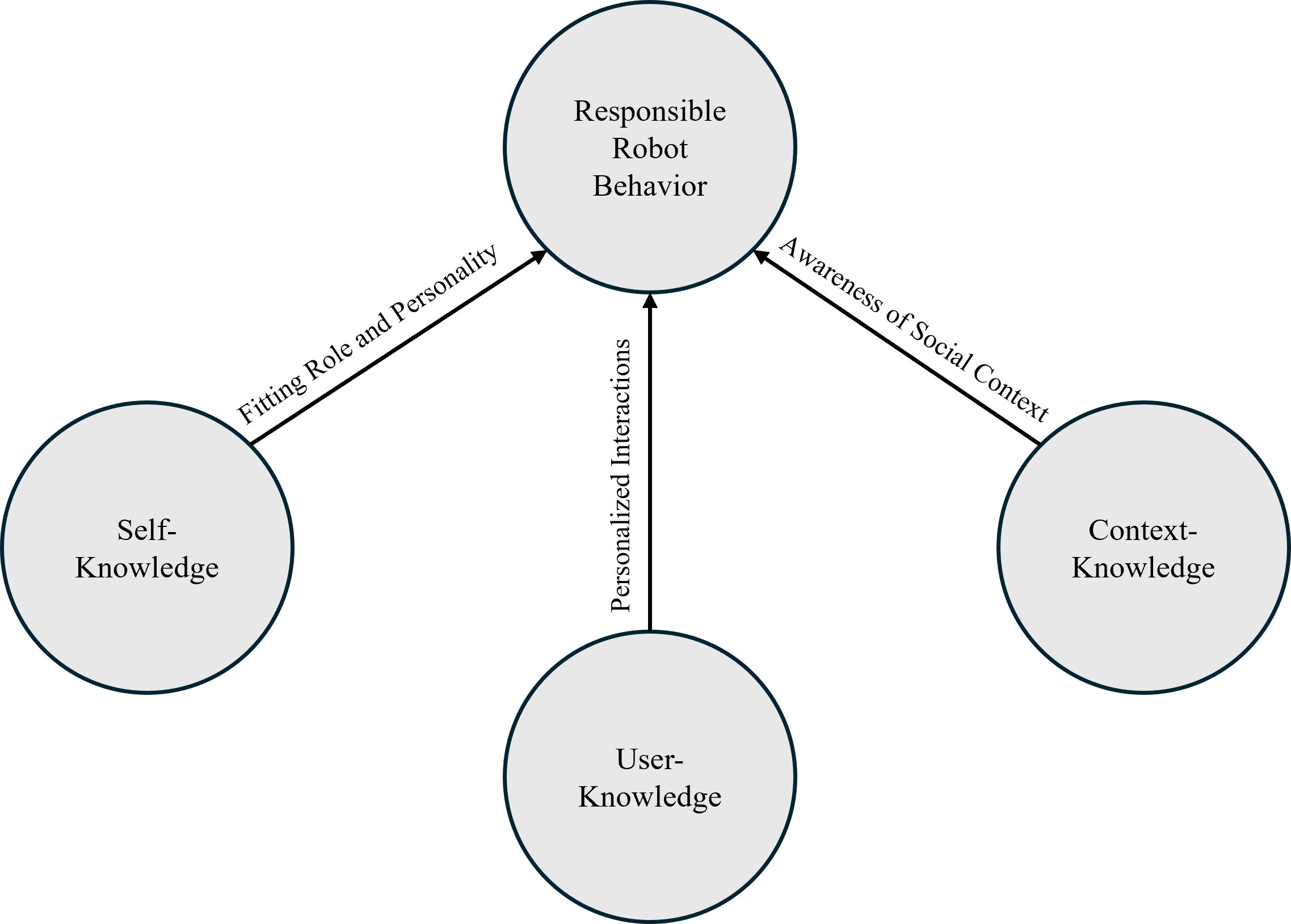

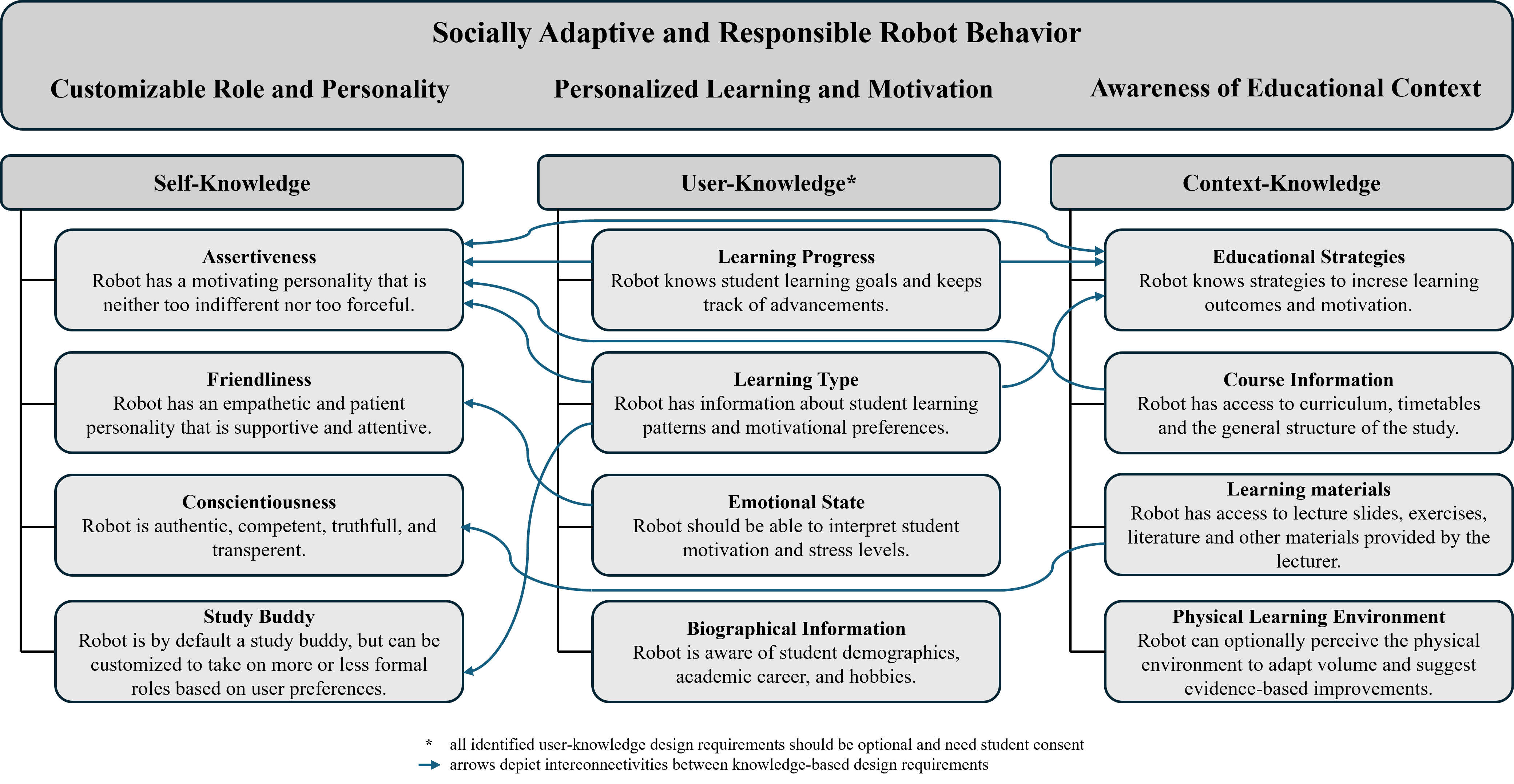

This review outlines the knowledge-based design requirements for tutoring-oriented social robots, emphasizing configurable self-knowledge, personalized user understanding, and contextual awareness.

While large language models promise adaptive and personalized learning experiences, their effective and responsible integration into educational settings requires more than defining desired behaviors. This need is addressed in ‘Knowledge-Based Design Requirements for Generative Social Robots in Higher Education’, which investigates the foundational knowledge necessary for tutoring-oriented generative social robots in higher education. Through interviews with students and lecturers, this work identifies twelve key design requirements spanning self-knowledge, user-knowledge, and context-knowledge, establishing a structured basis for building effective and ethical learning companions. How can these knowledge-based design principles be translated into practical guidelines for developers and, ultimately, enhance the impact of generative social robots on student learning?

Deconstructing the Standard: The Promise of Personalized Learning

Conventional educational models, frequently designed around standardized curricula and pacing, often overlook the diverse learning needs present within any classroom. This one-size-fits-all approach can inadvertently create barriers for students who learn at different speeds, possess varying prior knowledge, or respond better to alternative teaching styles. Consequently, disengagement becomes a common issue, as students struggle to keep pace or feel unchallenged, ultimately leading to suboptimal academic outcomes. Research indicates this mismatch between instructional methods and individual student needs contributes significantly to decreased motivation, increased frustration, and a widening achievement gap, highlighting the necessity for more adaptable and personalized learning experiences.

Personalized Learning represents a departure from standardized education, aiming to tailor the learning experience to individual needs; however, realizing this potential hinges on the development of sophisticated adaptive technologies. These technologies must move beyond simply presenting material at varying speeds, and instead dynamically adjust to a student’s learning style – whether visual, auditory, or kinesthetic – as well as their existing prior knowledge. Robust systems are needed to accurately assess these characteristics, identify knowledge gaps, and then deliver content that is appropriately challenging and engaging. Such technologies demand complex algorithms and continuous data analysis to ensure that the learning path remains optimally aligned with each student’s evolving understanding, promising a more effective and fulfilling educational journey.
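The idea of identifying knowledge gaps and then serving appropriately challenging content can be sketched in a few lines. This is a minimal illustration, not the method of any system discussed here: the `Item` class, the 0-to-1 difficulty scale, the update rate, and the `stretch` margin are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Item:
    topic: str
    difficulty: float  # hypothetical scale: 0.0 (easy) to 1.0 (hard)


def update_mastery(mastery: Dict[str, float], topic: str,
                   correct: bool, rate: float = 0.3) -> None:
    """Nudge the mastery estimate toward 1.0 on a correct answer, else toward 0.0."""
    target = 1.0 if correct else 0.0
    current = mastery.get(topic, 0.5)  # unknown topics start at 0.5
    mastery[topic] = current + rate * (target - current)


def next_item(mastery: Dict[str, float], items: List[Item],
              stretch: float = 0.1) -> Item:
    """Pick an item from the weakest topic, slightly above the current estimate."""
    topics = sorted({item.topic for item in items})  # sorted for deterministic ties
    weakest = min(topics, key=lambda t: mastery.get(t, 0.5))
    goal = mastery.get(weakest, 0.5) + stretch
    candidates = [item for item in items if item.topic == weakest]
    return min(candidates, key=lambda item: abs(item.difficulty - goal))


mastery: Dict[str, float] = {}
update_mastery(mastery, "recursion", correct=False)
update_mastery(mastery, "loops", correct=True)
bank = [Item("recursion", 0.2), Item("recursion", 0.5), Item("loops", 0.7)]
chosen = next_item(mastery, bank)
print(chosen)
```

A real tutoring system would likely use a richer learner model; the point here is only that "gap detection" and "content selection" are separate steps that can each be inspected.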

Effective personalized learning hinges on a comprehensive grasp of individual student characteristics, particularly what is termed ‘User-Knowledge’ – encompassing not just what a student knows, but how they know it, their preferred learning styles, and existing cognitive frameworks. Recent research delved into this concept through interviews with twelve participants – an equal cohort of students and lecturers – to pinpoint the core elements of User-Knowledge and how these impact instructional design. The study sought to bridge the gap between pedagogical theory and practical application, revealing that a nuanced understanding of a student’s pre-existing knowledge base, coupled with an awareness of their learning preferences, is crucial for creating truly adaptive and effective learning experiences. Findings highlighted the importance of lecturers actively assessing and integrating this User-Knowledge into their teaching strategies, ultimately fostering deeper engagement and improved learning outcomes.

The Algorithmic Tutor: Generative Social Robots as Learning Companions

Generative Social Robots offer a departure from traditional tutoring systems by leveraging Large Language Models (LLMs) to facilitate dynamic, personalized learning experiences. Unlike systems reliant on pre-scripted dialogues or limited response sets, these robots utilize LLMs to generate communicative behaviors in real-time, allowing for more nuanced and adaptable interactions with students. This capability enables the robot to respond to a wider range of student questions and learning styles, creating an engaging and responsive tutoring environment. The system’s ability to move beyond static content delivery represents a significant advancement in the field of educational robotics, aiming to provide a more human-like and effective learning companion.

Generative Social Robots differentiate themselves from traditional tutoring systems by dynamically constructing responses rather than retrieving pre-defined content. This autonomous generation of communicative behavior is achieved through the integration of Large Language Models, enabling the robot to formulate explanations, ask clarifying questions, and provide feedback tailored to the individual student’s performance and expressed understanding. Adaptation occurs in real-time, allowing the robot to adjust its teaching strategy based on the student’s immediate responses and the evolving context of the learning interaction, moving beyond a fixed curriculum to address specific knowledge gaps or misconceptions as they arise.

Alongside User-Knowledge, the functional efficacy of Generative Social Robots as tutoring agents relies on two further knowledge domains: Context-Knowledge, encompassing the factual content of the subject matter, and Self-Knowledge, which governs the robot’s ability to formulate coherent and pedagogically appropriate responses. The study derived these requirements from qualitative interviews that averaged 45 minutes, with a range of 27 to 70 minutes per participant. This data was used to define the scope and structure of both knowledge bases, ensuring the robot can not only access relevant information but also present it in a manner conducive to effective learning and understanding.
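One way to picture the three knowledge types (self, user, and context) is as separate, configurable records that are combined into the instructions given to a language model. The sketch below is an assumption-laden illustration: the class names, field names, and prompt wording are hypothetical and do not come from the paper.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SelfKnowledge:  # hypothetical fields: how the robot presents itself
    role: str
    tone: str
    limits: List[str] = field(default_factory=list)


@dataclass
class UserKnowledge:  # hypothetical fields: what is known about the student
    prior_knowledge: str
    preferences: List[str] = field(default_factory=list)


@dataclass
class ContextKnowledge:  # hypothetical fields: course and subject matter
    course: str
    materials: List[str] = field(default_factory=list)


def build_system_prompt(self_k: SelfKnowledge, user_k: UserKnowledge,
                        context_k: ContextKnowledge) -> str:
    """Combine the three knowledge records into one plain-text instruction."""
    lines = [
        f"You are a {self_k.role}. Tone: {self_k.tone}.",
        "Limits: " + "; ".join(self_k.limits or ["none stated"]),
        f"Student prior knowledge: {user_k.prior_knowledge}",
        "Student preferences: " + ", ".join(user_k.preferences or ["none stated"]),
        f"Course: {context_k.course}",
        "Materials: " + ", ".join(context_k.materials or ["none provided"]),
    ]
    return "\n".join(lines)


prompt = build_system_prompt(
    SelfKnowledge("tutoring robot", "encouraging", ["do not grade exams"]),
    UserKnowledge("has completed an introductory statistics course", ["worked examples"]),
    ContextKnowledge("Research Methods", ["lecture 3 slides"]),
)
print(prompt)
```

Keeping the three records separate means each can be configured or audited on its own, for example by a lecturer who wants to adjust the robot's stated limits without touching student data.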

The Ethics of Intelligence: Responsible AI Frameworks in Education

The implementation of artificial intelligence within educational settings introduces significant ethical challenges centered on data security and student privacy. AI-driven tools frequently require the collection and analysis of sensitive student data, including performance metrics, behavioral patterns, and personal information. This data is vulnerable to breaches, unauthorized access, and misuse, potentially leading to identity theft, discrimination, or compromised future opportunities for students. Furthermore, the use of algorithms to assess student performance or predict future success raises concerns about algorithmic bias and fairness, requiring robust data governance policies and adherence to relevant privacy regulations, such as GDPR and FERPA, to protect student rights and ensure responsible AI deployment.

Value Sensitive Design (VSD) and AI for Social Good represent proactive methodologies for embedding ethical considerations directly into the development and deployment of AI systems in education. VSD, a systematically iterative approach, necessitates identifying stakeholders – including students, educators, and administrators – and explicitly mapping their values onto the technical design of the AI. This involves conducting empirical research to understand these values, translating them into technical requirements, and iteratively evaluating the design to ensure alignment. AI for Social Good, conversely, prioritizes addressing societal challenges – such as educational inequity – through the purposeful application of AI technologies. Both frameworks emphasize a human-centered design process, requiring continuous assessment of potential biases and unintended consequences to promote equitable access, opportunity, and outcomes for all learners.

The necessity of transparent AI systems in educational contexts stems from the requirement for both trust-building and effective intervention. Understanding the reasoning behind an AI’s output – how it arrives at a specific explanation or recommendation – is vital for educators to validate its appropriateness and identify potential biases or errors. The lecturers interviewed in the study were well placed to speak to this: they had a mean age of 52 years (SD = 10.95) and an average of 18 years of teaching experience (SD = 8.94, range 6-33 years). This experienced cohort provided critical pedagogical insight, emphasizing the need for AI systems to be explainable and allow for human oversight to maintain educational integrity and student well-being.

Orchestrating the Change: A Structured Approach to AI Implementation

The seamless integration of Generative Social Robots into education isn’t simply a matter of introducing new technology; it demands a carefully considered strategic framework. Successful implementation requires moving beyond initial enthusiasm to establish clear objectives, meticulously plan the robot’s role within the learning environment, and proactively address potential challenges. This structured approach ensures the technology complements existing pedagogical practices, rather than disrupting them. A well-defined framework accounts for the unique characteristics of both the robot and the learners, fostering a positive and productive interaction that maximizes educational benefits. Without such a roadmap, the potential of these advanced tools remains largely untapped, and valuable resources may be misallocated, hindering genuine advancements in learning experiences.

Structured approaches to integrating artificial intelligence, such as the IDEE and 4E Frameworks, are proving essential for maximizing the benefits of these technologies in complex environments. The IDEE Framework (Identify, Design, Execute, Evaluate) offers a cyclical process for defining clear learning objectives, developing AI-driven interventions, implementing those interventions, and rigorously assessing their impact. Complementing this, the 4E Framework (Engagement, Emotion, Embodiment, and Evaluation) focuses on creating AI interactions that are not only effective but also emotionally resonant and physically embodied, which is particularly relevant in social robotics. By systematically addressing both the what (desired outcomes) and the how (methods of delivery and assessment), these frameworks provide a roadmap for successful AI implementation, ensuring that technological advancements translate into measurable improvements in learning and development.

Successful integration of artificial intelligence into educational contexts hinges not simply on the technology itself, but on a robust system supporting its use and measuring its effects. A comprehensive analysis, involving the coding of 642 text segments across four primary categories and seventeen subcategories, revealed a granular understanding of the prerequisite knowledge needed for effective AI tutoring. This detailed examination underscored the critical need for readily available, relevant learning materials to complement AI instruction, alongside a dedicated commitment to continuously monitoring student learning progress. Such monitoring allows for iterative adjustments to the AI’s approach and ensures a positive impact on educational outcomes, transforming the technology from a novel tool into a truly effective learning partner.
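Coding interview segments into categories and subcategories ultimately produces a table of counts. The snippet below sketches that tallying step with a handful of toy codes; the category and subcategory labels are placeholders invented for illustration and are not the study's coding scheme or data.

```python
from collections import Counter
from typing import List, Tuple

# Toy (category, subcategory) codes; NOT the study's data or its real code names.
coded_segments: List[Tuple[str, str]] = [
    ("self-knowledge", "role"),
    ("user-knowledge", "prior knowledge"),
    ("user-knowledge", "preferences"),
    ("context-knowledge", "course materials"),
    ("user-knowledge", "prior knowledge"),
]


def tally(segments: List[Tuple[str, str]]) -> Tuple[Counter, Counter]:
    """Count segments per category and per (category, subcategory) pair."""
    by_category = Counter(category for category, _ in segments)
    by_subcategory = Counter(segments)
    return by_category, by_subcategory


by_category, by_subcategory = tally(coded_segments)
for category, count in by_category.most_common():
    print(f"{category}: {count}")
```

Counts like these show where participants' attention concentrated, though frequency alone does not establish which requirements matter most.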

Rewriting the Classroom: The Future of Personalized Education

The emergence of generative social robots signals a potential paradigm shift in education, moving beyond standardized curricula towards truly personalized learning experiences. These aren’t simply pre-programmed tutors; they utilize advanced artificial intelligence to dynamically assess a student’s strengths, weaknesses, and preferred learning style, adapting instructional content and pace accordingly. Crucially, realizing this revolution demands more than just technological advancement; robust ethical frameworks are essential to address concerns surrounding data privacy, algorithmic bias, and the potential for over-reliance on robotic educators. Strategic implementation, focusing on augmenting – not replacing – human teachers, will be key to ensuring these robots foster critical thinking, creativity, and essential social-emotional skills, ultimately unlocking each student’s unique potential and cultivating a lifelong passion for learning.

The capacity of generative social robots to truly personalize education stems from their ability to move beyond standardized curricula and cater to the unique profile of each learner. These robots aren’t simply delivering pre-programmed lessons; they are designed to assess a student’s strengths, weaknesses, and preferred learning modalities – whether visual, auditory, kinesthetic, or a blend thereof. Through ongoing interaction and data analysis, the robots adapt the pace, content, and delivery method to optimize comprehension and retention. This adaptive learning fosters not only improved academic performance but also cultivates a sense of agency and intrinsic motivation, ultimately nurturing a lifelong enthusiasm for acquiring knowledge and skills. The system effectively transforms education from a one-size-fits-all model to a dynamic, individualized journey, unlocking each student’s potential and empowering them to become self-directed, engaged learners.

Intelligent motivational support, delivered via artificial intelligence, represents a significant advancement in fostering student engagement and maximizing potential. These systems move beyond simple encouragement, adapting to individual learner profiles and providing precisely timed interventions based on performance and identified needs. Recent studies, including interviews with a cohort of students averaging 27 years of age (with a standard deviation of 3.10 years), have been instrumental in refining these AI algorithms, ensuring that motivational strategies resonate with a diverse range of adult learners. The aim is not merely to boost scores, but to cultivate intrinsic motivation, fostering a sustained passion for learning and empowering individuals to reach their academic and personal goals through personalized encouragement and support.

The pursuit of genuinely intelligent tutoring systems, as detailed in the paper’s exploration of knowledge-based design, inherently demands a dismantling of conventional pedagogical approaches. It’s a process of reverse-engineering how humans learn, identifying the core components, and then rebuilding them in a machine. This echoes the Rolling Stones refrain: you can’t always get what you want, but sometimes you get what you need. The paper doesn’t aim to replicate human teachers perfectly, but rather to distill the essential knowledge structures – self-knowledge, user-knowledge, and contextual awareness – necessary for effective personalized learning. It’s about identifying what a robot needs to know to be a valuable educational tool, even if it doesn’t possess the full spectrum of human understanding.

What Breaks Down From Here?

The comfortable assumption underpinning much of this work, that a robot can meaningfully possess “knowledge” relevant to higher education, deserves scrutiny. The paper rightly identifies requirements for configurable self-knowledge, user profiles, and contextual awareness, but these are, at base, elaborate mirroring exercises. The real challenge isn’t building a robot that seems to know, but understanding what happens when the illusion falters. What systemic errors emerge when a generative model, however sophisticated, encounters a student’s genuinely novel insight, a question outside its training data, or a logical paradox?

Future work shouldn’t shy away from deliberately stressing these boundaries. Instead of optimizing for seamless interaction, the field needs to actively seek failure modes. Can one reliably induce a “knowledge gap” in the robot to observe how it handles uncertainty, admits ignorance, or defers to human expertise? The goal isn’t to create a perfect tutor, but to map the edge cases where the system’s inherent limitations become instructive – revealing not what the robot knows, but what knowing itself means in a pedagogical context.
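Deliberately probing for failure modes could start with something as simple as feeding a tutor questions it should not be able to answer and checking whether it admits ignorance or defers. The harness below is a rough sketch under strong assumptions: `stub_tutor` is a stand-in for a real system, and matching a few fixed phrases is a crude proxy for detecting abstention.

```python
from typing import Callable, List

# Hypothetical phrases treated as signs of admitting ignorance or deferring.
ABSTAIN_MARKERS = ("i don't know", "i am not sure", "ask your lecturer")


def abstains(reply: str) -> bool:
    """Return True if the reply contains any abstention marker."""
    text = reply.lower()
    return any(marker in text for marker in ABSTAIN_MARKERS)


def probe(tutor: Callable[[str], str], questions: List[str]) -> float:
    """Fraction of the given questions on which the tutor abstains or defers."""
    if not questions:
        return 0.0
    hits = sum(abstains(tutor(question)) for question in questions)
    return hits / len(questions)


def stub_tutor(question: str) -> str:
    """Stand-in tutor: answers one known question and defers on everything else."""
    known = {"what is recursion?": "A function that calls itself."}
    return known.get(question.lower(), "I don't know; please ask your lecturer.")


rate = probe(stub_tutor, ["Who wins the election in 2040?", "What is recursion?"])
print(f"abstention rate: {rate:.2f}")
```

Run against a set of questions known to be out of scope, a low abstention rate would flag exactly the kind of confident overreach the paragraph above warns about.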

Ultimately, the utility of these generative social robots may reside less in their ability to deliver information and more in their capacity to expose the fragility of knowledge itself. The true test isn’t whether they can teach, but whether they can help students understand what it means to not know, and to embrace the productive discomfort of intellectual inquiry.

Original article: https://arxiv.org/pdf/2602.12873.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-16 14:04