Author: Denis Avetisyan

A new multi-agent system uses visual feedback to refine physics simulations, bridging the gap between code generation and accurate results.

Perceptual self-reflection in agentic systems enables improved physics simulation code generation by visually validating and correcting outputs.

Conventional methods for verifying physics simulation code struggle to differentiate syntactically correct solutions from those exhibiting physically implausible behavior, a challenge known as the ‘oracle gap’. The paper ‘Perceptual Self-Reflection in Agentic Physics Simulation Code Generation’ introduces a multi-agent system for physics simulation code generation that addresses this limitation through perceptual validation: analyzing rendered simulation outputs with a vision-language model. The results indicate that this approach, which relies on iterative refinement driven by visual feedback, substantially improves accuracy across diverse physics domains compared to single-shot generation baselines. Could this paradigm of ‘perceptual self-reflection’ unlock new levels of automation and reliability in engineering workflows and physics-based data generation pipelines?

The Illusion of Precision: Why Simulations Lie

The pursuit of accurate physics simulation frequently encounters a curious paradox: code can execute flawlessly, free of syntactic errors, yet still generate results that defy physical reality. This discrepancy, termed the ‘Oracle Gap’, stems from the fundamental difference between conventional software testing and validating physical plausibility. Standard code verification confirms that a program does what it is programmed to do, but it doesn’t inherently ensure that ‘what it is programmed to do’ aligns with the immutable laws governing the universe. Consequently, a simulation might perfectly solve the equations it embodies, while those equations themselves are flawed or inappropriately applied, leading to demonstrably unrealistic outcomes. This presents a significant challenge, as researchers may unknowingly base conclusions on simulations that, while internally consistent, bear little resemblance to the physical phenomena they intend to model.

Traditional software testing focuses on verifying that code behaves according to its specification – that it produces the expected output for a given input. However, ensuring physical correctness in simulation demands something far more stringent: adherence to the immutable laws of nature. Unlike debugging a logical error, identifying physically implausible behavior requires evaluating whether the simulation’s internal dynamics – its forces, interactions, and resulting motion – align with established physical principles. This presents a unique challenge because physical laws are often continuous and complex, making it difficult to define discrete, testable criteria for correctness. A simulation can pass all standard code tests yet still produce results that violate fundamental physics, subtly undermining the validity of the modeled system and potentially leading to erroneous conclusions.

The potential for simulations to mislead extends beyond simple computational errors; without rigorous validation against established physical laws, even syntactically correct models can yield profoundly inaccurate results. This poses a significant threat to scientific advancement across disciplines reliant on computational modeling, from climate science and materials design to astrophysics and drug discovery. A simulation appearing to function flawlessly may still produce outputs divorced from reality, leading researchers down unproductive paths and potentially misinforming critical decisions. The consequence isn’t merely wasted time or resources, but the risk of building theories or technologies on fundamentally flawed foundations, emphasizing the vital need for validation techniques that prioritize physical plausibility over purely computational correctness.

Perceptual Self-Reflection: A System That Checks Its Own Work

The Perceptual Self-Reflection Architecture addresses the Oracle Gap – the difficulty in verifying the correctness of complex simulations – through a novel validation process centered on visual analysis. This architecture moves beyond traditional code inspection by generating executable simulations and then evaluating their physical realism by rendering animation frames. These rendered frames are then analyzed, not for aesthetic quality, but as data representing the simulation’s adherence to expected physical laws and behaviors. This approach allows for indirect validation: discrepancies between the rendered visuals and established physical principles indicate potential errors within the underlying simulation code, providing a means of automated and objective assessment without requiring explicit ground truth data or manual review of the code itself.

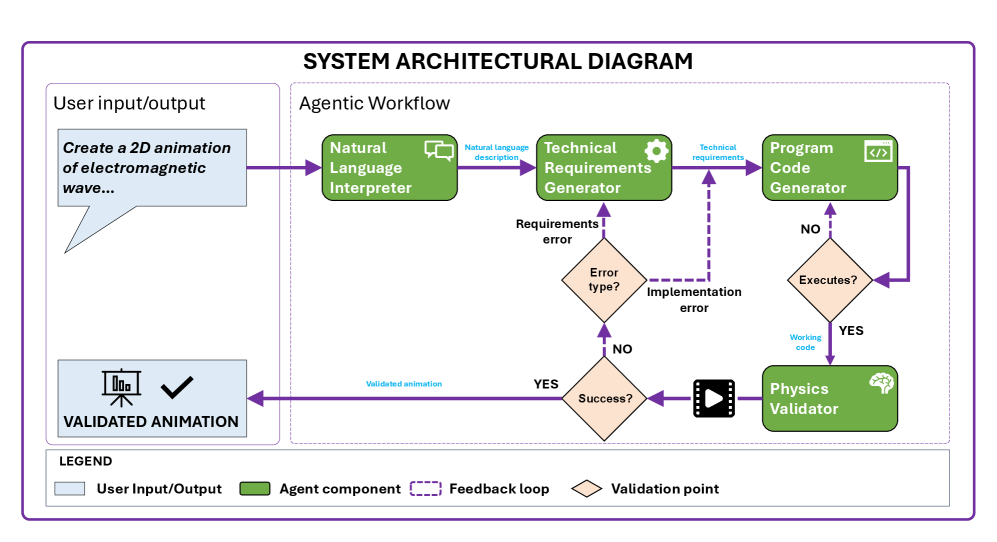

The Perceptual Self-Reflection Architecture utilizes a sequential pipeline of agents to generate executable simulations from initial natural language input. First, a Natural Language Interpreter processes the input to extract intended functionality. This information is then passed to a Technical Requirements Generator, which translates the interpreted input into a formal set of technical specifications. Subsequently, a Physics Code Generator utilizes these specifications to produce the simulation code itself, ultimately resulting in an executable simulation ready for validation. This modular approach allows for traceability from initial intent to concrete implementation, facilitating debugging and refinement of the simulation process.
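The sequential hand-off between agents can be sketched as a chain of functions in which each stage consumes the previous stage's output. This is a minimal illustration, not the paper's implementation: `run_pipeline`, `PipelineResult`, and the three `fake_*` stages are hypothetical names, and the stand-in stages return fixed strings where a real system would call a language model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PipelineResult:
    """Intermediate artifacts, kept so intent can be traced to implementation."""
    interpretation: str
    requirements: str
    code: str


def run_pipeline(
    prompt: str,
    interpret: Callable[[str], str],
    specify: Callable[[str], str],
    generate: Callable[[str], str],
) -> PipelineResult:
    """Chain the three generation stages in order."""
    interpretation = interpret(prompt)        # Natural Language Interpreter
    requirements = specify(interpretation)    # Technical Requirements Generator
    code = generate(requirements)             # Physics Code Generator
    return PipelineResult(interpretation, requirements, code)


# Hypothetical stand-in stages (assumptions for illustration only).
def fake_interpret(prompt: str) -> str:
    return f"intent: {prompt.strip().lower()}"


def fake_specify(interpretation: str) -> str:
    return f"spec[{interpretation}] dt=0.01 steps=1000"


def fake_generate(requirements: str) -> str:
    return f"# simulation generated from: {requirements}\n"


result = run_pipeline("Simulate a pendulum", fake_interpret, fake_specify, fake_generate)
print(result.requirements)
```

Keeping every intermediate artifact in the result is what makes the traceability described above possible: a failed validation can be traced back to the specification or the interpretation rather than only to the final code.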

The Physics Validator component employs a Vision-Capable Language Model to quantitatively assess the physical realism of simulated scenarios. This assessment is performed by analyzing rendered animation frames generated from the simulation code; the model is trained to identify inconsistencies with expected physical behavior based on visual cues. Specifically, the Vision-Capable Language Model analyzes features within the frames – such as object trajectories, deformation, and interactions – and correlates these with underlying physical principles. This analysis results in a validation score indicating the degree to which the simulation adheres to expected physical behavior, providing a measurable metric for code verification.

Defining Physical Correctness: Beyond Simple Error Detection

The Physics Validator assesses simulation accuracy using ‘Validation Criteria’ which go beyond standard software testing. These criteria evaluate whether the simulation’s behavior aligns with established physical laws, with a primary focus on the conservation of energy and other fundamental principles. This approach differs from traditional code validation, which primarily confirms the absence of programming errors; the Physics Validator instead verifies that the results of the simulation are physically plausible, even if the underlying code functions correctly. This necessitates the implementation of domain-specific checks to confirm adherence to these principles throughout the simulation process, identifying deviations from expected physical behavior.

For simulations modeling ‘Conservative Systems’ (those governed by the principle of energy conservation), the Physics Validator applies criteria that check energy balance throughout the simulation. These criteria monitor the total energy of the system and flag deviations exceeding a defined tolerance. Both unphysical energy gains, which amount to perpetual motion, and unphysical losses, where energy dissipates without any physical mechanism, are detected and reported as validation failures. This catches accumulated numerical error before it produces unstable or non-physical results. The tolerance for acceptable energy drift is configurable, allowing users to balance simulation accuracy against computational cost.
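An energy-drift check of this kind can be illustrated numerically. The sketch below is an assumption-laden toy, not the validator from the paper (which works from rendered frames): it integrates a unit-mass harmonic oscillator with two schemes and applies a hypothetical 2% tolerance. Both versions run without error, but explicit Euler steadily gains energy while symplectic Euler stays bounded.

```python
def simulate_oscillator(method: str, dt: float = 0.01, steps: int = 5000):
    """Integrate x'' = -x (unit mass and spring constant); return energy after each step."""
    x, v = 1.0, 0.0
    energies = []
    for _ in range(steps):
        if method == "explicit_euler":
            # Both updates use the old state: energy grows by (1 + dt^2) per step.
            x, v = x + dt * v, v - dt * x
        elif method == "symplectic_euler":
            # Velocity first, then position with the new velocity: energy stays bounded.
            v = v - dt * x
            x = x + dt * v
        else:
            raise ValueError(f"unknown method: {method}")
        energies.append(0.5 * v * v + 0.5 * x * x)
    return energies


def max_relative_drift(energies, initial_energy: float) -> float:
    """Largest relative deviation from the initial energy over the whole run."""
    return max(abs(e - initial_energy) / initial_energy for e in energies)


def passes_energy_check(energies, initial_energy: float, tolerance: float = 0.02) -> bool:
    """Hypothetical criterion: fail if energy ever drifts beyond the tolerance."""
    return max_relative_drift(energies, initial_energy) <= tolerance


E0 = 0.5  # initial energy for x = 1, v = 0
for method in ("explicit_euler", "symplectic_euler"):
    energies = simulate_oscillator(method)
    print(method, passes_energy_check(energies, E0))
```

The two integrators differ by a single reordered line, which is the kind of error that ordinary tests on syntax or execution do not catch.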

The Physics Validator incorporates domain-specific metrics to enhance the precision of validation criteria beyond generalized physical laws. These metrics are customized to the unique characteristics of the simulated system; for example, a fluid dynamics simulation might utilize metrics related to vorticity or turbulence intensity, while a rigid body simulation would employ metrics concerning angular momentum and kinetic energy distribution. Integration of these tailored metrics allows the validator to identify subtle deviations from expected behavior specific to the simulated phenomena, increasing the fidelity of the validation process and enabling the detection of errors that would be missed by broader, less-refined checks. This granular approach ensures that the simulation accurately reflects the target physical system’s behavior, rather than simply adhering to universally applicable, but potentially insufficient, physical principles.
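As one example of a domain-specific metric, angular momentum should stay constant for a body moving under a purely central force. The sketch below is illustrative only: the function name, the `tangential_bug` parameter, and the unit choices (G = 1, unit masses) are assumptions, and the "bug" is a deliberately injected force component perpendicular to the radius.

```python
def orbit_angular_momentum(tangential_bug: float = 0.0, dt: float = 0.001, steps: int = 5000):
    """Leapfrog (kick-drift-kick) orbit around a unit point mass with G = 1.

    `tangential_bug` adds a spurious force perpendicular to the radius, which
    exerts a torque. Returns the angular momentum L = x*vy - y*vx after each step.
    """
    x, y, vx, vy = 1.0, 0.0, 0.0, 1.0  # circular orbit, so L starts at 1.0

    def accel(px: float, py: float):
        r3 = (px * px + py * py) ** 1.5
        ax, ay = -px / r3, -py / r3  # correct central (radial) acceleration
        # Injected error: rotate a fraction of the force by 90 degrees.
        return ax - tangential_bug * ay, ay + tangential_bug * ax

    history = []
    ax, ay = accel(x, y)
    for _ in range(steps):
        vx += 0.5 * dt * ax
        vy += 0.5 * dt * ay
        x += dt * vx
        y += dt * vy
        ax, ay = accel(x, y)
        vx += 0.5 * dt * ax
        vy += 0.5 * dt * ay
        history.append(x * vy - y * vx)
    return history


def max_drift(history, initial: float = 1.0) -> float:
    return max(abs(value - initial) for value in history)


print("correct force:", max_drift(orbit_angular_momentum()))
print("buggy force:  ", max_drift(orbit_angular_momentum(tangential_bug=0.05)))
```

With a purely radial force, each leapfrog kick and drift leaves the angular momentum unchanged, so any drift beyond round-off points to an error in the force model rather than in the integrator.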

From Simulation to Discovery: A System That (Almost) Thinks For Itself

The architecture demonstrably streamlines scientific investigation by automating the traditionally labor-intensive validation process. This automation minimizes the need for researchers to manually verify the accuracy and reliability of computational models and simulations, a critical bottleneck in many fields. By autonomously assessing results against established physical principles and experimental data, the system dramatically accelerates the iterative cycle of hypothesis, computation, and analysis. Consequently, researchers are freed to focus on higher-level tasks – interpreting results, formulating new questions, and pushing the boundaries of scientific understanding – rather than being bogged down in meticulous error checking, ultimately fostering a faster pace of discovery and innovation.

The Physics Code Generator incorporates an ‘Automated Self-Correction’ mechanism designed to significantly improve the efficiency of scientific model development. This proactive error resolution isn’t simply about flagging mistakes; the system analyzes generated code during execution, identifying discrepancies between expected and observed behavior. When errors are detected, the generator doesn’t require external intervention; instead, it automatically modifies the code, testing the changes iteratively until the simulation converges on a valid solution. This internal feedback loop minimizes the need for manual debugging, accelerating the refinement process and allowing the system to independently address a wider range of computational challenges than traditional methods. The result is a robust and self-improving system capable of tackling complex physics problems with reduced human oversight.
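The feedback loop described here can be sketched as a generate, validate, and retry cycle. Everything in this block is hypothetical: `refine_until_valid`, the toy generator and validator, the 0.85 stopping threshold, and the five-iteration cap are assumptions for illustration, and in the described system the validator's score would come from a vision-language model looking at rendered frames.

```python
from typing import Callable, List, Tuple


def refine_until_valid(
    generate: Callable[[str], str],
    validate: Callable[[str], Tuple[float, str]],
    target: float = 0.85,
    max_iterations: int = 5,
) -> Tuple[str, float, List[float]]:
    """Regenerate code with validator feedback until the score reaches `target`."""
    feedback = ""
    best_code, best_score = "", float("-inf")
    scores: List[float] = []
    for _ in range(max_iterations):
        code = generate(feedback)            # feedback is empty on the first pass
        score, feedback = validate(code)     # score in [0, 1] plus a textual critique
        scores.append(score)
        if score > best_score:
            best_code, best_score = code, score
        if score >= target:
            break
    return best_code, best_score, scores


# Toy stand-ins: the first attempt has the wrong sign for gravity.
def toy_generate(feedback: str) -> str:
    return "g = -9.81" if "sign of gravity" in feedback else "g = +9.81"


def toy_validate(code: str) -> Tuple[float, str]:
    if "-9.81" in code:
        return 0.95, ""
    return 0.30, "objects fall upward; check the sign of gravity"


code, score, scores = refine_until_valid(toy_generate, toy_validate)
print(code, score, scores)
```

Capping the number of iterations and returning the best attempt seen so far keeps the loop from running indefinitely when the validator never reports a passing score.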

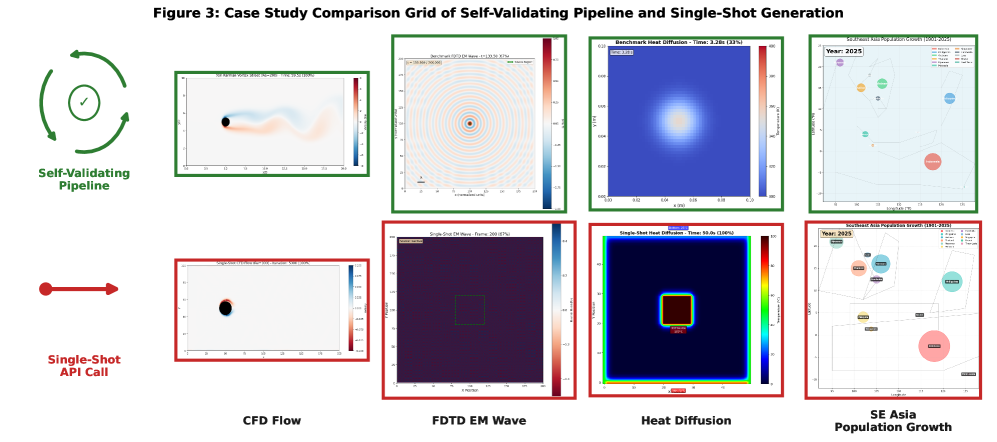

The developed architecture demonstrates a significant leap in automated scientific problem solving, achieving an average physics accuracy of 91% when tested across seven distinct domains. This performance markedly surpasses that of existing methods, indicating a robust and versatile approach to computational physics. Such a high degree of accuracy isn’t merely a numerical improvement; it suggests the framework can reliably generate physics code that produces results consistent with established scientific principles, even when applied to varied and complex scenarios. This advancement promises to not only expedite research but also to reduce the potential for errors inherent in manual code development, ultimately bolstering the trustworthiness of computational simulations and analyses.

The framework also achieves an 86% success rate across the tested scenarios. This metric is the percentage of runs in which the system not only generates a solution but also reaches a predefined target accuracy of 85% or higher. It represents a significant advance over existing approaches, notably exceeding the 40% success rate reported for single-shot generation on comparable computational physics problems. The consistently high success rate underscores the system’s reliability and its potential to accelerate scientific work by reducing the need for manual intervention.
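Average accuracy and success rate measure different things, and a short calculation makes the distinction concrete. The scores below are made-up placeholder values, not results from the paper, and `summarize` is a hypothetical helper.

```python
def summarize(accuracies, target: float = 0.85):
    """Return (mean accuracy, fraction of runs at or above the target accuracy)."""
    mean_accuracy = sum(accuracies) / len(accuracies)
    success_rate = sum(1 for a in accuracies if a >= target) / len(accuracies)
    return mean_accuracy, success_rate


# Placeholder per-run accuracy scores, for illustration only.
example_scores = [0.95, 0.90, 0.88, 0.70, 0.97]
mean_accuracy, success_rate = summarize(example_scores)
print(f"mean accuracy: {mean_accuracy:.2f}, success rate: {success_rate:.2f}")
```

A single weak run lowers the mean only slightly but counts as a full failure in the success rate, which is why the two figures can differ.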

Single-shot generation of solutions to PhD-level computational physics problems remains limited, achieving a success rate of only 40%. This benchmark, established by Ali-Dib and Menou in 2023, highlights how difficult it is to produce code that is both runnable and physically correct on the first attempt. The low success rate indicates a substantial need for automation techniques that can consistently produce valid results without extensive manual intervention. It also shows how much room there is for architectures that validate and refine their own output.

The framework leverages ‘Matplotlib Animation’ to translate complex simulation data into readily interpretable visual representations. This approach moves beyond static charts and graphs, offering dynamic depictions of physical phenomena as they evolve over time. By visualizing processes such as fluid dynamics or particle interactions, researchers gain an intuitive understanding of simulation outcomes, accelerating the identification of patterns, anomalies, and key insights. This enhanced visual feedback loop not only streamlines the analysis process but also fosters a deeper, more nuanced comprehension of the underlying scientific principles, ultimately driving more effective model refinement and discovery.
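Turning simulation data into frames that a vision model could inspect might look like the sketch below. It is a simplification under stated assumptions: rather than `matplotlib.animation`, it draws snapshots on Matplotlib's off-screen Agg canvas and returns them as arrays. It requires NumPy and Matplotlib, and `render_frames` and its parameters are hypothetical names.

```python
import numpy as np
from matplotlib.backends.backend_agg import FigureCanvasAgg
from matplotlib.figure import Figure


def render_frames(xs, ys, n_frames: int = 4, size=(4, 3), dpi: int = 50):
    """Render evenly spaced snapshots of a 2D trajectory as RGBA arrays.

    Requires n_frames >= 2. Each frame shows the path so far plus a marker
    at the current position.
    """
    fig = Figure(figsize=size, dpi=dpi)
    canvas = FigureCanvasAgg(fig)  # off-screen renderer, no display needed
    ax = fig.add_subplot(111)
    ax.set_xlim(min(xs), max(xs))
    ax.set_ylim(min(ys), max(ys))
    (trail,) = ax.plot([], [], lw=1)
    (marker,) = ax.plot([], [], "o")

    frames = []
    for k in range(n_frames):
        i = round(k * (len(xs) - 1) / (n_frames - 1))
        trail.set_data(xs[: i + 1], ys[: i + 1])
        marker.set_data([xs[i]], [ys[i]])
        canvas.draw()
        # Copy the pixel buffer so later draws do not overwrite this frame.
        frames.append(np.asarray(canvas.buffer_rgba()).copy())
    return frames


# Projectile launched with vx = 5 m/s, vy = 10 m/s under g = 9.81 m/s^2.
t = np.linspace(0.0, 2.0, 101)
xs = 5.0 * t
ys = 10.0 * t - 0.5 * 9.81 * t**2
frames = render_frames(xs, ys)
print(len(frames), frames[0].shape)
```

Sampling a few evenly spaced frames instead of every step keeps the input to a vision model small while still showing the shape of the motion.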

The pursuit of flawless simulation, as detailed in this work concerning perceptual self-reflection, feels… familiar. The system’s iterative refinement through visual validation, with agents essentially critiquing their own outputs, is a pragmatic dance with inevitable failure. As Brian Kernighan observed, “Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.” This holds true; the ‘oracle gap’ this paper attempts to bridge isn’t a technical hurdle alone, but an acknowledgement that perfectly modeling reality is an asymptotic goal. Each iteration, each visual check, merely delays the inevitable entropy (a beautifully structured panic, if one prefers) before production finds a novel way to break things.

What’s Next?

The pursuit of self-reflection in simulated agents, as demonstrated, merely relocates the problem. The ‘oracle gap’ doesn’t vanish; it’s now a visual Turing test, demanding ever-more-sophisticated image analysis to confirm what should, theoretically, be known from the code itself. This introduces a new class of failure: perceptual drift, where the simulation looks correct even as fundamental physics quietly breaks. CI is, after all, a temple, and the gods are fickle.

Future work will inevitably focus on automating the creation of these ‘perceptual oracles’. Expect a proliferation of adversarial networks, tasked with finding the most subtle visual discrepancies. This feels less like progress and more like building increasingly elaborate scaffolding around a fundamentally fragile system. Anything that promises to simplify life adds another layer of abstraction, and abstractions are, by definition, leaky.

The true limitation, predictably, isn’t the algorithm, but the data. Visual validation demands immense, meticulously labeled datasets of ‘correct’ physics. The creation of such datasets will become the dominant cost, and the source of the next bottleneck. Documentation is a myth invented by managers, but labeled data? That’s a resource constraint with teeth.

Original article: https://arxiv.org/pdf/2602.12311.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-16 07:26