Author: Denis Avetisyan

New research reveals that even slight communication delays can erode cooperation between artificial intelligence agents, leading to competitive and ultimately less effective teamwork.

Communication latency significantly impacts cooperative behavior in multi-agent systems built with large language models, demonstrating the critical importance of the infrastructure layer.

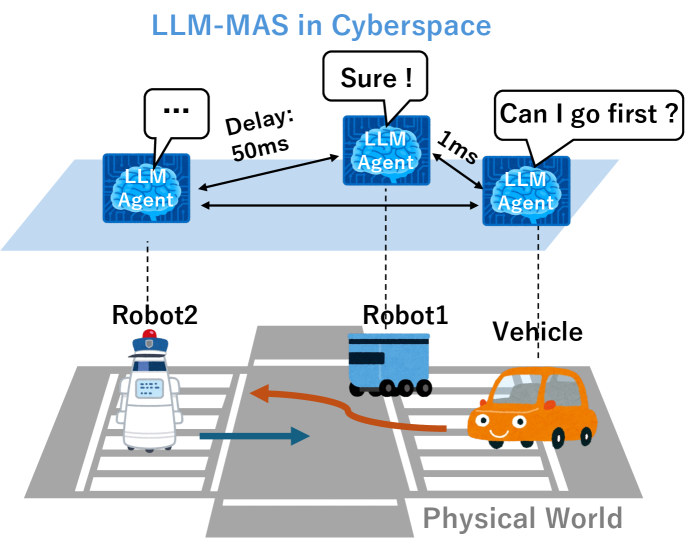

While increasingly sophisticated, multi-agent systems powered by large language models often assume idealized communication conditions, overlooking critical real-world constraints. This work, ‘Cooperation Breakdown in LLM Agents Under Communication Delays’, investigates how communication delays impact cooperative behavior in LLM-based agents using a novel continuous Prisoner’s Dilemma framework. We demonstrate that increasing delay initially encourages exploitative strategies, yet excessive delay paradoxically reduces these exploitation cycles, resulting in a U-shaped relationship between delay and mutual cooperation. These findings suggest that successful deployment of LLM agents requires attention not only to high-level coordination mechanisms but also to the often-neglected infrastructure layer. What factors will ultimately enable robust and reliable collaboration in complex, resource-constrained environments?

The Fragility of Cooperation: A Systems-Level Challenge

Despite remarkable progress in artificial intelligence, building genuinely cooperative multi-agent systems remains a persistent challenge. The difficulty is not a lack of individual capability: increasingly sophisticated algorithms let agents perform complex tasks and even learn from experience. The hurdle is getting these agents to work together consistently toward shared objectives. Intelligence alone does not guarantee collaboration; agents often struggle with trust, communication, and the coordination of actions. Progress therefore requires looking beyond individual agent performance to the architecture of interaction itself: the system design and interaction protocols that make cooperation seamless, reliable, and beneficial.

Many attempts to build cooperative artificial intelligence systems have prioritized agent intelligence – the ability to reason and act – while inadvertently neglecting the foundational support structures necessary for sustained collaboration. These systems often assume agents can seamlessly coordinate simply by being intelligent, overlooking the need for dedicated communication channels, shared knowledge repositories, and robust protocols for resolving conflicts or managing resource allocation. This infrastructural gap leads to brittle cooperation; even seemingly intelligent agents struggle when faced with complexities arising from imperfect information, unforeseen circumstances, or the need to negotiate shared goals over extended periods. Consequently, the true challenge isn’t solely about creating intelligent agents, but about designing the underlying architecture that enables them to reliably and effectively work together as a cohesive team, ensuring cooperation isn’t merely a fleeting demonstration but a durable capability.

Successfully coordinating multiple artificial intelligence agents hinges not simply on their individual capabilities, but on the underlying infrastructure that facilitates interaction and resource allocation. While sophisticated algorithms can equip agents with intelligence, a robust framework is essential for translating that intelligence into collective action. This framework must encompass reliable communication channels, shared understandings of goals and constraints, and mechanisms for resolving conflicts and distributing resources efficiently. Without such a system, even highly intelligent agents can struggle to synchronize efforts, leading to redundancy, inefficiency, and ultimately, a failure to achieve shared objectives. Consequently, research increasingly focuses on designing these coordinating architectures – the ‘social infrastructure’ of multi-agent AI systems – to unlock the full potential of cooperative intelligence.

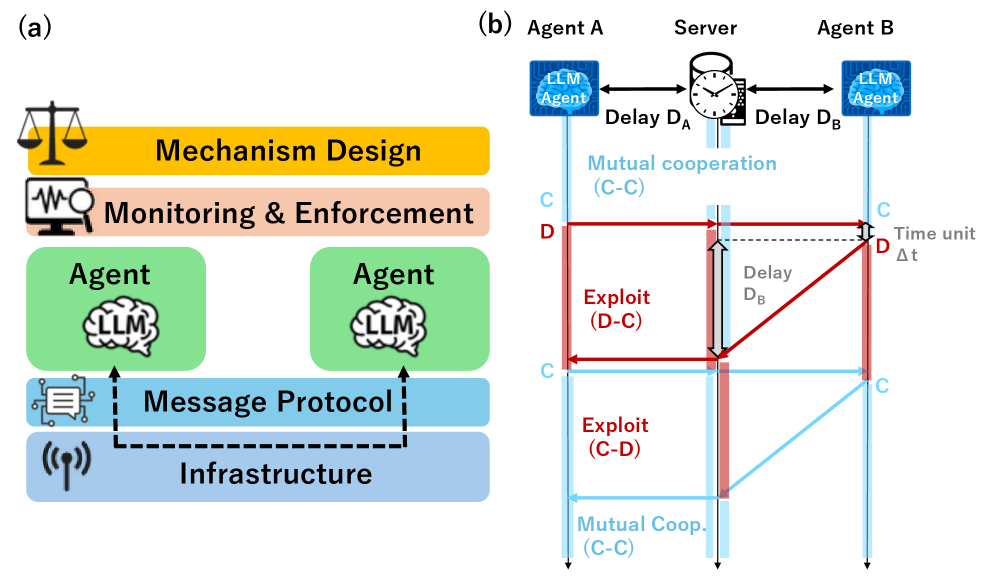

FLCOA: Layered Architecture for Cooperative Control

The Mechanism Design Layer within the FLCOA framework defines the objectives that participating agents collectively pursue and establishes the operational rules governing their interactions. This layer utilizes game-theoretic principles to incentivize cooperative behavior and mitigate potential conflicts arising from self-interested actions. Specifically, it details the reward structures, penalty mechanisms, and decision-making processes that shape agent behavior, ensuring alignment with the overarching collective goals. Successful implementation of this layer is crucial as it provides the foundational logic upon which all subsequent layers – monitoring, agent behavior refinement, and communication infrastructure – are built, enabling sustained and predictable cooperative outcomes.
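To make "reward structures and penalty mechanisms" concrete, here is a minimal sketch of how a mechanism layer could reshape incentives in a Prisoner's Dilemma. The summary does not give FLCOA's actual payoffs or penalties, so the numbers are assumptions: the canonical textbook values (temptation T=5, reward R=3, punishment P=1, sucker S=0) plus a hypothetical `penalty` charged to any agent that defects against a cooperator.

```python
from typing import Literal

Action = Literal["C", "D"]

# Assumed payoffs: canonical Prisoner's Dilemma values, not taken from the paper.
BASE_PAYOFF = {
    ("C", "C"): (3, 3),  # R, R: mutual cooperation
    ("C", "D"): (0, 5),  # S, T: first agent is exploited
    ("D", "C"): (5, 0),  # T, S: first agent exploits
    ("D", "D"): (1, 1),  # P, P: mutual defection
}


def payoff(a: Action, b: Action, penalty: int = 0) -> tuple:
    """Return (reward_a, reward_b) after a hypothetical mechanism-layer penalty
    is subtracted from any agent that defects against a cooperator."""
    ra, rb = BASE_PAYOFF[(a, b)]
    if a == "D" and b == "C":
        ra -= penalty
    if b == "D" and a == "C":
        rb -= penalty
    return ra, rb


def defection_tempting(penalty: int) -> bool:
    """True if defecting against a cooperator still beats cooperating back."""
    return payoff("D", "C", penalty)[0] > payoff("C", "C", penalty)[0]


print(defection_tempting(0))  # True: 5 > 3
print(defection_tempting(2))  # False: 3 > 3 does not hold
```

The design point is that the mechanism layer changes the game rather than the agents: once the penalty is at least T - R, exploiting a cooperator stops paying.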

The Monitoring and Enforcement Layer within the FLCOA framework utilizes a combination of observation and reactive mechanisms to maintain compliance with established protocols. This layer functions by continuously assessing agent actions against predefined rules, identifying deviations, and implementing pre-determined responses. These responses can range from warnings and penalties to more substantial interventions, depending on the severity of the infraction and the framework’s configuration. Successful monitoring and enforcement are critical for establishing a predictable environment, reducing opportunistic behavior, and cultivating trust among interacting agents by ensuring equitable accountability and consistent application of rules.
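The escalation from warnings to penalties to stronger interventions can be sketched as a small monitor that checks each action against rule predicates and steps up its response for repeat offenders. The sanction names, the one-step-per-violation ladder, and the `within_budget` rule are hypothetical illustrations; the source does not specify concrete rules or responses.

```python
from collections import defaultdict

# Hypothetical escalation ladder; the source does not name concrete sanctions.
SANCTIONS = ("warning", "penalty", "suspension")


class Monitor:
    """Sketch of a monitoring/enforcement layer: check each action against
    predefined rules and escalate the response for repeat offenders."""

    def __init__(self, rules):
        # Each rule is a predicate (agent_id, action) -> bool; True means compliant.
        self.rules = rules
        self.violations = defaultdict(int)  # agent_id -> violations so far

    def check(self, agent_id, action):
        """Return None if the action complies, otherwise the sanction to apply."""
        if all(rule(agent_id, action) for rule in self.rules):
            return None
        self.violations[agent_id] += 1
        # Escalate one step per violation, capping at the most severe sanction.
        level = min(self.violations[agent_id], len(SANCTIONS)) - 1
        return SANCTIONS[level]


def within_budget(agent_id, action):
    """Hypothetical rule: no agent may claim more than 10 resource units."""
    return action.get("claim", 0) <= 10


monitor = Monitor([within_budget])
print([monitor.check("a1", {"claim": 20}) for _ in range(4)])
# ['warning', 'penalty', 'suspension', 'suspension']
print(monitor.check("a1", {"claim": 5}))  # None
```

Keeping the violation count per agent is what provides "consistent application of rules": the same infraction always triggers the same escalation path, regardless of which agent commits it.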

The Agent Layer within the FLCOA framework models individual agent behavior by integrating parameters derived from the Big Five Personality Traits – Openness, Conscientiousness, Extraversion, Agreeableness, and Neuroticism. These traits are translated into quantifiable variables that modulate agent decision-making, influencing factors such as risk assessment, negotiation tactics, and response to incentives. By incorporating these psychological parameters, the framework can simulate heterogeneous agent populations in which behavioral diversity shapes collective outcomes and the emergence of cooperative strategies. This approach moves beyond purely rational-actor models by acknowledging the systematic influence of personality on how agents interact and on the outcomes of those interactions.
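The text says traits are "translated into quantifiable variables" but does not give the mapping. A minimal sketch, assuming a simple clamped linear mapping, might look like the following; the parameter names (`initial_cooperation`, `risk_tolerance`, `message_rate`, `retaliation_speed`) and every weight are hypothetical choices for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BigFive:
    """Big Five trait scores, each assumed to lie in [0, 1]."""
    openness: float
    conscientiousness: float
    extraversion: float
    agreeableness: float
    neuroticism: float


def clamp(x: float, lo: float = 0.0, hi: float = 1.0) -> float:
    return max(lo, min(hi, x))


def decision_params(t: BigFive) -> dict:
    """Map traits to behavioural knobs. Weights are illustrative assumptions."""
    return {
        # Agreeable, conscientious agents open with a higher chance of cooperating.
        "initial_cooperation": clamp(0.2 + 0.5 * t.agreeableness + 0.3 * t.conscientiousness),
        # Openness raises and neuroticism lowers tolerance for risky moves.
        "risk_tolerance": clamp(0.5 + 0.4 * t.openness - 0.4 * t.neuroticism),
        # Extraverted agents send messages more often.
        "message_rate": clamp(0.2 + 0.8 * t.extraversion),
        # Neurotic agents retaliate sooner after being exploited.
        "retaliation_speed": clamp(0.3 + 0.6 * t.neuroticism),
    }


calm_partner = BigFive(openness=0.5, conscientiousness=0.5, extraversion=0.5,
                       agreeableness=1.0, neuroticism=0.0)
print(decision_params(calm_partner))
```

Clamping keeps every knob a valid probability or rate, so a population of agents with randomly drawn traits always yields usable parameters.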

The Message Protocol and Infrastructure Layers of the FLCOA framework establish the technical foundations for agent interaction. These layers define standardized communication protocols ensuring messages are reliably transmitted and correctly interpreted, preventing ambiguity and errors. Resource allocation is managed through defined mechanisms within this infrastructure, prioritizing equitable distribution based on pre-defined rules or dynamically adjusted needs. Efficient communication minimizes latency and bandwidth consumption, while equitable resource allocation prevents starvation or monopolization, both of which are critical factors in sustaining long-term cooperative behavior among agents within the system. The infrastructure also includes provisions for message authentication and integrity checks to prevent malicious interference or data corruption.
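The text mentions authentication, integrity checks, and latency without naming a mechanism. One common choice, assumed here, is an HMAC over a canonical serialization using Python's standard `hmac` module, with a send timestamp so receivers can measure delay. The shared key, field names, and helper functions are hypothetical.

```python
import hashlib
import hmac
import json
import time

SHARED_KEY = b"demo-key"  # hypothetical pre-shared key; real systems need key management


def sign_message(sender: str, payload: dict, key: bytes = SHARED_KEY) -> dict:
    """Wrap a payload with a send timestamp and an HMAC-SHA256 integrity tag."""
    body = {"sender": sender, "sent_at": time.time(), "payload": payload}
    raw = json.dumps(body, sort_keys=True).encode()  # canonical byte form
    body["tag"] = hmac.new(key, raw, hashlib.sha256).hexdigest()
    return body


def verify_message(msg: dict, key: bytes = SHARED_KEY) -> bool:
    """Recompute the tag over every field except the tag; compare in constant time."""
    body = {k: v for k, v in msg.items() if k != "tag"}
    raw = json.dumps(body, sort_keys=True).encode()
    expected = hmac.new(key, raw, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, msg.get("tag", ""))


def latency(msg: dict) -> float:
    """Observed one-way delay, assuming sender and receiver clocks are synchronized."""
    return time.time() - msg["sent_at"]


msg = sign_message("agent_a", {"action": "C"})
print(verify_message(msg))                                      # True
print(verify_message(dict(msg, payload={"action": "D"})))       # False: tampered
```

Serializing with `sort_keys=True` matters: both sides must hash exactly the same bytes, and dictionary ordering would otherwise be an easy source of spurious verification failures.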

Empirical Evidence: Communication Delays and Exploitation

The experimental framework utilized a Continuous Prisoner’s Dilemma implemented with a Server-Client architecture to introduce and control communication delay between agents. In this setup, agents interacted repeatedly, with decisions and resulting payoffs communicated between client agents and a central server. The server introduced configurable delays to these communications, simulating real-world network latency. This architecture allowed for precise manipulation of communication delay as a variable, enabling the observation of its impact on agent strategies and interaction outcomes within the repeated game scenario. The continuous nature of the game, as opposed to a single-round Prisoner’s Dilemma, allowed for the assessment of long-term strategic responses to delayed feedback.
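The delay-injection part of such a server-client setup can be sketched with `asyncio`: the server accepts a move immediately but forwards it to the other client only after a configurable delay. This is an illustrative stand-in, not the study's code; the `DelayedRelay` class and the two-client naming are assumptions, and the LLM calls and the continuous play loop are omitted.

```python
import asyncio


class DelayedRelay:
    """Sketch of a central server that forwards each client's move to the other
    client only after a configurable delay, standing in for network latency."""

    def __init__(self, delay: float):
        # Instantiate inside a running event loop so the queues attach to it.
        self.delay = delay
        self.inboxes = {"A": asyncio.Queue(), "B": asyncio.Queue()}
        self._pending = set()  # keep task references so they are not garbage-collected

    async def _deliver(self, recipient: str, move: str) -> None:
        await asyncio.sleep(self.delay)  # simulated latency
        await self.inboxes[recipient].put(move)

    def send(self, sender: str, move: str) -> None:
        """Called by a client; returns immediately while delivery is delayed."""
        recipient = "B" if sender == "A" else "A"
        task = asyncio.create_task(self._deliver(recipient, move))
        self._pending.add(task)
        task.add_done_callback(self._pending.discard)


async def demo(delay: float):
    relay = DelayedRelay(delay)
    loop = asyncio.get_running_loop()
    start = loop.time()
    relay.send("A", "C")                   # client A cooperates
    move = await relay.inboxes["B"].get()  # client B only sees it after `delay`
    return move, loop.time() - start


move, elapsed = asyncio.run(demo(0.05))
print(move, round(elapsed, 2))
```

Because `send` returns before delivery, each client keeps acting on whatever it last received, which is exactly the stale-information condition the experiment manipulates.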

The LLM agents utilized in this study were programmed with two distinct behavioral strategies: Tit-for-Tat and Exploitation. The Tit-for-Tat strategy involved agents initially cooperating and subsequently mirroring the opponent’s previous action in each round, promoting reciprocal cooperation. Conversely, the Exploitation strategy prioritized maximizing the agent’s individual reward, even at the expense of the opponent, by consistently defecting. Implementing both strategies allowed for a comparative analysis of agent responses to delayed feedback within the Continuous Prisoner’s Dilemma, specifically examining how the presence of communication delays impacted the propensity for cooperative versus exploitative behaviors.
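As described, the two strategies reduce to simple decision rules. In the study they are carried out by LLM agents; the functions below are deterministic stand-ins written for illustration, together with a hypothetical lockstep `play` loop with no delay.

```python
from typing import Callable, List, Optional, Tuple

Strategy = Callable[[Optional[str]], str]


def tit_for_tat(last_seen_opponent_move: Optional[str]) -> str:
    """Cooperate first, then copy the opponent's last *observed* move.
    Under communication delay, the observed move may be stale."""
    return "C" if last_seen_opponent_move is None else last_seen_opponent_move


def exploiter(last_seen_opponent_move: Optional[str]) -> str:
    """Maximize own reward regardless of the opponent: always defect."""
    return "D"


def play(strategy_a: Strategy, strategy_b: Strategy, rounds: int) -> List[Tuple[str, str]]:
    """Lockstep repeated game with no delay; returns the (a, b) move history."""
    history = []
    last_a = last_b = None
    for _ in range(rounds):
        move_a, move_b = strategy_a(last_b), strategy_b(last_a)
        history.append((move_a, move_b))
        last_a, last_b = move_a, move_b
    return history


print(play(tit_for_tat, exploiter, 3))  # [('C', 'D'), ('D', 'D'), ('D', 'D')]
```

With no delay, Tit-for-Tat is exploited exactly once before it retaliates; any delay in delivering the opponent's move widens that window.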

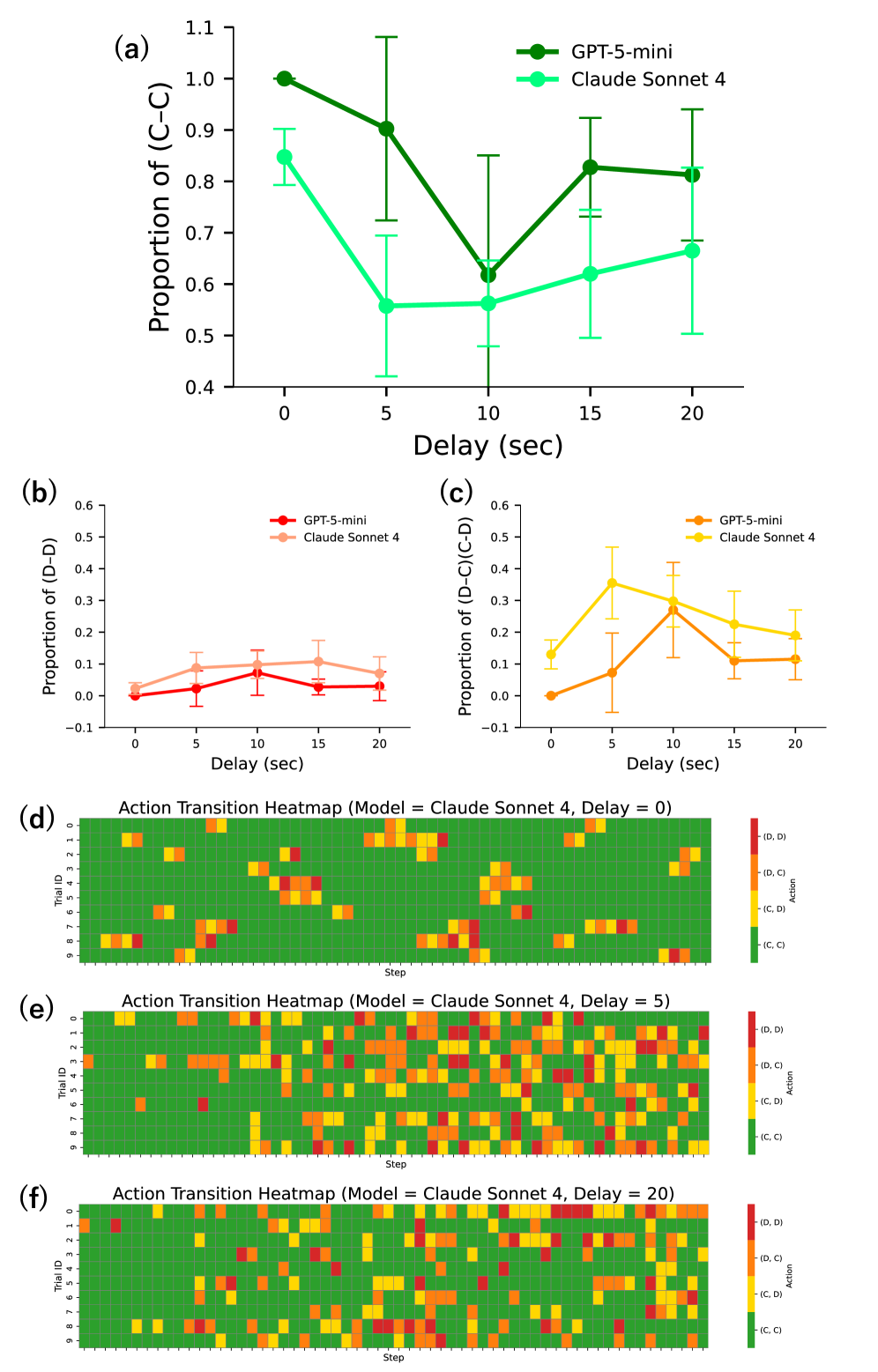

Analysis of agent interaction within the Continuous Prisoner’s Dilemma testbed revealed a non-monotonic relationship between communication delay and the rate of mutual cooperation. The Mutual Cooperation Rate fell as delay increased from zero to moderate levels (5-10 seconds), where it reached its lowest values, and then recovered at high delays (15-20 seconds), producing an overall U-shaped curve. Moderate delays therefore disrupt cooperation more than either negligible or substantial delays, potentially because they increase uncertainty or break down reciprocal feedback mechanisms.
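A minimal way to compute such a curve is to log each observed pair of moves per delay setting and take the share of pairs in which both agents cooperated. This definition is an assumption (the paper may sample or normalize differently), and the tiny log below is made-up input for illustration, not experimental data.

```python
def mutual_cooperation_rate(history):
    """Share of recorded (move_a, move_b) pairs in which both agents cooperated.
    Assumed definition; the paper may sample or normalize differently."""
    if not history:
        return 0.0
    both_cooperated = sum(1 for a, b in history if a == "C" and b == "C")
    return both_cooperated / len(history)


def cooperation_by_delay(runs):
    """Map each delay setting (seconds) to the mutual cooperation rate of its run,
    ordered by delay so the shape of the curve can be read off directly."""
    return {delay: mutual_cooperation_rate(h) for delay, h in sorted(runs.items())}


log = [("C", "C"), ("C", "D"), ("D", "D"), ("C", "C")]
print(mutual_cooperation_rate(log))  # 0.5
```

A U-shape shows up as a minimum in the interior of `cooperation_by_delay(...)`, with higher rates at both the smallest and largest delays.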

Analysis of agent behavior revealed an inverted U-shaped relationship between communication delay and the Exploitation Rate. Specifically, the rate of exploitative actions increased as communication delay rose from minimal to moderate levels (5-10 seconds), indicating agents were more likely to defect when feedback on prior actions was delayed. However, at higher communication delays (15-20 seconds), the Exploitation Rate decreased, demonstrating an inverse correlation with the Mutual Cooperation Rate and suggesting that extreme delays diminished the effectiveness of exploitative strategies. This suggests a sweet spot for exploitation exists at moderate delay levels, where agents can capitalize on delayed feedback without facing complete unpredictability.
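The inverse relationship between the two rates follows partly from simple accounting. Under an assumed definition in which every observed pair is classified as mutual cooperation, exploitation (exactly one agent defects on a cooperator), or mutual defection, the three shares sum to 1, so a rise in exploitation must come out of one of the other two. The classification below is an illustrative sketch, not the paper's exact metric.

```python
from collections import Counter


def outcome_shares(history):
    """Classify each (move_a, move_b) pair and return the share of each outcome.
    Assumed definition: 'exploitation' means exactly one agent defected."""
    counts = Counter()
    for a, b in history:
        if a == b == "C":
            counts["mutual_cooperation"] += 1
        elif a == b == "D":
            counts["mutual_defection"] += 1
        else:
            counts["exploitation"] += 1  # one agent defected on a cooperator
    n = len(history) or 1  # avoid dividing by zero on an empty log
    return {k: counts[k] / n
            for k in ("mutual_cooperation", "exploitation", "mutual_defection")}


print(outcome_shares([("C", "C"), ("C", "D"), ("D", "C"), ("D", "D")]))
# {'mutual_cooperation': 0.25, 'exploitation': 0.5, 'mutual_defection': 0.25}
```

Because the shares are complementary, a peak in exploitation at moderate delay and a trough in mutual cooperation at the same delay are two views of one shift, unless mutual defection absorbs the change instead.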

Analysis of agent interactions under communication delay showed that delay creates opportunities for exploitation, though these opportunities do not grow steadily with delay duration. Agents following the exploitation strategy could capitalize on delayed feedback to defect without immediate reciprocal punishment, increasing their individual reward. This advantage diminished at higher delays (15-20 seconds), where less frequent reciprocal interaction made defection less effective, so exploitation peaked at moderate delays (5-10 seconds) and then declined.
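The "defect without immediate reciprocal punishment" mechanism can be illustrated with a toy round-based model: Tit-for-Tat only learns the exploiter's move `lag` rounds late, so an always-defect agent collects the temptation payoff until the first defection arrives. The round-based lag and the payoffs (T=5, P=1) are assumptions. The model only captures why moderate delay opens a window for exploitation; it does not reproduce the decline at 15-20 seconds, which the text attributes to fewer reciprocal interactions.

```python
def exploiter_payoff_vs_lagged_tft(rounds: int, lag: int, T: int = 5, P: int = 1) -> int:
    """Total payoff of an always-defect agent against Tit-for-Tat, where TFT in
    round t only sees the exploiter's move from round t - 1 - lag (lag=0: no delay)."""
    exploiter_moves = []
    total = 0
    for t in range(rounds):
        seen = t - 1 - lag
        # Until any of the exploiter's moves has arrived, TFT keeps cooperating.
        tft_move = "C" if seen < 0 else exploiter_moves[seen]
        # Defecting on a cooperator earns T; mutual defection earns only P.
        total += T if tft_move == "C" else P
        exploiter_moves.append("D")
    return total


for lag in (0, 1, 2, 3):
    print(lag, exploiter_payoff_vs_lagged_tft(rounds=10, lag=lag))
# 0 14
# 1 18
# 2 22
# 3 26
```

Each extra round of lag converts one punished round (P) into one unpunished one (T), so the exploiter gains T - P per round of delay until the lag exceeds the game length.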

Implications for Robust Cooperative Systems

Cooperative systems, whether composed of robots, software agents, or even humans, frequently operate under the constraint of imperfect communication, and recent investigations highlight the critical need to explicitly address these delays in system design. The inherent latency in transmitting and processing information can destabilize cooperative endeavors, creating opportunities for exploitation or simply hindering effective coordination. Ignoring these delays assumes an idealized scenario rarely encountered in real-world applications, where network congestion, computational limitations, or physical distance introduce unavoidable lags. Consequently, systems built without accounting for communication delays are prone to failure or suboptimal performance, demonstrating that resilience in cooperative agents isn’t simply about achieving agreement, but about maintaining stability despite the inevitable imperfections of information exchange.

Exploitation represents a significant challenge in cooperative multi-agent systems, but proactive design can substantially reduce this risk. Systems engineered to prioritize swift feedback loops allow agents to quickly detect and correct deviations from expected behavior, hindering attempts at manipulation. Crucially, robust error handling mechanisms are equally vital; these systems shouldn’t simply fail when encountering unexpected inputs or actions, but instead possess the capacity to gracefully recover and continue operation. This resilience is achieved through redundant checks, alternative strategies, and the ability to isolate and contain errors, preventing them from cascading and destabilizing the entire cooperative effort. Consequently, strategies emphasizing both rapid responsiveness and fault tolerance create a more secure and dependable environment for continued collaboration, even when faced with potentially adversarial agents.
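Graceful recovery can be as simple as bounding every wait. The sketch below uses the standard `asyncio.wait_for` to cap how long an agent waits for its opponent's move and falls back to the last known move on timeout instead of stalling or crashing. The helper name and the fallback policy (reuse the last known move) are assumptions; a system could equally fall back to cooperating or defecting.

```python
import asyncio


async def next_opponent_move(inbox: asyncio.Queue, timeout: float, last_known: str):
    """Wait up to `timeout` seconds for the opponent's move. On timeout, fall back
    to the last known move and report that a fallback was used."""
    try:
        move = await asyncio.wait_for(inbox.get(), timeout)
        return move, False
    except asyncio.TimeoutError:
        return last_known, True


async def demo():
    inbox = asyncio.Queue()
    # Nothing has arrived yet, so the call times out and uses the fallback.
    results = [await next_opponent_move(inbox, timeout=0.01, last_known="C")]
    await inbox.put("D")
    # A move is waiting, so it is returned immediately.
    results.append(await next_opponent_move(inbox, timeout=0.01, last_known="C"))
    return results


print(asyncio.run(demo()))  # [('C', True), ('D', False)]
```

Returning the fallback flag alongside the move lets higher layers (for example, a monitoring layer) distinguish a genuine observation from a guess made under delay.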

Investigations into resilient cooperative systems increasingly suggest that static communication protocols are insufficient for real-world applications. Future work should therefore prioritize the development of adaptive strategies, allowing agent interactions to dynamically adjust based on prevailing communication conditions – such as bandwidth limitations, network congestion, or intermittent connectivity. These strategies could involve altering communication frequency, message complexity, or even switching between different communication modalities, all in response to real-time feedback on network performance. Such adaptive systems promise to not only maintain functionality in challenging environments, but also to optimize performance by leveraging available resources efficiently, ultimately leading to more robust and reliable cooperative behavior.
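One way to "dynamically adjust based on prevailing communication conditions" is to borrow the smoothing TCP uses for round-trip time (RFC 6298 uses an exponentially weighted moving average with alpha = 1/8) and stretch the interval between decisions when latency grows. The class, the `safety` factor of 2 (roughly one round trip), and the choice to adapt decision frequency rather than message content are assumptions made for illustration.

```python
from typing import Optional


class AdaptiveInterval:
    """Sketch: smooth observed message latency with an EWMA and keep the interval
    between decisions long enough for feedback to arrive before acting again."""

    def __init__(self, base_interval: float, alpha: float = 0.125, safety: float = 2.0):
        self.base_interval = base_interval  # interval used on a fast network
        self.alpha = alpha                  # weight of each new latency sample
        self.safety = safety                # multiple of smoothed latency to wait
        self.smoothed: Optional[float] = None

    def observe(self, latency: float) -> None:
        """Fold one latency sample (seconds) into the running estimate."""
        if self.smoothed is None:
            self.smoothed = latency
        else:
            self.smoothed = (1 - self.alpha) * self.smoothed + self.alpha * latency

    def interval(self) -> float:
        """Seconds to wait before the next decision."""
        if self.smoothed is None:
            return self.base_interval
        return max(self.base_interval, self.safety * self.smoothed)


pacer = AdaptiveInterval(base_interval=1.0)
print(pacer.interval())  # 1.0 before any sample
pacer.observe(2.0)
print(pacer.interval())  # 4.0
pacer.observe(10.0)      # smoothed = 0.875 * 2.0 + 0.125 * 10.0 = 3.0
print(pacer.interval())  # 6.0
```

The smoothing keeps one slow message from causing an abrupt slowdown, while a sustained rise in latency steadily lengthens the decision interval.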

The Fast, Local, Cooperative, Online Auction (FLCOA) framework offers a robust architecture for designing cooperative systems resilient to communication delays. This infrastructure prioritizes localized decision-making, reducing reliance on constant, immediate feedback from distant agents and minimizing the impact of lagged information. By facilitating rapid, independent responses to local conditions, FLCOA inherently buffers against exploitation stemming from delayed signals. Moreover, the framework’s online auction mechanism allows agents to dynamically negotiate and adapt to fluctuating communication bandwidths, effectively establishing a self-regulating system capable of maintaining cooperation even under adverse conditions. This adaptability positions FLCOA as a promising foundation for building cooperative agents that can function reliably in real-world environments characterized by unpredictable network latencies and intermittent connectivity.

The study reveals how seemingly benign infrastructural elements, such as communication latency, can precipitate systemic failure in complex multi-agent systems. This echoes a fundamental principle of robust design: fragility often resides not in the components themselves, but in their interactions. As Paul Erdős once stated, “A mathematician knows a lot of things, but knows nothing deeply.” This sentiment applies equally to system architects; a comprehensive understanding of inter-agent dependencies, particularly the often-overlooked infrastructure layer, is crucial. The non-monotonic response of cooperation rates to communication delay underscores that systems break along invisible boundaries: if one can’t anticipate these weaknesses, pain is coming. The FLCOA framework, while offering a valuable analytical tool, is ultimately limited if it fails to account for the real-world constraints imposed by the underlying communication infrastructure.

Where Do We Go From Here?

The observed sensitivity to communication delay suggests a fundamental truth about these systems: cleverness is not resilience. If a multi-agent system built upon large language models falters with a mere hiccup in its infrastructure, one suspects the architecture prioritizes demonstration over robustness. The FLCOA framework provides a useful lens, but frameworks, however elegant, are merely maps, not territories. The territory, in this case, is the messy reality of distributed computation and imperfect communication.

Future work must address the implicit assumptions baked into these systems. The Prisoner’s Dilemma, while a convenient benchmark, simplifies a world where agents rarely operate with complete information or static payoffs. Exploring more complex game theoretic scenarios, and importantly, scenarios where agents learn the communication constraints, feels crucial. It’s tempting to layer more sophisticated negotiation strategies atop the LLMs, but that feels akin to applying bandages to a structural flaw.

Ultimately, the infrastructure layer – the unglamorous plumbing of packet delivery and latency – demands greater attention. The art of system design, after all, isn’t about maximizing features, but about choosing what to sacrifice. And if this work demonstrates anything, it’s that consistently achievable, albeit modest, cooperation is often preferable to spectacular, but fragile, displays of intelligence.

Original article: https://arxiv.org/pdf/2602.11754.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-16 00:34