Author: Denis Avetisyan

A new framework leverages intelligent agents to automate robot training, overcoming the bottlenecks of traditional reinforcement learning methods.

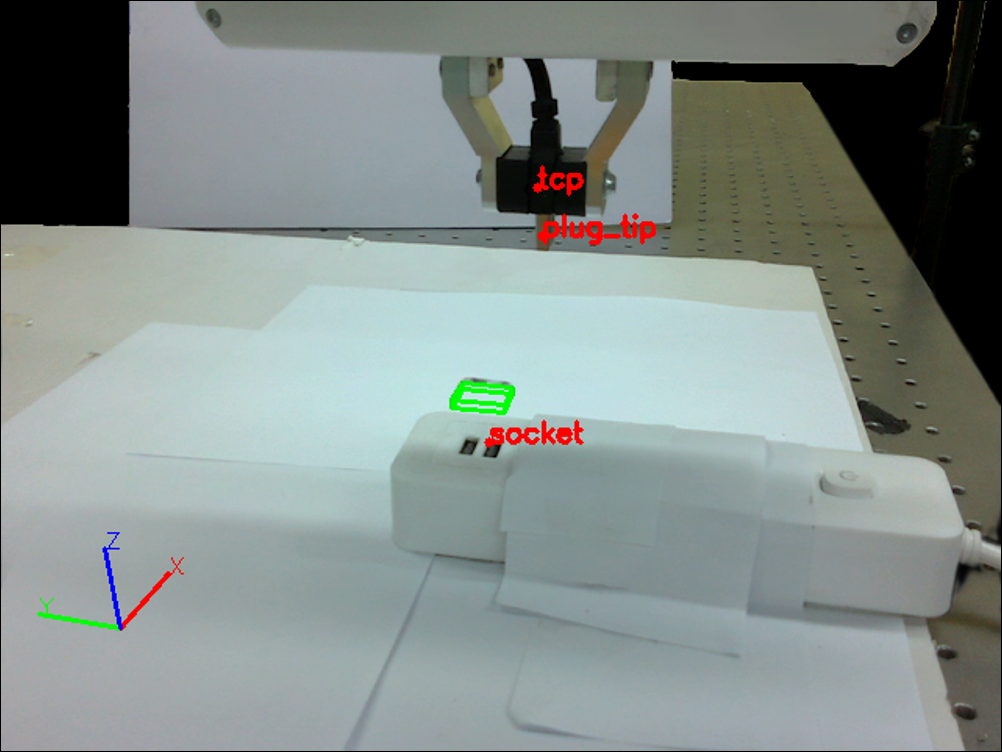

![Hierarchical imitation learning faces limitations as task complexity increases due to the demands of one-to-one supervision and operator fatigue, but this is overcome by an automated approach-AGPS-which utilizes [latex]FLOAT[/latex] as an asynchronous trigger to monitor policy performance and, upon detecting deviations, recalls memory and leverages action primitives, perception, and geometric reasoning to provide action guidance for trajectory correction and exploration pruning for spatial constraint.](https://arxiv.org/html/2602.11978v1/x1.png)

This review details Agent-guided Policy Search (AGPS), which combines multimodal agents, semantic world models, and failure detection for accelerated robot manipulation.

Despite the promise of autonomous skill acquisition, scaling reinforcement learning for real-world robotics remains hampered by significant sample inefficiency. This limitation motivates the work ‘Accelerating Robotic Reinforcement Learning with Agent Guidance’, which introduces a novel framework, Agent-guided Policy Search (AGPS), to automate the training pipeline traditionally reliant on human supervision. AGPS leverages multimodal agents as semantic world models, injecting prior knowledge and providing precise corrective guidance via executable tools to structure physical exploration and prune unproductive trajectories. By replacing human operators with an automated agent, can we unlock truly scalable and labor-free robot learning systems capable of mastering complex manipulation tasks?

The Inherent Limitations of Human-Guided Robotics

Historically, teaching robots new skills has heavily depended on Human-in-the-Loop methods, where a human operator provides continuous feedback and corrections during the learning process. While effective for simple tasks, this approach quickly becomes impractical as complexity increases; the sheer volume of required human supervision creates a significant bottleneck. Each new scenario or variation demands extensive, time-consuming interaction, limiting the robot’s ability to generalize and adapt. This scalability issue isn’t merely a matter of time and cost; it fundamentally restricts the deployment of robots in dynamic, unpredictable environments where constant human intervention is unrealistic, hindering progress toward truly autonomous robotic systems.

Deep Reinforcement Learning presents a compelling route to creating truly autonomous robots, yet its practical implementation is often hindered by substantial data demands and a lack of robustness. These algorithms typically require millions of interactions with an environment to learn even relatively simple tasks, a process impractical for many real-world applications and prone to failure when faced with unexpected situations. This ‘brittleness’ stems from the algorithm’s reliance on learning precise mappings between states and actions; slight deviations from the training environment – a change in lighting, an unforeseen obstacle – can lead to catastrophic performance drops. Consequently, significant research focuses on techniques like simulation-to-reality transfer and data augmentation to bridge this gap and create more adaptable, reliable robotic systems, but the challenge of efficient and robust learning remains a central hurdle in the field.

While formulating robotic tasks through the framework of a Markov Decision Process

Agent-Guided Policy Search: A Framework for Autonomous Learning

Agent-Guided Policy Search (AGPS) builds upon Deep Reinforcement Learning (DRL) by automating aspects of the training pipeline traditionally requiring manual intervention. This automation is achieved through the integration of multimodal agents that introduce prior knowledge directly into the learning process. Unlike standard DRL which relies solely on environmental feedback, AGPS agents provide supplementary guidance, effectively shaping the policy search and accelerating convergence. This injected knowledge can encompass domain expertise, pre-trained models, or learned heuristics, allowing the system to explore more promising areas of the state space and reduce the need for exhaustive random exploration. The result is a more efficient and robust learning process, particularly in complex environments where reward signals are sparse or delayed.

FLOAT is an online failure detection module integrated into the AGPS framework, designed to monitor policy performance during execution and proactively address distribution drift. It employs a Visual Encoder to process observed states and generate a feature representation, which is then used to estimate the divergence between the current execution distribution and the training distribution. This divergence is quantified, allowing FLOAT to trigger corrective actions – such as policy resets or exploratory adjustments – only when the detected deviation exceeds a predefined threshold. By continuously monitoring and reacting to distribution shift, FLOAT aims to improve the stability and sample efficiency of the learning process by preventing the agent from diverging into unproductive state spaces.

FLOAT utilizes Optimal Transport (OT) to quantify the deviation between the current policy’s state distribution and a reference distribution established during successful training phases. OT calculates a cost matrix representing the minimum ‘cost’ to transform one distribution into another, providing a numerical measure of distributional divergence. This allows FLOAT to move beyond simple threshold-based failure detection; intervention, in the form of policy resets or adjustments, is triggered only when the OT distance exceeds a defined threshold, indicating a significant and potentially detrimental shift in the agent’s behavior. By intervening selectively based on distributional drift, rather than at fixed intervals, FLOAT minimizes unnecessary resets, thereby improving sample efficiency and accelerating the learning process.

![During policy training, AGPS learned a robust recovery strategy reflected in a broad high-value region [latex]\left(states deviating from the optimal trajectory\right)[/latex], while HIL-SERL overfit to demonstrated trajectories, resulting in a narrow high-value corridor and limited adaptability.](https://arxiv.org/html/2602.11978v1/x3.png)

Spatial Reasoning and Action Primitives: Towards Robust Robotic Execution

Action Guidance, a core component of AGPS, addresses potential failures during task execution by dynamically generating corrective waypoints. These waypoints are not calculated through replanning from the initial state, but are instead derived from a pre-defined Action Primitives Library. This library contains a set of parameterized actions – such as obstacle circumvention, path smoothing, or re-orientation – allowing the system to react rapidly to unforeseen circumstances. By selecting and adapting primitives based on the nature of the failure, AGPS efficiently guides the robot back towards a feasible trajectory and successful task completion, minimizing recovery time and maximizing robustness in dynamic environments.

Exploration Pruning operates by defining three-dimensional spatial constraints within the robot’s operational environment. These constraints effectively mask or disregard task-irrelevant states, reducing the size of the state space the robot needs to explore during the learning process. By focusing exploration on spatially feasible and goal-relevant areas, the algorithm accelerates learning in complex environments, improving both the speed of convergence and the efficiency of the robot’s planning process. This pruning technique is particularly effective in cluttered or expansive environments where exhaustive search would be computationally prohibitive.

The AGPS architecture incorporates a Memory Module to store associations between achieved subgoals and the corresponding 3D spatial constraints that facilitated their completion. This cached data functions as a long-term memory, enabling the system to bypass re-exploration of previously successful strategies in similar environments. By retrieving relevant spatial constraints based on current subgoal requirements, the robot accelerates learning and improves task efficiency, as it avoids redundant computations and leverages past experiences for generalization to novel situations. The module’s capacity to retain and recall these mappings directly contributes to the robot’s ability to navigate complex spaces and adapt to changing conditions without requiring repeated trial-and-error learning.

Demonstrating AGPS: Mastery of Complex Physical Manipulation

AGPS demonstrates a capacity for complex physical manipulation by successfully completing tasks demanding precise interaction with the real world, such as USB insertion and Chinese knot tying. These aren’t simple pick-and-place operations; they require a nuanced understanding of geometry, force, and sequential actions. USB insertion, for example, necessitates aligning the connector and applying the correct pressure, while knot tying involves looping, tightening, and maintaining structural integrity – challenges that push the boundaries of robotic dexterity. The system’s ability to master these tasks signals a significant advancement toward more versatile and adaptable robots capable of operating effectively in human environments, going beyond pre-programmed routines to address intricate, real-world problems.

A critical component of successful robotic manipulation lies in accurately perceiving the environment, and the AGPS system addresses this through a sophisticated Perception Module. This module utilizes a Vision-Language Model (VLM) to identify keypoints within images that are directly relevant to the task at hand – be it the USB port or the strands of a Chinese knot. By pinpointing these crucial visual features, the system can perform precise geometric calculations, determining the necessary angles, distances, and trajectories for the robot to interact with objects effectively. This capability moves beyond simple object recognition, enabling the robot to ‘understand’ the spatial relationships needed for complex manipulations and form the foundation for accurate and reliable task completion.

Within a mere eight minutes – or 600 training steps – the AGPS system consistently and successfully completed the USB insertion task, achieving a 100% success rate. This performance notably surpasses that of the HIL-SERL system, which failed to achieve comparable results even with extended training. The rapid convergence and flawless execution demonstrate AGPS’s capacity for efficiently mastering complex physical manipulations requiring precise hand-eye coordination and spatial reasoning. This benchmark highlights a significant advancement in robotic learning, suggesting AGPS can quickly adapt to and reliably perform tasks that previously demanded substantial manual programming or lengthy trial-and-error processes.

The complexity of physical manipulation was starkly demonstrated through the Chinese Knot tying task, where the AGPS system achieved a 90% success rate within 42 minutes – equivalent to completing 3000 training steps. This accomplishment stands in direct contrast to the HIL-SERL system, which failed to achieve any successful knot ties until it also reached the 3000-step mark. This performance gap highlights AGPS’s ability to rapidly learn and execute a task demanding fine motor control, spatial reasoning, and sequential action planning – skills that proved significantly challenging for the comparative system to acquire within the same timeframe. The results suggest AGPS’s architecture facilitates a more efficient learning process for intricate, multi-step manipulation challenges.

The integration of a dedicated memory module within the AGPS framework demonstrably accelerates the learning process, particularly evident in complex manipulation tasks like USB insertion. Experimental results indicate a two-fold increase in convergence speed when employing this memory module, enabling the agent to achieve successful task completion in significantly fewer steps. This enhancement stems from the module’s capacity to store and recall previously successful action sequences and perceptual states, effectively mitigating the need for repeated exploration and allowing the policy to rapidly refine its approach. By leveraging past experiences, AGPS with memory avoids redundant calculations and streamlines the learning trajectory, showcasing the benefits of incorporating episodic memory into robotic reinforcement learning systems.

As the agent’s policy refined through learning, external intervention became entirely unnecessary in both the USB insertion and Chinese knot tying tasks. This achievement signifies a crucial step towards truly autonomous robotic manipulation; the system progressed from requiring constant guidance to operating independently and reliably. The convergence to a 0% intervention ratio demonstrates not merely task completion, but a robust and self-sufficient policy capable of handling the complexities of physical interaction without human assistance – a hallmark of intelligent robotic systems designed for real-world applications and deployment.

![The memory module [latex]2\times[/latex] accelerates convergence and ultimately eliminates the need for intervention across both tasks, as demonstrated by decreasing trigger counts over rollouts.](https://arxiv.org/html/2602.11978v1/x4.png)

The presented work on Agent-guided Policy Search (AGPS) embodies a commitment to foundational correctness, mirroring Torvalds’s own emphasis on provable solutions. He once stated, “Most good programmers do programming as an exercise in frustration.” This resonates with the challenges overcome by AGPS; automating robot training through multimodal agents and failure detection isn’t about achieving expediency, but about establishing a robust, reliable system. The framework actively rejects the compromises inherent in purely heuristic approaches, striving instead for a demonstrably correct policy – a solution that isn’t merely ‘working on tests’, but is fundamentally sound, even in complex robot manipulation scenarios.

The Path Forward

The presented Agent-guided Policy Search (AGPS) represents a logical, if incremental, step towards automating the notoriously brittle process of robotic reinforcement learning. The framework’s reliance on multimodal agents and failure detection addresses a practical need, yet it sidesteps the fundamental question of what constitutes a truly robust policy. Success, as currently measured, remains contingent on the training environment and the predefined failure criteria. A policy that navigates a controlled laboratory setting is not, axiomatically, a policy prepared for the inevitable chaos of the real world.

Future work must move beyond merely detecting failure and instead focus on provable guarantees of stability and performance. Semantic world models, while useful for guidance, are approximations – elegant lies, if one will. The pursuit of perfect models is a fool’s errand; the challenge lies in designing algorithms that are demonstrably resilient to model inaccuracies. The field would benefit from a rigorous exploration of formal verification techniques applied to reinforcement learning policies, moving beyond empirical validation toward mathematical proof.

Ultimately, the true measure of progress will not be the speed with which robots can be trained, but the degree to which their actions are governed by principles of logical completeness – a demonstration that the policy is not merely ‘working,’ but demonstrably correct, even in the face of unforeseen circumstances. Until then, the automation of robotic learning remains a sophisticated, but ultimately incomplete, solution.

Original article: https://arxiv.org/pdf/2602.11978.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- MLBB x KOF Encore 2026: List of bingo patterns

- Married At First Sight’s worst-kept secret revealed! Brook Crompton exposed as bride at centre of explosive ex-lover scandal and pregnancy bombshell

- Top 10 Super Bowl Commercials of 2026: Ranked and Reviewed

- Gold Rate Forecast

- ‘Reacher’s Pile of Source Material Presents a Strange Problem

- Meme Coins Drama: February Week 2 You Won’t Believe

- eFootball 2026 Starter Set Gabriel Batistuta pack review

- Olivia Dean says ‘I’m a granddaughter of an immigrant’ and insists ‘those people deserve to be celebrated’ in impassioned speech as she wins Best New Artist at 2026 Grammys

- Why Andy Samberg Thought His 2026 Super Bowl Debut Was Perfect After “Avoiding It For A While”

- February 12 Update Patch Notes

2026-02-14 00:04