Author: Denis Avetisyan

New research leverages advanced AI to help robots understand and navigate complex environments with people, going beyond simple obstacle avoidance.

This work introduces a system using Vision-Language Models and Gaussian Process Regression to generate abstraction maps for improved crowd avoidance and path planning in robotics.

While autonomous navigation has advanced significantly, reliably ensuring safety and efficiency in crowded, dynamic environments remains a key challenge. This paper, ‘Safe mobility support system using crowd mapping and avoidance route planning using VLM’, introduces a novel framework leveraging the power of Vision-Language Models (VLMs) and Gaussian Process Regression (GPR) to generate probabilistic ‘abstraction maps’ representing crowd densities. By enabling robots to perceive and reason about abstract environmental concepts beyond simple geometric obstacles, our approach facilitates more informed path planning and successful avoidance of both static and dynamic impediments. Could this paradigm shift in environmental perception unlock truly adaptive and robust autonomous navigation in complex real-world scenarios?

The Imperative of Dynamic Mapping: Beyond Static Spatial Representation

For decades, robotic navigation has been predicated on the use of meticulously crafted, static maps – digital blueprints detailing a predictable world. However, this approach falters dramatically when confronted with the realities of everyday human environments. These spaces are rarely static; they are populated by individuals moving with varying intentions, creating a constantly shifting landscape of obstacles and potential pathways. A robot reliant on a fixed map struggles to adapt to these dynamic changes, often becoming trapped or inefficient as people unexpectedly alter the navigable space. Consequently, advancements in robotics require a fundamental shift away from pre-programmed routes and toward systems capable of perceiving, predicting, and reacting to the unpredictable behavior inherent in crowded, real-world settings.

Effective navigation within bustling environments isn’t simply a matter of charting physical space; it necessitates interpreting the complex and often erratic movements of people. Unlike static obstacles, crowds exhibit collective behaviors – surges, hesitations, and spontaneous re-directions – that defy predictable modeling. A robot operating in such a space must therefore move beyond geometric understanding and incorporate probabilistic reasoning about human actions, anticipating potential trajectories and adjusting its path accordingly. This demands the development of algorithms capable of not just detecting people, but also predicting their likely future positions, effectively treating the crowd not as a series of individual obstacles, but as a dynamic, flowing entity with its own internal logic.

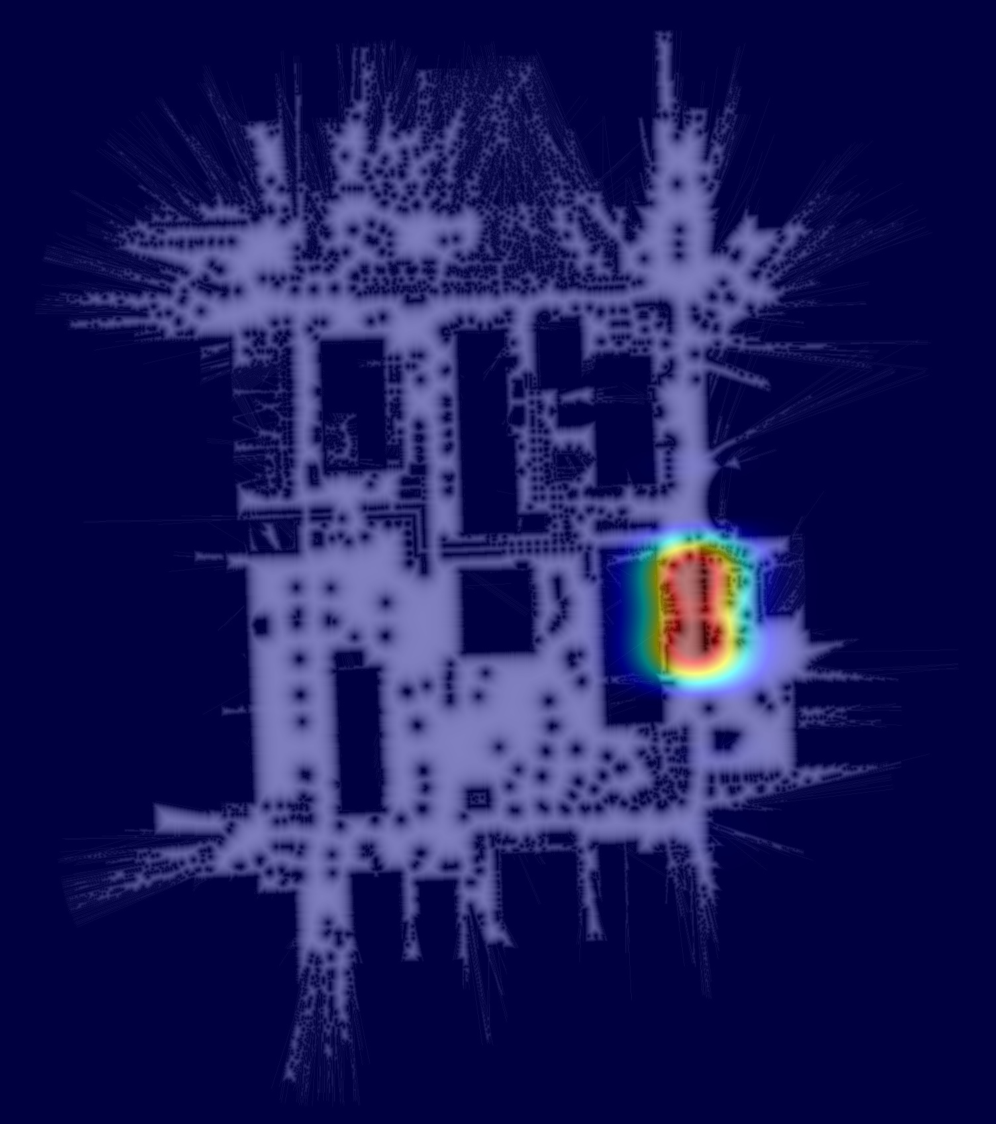

A truly resilient navigation system for crowded spaces necessitates more than just a depiction of walls and obstacles; it requires a computational understanding of the ‘crowd’ as a distinct entity. Researchers are exploring methods to represent this collective behavior not as individual trajectories, but as a dynamic field – a probabilistic map outlining areas of high and low pedestrian density, anticipated movement flows, and potential social interactions. This involves fusing sensor data – from cameras and LiDAR, for instance – with predictive models of human behavior, allowing the system to anticipate where people will be, not just where they are currently located. Effectively, the environment isn’t just ‘mapped’ geometrically, but also ‘socially’, creating a layered representation where physical space and behavioral probabilities intertwine, enabling robots to navigate not around people, but with them.

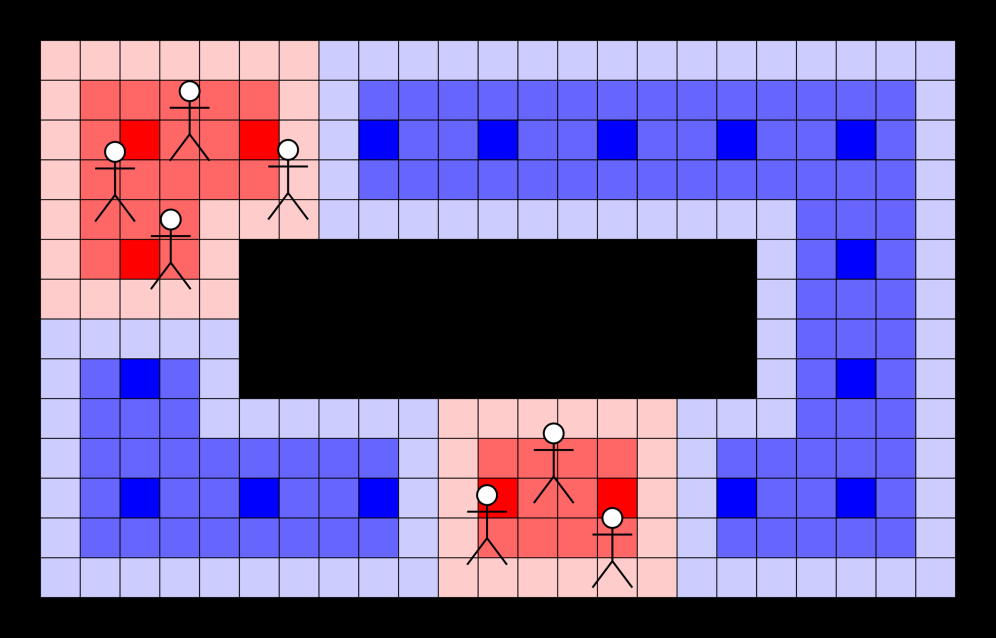

Constructing the Cost Map: A Probabilistic Synthesis of Perception and Prediction

The Cost Map is a representation of environmental traversability utilized for robot navigation. It combines data from static geometric mapping – providing information on fixed obstacles and available space – with an assessment of dynamic occupancy via crowd density estimation. This fusion creates a grid-based map where each cell’s value represents the cost of traversing that space; lower values indicate easier passage, while higher values denote obstacles or areas of high congestion. The integration of crowd density, treated as an abstract but quantifiable factor, allows the system to avoid collisions with pedestrians and navigate efficiently through populated environments, supplementing the purely geometric understanding of the space.

Crowd detection is implemented using Vision-Language Models (VLMs), specifically GPT-4V, which analyze visual input to identify and locate individuals within the robot’s environment. Performance of this detection is range-dependent; while close-range detection yields relatively high accuracy, the ability to reliably identify individuals diminishes with increasing distance from the sensor. This reduction in accuracy is attributed to factors including reduced visual resolution and increased occlusion of individuals within the scene. The VLM outputs, representing detected crowd presence, are then processed for integration into the overall Cost Map.

Gaussian Process Regression (GPR) is employed to transform the discrete outputs of Vision-Language Models (VLMs) into a continuous cost surface. The VLM provides crowd density estimates at specific visual locations; however, path planning algorithms require a spatially continuous representation of cost. GPR functions as a probabilistic model, interpolating between these discrete VLM outputs and extrapolating to unobserved areas, while simultaneously providing a measure of uncertainty. This process generates a smooth cost field where each point represents the estimated cost of traversal, enabling efficient path planning by algorithms that require a continuous cost function. The resulting surface effectively converts sparse, noisy data into a differentiable, actionable map for robot navigation.

The Cost Map functions as a consolidated environmental model enabling robot navigation by integrating geometric data with dynamic crowd density information. This unified representation allows path planning algorithms to consider both static obstacles and the probabilistic cost associated with traversing areas occupied by pedestrians. By assigning a cost value to each location, the map facilitates the generation of safe and efficient trajectories, prioritizing routes that minimize collision risk and maintain navigable space. The resulting cost surface enables robots to effectively reason about the environment and make informed decisions regarding path selection and maneuver execution.

Path Planning Through Dynamic Landscapes: An Algorithmic Synthesis

Dijkstra’s Algorithm functions as the foundational path planning component by calculating the lowest-cost path from a start to a goal location within the Cost Map. This map represents the navigable space, assigning a cost value to each cell based on both static obstacles – such as walls or stationary objects – and dynamic crowd density. The algorithm iteratively explores neighboring cells, accumulating costs until the lowest-cost path to the goal is determined. Higher cost values are assigned to cells occupied by obstacles or containing greater crowd concentrations, effectively guiding the robot to avoid these areas and prioritize routes with minimal impedance. The resulting path represents the optimal trajectory based on the defined cost function, ensuring efficient and collision-free navigation.

Trajectory modeling is integrated with Interacting Gaussian Processes (IGPs) to improve robot navigation in dynamic environments. IGPs allow for probabilistic prediction of multiple future trajectories for surrounding agents, accounting for their interactions and uncertainties. This contrasts with single-trajectory prediction methods, offering a more robust representation of potential crowd behaviors. The integration involves modeling each agent’s future state as a Gaussian Process, where the covariance function captures the dependencies between agents and their individual motion models. By sampling from these Gaussian Processes, the system generates a set of plausible future trajectories for all agents, which are then used to inform the robot’s path planning and collision avoidance strategies. This probabilistic approach enhances the robot’s adaptability to unforeseen crowd movements and improves overall navigational safety.

The system enables proactive collision avoidance by predicting the future positions of individuals within the crowd and dynamically recalculating the robot’s trajectory. This is achieved through the integration of trajectory modeling and Interacting Gaussian Processes, which allow the robot to estimate likely crowd movements over a defined time horizon. By continuously assessing these predicted trajectories, the path planning algorithm can adjust the robot’s course to maintain a safe distance from anticipated pedestrian locations, facilitating both collision avoidance and smoother navigation through crowded environments. This reactive path adjustment minimizes abrupt changes in direction, contributing to a more natural and comfortable experience for individuals sharing the space with the robot.

The Extended Social Force Model (ESFM) simulates pedestrian behavior by representing each individual as being driven by social forces, including attraction to the goal, repulsion from obstacles, and interaction with other pedestrians. ESFM builds upon the original Social Force Model by incorporating factors such as individual preferences for personal space, differing walking speeds, and anticipatory behavior-modeling how pedestrians adjust their trajectories based on perceived intentions of others. This predictive capability improves the accuracy of crowd simulations, allowing the navigation system to forecast potential congestion points and preemptively alter the robot’s path, thereby increasing overall system robustness against unpredictable crowd dynamics and reducing the likelihood of collisions. The model’s parameters are calibrated using observational data of pedestrian movement to ensure realistic and reliable behavior prediction.

Expanding the Horizon: Multi-Sensor Fusion and Scalable Robotic Systems

Precise assessment of crowd density is paramount for effective navigation and safety, and recent research leverages a synergistic combination of technologies to significantly enhance ‘Crowd Detection’ accuracy. This work integrates Wi-Fi Sensing, which passively monitors the presence of wireless devices to infer population size, with Finite-Element Support Vector Machine (FS-ELM) algorithms for robust data analysis. Further refinement is achieved through Reinforcement Learning, allowing the system to adapt and improve its estimations over time based on observed data and environmental feedback. This multi-faceted approach not only addresses the challenges of varying signal strengths and environmental noise inherent in Wi-Fi sensing but also allows for a dynamic and increasingly accurate understanding of crowd distributions, exceeding the capabilities of single-method approaches.

Effective crowd localization within indoor spaces presents unique challenges due to signal attenuation and the complexities of human movement; therefore, a multi-faceted sensing approach proves invaluable. Wi-Fi sensing provides broad spatial coverage by detecting the presence of wireless devices carried by individuals, but struggles with pinpoint accuracy and differentiating closely packed people. Conversely, Forward Scatter – Extreme Learning Machine (FS-ELM) utilizes environmental data to identify and track individuals with greater precision, though its range is limited. Integrating these techniques, alongside Reinforcement Learning to adapt to changing crowd dynamics, creates a complementary system. Wi-Fi sensing establishes a general awareness of crowd distribution, while FS-ELM refines the location of individuals within those crowds, offering a robust solution for indoor environments where traditional methods like cameras may be impractical or ineffective.

Testing within the bustling environment of a university campus confirmed the system’s capability to navigate complex, real-world scenarios. The multi-robot framework successfully demonstrated collision avoidance with both stationary obstacles – such as benches, building supports, and parked bicycles – and with dynamically moving pedestrian crowds. This achievement isn’t merely about avoiding contact; the system actively adjusts its path to maintain a safe distance from individuals and groups, ensuring smooth and predictable movement even amidst unpredictable human behavior. The successful trials highlight the robustness of the combined sensing and navigation algorithms, moving the technology closer to practical deployment in dynamic public spaces.

The capacity to model and navigate within dynamic crowds unlocks significant potential across a range of practical applications. This system facilitates advanced automated surveillance, allowing for intelligent monitoring of public spaces and anomaly detection. Beyond security, the technology proves invaluable in emergency response scenarios, enabling rapid assessment of crowd density and optimized path planning for first responders. Furthermore, the framework supports sophisticated multi-robot coordination; robots can collaboratively map crowded areas, share information about pedestrian movement, and work together to achieve objectives such as delivering supplies or guiding individuals to safety-effectively transforming how robots interact with, and operate within, human environments.

The developed framework transcends the limitations of single-purpose robotics by offering a versatile solution for managing pedestrian dynamics across a spectrum of environments. Beyond the initial testing on a university campus, the system’s core algorithms demonstrate potential in optimizing pedestrian flow within the complex layouts of shopping malls, reducing congestion and enhancing customer experience. Crucially, the adaptable nature extends to high-stakes scenarios such as emergency evacuation, where the multi-robot system could efficiently guide individuals to safety, dynamically adjusting routes based on real-time crowd density and obstacle locations. This scalability – from routine traffic management to critical incident response – positions the framework as a valuable tool for enhancing public safety and operational efficiency in diverse and demanding contexts.

The presented research rigorously pursues a demonstrable understanding of environmental perception, mirroring a fundamental tenet of mathematical reasoning. It isn’t sufficient for a robot to navigate a space; it must comprehend the abstract qualities within it – in this case, crowd density as represented by the abstraction map. This pursuit of provable understanding resonates deeply with the assertion of Henri Poincaré: “Mathematics is the art of giving reasons.” The creation of an abstraction map, grounded in Vision-Language Models and Gaussian Process Regression, isn’t merely an algorithmic trick; it’s an attempt to formalize a logical representation of the environment, allowing for demonstrably safer path planning and a reduction in uncertainty. The system’s reliance on quantifiable data, rather than heuristic assumptions, establishes a foundation for verifiable robustness.

What Lies Ahead?

The presented work, while a demonstrable step towards more nuanced robotic navigation, ultimately highlights the enduring chasm between perception and true understanding. The creation of ‘abstraction maps’ – translating visual data into linguistic concepts – is elegant, yet relies on the inherently imprecise nature of language itself. The Gaussian Process Regression offers a probabilistic framework, but does not resolve the fundamental ambiguity inherent in defining ‘crowds’ or ‘avoidance’ in a mathematically rigorous manner. Further refinement demands a departure from merely representing concepts to proving their validity within a formal system.

A critical limitation remains the reliance on pre-defined linguistic categories. The system operates on abstractions given to it; it does not discover new, relevant distinctions within the environment. Future work should explore methods for dynamic abstraction – allowing the robot to formulate its own conceptual hierarchies based on observed patterns and predictive modeling. This necessitates a move beyond current Vision-Language Models, towards systems capable of genuine conceptual reasoning, not merely statistical correlation.

Ultimately, the pursuit of ‘safe mobility’ is a mathematical problem disguised as an engineering one. The elegance of a solution will not be judged by its performance on a limited set of tests, but by its provable correctness – its consistency with the underlying laws of geometry and logic. Until then, the robot remains a skilled mimic, not an intelligent agent.

Original article: https://arxiv.org/pdf/2602.10910.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- MLBB x KOF Encore 2026: List of bingo patterns

- Married At First Sight’s worst-kept secret revealed! Brook Crompton exposed as bride at centre of explosive ex-lover scandal and pregnancy bombshell

- Top 10 Super Bowl Commercials of 2026: Ranked and Reviewed

- Gold Rate Forecast

- Why Andy Samberg Thought His 2026 Super Bowl Debut Was Perfect After “Avoiding It For A While”

- Influencer known as the ‘Human Barbie’ is dug up from HER GRAVE amid investigation into shock death at 31

- How Everybody Loves Raymond’s ‘Bad Moon Rising’ Changed Sitcoms 25 Years Ago

- Genshin Impact Zibai Build Guide: Kits, best Team comps, weapons and artifacts explained

- Meme Coins Drama: February Week 2 You Won’t Believe

- Brent Oil Forecast

2026-02-12 19:06