Author: Denis Avetisyan

New research explores how AI-powered tools can move beyond pre-sharing privacy controls to dynamically manage personal data and respect evolving preferences across digital interactions.

This review analyzes the design space for AI agents supporting human-as-the-unit privacy, focusing on usable controls for cross-boundary data flows and interpersonal privacy management.

Despite increasing awareness of data privacy, individuals struggle to manage their digital footprints across a fragmented landscape of platforms and evolving contexts. This paper, ‘From Fragmentation to Integration: Exploring the Design Space of AI Agents for Human-as-the-Unit Privacy Management’, investigates the potential for AI agents to offer holistic, user-centered privacy solutions by adopting a ‘human-as-the-unit’ perspective. Our findings reveal a strong user preference for post-sharing management tools with varying degrees of agent autonomy, suggesting that automated remediation of existing digital footprints is a key design opportunity. Can AI agents effectively bridge the gaps in current privacy practices and empower individuals to navigate the complexities of modern digital life?

Dissecting the Fragmented Self: A Digital Identity Crisis

The modern digital landscape presents individuals with an ever-growing challenge: managing a fragmented online presence. Across numerous social media platforms, data brokers, and online services, a person’s digital footprint accumulates, often without their full awareness or control, until it reaches a state of ‘DigitalFootprintOverload’. This proliferation of data points, from purchasing habits to social connections and expressed opinions, creates a disjointed and often inaccurate representation of self. Consequently, many individuals report feeling overwhelmed and powerless, with a sense of lost control over their personal information and, by extension, their digital identity. The sheer volume of accounts to monitor and privacy settings to adjust adds to this helplessness, fostering anxieties about data security, reputation management, and the potential misuse of personal data.

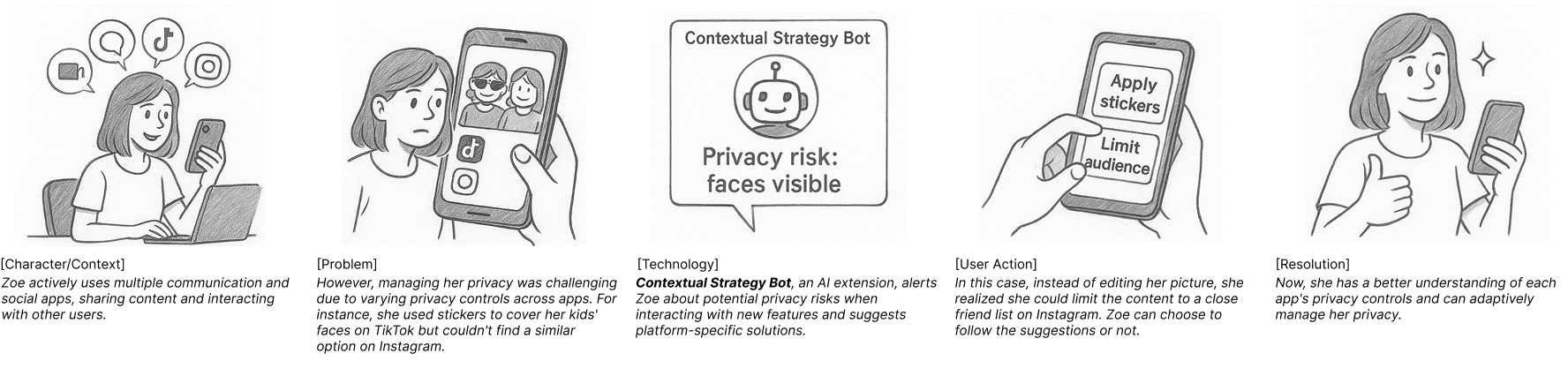

Current approaches to online privacy largely operate as fragmented responses to breaches or evolving platform policies, rather than proactive, unified systems designed for the modern digital landscape. Individuals navigate a complex web of privacy settings, each unique to a specific service, creating a situation where comprehensive data protection becomes exceedingly difficult to achieve. This platform-specific nature fails to account for the seamless flow of information between services – a reality driven by data brokers and cross-platform tracking. Consequently, individuals often lack a clear understanding of how their data is aggregated and utilized, hindering their ability to exercise meaningful control. The result is a reactive cycle of damage control, rather than a preventative framework that truly prioritizes holistic privacy needs in an increasingly interconnected world.

The increasing interconnectedness of digital platforms, driven by cross-platform data integration, presents a growing challenge to individual privacy management. Data routinely flows between services, often without users fully understanding the extent or implications of this sharing. Recent studies reveal a notable shift in user behavior; individuals are increasingly focused on addressing privacy concerns after data has been disseminated, rather than proactively controlling it beforehand. This reactive approach suggests existing pre-sharing privacy controls are perceived as insufficient or ineffective, prompting a need for tools and strategies that empower users to monitor, manage, and rectify data flows across multiple platforms – a move towards ‘post-hoc’ privacy management that acknowledges the limitations of preventative measures in a highly integrated digital landscape.

Reclaiming Agency: The ‘HumanAsUnitPrivacy’ Paradigm

The ‘HumanAsUnitPrivacy’ paradigm shifts the focus of data privacy from application-specific features to individual-centric control. This approach posits that individuals should be the primary decision-makers regarding their personal data, irrespective of the platform or service being used. Traditionally, privacy has been implemented as a set of controls within each application; the ‘HumanAsUnitPrivacy’ paradigm instead treats privacy as an inherent right of the individual, managed proactively and consistently across all data-handling entities. This necessitates a move away from reactive, application-level permissions towards a holistic system where individuals maintain persistent control and visibility over their data lifecycle, regardless of where the data resides or how it is processed.

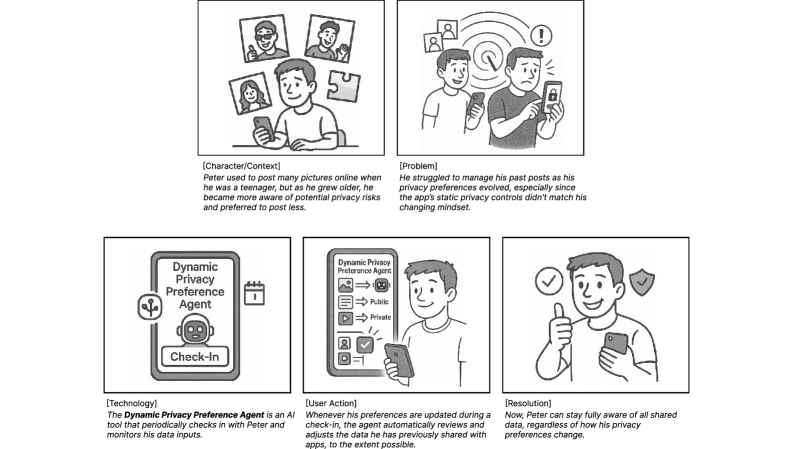

The ‘HumanAsUnitPrivacy’ paradigm requires a shift toward proactive privacy management encompassing both pre-disclosure controls and post-disclosure tools. Pre-sharing management focuses on informed consent and minimizing data collection, while post-sharing management addresses user rights regarding data modification, deletion, and tracking after data has been disclosed to a third party. User research demonstrates a strong preference for concepts centered around post-sharing privacy management; these concepts consistently rank within the top three preferred approaches when presented to users, indicating a demand for tools that empower individuals to retain control over their data even after it leaves their direct possession.

Thorough PrivacyRiskAssessment is a critical component of effective pre-sharing data control. This assessment involves systematically identifying potential harms that could result from data disclosure, considering factors such as re-identification risk, discriminatory outcomes, and potential misuse. A comprehensive assessment should quantify these risks where possible and present them to users in a clear, understandable format prior to consent. The goal is to enable informed decision-making by outlining the specific consequences associated with sharing particular data elements, allowing users to weigh the benefits of data sharing against the potential harms and adjust their sharing preferences accordingly. Effective PrivacyRiskAssessment moves beyond generic privacy notices to provide granular, context-specific information relevant to the data being shared and the intended recipient.
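As a rough illustration of how such an assessment might be surfaced before consent, the sketch below scores one proposed disclosure along the three factors named above and ranks them. The `RiskFactor` fields, the 0-to-1 scales, the likelihood-times-severity scoring, and the sample numbers are all illustrative assumptions, not a method described in the paper.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class RiskFactor:
    """One potential harm of a disclosure (all values are hypothetical)."""
    name: str
    likelihood: float  # assumed scale: 0.0 (never) to 1.0 (certain)
    severity: float    # assumed scale: 0.0 (harmless) to 1.0 (severe)

    @property
    def score(self) -> float:
        # Simple likelihood x severity product; a real tool may weigh differently.
        return self.likelihood * self.severity


def assess(data_element: str, recipient: str, factors: list[RiskFactor]) -> dict:
    """Summarize risks for one data element and one recipient, highest first."""
    ranked = sorted(factors, key=lambda f: f.score, reverse=True)
    return {
        "data_element": data_element,
        "recipient": recipient,
        "top_risk": ranked[0].name if ranked else None,
        "overall": max((f.score for f in ranked), default=0.0),
        "breakdown": [(f.name, round(f.score, 2)) for f in ranked],
    }


# Made-up example: sharing a home address with a delivery service.
report = assess(
    "home address",
    "food delivery app",
    [
        RiskFactor("re-identification", likelihood=0.6, severity=0.5),
        RiskFactor("discriminatory outcome", likelihood=0.1, severity=0.8),
        RiskFactor("misuse by third parties", likelihood=0.3, severity=0.9),
    ],
)
```

Keeping the per-factor breakdown, rather than a single number, is what lets the interface explain which specific consequence drives the warning for this data element and this recipient.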

AI as Privacy Sentinel: Proactive and Adaptive Control

AI Agent PrivacySupport utilizes artificial intelligence to automate and refine user privacy management. This involves continuously monitoring data flows across various platforms and adjusting privacy settings based on predefined user preferences or learned behavior. Rather than relying on manual configuration, these agents proactively intervene to limit data sharing, request data deletion where appropriate, and ensure compliance with user-defined privacy policies. The system aims to reduce the cognitive load on users by handling complex privacy controls and adapting to evolving data practices, thereby providing a more robust and responsive privacy posture.

DataOwnershipTransparency, as facilitated by AI agents, involves the automated communication of data usage and sharing practices to the user. These agents monitor data flows across connected platforms and generate readily understandable reports detailing which data elements are being collected, with whom they are being shared, and the stated purpose of that sharing. This communication extends beyond static privacy policies to provide dynamic, context-specific explanations triggered by actual data events. The agents aim to translate complex data handling procedures into concise summaries, empowering users with actionable insights into their data’s lifecycle and fostering greater control over their digital footprint.
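One way to picture the reporting step is as a grouping of observed data events into short, plain-language lines. The following is a minimal sketch under assumptions: the `DataEvent` schema, the platform names, and the sentence template are hypothetical, and how an agent would actually observe such events is left out entirely.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DataEvent:
    """A single observed data flow (hypothetical schema)."""
    platform: str
    data_element: str
    shared_with: str
    purpose: str


def summarize(events):
    """Group events by data element and render one plain-language line each."""
    by_element = defaultdict(list)
    for event in events:
        by_element[event.data_element].append(event)

    lines = []
    for element in sorted(by_element):
        recipients = sorted({e.shared_with for e in by_element[element]})
        purposes = sorted({e.purpose for e in by_element[element]})
        lines.append(
            f"Your {element} was shared with {', '.join(recipients)} "
            f"for: {', '.join(purposes)}."
        )
    return lines


# Made-up events for illustration only.
events = [
    DataEvent("PhotoApp", "location", "AdNetwork", "ad targeting"),
    DataEvent("MapsApp", "location", "AnalyticsCo", "usage analytics"),
    DataEvent("PhotoApp", "contact list", "PhotoApp", "friend suggestions"),
]
summary = summarize(events)
```

Grouping by data element rather than by platform reflects the cross-platform view described above: the user sees everything that happened to their location in one line, whichever service was the source.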

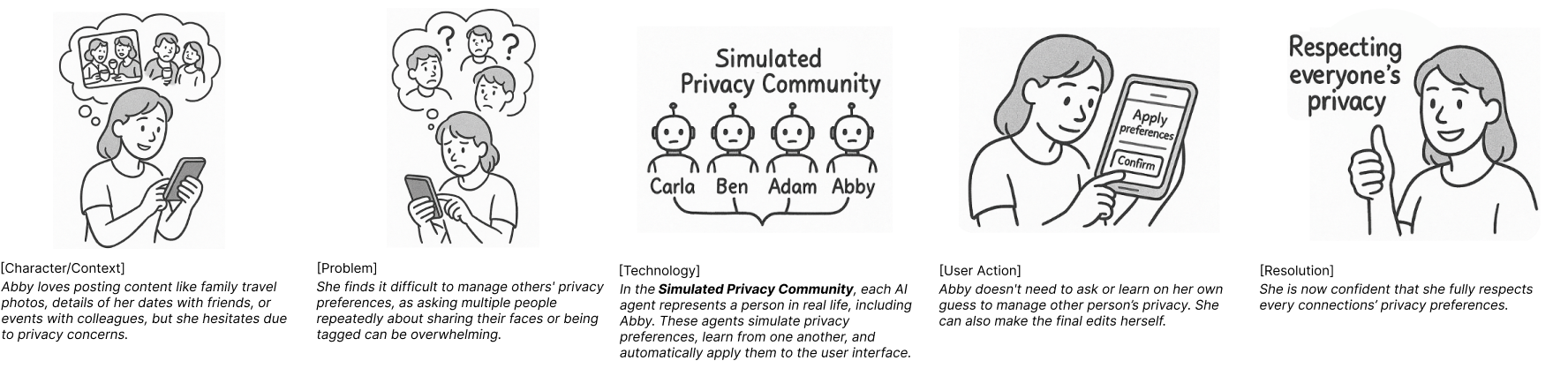

AI agents are being developed to enable GranularRelationshipPrivacy, allowing users to dynamically control data access permissions after information has been shared. This functionality moves beyond broad privacy settings by factoring in the specific nature of relationships between individuals or entities. For example, an agent could automatically prevent photos shared with “family” from being visible to “colleagues.” User testing, based on storyboard concepts, indicates strong acceptance of this approach, with median resonance scores of 4 to 5 out of 5, suggesting a high degree of perceived value and usability.
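The “family” versus “colleagues” example can be sketched as a small label-based access check with a post-sharing revoke step. The class names, the label vocabulary, and the contact identifiers below are hypothetical, and the sketch says nothing about how an agent would enforce such a policy on a real platform.

```python
from dataclasses import dataclass, field


@dataclass
class SharedItem:
    """An already-shared item and the relationship labels allowed to view it."""
    item_id: str
    audiences: set = field(default_factory=set)


class RelationshipPolicy:
    """Maps contacts to relationship labels and checks visibility per item."""

    def __init__(self):
        self._labels = {}  # contact -> set of relationship labels

    def tag(self, contact, label):
        self._labels.setdefault(contact, set()).add(label)

    def can_view(self, contact, item):
        # Visible only if the contact holds at least one allowed label.
        return bool(self._labels.get(contact, set()) & item.audiences)

    def revoke(self, item, label):
        # Post-sharing adjustment: withdraw an audience after the fact.
        item.audiences.discard(label)


policy = RelationshipPolicy()
policy.tag("aunt_may", "family")
policy.tag("boss_bob", "colleagues")

photo = SharedItem("beach.jpg", {"family", "colleagues"})
before = policy.can_view("boss_bob", photo)  # colleagues still allowed here
policy.revoke(photo, "colleagues")           # narrow the audience after sharing
after = policy.can_view("boss_bob", photo)
```

Note one consequence of this simple design: a contact tagged with both labels would keep access through “family” after the revoke, so a real system would need an explicit rule for overlapping relationships.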

The Peril of Automation: Preserving User Agency

The increasing prevalence of artificial intelligence agents necessitates a fundamental principle: user agency control. While these systems promise enhanced efficiency and personalized experiences, individuals must unequivocally retain the power to override automated decisions and meticulously tailor privacy settings. This isn’t simply about offering options, but ensuring those options are readily accessible and understandable, allowing users to actively manage their data and dictate the terms of interaction. Without such control, reliance on automated processes can inadvertently lead to unintended consequences, eroding trust and potentially exposing sensitive information – a dynamic where the system, rather than the user, dictates the boundaries of privacy. Prioritizing user agency is therefore not merely a feature, but a cornerstone of responsible AI development, fostering a collaborative relationship between technology and the individual.
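To make the override requirement concrete, the sketch below gates every agent action behind a user-chosen autonomy level and records each applied change so the user can undo it. The three levels, the `PrivacyAgent` class, and the setting names are assumptions made for illustration; the source speaks only of varying degrees of agent autonomy, not of these particular tiers.

```python
from enum import Enum

_MISSING = object()  # marks "setting did not exist before the change"


class Autonomy(Enum):
    SUGGEST = 1  # agent only recommends
    CONFIRM = 2  # agent acts after explicit approval
    AUTO = 3     # agent acts, user can undo afterwards


class PrivacyAgent:
    def __init__(self, autonomy):
        self.autonomy = autonomy
        self.settings = {}
        self.history = []  # (key, previous_value) pairs for undo

    def propose(self, key, value, approve=None):
        """Apply a setting change only when the autonomy level permits it."""
        if self.autonomy is Autonomy.SUGGEST:
            return "suggested"
        if self.autonomy is Autonomy.CONFIRM and not (approve and approve(key, value)):
            return "declined"
        self.history.append((key, self.settings.get(key, _MISSING)))
        self.settings[key] = value
        return "applied"

    def undo(self):
        """User override: revert the most recent automated change."""
        if not self.history:
            return False
        key, previous = self.history.pop()
        if previous is _MISSING:
            self.settings.pop(key, None)
        else:
            self.settings[key] = previous
        return True


agent = PrivacyAgent(Autonomy.AUTO)
result = agent.propose("profile_visibility", "friends_only")
snapshot = dict(agent.settings)  # state right after the automated change
undone = agent.undo()            # the user disagrees and reverts it
```

Even at the highest autonomy level the change is reversible, which is the point of the design: automation lowers effort without removing the user's final say.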

The uncritical acceptance of automated decisions, termed ‘AIAutomationBias’, presents a significant challenge to the responsible implementation of artificial intelligence. When individuals are denied the ability to oversee or modify the actions of AI agents, trust erodes, potentially leading to the rejection of beneficial technologies. This lack of control isn’t merely a matter of user experience; it directly impacts privacy, as automated systems operating without sufficient human oversight may inadvertently collect, use, or disclose personal information in ways that conflict with individual expectations or legal requirements. Consequently, a demonstrable commitment to user agency, one that enables individuals to understand, challenge, and correct automated processes, is crucial both for fostering confidence and for mitigating the potential for privacy breaches in increasingly AI-driven environments.

Effective privacy protection necessitates more than static settings; instead, preferences must dynamically adjust to evolving life circumstances. Recent thematic analysis of user needs (spanning topics identified as 35, 48, 7, 15, 20, 8, 19, 23, and 17) reveals a strong desire for automated systems that recognize and respond to changes in personal situations. This means privacy configurations should not be ‘set it and forget it’ but rather intelligently adapt to factors such as shifts in employment, location, relationship status, or health concerns. Such a system fosters continued relevance and maximizes the effectiveness of privacy controls, ensuring ongoing protection without requiring constant manual intervention and promoting user trust in automated decision-making processes.
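A simple way to think about such adaptation is as a mapping from detected life events to proposed setting changes. The sketch below is only that: the event names, the setting keys, and the rule table are invented for illustration, and detecting the life event itself is assumed to happen elsewhere.

```python
# Hypothetical rule table: life event -> setting changes the agent would propose.
LIFE_EVENT_RULES = {
    "job_change": {"employer_visible": False, "work_history_audience": "connections"},
    "relocation": {"location_sharing": "off"},
    "health_concern": {"fitness_data_sharing": "off"},
}


def adapt(settings, event):
    """Return (new_settings, changes) for an event without mutating the input."""
    proposed = LIFE_EVENT_RULES.get(event, {})
    # Keep only the entries that actually differ from the current settings.
    changes = {k: v for k, v in proposed.items() if settings.get(k) != v}
    return {**settings, **changes}, changes


current = {"location_sharing": "friends", "employer_visible": True}
updated, changes = adapt(current, "relocation")
```

Returning the list of changes separately, instead of silently applying them, leaves room for the agent to show the user what it intends to adjust and why before anything takes effect.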

The pursuit of comprehensive AI agents for privacy management, as detailed in the study, necessitates a willingness to challenge existing frameworks. It’s a process of deconstruction, aiming to understand the limitations of current systems before rebuilding them with a ‘human-as-the-unit’ approach. This echoes David Hilbert’s sentiment: “We must be able to answer the question: What are the ultimate possibilities and limitations of any precisely defined mathematical method?” The study mirrors this intellectual rigor, seeking to define the boundaries of automated privacy controls and the ultimate potential for AI to navigate the complexities of interpersonal privacy. Just as Hilbert sought to define the limits of formal systems, this work probes the limits of current privacy management paradigms, ultimately aiming for a more robust and user-centric solution.

Beyond Control: Charting New Privacy Horizons

The pursuit of ‘usable privacy’ often feels like endlessly refining the locks on a door while ignoring the foundations of the house. This work, by framing privacy management around the individual rather than discrete data points, begins to address that structural failing. However, the very notion of a coherent ‘unit’ of privacy remains stubbornly elusive. The paper correctly identifies the limitations of pre-sharing controls, but true integration demands confronting the messy reality of preference inconsistency – people rarely adhere to fixed rules, and automated systems must account for, and perhaps even embrace, that inherent unpredictability.

Future investigations should prioritize dismantling the artificial separation between ‘personal’ and ‘interpersonal’ privacy. Boundaries are negotiated, fluid, and frequently breached – a system built on rigid definitions will inevitably fail. The challenge isn’t simply automating existing norms, but building agents capable of learning, adapting, and even proposing revised boundaries based on observed social dynamics. This necessitates a move beyond passive enforcement to active mediation – a privacy agent as a subtle social choreographer, rather than a digital guard dog.

Ultimately, the most interesting questions aren’t about how to control information, but why. What motivates privacy preferences? What social signals reveal underlying anxieties? Until these questions are addressed, automated privacy management will remain a sophisticated exercise in symptom control, perpetually chasing a moving target. The field needs to shift from engineering solutions to understanding the fundamental human impulses they seek to serve.

Original article: https://arxiv.org/pdf/2602.05016.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-06 20:08