Author: Denis Avetisyan

New research explores how making a chatbot’s reasoning process visible impacts how users perceive its empathy, warmth, and overall competence.

Displaying a chatbot’s internal ‘thought process’, whether focused on emotional support or on expertise, significantly shapes user perceptions during conversational interactions.

While increasingly sophisticated conversational agents are designed to offer support and guidance, little is known about how revealing their internal reasoning affects user perception. This research, ‘Watching AI Think: User Perceptions of Visible Thinking in Chatbots’, investigates how displaying a chatbot’s ‘thinking’, expressed as either emotionally supportive or expertise-driven reflections, shapes users’ assessments of its empathy, warmth, and competence during help-seeking interactions. The findings show that visible thinking significantly influences user experience, indicating that how an AI communicates its reasoning process plays an important role in building trust and rapport. This raises a design question: how can these ‘thinking displays’ best be built to foster effective and beneficial human-computer interaction in sensitive contexts?

The Illusion of Connection: Addressing the Empathy Gap in Conversational AI

Despite remarkable progress in natural language processing, conversational AI frequently fails to forge the genuine connections that characterize human interaction. While these systems excel at generating syntactically correct and contextually relevant responses, they often fall short in establishing the trust and rapport necessary for meaningful engagement. Interactions can feel decidedly transactional – a simple exchange of information – rather than empathetic, lacking the nuanced understanding and emotional intelligence that underpin successful human relationships. This limitation stems from the difficulty in replicating the subtle cues, shared experiences, and inherent vulnerability that build trust, leaving users with a sense of distance and hindering the development of truly supportive AI companions.

The limitations of current chatbots become strikingly apparent when they confront emotionally nuanced or intricate user needs. Although they can process language and deliver information, these systems often fail to demonstrate convincing understanding, a crucial component of effective support. Studies report that users quickly perceive a lack of empathy or contextual awareness when discussing sensitive topics, which leads to frustration and a breakdown in communication. This isn’t simply a matter of technical accuracy: even grammatically perfect responses can feel cold and impersonal if the system cannot appropriately acknowledge, validate, and respond to the user’s underlying emotional state. The result is a chatbot that struggles to provide meaningful assistance and fosters a sense of disconnect rather than connection.

The proficiency of modern conversational AI in constructing grammatically sound sentences often overshadows a more fundamental hurdle: conveying genuine understanding. While algorithms excel at predicting and generating text, replicating the nuanced cognitive processes underpinning human thought remains elusive. This discrepancy isn’t merely about semantics; it’s about the ability to infer context, recognize emotional cues, and respond with empathy – qualities that signal authentic ‘thinking’ to users. Current systems frequently deliver responses that, while technically correct, lack the depth and resonance necessary to foster true connection, leaving interactions feeling superficial and ultimately hindering the development of trust and rapport. Bridging this gap requires moving beyond statistical language modeling towards AI that can convincingly simulate – or even embody – the complexities of human cognition.

Revealing the Inner Workings: Manipulating Transparency in Chatbot Reasoning

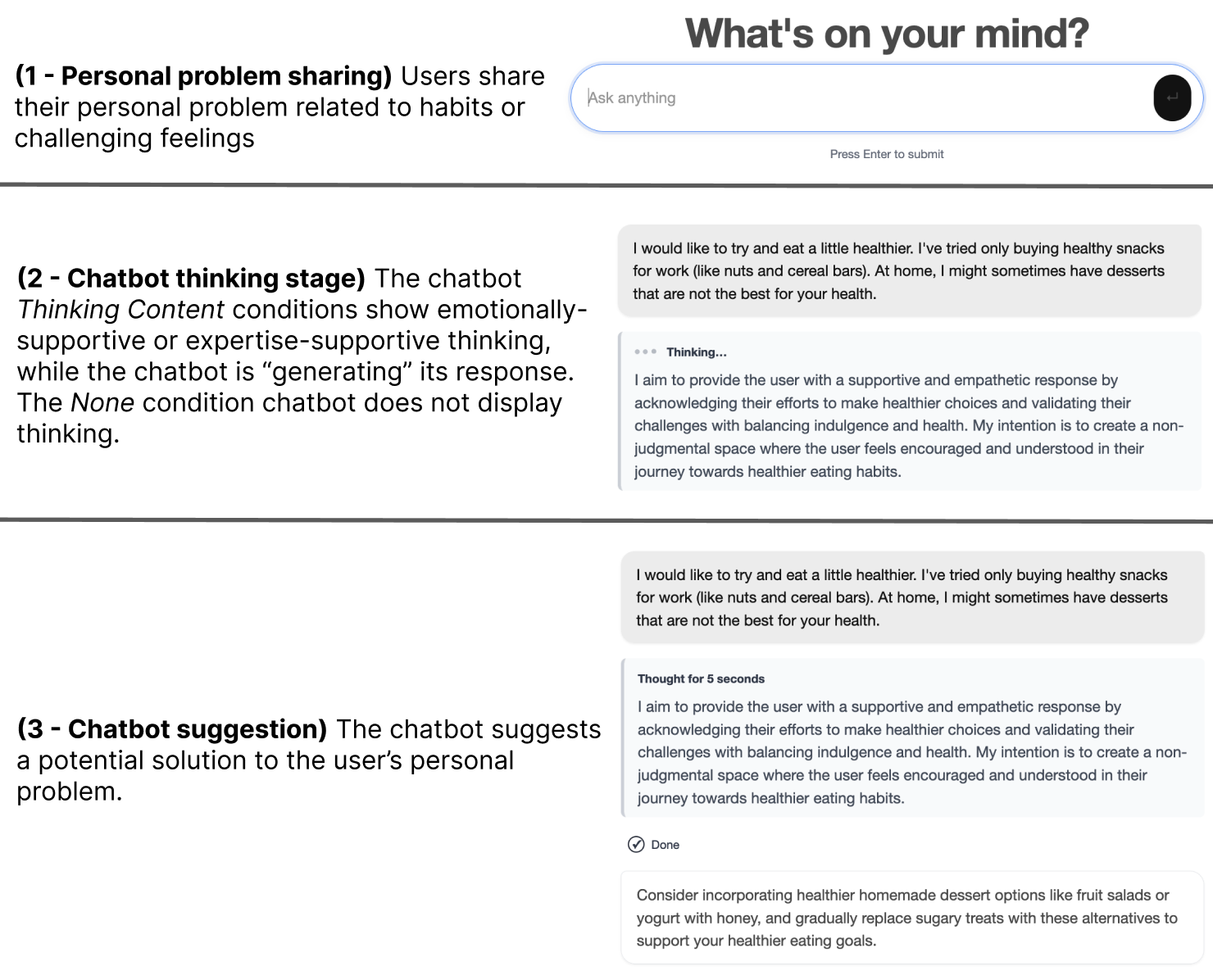

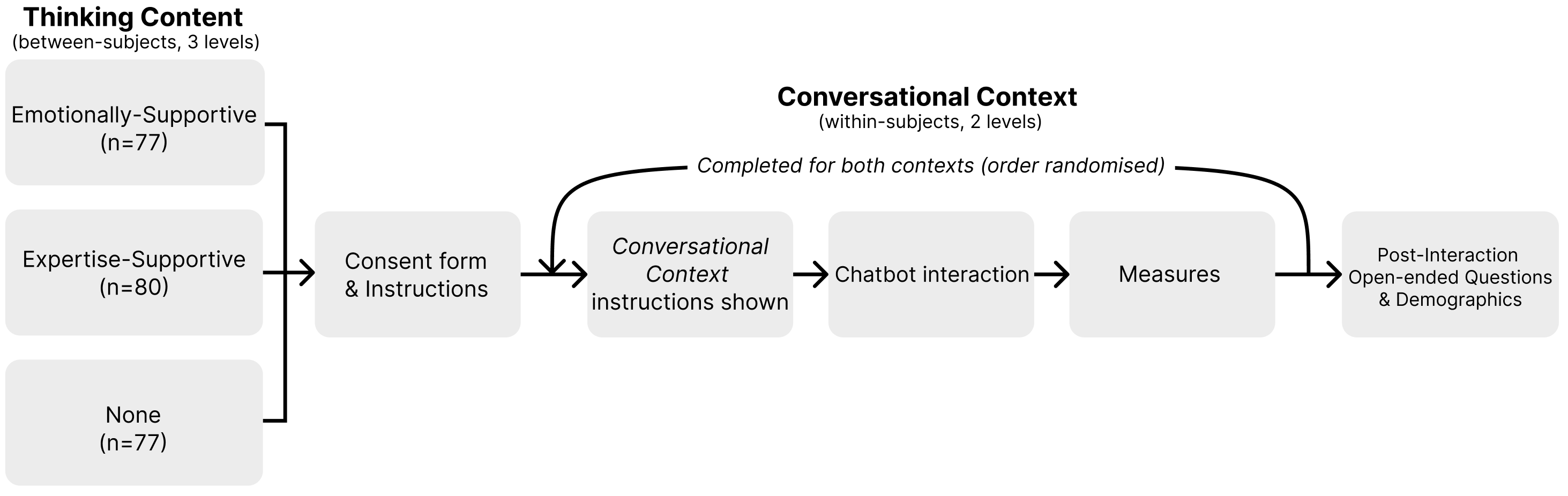

A mixed-design experiment was conducted to quantitatively assess how the visibility of a chatbot’s internal reasoning – termed ‘Thinking Content’ – influences user perceptions. The experimental design incorporated a between-subjects factor manipulating the type of Thinking Content displayed (Emotionally-Supportive, Expertise-Supportive, or a control condition with no visible reasoning), and a within-subjects factor involving varying conversational contexts categorized as either Habit-related or Feelings-related problem domains. Participants were randomly assigned to one of the Thinking Content conditions and engaged in conversations covering both Habit and Feelings topics, allowing for the measurement of both between-group differences in overall perception and within-subject effects of conversational context.
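To make the 3×2 mixed design concrete, here is a minimal Python sketch of how participants might be allocated: each one is randomly placed in a single Thinking Content condition (between-subjects) and then discusses both problem domains (within-subjects). The condition labels, the balanced shuffle, and the counterbalanced context order are illustrative assumptions, not procedures reported in the paper.

```python
import random
from dataclasses import dataclass

# Between-subjects factor: which kind of visible 'Thinking Content' a participant sees.
CONDITIONS = ("emotionally_supportive", "expertise_supportive", "none")
# Within-subjects factor: every participant discusses both problem domains.
CONTEXTS = ("habit", "feelings")


@dataclass
class Assignment:
    participant_id: int
    condition: str
    context_order: tuple


def assign_participants(n, seed=0):
    """Hypothetical mixed-design allocation: balanced random condition, both contexts each."""
    rng = random.Random(seed)
    # Build a balanced pool of condition labels, then shuffle it.
    pool = [CONDITIONS[i % len(CONDITIONS)] for i in range(n)]
    rng.shuffle(pool)
    assignments = []
    for pid, condition in enumerate(pool):
        # Randomizing the order of the two contexts is an assumption, not a reported detail.
        order = list(CONTEXTS)
        rng.shuffle(order)
        assignments.append(Assignment(pid, condition, tuple(order)))
    return assignments


for a in assign_participants(6, seed=42):
    print(a)
```

Shuffling a balanced pool, rather than drawing each participant's condition independently, keeps the three groups the same size.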

The experimental design incorporated two distinct conversational contexts to assess the influence of visible chatbot reasoning under varying user needs. Each participant talked with the chatbot about both a problem relating to personal habits, such as exercise or diet, and an issue concerning emotional wellbeing. This manipulation made it possible to examine whether the type of problem being addressed moderated the impact of the chatbot’s displayed ‘Thinking Content’ on user perceptions of its helpfulness and trustworthiness. Using both habit-related and feelings-related scenarios also gave a broader assessment of the chatbot’s perceived performance across different domains of user inquiry.

The experimental manipulation centered on varying the type of ‘Thinking Content’ displayed to users during chatbot interactions. Participants were exposed to one of three conditions: Emotionally-Supportive content, presenting reasoning focused on empathetic responses; Expertise-Supportive content, showcasing reasoning highlighting knowledge and problem-solving skills; or a null control condition where no internal reasoning was displayed. This ‘Thinking Content’ was designed to simulate the chatbot’s internal thought process and was presented as textual output visible to the user, allowing for an assessment of its impact on user perceptions of the chatbot’s behavior.
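One way to picture the manipulation is as a rendering rule applied to each chatbot turn: the simulated reasoning is shown above the reply in the two experimental conditions and suppressed in the control. The sketch below assumes a hypothetical `ChatTurn` record and `render_turn` helper with made-up condition labels; the interface used in the paper may have looked quite different.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ChatTurn:
    thinking: Optional[str]  # simulated 'thought process' text, if any
    reply: str               # the chatbot's actual answer


def render_turn(turn: ChatTurn, condition: str) -> str:
    """Return the text a participant would see for one turn (hypothetical layout)."""
    if condition == "none" or not turn.thinking:
        # Control condition (or no reasoning available): only the reply is visible.
        return turn.reply
    label = {
        "emotionally_supportive": "Thinking (emotional support)",
        "expertise_supportive": "Thinking (expertise)",
    }[condition]
    return f"[{label}]\n{turn.thinking}\n\n{turn.reply}"


example = ChatTurn(
    thinking="The user sounds discouraged; acknowledge that before suggesting anything.",
    reply="That sounds frustrating. Would a smaller first goal help?",
)
print(render_turn(example, "emotionally_supportive"))
print(render_turn(example, "none"))
```

Keeping the reply identical across conditions and varying only the visible reasoning is what lets differences in perception be attributed to the thinking display itself.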

The Resonance of Empathy: Quantifying the Impact of ‘Thinking’ Styles

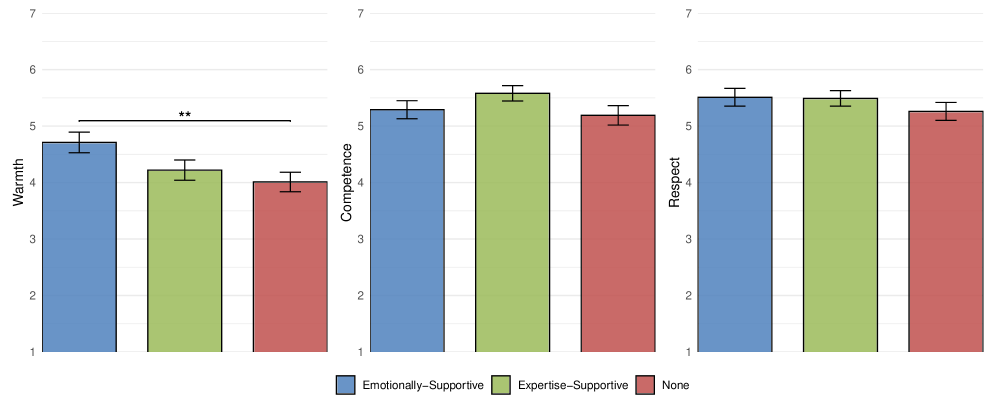

Analysis of user responses showed a statistically significant effect of Emotionally-Supportive Thinking on perceived empathy. Participants in this condition gave an average empathy score of 55.61, significantly higher than in the null condition (p = 0.0268). This indicates that framing the chatbot’s visible reasoning around emotional understanding and validation increases users’ perception of the system’s empathetic capabilities.

Data analysis showed that Expertise-Supportive Thinking increased user trust. Participants in this condition reported an average trust score of 71.62, a statistically significant increase over the null condition (p = 0.0328). This suggests that framing the chatbot’s visible reasoning around knowledge, competence, and factual accuracy builds user confidence in the system’s reliability and trustworthiness.

A negative expectancy violation occurred when the chatbot’s displayed ‘thinking’, the rationale it presented for its actions, did not logically connect with its subsequent response. This inconsistency produced a statistically significant negative reaction from participants, indicating that users form expectations based on the presented reasoning. The effect suggests that transparency in a chatbot’s decision-making process can be ineffective, and even detrimental, if it is not applied consistently throughout the interaction. This highlights the need to align the stated ‘thinking’ with the chatbot’s actual behavior in order to maintain user confidence and avoid negative perceptions.

Beyond the Algorithm: Implications for the Future of Conversational Design

Recent investigations suggest that revealing a chatbot’s internal reasoning, essentially making its ‘thinking’ visible to the user, can substantially enhance the overall conversational experience. This transparency fosters a sense of trust and understanding, allowing users to perceive the bot not merely as a reactive program but as a more relatable and predictable entity. The research indicates that when users gain insight into how a chatbot arrives at a particular response, they report higher satisfaction and are more likely to establish a stronger rapport. The effect isn’t simply about accuracy; it is about building confidence in the system’s process even when the outcome isn’t perfect, which paves the way for more effective and engaging human-computer interaction.

Research indicates that simply revealing a chatbot’s reasoning isn’t universally beneficial; the type of thinking disclosed strongly shapes user perception. When users seek emotional support, a chatbot that demonstrates empathetic processing, highlighting considerations of feelings and personal context, fosters greater rapport. Conversely, when users require factual information or task completion, a display of logically driven, expertise-focused thinking proves more effective. This suggests that successful conversational AI requires adaptive strategies that tailor the revealed ‘thought process’ to both the immediate conversational context and the user’s underlying needs, maximizing trust and perceived competence at any given moment.
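At its simplest, the adaptive strategy described above reduces to a routing decision made before the reply is generated. This hypothetical `choose_thinking_style` function assumes the user’s need has already been classified into a coarse category; treating habit problems as expertise-oriented and defaulting to no visible reasoning for unrecognized needs are both assumptions made for illustration.

```python
def choose_thinking_style(user_need: str) -> str:
    """Hypothetical router: pick which kind of visible reasoning to show.

    Follows the article's suggestion (empathetic reasoning for emotional support,
    expertise-focused reasoning for informational or task requests). The category
    names, and mapping 'habit' to expertise, are assumptions.
    """
    need = user_need.lower()
    if need in {"emotional_support", "feelings"}:
        return "emotionally_supportive"
    if need in {"information", "task", "habit"}:
        return "expertise_supportive"
    # Unrecognized need: show no reasoning as a conservative default (an assumption).
    return "none"


for need in ("feelings", "task", "small talk"):
    print(need, "->", choose_thinking_style(need))
```

A real system would need an actual classifier for `user_need` and might blend styles rather than pick one; the lookup only shows where such a decision would sit in the pipeline.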

Further research is crucial to determine the precise degree of transparency that maximizes the benefits of revealing a chatbot’s internal reasoning without overwhelming the user or creating unrealistic expectations. Investigations must address the potential for ‘negative expectancy violations’ – instances where the chatbot’s actions contradict its previously displayed ‘thought’ process – which can erode trust and hinder effective interaction. Studies should therefore explore strategies for maintaining consistent alignment between declared reasoning and actual behavior, perhaps through dynamic adjustment of transparency levels or the implementation of error-handling mechanisms that explicitly acknowledge and address discrepancies. Ultimately, the goal is to establish a predictable and reliable relationship between a chatbot’s stated intentions and its performed actions, fostering a more positive and productive user experience.
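As a rough illustration of the consistency check this paragraph calls for, the sketch below scores how many content words from the displayed thinking reappear in the reply and, when overlap is low, shows the reply with an explicit acknowledgement instead of the mismatched reasoning. Lexical overlap is a crude, hypothetical proxy for alignment, not a measure used in the study; the stopword list, the 0.2 threshold, and the wording of the note are arbitrary assumptions.

```python
import re

# Words too common to count as evidence of overlap (tiny, illustrative list).
STOPWORDS = {"the", "a", "an", "and", "or", "to", "of", "i", "you", "is",
             "it", "that", "their", "be", "with", "for", "on", "in"}


def content_words(text: str) -> set:
    """Lower-cased alphabetic tokens, minus stopwords."""
    return {w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS}


def alignment_score(thinking: str, reply: str) -> float:
    """Share of the thinking's content words that reappear in the reply (0.0 to 1.0)."""
    planned = content_words(thinking)
    if not planned:
        return 1.0  # nothing was promised, so nothing can be contradicted
    return len(planned & content_words(reply)) / len(planned)


def present(thinking: str, reply: str, threshold: float = 0.2) -> str:
    """Show the thinking only if it plausibly matches the reply; otherwise flag the gap."""
    if alignment_score(thinking, reply) >= threshold:
        return f"{thinking}\n\n{reply}"
    # Hypothetical error handling: acknowledge the mismatch rather than hide it.
    return f"{reply}\n\n(Note: my earlier reasoning did not fully carry through to this answer.)"


print(present("Suggest a short walk routine.", "Try a short walk each morning."))
print(present("Acknowledge their frustration first.", "Here is a meal plan."))
```

A semantic comparison, such as embedding similarity or a dedicated consistency classifier, would be a more realistic choice; the structure of checking first and then either showing the reasoning or acknowledging the gap is the part that carries over.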

The study demonstrates a critical interplay between system structure and perceived behavior, mirroring the principles of holistic design. Displaying a chatbot’s reasoning, whether framed as emotional support or expertise, isn’t merely cosmetic; it fundamentally alters how users interpret the interaction. This echoes the idea that modifying one part of a system triggers a domino effect: even subtle changes to the ‘thinking’ display significantly influence perceptions of empathy and competence. As David Hilbert noted, “One must be able to say at all times what one knows, and what one does not.” Making the chatbot’s process visible in this way builds trust and lets users assess the system’s capabilities more accurately, reinforcing the importance of a coherent, understandable architecture.

Where Do We Go From Here?

The demonstration that visible reasoning, even when simulated, alters perceptions of a conversational agent compels a re-examination of what constitutes ‘intelligence’ in these systems. It is not sufficient to achieve a correct answer; the perception of a thoughtful process, however superficial, fundamentally shapes the user experience. This raises a critical question: what are these systems actually optimized for? Is the goal truly effective problem-solving, or is it the construction of a believable, and therefore acceptable, illusion of thought? The distinction is perhaps more crucial than currently acknowledged.

Future work must move beyond simply showing thinking to understanding what kind of thinking is most beneficial, and for whom. The research suggests a nuanced relationship between the perceived rationale (emotional support versus expertise) and user response. A truly robust theory would account for individual differences, task complexity, and the contextual factors that modulate trust and engagement. Simplicity, in this case, is not minimalism but the discipline of distinguishing the essential signals of cognition from the accidental noise.

Ultimately, the field faces a challenge of coherence. Visible thinking, as currently implemented, feels like a cosmetic addition. A deeper integration with the underlying reasoning architecture is required. Systems should not merely display a process; they should be built around a transparent, explainable core. Only then will visible thinking move beyond a user interface trick and become a foundational element of genuinely intelligent interaction.

Original article: https://arxiv.org/pdf/2601.16720.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-26 16:24