Author: Denis Avetisyan

A new system uses artificial intelligence to generate realistic user interactions, dramatically increasing the speed and scale of usability evaluations.

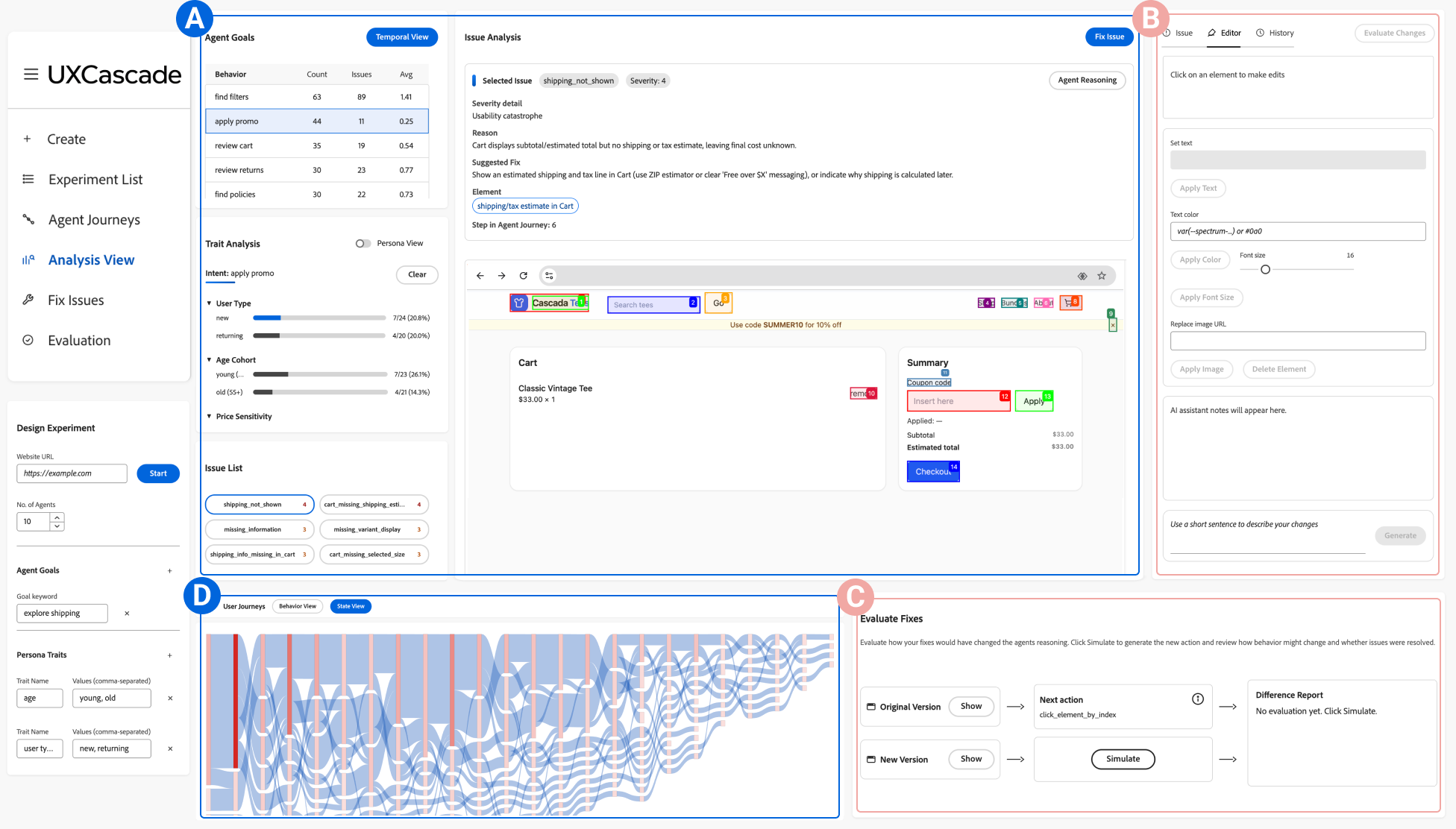

UXCascade leverages large language model agents to simulate usability testing, providing designers with actionable insights for iterative interface refinement.

Despite the growing need for rapid iteration in user experience (UX) design, traditional usability testing struggles to scale with modern development cycles. This paper introduces UXCascade: Scalable Usability Testing with Simulated User Agents, an interactive system that leverages large language model (LLM) agents to generate and analyze usability feedback at scale. UXCascade operationalizes a multi-level analysis workflow, linking agent reasoning to specific interface issues and facilitating actionable design improvements through a structured overview of persona traits, goals, and outcomes. Can this approach unlock more efficient and insightful usability evaluations, ultimately accelerating the design of user-centered interfaces?

Deconstructing Usability: The Limits of Human-Centric Testing

Conventional usability testing, while valuable, places a significant strain on development resources. The process typically involves recruiting participants, crafting testing scenarios, and then dedicating substantial time to observing and analyzing individual user interactions. This approach struggles to capture the breadth of real-world user diversity (variations in technical proficiency, cultural background, accessibility needs, and usage contexts), resulting in a limited and potentially skewed understanding of how a product will perform across its entire audience. Consequently, development teams frequently hit bottlenecks as they try to reconcile limited usability data with the need for rapid iteration and deployment, which can delay product launches and compromise the overall user experience.

The painstaking process of manually reviewing user interaction data presents a significant hurdle in usability testing. Each click, swipe, and hesitation requires individual assessment, demanding substantial time and resources, particularly with larger datasets. More critically, this manual approach introduces unavoidable subjective bias; an analyst’s interpretation of a user’s actions can be influenced by their own preconceptions and expectations, potentially overlooking crucial usability issues or misinterpreting user intent. Consequently, the depth of insights derived from such analyses is often limited, hindering a comprehensive understanding of user behavior and potentially leading to design decisions based on incomplete or skewed information. This reliance on human interpretation, while offering nuanced observation, ultimately restricts the scalability and objectivity necessary for robust usability evaluation.

The prevailing approach to usability testing frequently surfaces issues only late in development, or even after public launch. This reactive stance creates a cascade of negative consequences, beginning with diminished user satisfaction and potentially leading to brand damage. Addressing these post-launch problems requires costly, time-consuming revisions, often involving code rewrites, design overhauls, and repeated testing cycles. Moreover, the delay between identifying an issue and shipping a fix can erode user trust and open the door for competitors. A shift toward proactive identification, integrated throughout the development process, is therefore crucial for minimizing these risks, improving user experiences, and reducing long-term costs.

![The UX development cycle integrates key stages (research, design, implementation, and evaluation) with methods like usability testing and A/B testing to ensure user-centered design.](https://arxiv.org/html/2601.15777v1/figures/ux-pipeline.png)

Simulating the User: Synthetic Data and the LLM Revolution

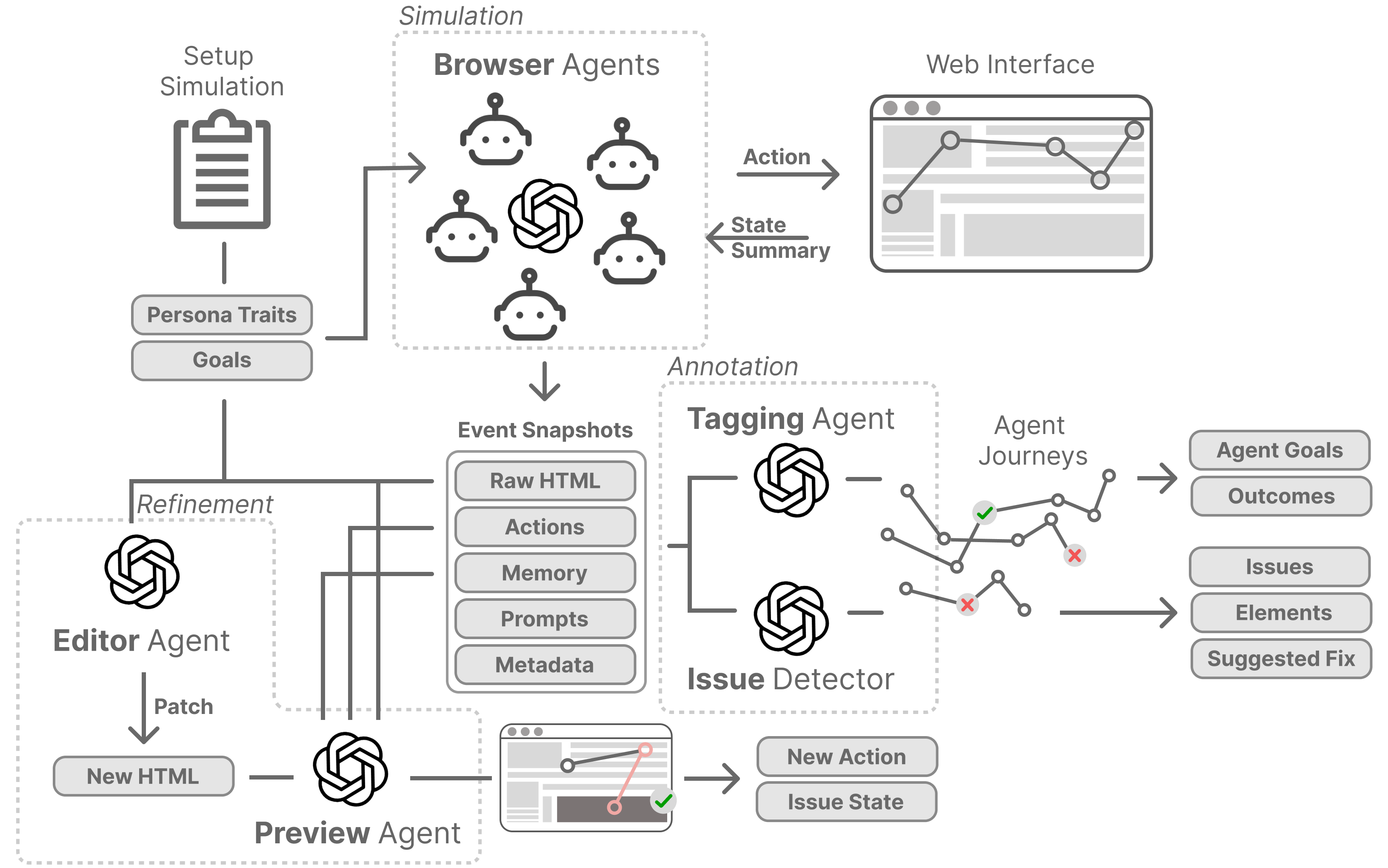

UXCascade is a system designed to generate user interaction data through the use of ‘Simulation Agents’. These agents are powered by Large Language Models (LLMs), enabling them to emulate user behavior and produce realistic interaction patterns. The system functions by prompting LLMs with specific tasks and observing the resulting actions, which are then recorded as interaction data. This allows for the creation of synthetic datasets that can be used for usability testing, A/B testing, and other forms of UX research, without the need for live user participation. The generated data includes simulated user inputs, navigation paths, and task completion times, providing a quantifiable basis for evaluating user interface designs.
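To make "interaction data" concrete, the sketch below models one simulated session as a list of timestamped events, from which the navigation path and task completion time described above can be derived. The class and field names (`InteractionEvent`, `SimulationTrace`, `timestamp_s`, and so on) are hypothetical illustrations, not UXCascade's actual schema.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class InteractionEvent:
    """One simulated user action (hypothetical schema)."""
    timestamp_s: float   # seconds since task start
    action: str          # e.g. "click", "type", "scroll"
    target: str          # identifier of the UI element acted on
    page: str            # page or screen where the action happened


@dataclass
class SimulationTrace:
    """Synthetic interaction data for one persona attempting one task."""
    persona_id: str
    task: str
    completed: bool = False
    events: List[InteractionEvent] = field(default_factory=list)

    def navigation_path(self) -> List[str]:
        """Pages in the order visited, collapsing consecutive repeats."""
        path: List[str] = []
        for event in self.events:
            if not path or path[-1] != event.page:
                path.append(event.page)
        return path

    def completion_time_s(self) -> float:
        """Timestamp of the last recorded event, or 0.0 for an empty trace."""
        return self.events[-1].timestamp_s if self.events else 0.0


trace = SimulationTrace("novice-01", "buy a ticket", completed=True)
trace.events += [
    InteractionEvent(0.0, "click", "nav-events", "home"),
    InteractionEvent(4.2, "click", "event-card-3", "events"),
    InteractionEvent(9.8, "click", "buy-btn", "event-detail"),
]
print(trace.navigation_path())    # ['home', 'events', 'event-detail']
print(trace.completion_time_s())  # 9.8
```

In a real pipeline, the events would be filled in from the actions an LLM agent takes in a browser rather than written by hand.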

Persona-Driven Simulation employs parameterized user models, or ‘personas’, to generate varied interaction data. Each persona encapsulates a set of characteristics defining user behavior, including demographics, technical proficiency, goals, and task-completion strategies. These parameters are utilized by the simulation agents to mimic realistic user actions within a user interface, introducing diversity in interaction patterns beyond those achievable with a limited number of human testers. By adjusting the parameters within these personas, researchers can systematically explore how variations in user profiles impact usability metrics and identify potential design flaws affecting specific user segments. This approach enables testing with a wider range of behavioral patterns than traditional methods, facilitating a more comprehensive evaluation of user experience.
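Systematically adjusting persona parameters can be pictured as sweeping a grid of trait values and rendering each combination into an instruction for a simulation agent. This is a minimal sketch under assumed traits; the `Persona` fields, the prompt wording, and `persona_grid` are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass
from itertools import product
from typing import List


@dataclass(frozen=True)
class Persona:
    """Hypothetical persona parameters; the paper's actual schema may differ."""
    name: str
    tech_proficiency: str   # "low" | "medium" | "high"
    goal: str
    strategy: str           # e.g. "explore menus" or "use the search bar"

    def to_prompt(self) -> str:
        """Render the persona as an instruction for an LLM simulation agent."""
        return (
            f"You are {self.name}, a user with {self.tech_proficiency} technical "
            f"proficiency. Your goal: {self.goal}. You typically {self.strategy}."
        )


def persona_grid(goal: str, proficiencies: List[str], strategies: List[str]) -> List[Persona]:
    """Vary two traits systematically, producing every combination for one goal."""
    return [
        Persona(f"user-{i}", prof, goal, strat)
        for i, (prof, strat) in enumerate(product(proficiencies, strategies))
    ]


personas = persona_grid(
    "book a doctor's appointment",
    ["low", "medium", "high"],
    ["explore menus", "use the search bar"],
)
print(len(personas))  # 6
print(personas[0].to_prompt())
```

A full factorial grid grows quickly as traits are added, so in practice one might sample combinations instead of enumerating all of them.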

Traditional usability testing is often resource-intensive, requiring significant time for participant recruitment, test setup, and data analysis, alongside considerable financial investment. The study reports that automated simulation driven by Large Language Models substantially lowers both the time and the cost of UX evaluation. Specifically, the system generates user interaction data at scale, enabling broader test coverage (simulating interactions from numerous user profiles concurrently) without the logistical constraints of recruiting and scheduling human participants. The approach is designed to complement existing UX workflows rather than replace them, providing rapid feedback on design iterations and surfacing potential usability issues early in the development cycle, which accelerates the overall testing process.
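The scale argument rests on running many persona sessions at once. Because each LLM call is I/O-bound, a thread pool is one simple way to fan sessions out. The sketch below replaces the agent with a seeded random stub so it runs offline; `run_session`, `run_batch`, and the completion rule are hypothetical, not UXCascade's code.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def run_session(persona_id: str, task: str, seed: int) -> Dict[str, object]:
    """Hypothetical stand-in for one LLM-driven simulation session.

    A real simulation agent would call an LLM here; this stub draws a
    pseudo-random step count so the fan-out logic runs offline.
    """
    rng = random.Random(seed)  # per-session RNG makes every run reproducible
    steps = rng.randint(3, 12)
    return {"persona": persona_id, "task": task, "steps": steps, "completed": steps <= 9}


def run_batch(persona_ids: List[str], task: str, max_workers: int = 8) -> List[Dict[str, object]]:
    """Run one session per persona concurrently; results keep the input order."""
    n = len(persona_ids)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in submission order, not completion order
        return list(pool.map(run_session, persona_ids, [task] * n, range(n)))


results = run_batch([f"persona-{i}" for i in range(20)], "reset password")
rate = sum(r["completed"] for r in results) / len(results)
print(f"{len(results)} sessions, completion rate {rate:.0%}")
```

Threads suit this workload because the time is spent waiting on network responses; an `asyncio` client would be an equally reasonable choice.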

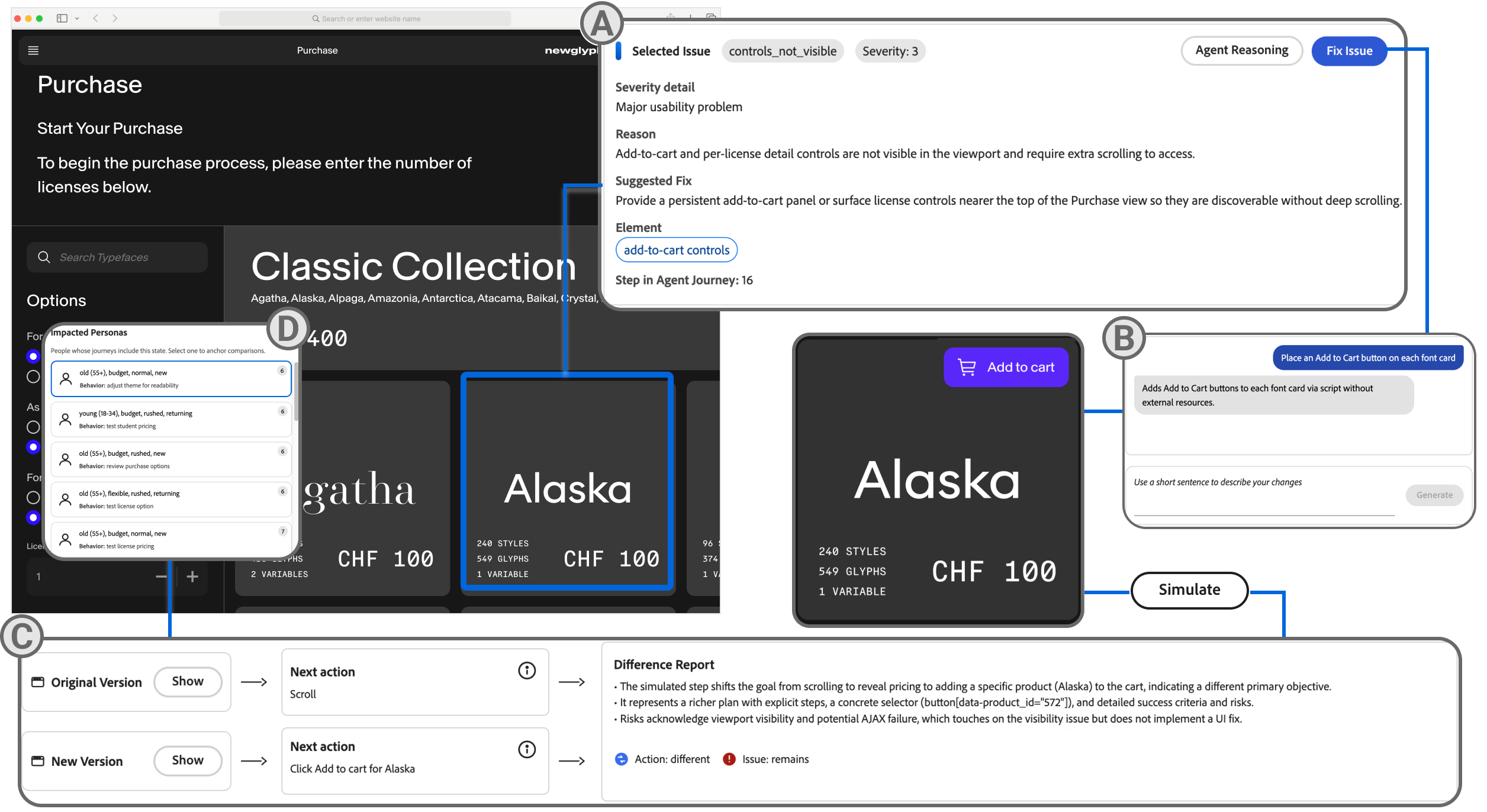

Decoding Intent: Automated Analysis of Simulated Interactions

UXCascade uses ‘Annotation Agents’ to analyze simulation traces, which record user interactions within a simulated environment. These agents identify the cognitive intent behind each user action (what the user was trying to achieve) and then correlate that intent with observed usability challenges. The agents process data such as mouse movements, clicks, and time spent on tasks to pinpoint specific usability issues like inefficient workflows, confusing interface elements, or flaws in information architecture. This automated analysis goes beyond simple error detection to explain why a user might struggle, enabling a more nuanced understanding of usability problems than traditional methods often provide.
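In UXCascade the annotation is done by LLM agents. The sketch below substitutes a plainly rule-based stand-in to show the kind of trace signals such an agent might reason over: long hesitation before an action, repeated clicks on the same element, and backtracking to an earlier page. The heuristics, the 8-second threshold, and all names are hypothetical.

```python
from typing import List, NamedTuple


class Event(NamedTuple):
    t: float      # seconds since task start
    action: str
    target: str
    page: str


class Issue(NamedTuple):
    index: int    # position of the triggering event in the trace
    kind: str
    detail: str


def annotate(events: List[Event], pause_s: float = 8.0) -> List[Issue]:
    """Rule-based stand-in for an LLM annotation agent (hypothetical heuristics)."""
    issues: List[Issue] = []
    visited: List[str] = []  # pages in visit order, consecutive repeats collapsed
    for i, ev in enumerate(events):
        if i > 0:
            prev = events[i - 1]
            gap = ev.t - prev.t
            if gap > pause_s:
                issues.append(Issue(i, "hesitation", f"{gap:.1f}s before {ev.action} on {ev.target}"))
            if ev.action == prev.action == "click" and ev.target == prev.target:
                issues.append(Issue(i, "repeated_click", f"{ev.target} may look unresponsive"))
        if ev.page in visited and visited[-1] != ev.page:
            issues.append(Issue(i, "backtrack", f"returned to {ev.page}"))
        if not visited or visited[-1] != ev.page:
            visited.append(ev.page)
    return issues


trace = [
    Event(0.0, "click", "menu", "home"),
    Event(2.0, "click", "settings", "menu"),
    Event(12.5, "click", "save", "settings"),
    Event(13.0, "click", "save", "settings"),
    Event(15.0, "click", "back", "menu"),
]
for issue in annotate(trace):
    print(issue.index, issue.kind, issue.detail)
# flags events 2 (hesitation), 3 (repeated_click), 4 (backtrack)
```

Fixed rules like these only detect symptoms; the point of an LLM annotator is to attach an inferred intent and an explanation to each one.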

Agent-Generated Feedback within UXCascade consists of reports detailing potential usability issues identified through the analysis of simulation traces. These reports are structured to provide concise summaries of observed problems, including specific instances where user cognitive intent deviated from expected system behavior. The feedback is designed to be directly actionable, outlining the nature of the issue and the location within the simulated user experience where it occurred. Reports include data points such as the specific simulation frame, user action, and identified cognitive load, allowing developers to efficiently investigate and address identified problems without requiring extensive manual review of raw simulation data.
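A report entry carrying the data points listed above (simulation frame, user action, location, and cognitive load) might look like the following sketch, which serializes findings to JSON with the highest-load issues first. The field names and the three-level load scale are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class FeedbackItem:
    """One actionable finding; field names are hypothetical."""
    frame: int            # simulation frame (step) where the issue surfaced
    action: str           # the user action at that frame
    location: str         # UI element or page affected
    intent: str           # what the simulated user was trying to do
    issue: str            # what went wrong relative to that intent
    cognitive_load: str   # coarse rating: "low" | "medium" | "high"


def build_report(items: List[FeedbackItem]) -> str:
    """Serialize findings as JSON, highest cognitive load first."""
    order = {"high": 0, "medium": 1, "low": 2}
    ranked = sorted(items, key=lambda it: order[it.cognitive_load])
    return json.dumps([asdict(it) for it in ranked], indent=2)


report = build_report([
    FeedbackItem(14, "click 'Save'", "settings/save-btn", "save profile changes",
                 "no confirmation shown, so the user clicked again", "medium"),
    FeedbackItem(9, "scroll", "pricing", "compare plans",
                 "plan differences buried below the fold", "high"),
])
print(report)
```

Keeping each entry tied to a frame and location is what lets a developer jump straight to the relevant moment instead of replaying the raw trace.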

The UXCascade analysis workflow builds upon established usability testing methodologies by integrating AI-driven insights from ‘Annotation Agents’. This process doesn’t replace traditional methods, but rather augments them with automated identification of potential usability issues within simulation traces. Specifically, the workflow systematically processes simulation data, leverages agent-generated feedback to highlight areas of concern, and facilitates a more efficient review process. Validation studies demonstrated the system’s capacity to successfully identify usability problems that might be missed or require significantly more time to uncover using conventional usability testing alone.
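The multi-level workflow moves from individual sessions up to an overview linking persona traits and outcomes to interface locations. A minimal aggregation step, assuming each flagged issue arrives as a simple tuple, could look like this; the tuple shape and `overview` are hypothetical.

```python
from typing import Dict, List, Set, Tuple

# One flagged issue: (persona_id, persona_trait, task_completed, issue_location).
# This tuple shape is a hypothetical simplification for illustration.
Finding = Tuple[str, str, bool, str]


def overview(findings: List[Finding]) -> List[Tuple[str, int, int, List[str]]]:
    """Roll session-level findings up to one row per interface location.

    Each row is (location, personas affected, personas who failed the task,
    traits of affected personas), with the most widespread issue first.
    """
    rows: Dict[str, Dict[str, Set[str]]] = {}
    for persona, trait, completed, location in findings:
        row = rows.setdefault(location, {"personas": set(), "traits": set(), "failed": set()})
        row["personas"].add(persona)
        row["traits"].add(trait)
        if not completed:
            row["failed"].add(persona)  # each failing persona counted once per location
    summary = [
        (loc, len(r["personas"]), len(r["failed"]), sorted(r["traits"]))
        for loc, r in rows.items()
    ]
    return sorted(summary, key=lambda row: row[1], reverse=True)


findings = [
    ("p1", "low proficiency", False, "checkout/coupon-field"),
    ("p2", "low proficiency", True, "checkout/coupon-field"),
    ("p3", "high proficiency", False, "checkout/coupon-field"),
    ("p3", "high proficiency", False, "nav/search"),
]
for row in overview(findings):
    print(row)
```

Counting distinct personas rather than raw findings keeps one confused agent from inflating an issue's apparent reach.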

Dynamic Design: Iterative Refinement with Autonomous Agents

UXCascade uses specialized ‘Refinement Agents’, autonomous modules designed to directly manipulate the underlying HTML code of design prototypes. These agents aren’t simply reacting to user feedback; they actively edit HTML snapshots, translating qualitative assessments into precise structural and stylistic changes. This automated editing capability supports a dynamic design process in which suggestions, whether concerning button placement, color schemes, or content hierarchy, are immediately reflected in a revised prototype. The system bridges the gap between user input and tangible design alterations, enabling rapid iterations and a more fluid exploration of potential solutions without manual coding adjustments.
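One way to keep agent-driven HTML edits precise is to have the agent emit a structured edit (target element, attributes, new text) that deterministic code then applies to the snapshot. The sketch below does this with the standard library's ElementTree; the edit format and `apply_edit` are hypothetical, and the paper does not say how its agents perform edits.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Optional


def apply_edit(snapshot: str, element_id: str, attrs: Dict[str, str],
               text: Optional[str] = None) -> str:
    """Apply one structured edit to an HTML snapshot (hypothetical edit format).

    Assumes the snapshot is well-formed XHTML. Real-world HTML usually needs a
    tolerant parser (for example lxml.html or html5lib) instead of ElementTree.
    """
    root = ET.fromstring(snapshot)
    # ElementTree's limited XPath supports [@attr='value']; ".//*" searches descendants only
    target = root.find(f".//*[@id='{element_id}']")
    if target is None:
        raise KeyError(f"no element with id={element_id!r}")
    for name, value in attrs.items():
        target.set(name, value)   # add or overwrite each attribute
    if text is not None:
        target.text = text        # replace the element's leading text
    return ET.tostring(root, encoding="unicode")


snapshot = '<div><p>Total: $42</p><button id="buy" class="btn">Go</button></div>'
revised = apply_edit(snapshot, "buy", {"class": "btn btn-primary"}, text="Place order")
print(revised)
```

Separating "what to change" (decided by the model) from "how to change it" (plain code) makes every revision reproducible and easy to diff.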

Iterative refinement, a core principle within UXCascade, allows designers to move beyond static prototypes and embrace a dynamic cycle of creation and evaluation. Rather than building a complete design before gathering user feedback, this process fosters rapid prototyping, where designs are quickly generated, tested with users, and then immediately adjusted based on the insights gained. This accelerated loop dramatically shortens the design cycle, enabling exploration of numerous potential solutions in the time it would traditionally take to develop a single iteration. By continuously refining the design based on real-time feedback, developers can identify and address usability issues early on, ultimately leading to more intuitive and effective user experiences and a reduction in development costs.
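The create-evaluate-adjust cycle reduces to a loop that stops when evaluation finds nothing or a round budget runs out. In this sketch, `simulate` and `refine` are hypothetical callables standing in for the simulation/annotation and refinement agents, exercised here with toy functions over a string "design".

```python
from typing import Callable, List, Tuple


def refine_until_clean(
    design: str,
    simulate: Callable[[str], List[str]],      # returns issue descriptions for a design
    refine: Callable[[str, List[str]], str],   # returns a revised design
    max_rounds: int = 5,
) -> Tuple[str, List[int]]:
    """Simulate-refine loop; stops when no issues remain or the budget runs out.

    Returns the final design and the issue count observed in each round.
    If the budget runs out, the last refinement is returned without re-evaluation.
    """
    history: List[int] = []
    for _ in range(max_rounds):
        issues = simulate(design)
        history.append(len(issues))
        if not issues:
            break
        design = refine(design, issues)
    return design, history


def toy_simulate(design: str) -> List[str]:
    """Toy evaluator: reports each fix the design string does not yet contain."""
    return [f"add {fix}" for fix in ("label", "contrast") if fix not in design]


def toy_refine(design: str, issues: List[str]) -> str:
    """Toy refiner: fixes only the first reported issue per round."""
    return design + " +" + issues[0].split()[-1]


final, history = refine_until_clean("form", toy_simulate, toy_refine)
print(final, history)  # form +label +contrast [2, 1, 0]
```

The round budget matters with LLM agents, since a refiner can oscillate between designs without ever reaching zero reported issues.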

UXCascade significantly compresses the traditionally lengthy design cycle through automation, enabling quicker iterations and, ultimately, better product quality. The system’s ‘Refinement Agents’ don’t simply suggest changes; they enact them directly on HTML prototypes, drastically reducing the time spent on manual adjustments. Studies evaluating UXCascade’s impact revealed a tangible benefit for designers: lower NASA-TLX workload scores compared with conventional design methods. This indicates that, beyond speeding up the process, UXCascade also lessens the cognitive burden on design professionals, fostering a more efficient, less stressful workflow and leaving more room for creative problem-solving.
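For readers unfamiliar with the metric, NASA-TLX rates six subscales on a 0 to 100 scale. The Raw TLX is their unweighted mean, while the weighted TLX multiplies each rating by how often that subscale was chosen across 15 pairwise comparisons and divides by 15. This summary does not say which variant the study used, so the sketch computes both; the ratings and weights are made-up illustrative numbers.

```python
from typing import Dict, Optional

SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def nasa_tlx(ratings: Dict[str, float], weights: Optional[Dict[str, int]] = None) -> float:
    """NASA-TLX workload score on a 0-100 scale.

    Without weights this is the Raw TLX (unweighted mean of the six subscales).
    With weights, each is the number of times a subscale won one of the 15
    pairwise comparisons (0-5), so the weights must sum to 15.
    """
    if set(ratings) != set(SUBSCALES):
        raise ValueError("ratings must cover exactly the six TLX subscales")
    if weights is None:
        return sum(ratings.values()) / len(SUBSCALES)
    if sum(weights.values()) != 15:
        raise ValueError("pairwise-comparison weights must sum to 15")
    return sum(ratings[s] * weights.get(s, 0) for s in SUBSCALES) / 15


ratings = {"mental": 70, "physical": 10, "temporal": 50,
           "performance": 30, "effort": 60, "frustration": 40}
weights = {"mental": 5, "physical": 0, "temporal": 3,
           "performance": 2, "effort": 4, "frustration": 1}
print(round(nasa_tlx(ratings), 1))  # 43.3 (raw)
print(nasa_tlx(ratings, weights))   # 56.0 (weighted)
```

The two variants can differ noticeably for the same participant, which is why comparisons across conditions should use the same variant throughout.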

The pursuit of scalable usability testing, as demonstrated by UXCascade, requires a willingness to dismantle conventional approaches. Every exploit starts with a question, not with intent. A line attributed to Andrey Kolmogorov captures this sentiment: “The shortest path between two truths runs through a labyrinth of uncertainties.” UXCascade embodies this principle by actively probing interface limitations through simulated user agents, navigating that labyrinth. By systematically challenging designs with LLM-driven personas, the system doesn’t merely validate existing assumptions; it purposefully seeks out vulnerabilities, mirroring the spirit of intellectual deconstruction essential to understanding interactive systems and achieving iterative refinement.

What’s Next?

UXCascade demonstrates a predictable outcome: automation will attempt to solve the fundamentally human problem of interface evaluation. The system’s reliance on LLM agents, while scaling usability testing, merely shifts the bottleneck. The quality of insight now depends directly on the quality of the simulated ‘user’, a construct as prone to bias and illogic as any flesh-and-blood participant. The next iteration won’t be about more testing but about more accurate simulation, which demands a deeper understanding of how these agents model cognitive processes and an acknowledgment of the inherent limits of that model.

A crucial, and largely unaddressed, question lingers: at what point does a sufficiently detailed simulation become a user? If an agent can convincingly mimic human interaction, exhibiting frustration, confusion, or satisfaction, does the source of that response even matter? This work invites a re-evaluation of usability testing itself: perhaps the true goal isn’t to eliminate errors, but to design systems robust enough to accommodate them.

Ultimately, the best hack is understanding why it worked; every patch is a philosophical confession of imperfection. The pursuit of scalable usability isn’t about building a perfect system, but about meticulously documenting its flaws and building tools to exploit (or elegantly circumvent) them. The field must now focus on quantifying the distance between simulation and genuine user experience, and accepting that some gaps may prove insurmountable.

Original article: https://arxiv.org/pdf/2601.15777.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-26 02:55