Author: Denis Avetisyan

A new approach leveraging the power of artificial intelligence is poised to redefine the limits of wireless communication systems.

Large Wireless Foundation Models offer enhanced generalization and performance within the constraints of real-world hardware and stringent system requirements.

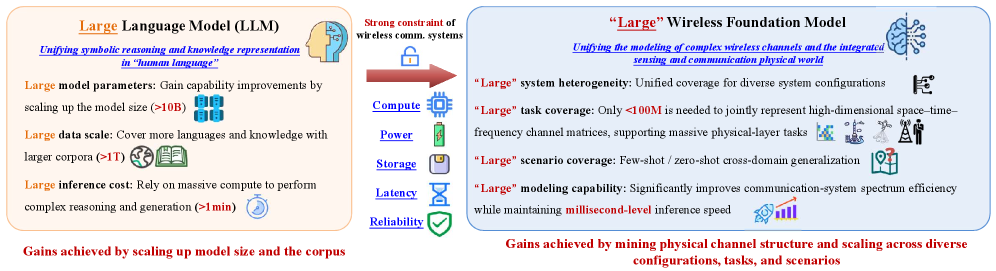

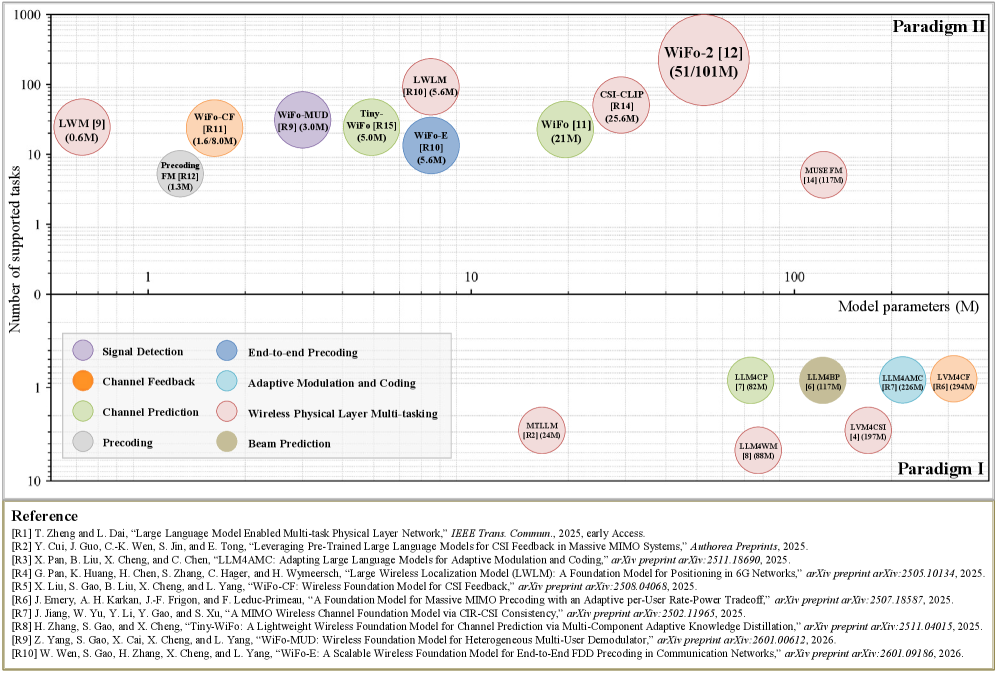

While current AI-driven wireless designs often rely on narrowly focused, task-specific models, the inherent generality of communication systems demands a more adaptable approach. This paper, ‘Large Wireless Foundation Models: Stronger over Bigger’, introduces the concept of Large Wireless Foundation Models (LWFMs), a paradigm shift that applies the reasoning and generalization capabilities of foundation models to the specific constraints of the wireless domain. By outlining novel frameworks and design principles, we demonstrate that LWFMs offer a path toward more robust and versatile wireless systems, with performance gains that come from design rather than from simply increasing model size. How can we best navigate the trade-offs between model complexity, hardware limitations, and the ever-increasing demands of future wireless networks?

The Inevitable Complexity of Wireless Evolution

The anticipated arrival of 6G wireless technology necessitates a significant leap in both data transmission speeds and responsiveness, placing unprecedented strain on established techniques for understanding and predicting signal behavior. Current channel modeling and signal processing methods, often built upon manually designed features, are proving inadequate to capture the intricacies of increasingly complex and rapidly changing wireless environments. Achieving the terabit-per-second data rates and sub-millisecond latency promised by 6G requires moving beyond these traditional approaches, as their reliance on pre-defined parameters limits adaptability and predictive accuracy in real-world scenarios. This demand for enhanced performance is driving research into innovative solutions capable of handling the sheer volume of data and dynamic conditions inherent in next-generation wireless networks.

Traditional wireless communication systems often rely on meticulously designed, or “hand-crafted,” features to interpret and manage radio signals. These features, developed through extensive engineering analysis, attempt to anticipate and compensate for the various ways signals degrade as they travel through the air. However, modern wireless environments are becoming increasingly complex and dynamic – think dense urban areas with reflections off buildings, or rapidly changing indoor spaces. These environments present scenarios that are difficult, if not impossible, to fully anticipate with pre-defined features. Consequently, these systems struggle to maintain reliable connections and optimal performance when faced with unforeseen interference, signal blockage, or rapidly fluctuating conditions. The inherent inflexibility of hand-crafted features limits the ability of these systems to adapt to the ever-changing realities of the wireless world, creating a need for more intelligent and adaptive solutions.

The advent of artificial intelligence presents a paradigm shift in wireless communication, moving beyond manually designed systems to data-driven approaches. Instead of relying on pre-defined models and hand-crafted features to interpret and optimize signal transmission, AI algorithms can learn directly from the complexities of the wireless environment. This capability allows for the establishment of intricate mappings between received signals and channel characteristics without requiring prior assumptions about the environment’s structure. Consequently, systems can adapt dynamically to changing conditions – such as interference or user density – and optimize performance in ways previously unattainable, promising significant advancements in data rates, reliability, and overall network efficiency as wireless technologies continue to evolve.

Foundation Models: A New Order of Adaptability

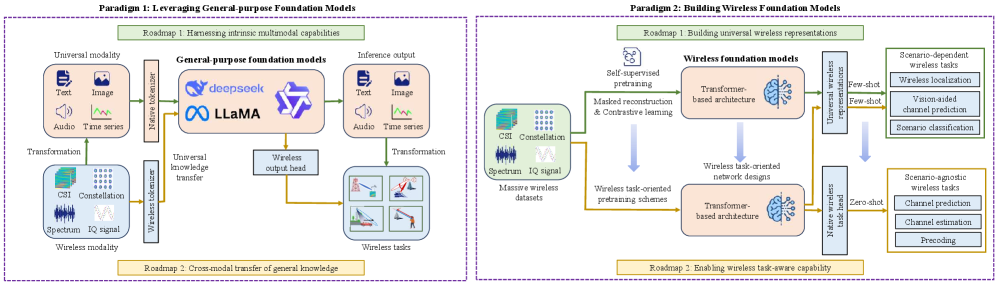

Foundation models represent a departure from traditional wireless system design, which typically requires task-specific training for each new function. These models are initially trained on extremely large and varied datasets – often encompassing text, images, and other modalities – and subsequently adapted, or “fine-tuned,” for specific wireless applications. This pre-training process enables a single model to perform a range of tasks, including signal processing, channel estimation, and resource allocation, without requiring extensive re-training for each new scenario. The adaptability stems from the model’s ability to learn generalized representations of data, allowing it to transfer knowledge across different tasks and environments, ultimately reducing development time and improving system performance in dynamic wireless conditions.

The adaptability of Large Language Models (LLMs) and Large Vision Models (LVMs) enables the development of Wireless Domain-Specific Foundation Models. This extension involves fine-tuning pre-trained LLMs and LVMs with datasets specific to wireless communications – including radio frequency (RF) signal characteristics, channel state information, and network topologies. Such fine-tuning allows these models to perform tasks such as signal classification, channel estimation, resource allocation, and interference management, all within the wireless environment. The resulting domain-specific models leverage the general knowledge embedded in the original LLMs and LVMs, while specializing in the intricacies of wireless systems and improving performance compared to models trained from scratch.
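The pre-train-then-adapt pattern described above can be illustrated with a toy sketch. This is not the paper's method: `frozen_backbone` is a hypothetical stand-in for a pretrained feature extractor, the downstream task is invented, and only a small linear head is trained (head-only adaptation, one of several possible fine-tuning strategies).

```python
import math
import random

def frozen_backbone(x):
    """Hypothetical stand-in for a pretrained feature extractor (weights fixed)."""
    return [math.sin(x), math.cos(x), 1.0]

def fine_tune_head(samples, lr=0.1, epochs=200):
    """Train only a linear head on frozen features with SGD on squared error."""
    head = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for x, target in samples:
            feats = frozen_backbone(x)          # backbone is never updated
            pred = sum(w * f for w, f in zip(head, feats))
            err = pred - target
            head = [w - lr * err * f for w, f in zip(head, feats)]
    return head

rng = random.Random(0)
# Invented downstream task: target = 2*sin(x) - cos(x) + 0.5, with 20 samples.
xs = [rng.uniform(-3.0, 3.0) for _ in range(20)]
samples = [(x, 2.0 * math.sin(x) - math.cos(x) + 0.5) for x in xs]

head = fine_tune_head(samples)
print([round(w, 3) for w in head])
```

Because the backbone already supplies useful features, the task is solved by fitting three numbers from twenty samples, which is the intuition behind adapting one pretrained model to many wireless tasks.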

Deep learning techniques, particularly artificial neural networks, provide the computational framework for extracting and modeling complex relationships inherent in wireless signals and propagation channels. These methods move beyond traditional signal processing by automatically learning features from raw data, eliminating the need for manually engineered representations. Specifically, architectures like convolutional neural networks (CNNs) excel at spatial data analysis relevant to channel estimation and interference mitigation, while recurrent neural networks (RNNs) are effective in processing the temporal dynamics of wireless communication. The ability of deep learning models to approximate non-linear functions allows for improved prediction of channel state information (CSI), enhanced modulation and coding schemes, and optimized resource allocation, ultimately leading to more robust and efficient wireless systems.

Large Wireless Foundation Models: A System’s Capacity to Evolve

Large Wireless Foundation Models (LWFMs) represent an adaptation of general Foundation Models to the specific demands of wireless communication systems. These models are designed to enhance performance in critical areas such as channel estimation and prediction, which are fundamental to reliable wireless data transmission and reception. By pre-training on extensive datasets of wireless signals and environments, LWFMs develop a generalized understanding of wireless propagation characteristics. This pre-training allows for efficient adaptation to new and unseen wireless scenarios with significantly reduced training data compared to task-specific artificial intelligence models, ultimately leading to improved accuracy and efficiency in wireless communication and sensing applications.

Large Wireless Foundation Models (LWFMs) utilize Time-Series Foundation Models and State-Space Models to process the inherent sequential nature of wireless data. These models are capable of learning complex temporal dependencies within radio frequency (RF) signals, enabling accurate analysis and forecasting of channel characteristics, signal propagation, and user mobility patterns. Specifically, State-Space Models provide a framework for representing the system’s internal state as it evolves over time, while Time-Series Foundation Models excel at identifying and extrapolating patterns within the sequential data. This approach allows LWFMs to predict future wireless conditions based on historical observations, improving the performance of various wireless applications.
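To make the state-space idea concrete, here is a minimal sketch using a classical linear Gaussian state-space model with a Kalman filter. This is an assumption chosen for illustration: the learned state-space layers inside a foundation model are far more expressive, and the parameters `a`, `q`, and `r` are hypothetical values, not figures from the paper.

```python
import random

def kalman_predict_channel(observations, a=0.95, q=0.1, r=0.5):
    """One-step-ahead prediction of a scalar channel gain.

    State model:       h[t] = a * h[t-1] + process noise (variance q)
    Observation model: y[t] = h[t] + measurement noise (variance r)
    """
    h_est, p_est = 0.0, 1.0      # initial state estimate and its variance
    predictions = []
    for y in observations:
        # Predict: propagate the hidden state through the dynamics.
        h_pred = a * h_est
        p_pred = a * a * p_est + q
        predictions.append(h_pred)   # uses only observations before time t
        # Update: correct the prediction with the new observation.
        gain = p_pred / (p_pred + r)
        h_est = h_pred + gain * (y - h_pred)
        p_est = (1.0 - gain) * p_pred
    return predictions

def simulate(n=500, a=0.95, q=0.1, r=0.5, seed=0):
    """Generate a synthetic channel trace and its noisy observations."""
    rng = random.Random(seed)
    h, truth, obs = 0.0, [], []
    for _ in range(n):
        h = a * h + rng.gauss(0.0, q ** 0.5)
        truth.append(h)
        obs.append(h + rng.gauss(0.0, r ** 0.5))
    return truth, obs

truth, obs = simulate()
pred = kalman_predict_channel(obs)
mse_kalman = sum((p - t) ** 2 for p, t in zip(pred, truth)) / len(truth)

# Naive baseline: reuse the previous noisy observation as the prediction.
naive = [0.0] + obs[:-1]
mse_naive = sum((p - t) ** 2 for p, t in zip(naive, truth)) / len(truth)
print(mse_kalman < mse_naive)
```

Tracking an internal state lets the predictor average out measurement noise over time, which is why it beats simply repeating the last observation on this synthetic trace.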

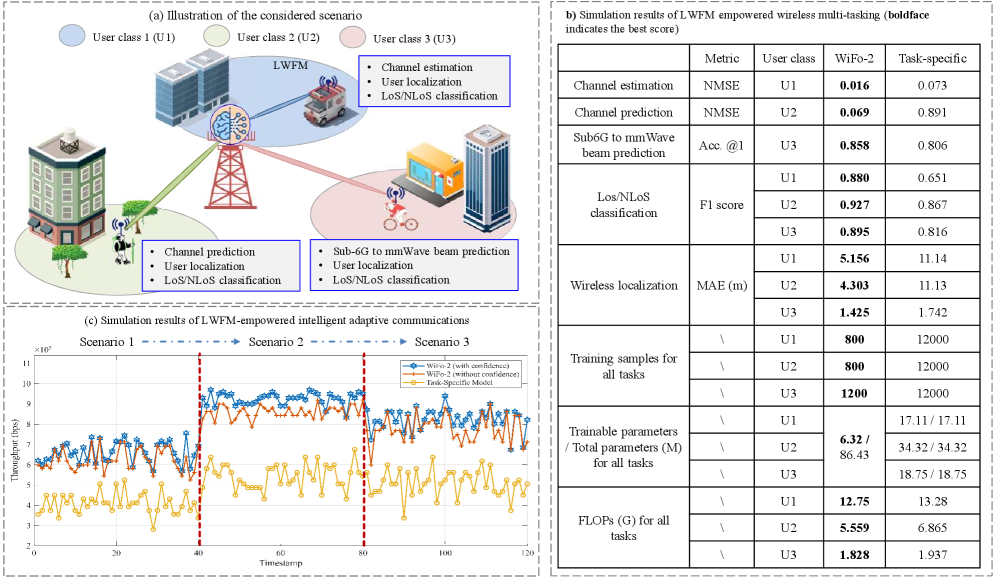

Simulations show that the WiFo-2 model achieves performance gains of 58.9% in wireless communication tasks and 30.8% in wireless sensing tasks when benchmarked against task-specific artificial intelligence models. Notably, these improvements come with much smaller data and parameter budgets: WiFo-2 requires only 7.8% of the training data and uses 9.0% of the trainable parameters of the task-specific models, indicating a substantial gain in data and parameter efficiency.
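As a quick sanity check on what those percentages mean, the fractions reported above can be converted into reduction factors. The variable names are illustrative, and only the two fractions come from the text.

```python
# Fractions reported for WiFo-2 relative to task-specific models.
data_fraction = 0.078    # share of training data used
param_fraction = 0.090   # share of trainable parameters used

# Reduction factor = 1 / fraction.
data_reduction = 1.0 / data_fraction
param_reduction = 1.0 / param_fraction

print(f"about {data_reduction:.1f}x less training data")
print(f"about {param_reduction:.1f}x fewer trainable parameters")
```

In other words, the reported figures correspond to roughly a 13-fold cut in training data and an 11-fold cut in trainable parameters.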

Deployment and Impact: The Network’s Emerging Intelligence

Large Wireless Foundation Models (LWFMs) represent a significant departure from conventional wireless network design, promising intelligent signal processing capabilities distributed across the entire network infrastructure. Traditionally, base stations (BSs) handled the bulk of signal processing, while edge devices operated with limited intelligence; LWFMs instead embed adaptable AI directly into both BSs and edge devices. This distributed intelligence allows for real-time optimization of signal transmission and reception, responding dynamically to changing network conditions and user demands. By moving beyond task-specific AI, LWFMs can generalize to a broader range of wireless scenarios, improving network efficiency, reducing latency, and ultimately enhancing the user experience across a multitude of connected devices.

Conventional artificial intelligence models designed for wireless networks typically address single tasks, such as noise reduction, beamforming, or channel estimation, creating a rigid and often inefficient system. LWFMs, by contrast, learn the underlying structure of wireless signals themselves. This allows a single LWFM to generalize across a multitude of wireless tasks, adapting to new and unforeseen challenges without requiring retraining for each specific scenario. Unlike task-specific models that become obsolete as network demands evolve, LWFMs offer a flexible and scalable solution, promising sustained performance improvements and reduced complexity in future wireless infrastructure. This adaptability is crucial as networks become increasingly heterogeneous and dynamic, demanding intelligent systems capable of handling diverse and rapidly changing conditions.

Recent advancements in Large Wireless Foundation Models (LWFMs) are yielding substantial performance gains in wireless communication networks, as evidenced by the WiFo-2 model. This model achieved a remarkable 64.9% improvement in throughput when contrasted with traditional, task-specific AI approaches, demonstrating its superior adaptability and generalization capabilities. Further refinement through the incorporation of confidence outputs yielded an additional 6.7% throughput boost, highlighting the importance of uncertainty awareness in signal processing. Crucially, the underlying Mixture-of-Experts (MoE) architecture significantly enhances both the capacity and efficiency of LWFMs, enabling them to effectively manage the complexities of modern wireless environments and paving the way for their deployment across a wide range of base stations and edge devices.
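The efficiency argument for a Mixture-of-Experts layer is easier to see in code. The sketch below shows generic top-k routing with toy experts. The source does not describe WiFo-2's actual routing scheme, so the gate, the experts, and `top_k` are all assumptions made for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, gate_weights, experts, top_k=2):
    """Route input x to the top_k highest-scoring experts and blend their outputs."""
    # Gate: one linear score per expert.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep only the top_k experts; the others are never evaluated.
    ranked = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)
    chosen = ranked[:top_k]
    weights = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen

# Hypothetical toy experts: each scales its input by a different factor.
experts = [lambda x, s=s: [s * xi for xi in x] for s in (1.0, 2.0, 3.0, 4.0)]
# Hypothetical gate: one weight row per expert.
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

output, chosen = moe_forward([2.0, 1.0], gate_weights, experts, top_k=2)
print(chosen, [round(v, 3) for v in output])
```

Total capacity grows with the number of experts, while per-input compute depends only on `top_k`, which is the sense in which such an architecture can raise capacity and efficiency together.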

The pursuit of ever-larger models, as demonstrated by Large Wireless Foundation Models, inevitably introduces complexities. This work acknowledges the trade-offs inherent in scaling: the need for efficient hardware and the constraints of real-world deployment. It’s a recognition that any simplification, even in the pursuit of generalization capability, carries a future cost. As Blaise Pascal observed, “All of humanity’s problems stem from man’s inability to sit quietly in a room alone.” This resonates with the challenge of building robust systems; the drive for increasingly complex solutions must be balanced against the elegance of fundamental principles and the practical realities of implementation. The system’s memory, in this case, is not just code, but the accumulated compromises made in the name of progress.

The Horizon Recedes

The emergence of Large Wireless Foundation Models signals a predictable shift: the leveraging of broadly capable systems to address the increasingly complex demands of wireless communication. Every failure, however, is a signal from time; the initial promise of scale will inevitably encounter the limitations imposed by hardware, energy constraints, and the non-stationary nature of the wireless environment. The question is not whether these models will falter, but how gracefully they will age.

Future work must prioritize not simply the expansion of model size, but the development of architectures that facilitate efficient adaptation and knowledge transfer. Refactoring is a dialogue with the past; the pursuit of generalizable wireless intelligence demands a rigorous understanding of which inductive biases are truly beneficial, and which represent merely the echoes of training data. The field will need to move beyond treating the wireless channel as a static entity, and embrace methods that allow models to continually learn and refine their understanding of its dynamic behavior.

Ultimately, the success of LWFMs, and of the broader ambition of AI-native wireless systems, will be judged not by peak performance in controlled settings, but by their resilience in the face of inevitable degradation and change. The horizon recedes as one approaches it; the true measure of progress lies in acknowledging this fundamental truth and designing systems accordingly.

Original article: https://arxiv.org/pdf/2601.10963.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-19 16:12