Author: Denis Avetisyan

As artificial intelligence increasingly contributes to news production, effectively communicating the division of labor between humans and machines is critical for maintaining public trust.

A new study examines how different information visualizations of human-AI collaboration affect user perceptions of authorship and transparency in journalistic content.

As artificial intelligence increasingly contributes to news production, current disclosure methods often fail to capture the nuanced collaboration between humans and machines. This research, ‘More Human or More AI? Visualizing Human-AI Collaboration Disclosures in Journalistic News Production’, investigates how different visual designs can effectively communicate the division of labor in news creation. Findings reveal that specific visualizations, such as chatbot interfaces, offer more detailed insights than simple textual labels and can even subtly shift perceptions of AI’s actual contribution. How can we design transparency tools that accurately reflect, rather than inadvertently reshape, the roles of humans and AI in shaping the news we consume?

Unveiling the Machine: Why Disclosure is Paramount

The proliferation of artificial intelligence in content creation is rapidly blurring the lines between human and machine authorship, necessitating a clear distinction to preserve trust and authenticity. As AI tools become increasingly adept at generating text, images, and even audio-visual media, audiences may unknowingly consume content crafted, at least in part, by algorithms. This opacity erodes confidence, as the perceived origin of information profoundly influences its reception and credibility. Establishing methods to reliably identify AI’s contribution – whether through watermarking, metadata tagging, or other techniques – is no longer simply a matter of ethical consideration, but a practical imperative for maintaining a functioning information ecosystem where audiences can critically evaluate the source and intent behind the content they encounter. Without such clarity, the very notion of authorship and the value placed upon genuine human creativity are placed at risk.

The proliferation of AI-generated content presents a significant risk of audience deception, potentially eroding trust in information sources. When the origin of content remains obscured, individuals may unknowingly accept machine-authored material as human-created, leading to misinformed decisions or the acceptance of biased perspectives. This lack of transparency doesn’t simply concern the content itself, but actively impedes the progress of beneficial Human-AI collaboration. Responsible development in this field necessitates a clear understanding of where AI begins and human input ends; without it, evaluating the strengths and weaknesses of these partnerships, or refining their performance, becomes impossible. Ultimately, concealing AI’s role stifles innovation and prevents the establishment of ethical guidelines crucial for navigating an increasingly AI-mediated world.

The bedrock of responsible innovation in artificial intelligence hinges on transparency and accountability, principles currently applied inconsistently to AI-generated content. While the potential benefits of AI are substantial, a lack of clear disclosure regarding its involvement erodes public trust and hinders meaningful oversight. This absence extends beyond simple labeling; it encompasses a broader need for documenting the datasets, algorithms, and creative processes underpinning AI outputs. Without standardized implementation of these principles, assessing the veracity of information, attributing authorship, and addressing potential biases become significantly more difficult, ultimately impeding the development of truly collaborative human-AI systems and potentially fostering a climate of misinformation. Establishing clear guidelines and enforcement mechanisms is therefore critical to unlock the full potential of AI while safeguarding societal values.

Deconstructing the Black Box: Visualizing AI’s Contribution

Effective AI disclosure necessitates visualizations that accurately convey the degree and character of AI involvement in content creation. Simply stating AI was used is insufficient; users require granular information regarding which specific tasks were performed by AI versus humans. Visualizations should clearly delineate the division of labor, indicating whether AI contributed to drafting, editing, fact-checking, or other content stages. The precision of this communication is critical; vague or misleading visualizations undermine trust and hinder user comprehension of the content’s origin and potential biases. Data suggests users respond more favorably to visualizations that offer detailed breakdowns of AI contributions, allowing for informed evaluation of the presented information.

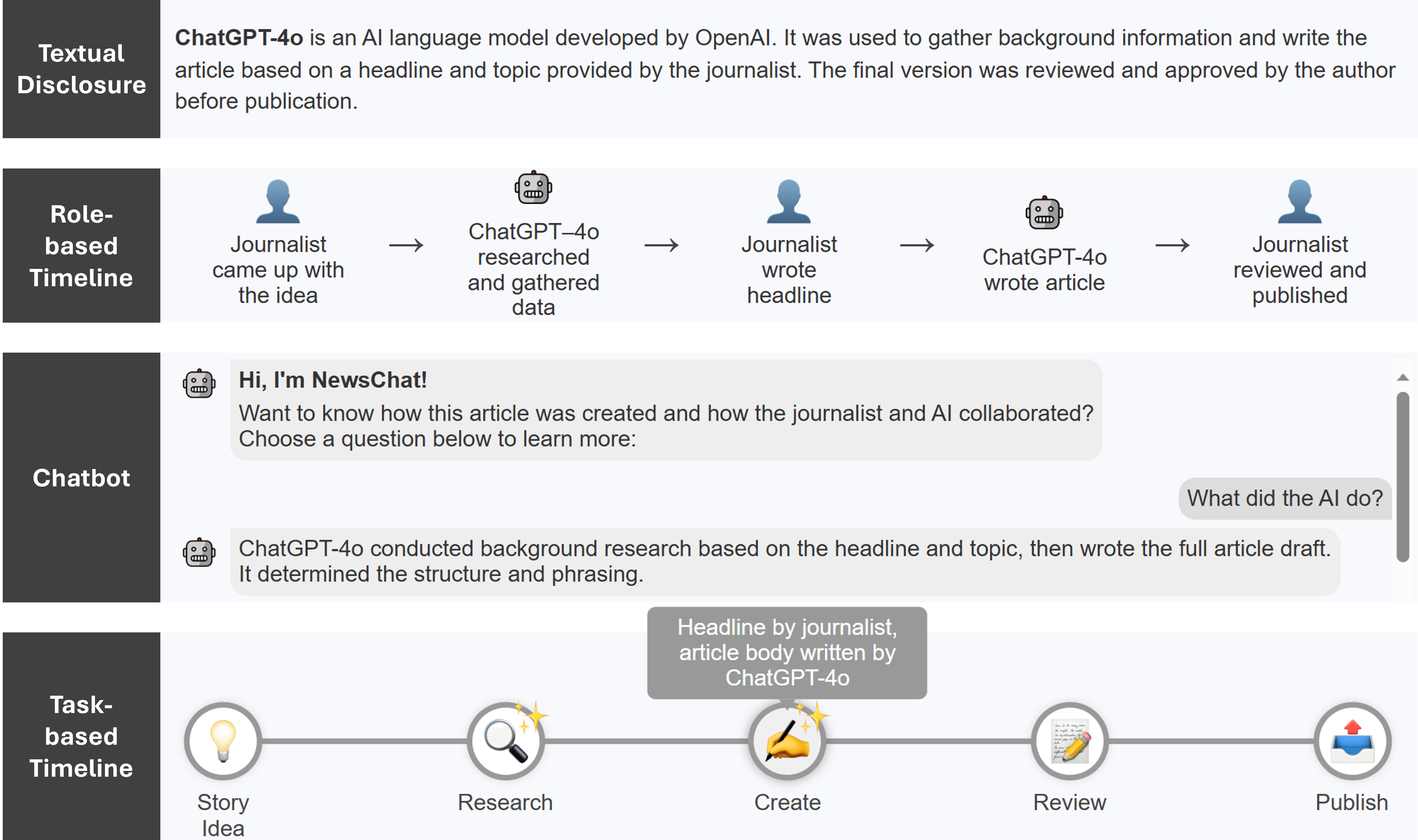

Research into AI disclosure visualizations included the development of two distinct timeline approaches: Task-based and Role-based. The Task-based Timeline visually represented the sequence of specific actions contributing to content creation, differentiating those performed by humans and AI. This approach focused on what actions were taken. Conversely, the Role-based Timeline categorized contributions by the agent – human or AI – responsible, emphasizing who performed each action. Both timelines aimed to clearly demarcate the division of labor, providing users with insight into the extent of AI involvement, but differed in their primary organizational principle and resulting emphasis.
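The difference between the two timelines can be sketched as two views over the same contribution log. This is a minimal illustration, not the study's implementation: the `Contribution` record, its field names, and the example tasks are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Contribution:
    step: int   # position in the production sequence (hypothetical field)
    task: str   # what was done, e.g. "drafting" (hypothetical labels)
    agent: str  # who did it: "human" or "ai"


def task_based_timeline(contribs):
    """Order entries by production step; emphasis is on WHAT happened."""
    return [(c.task, c.agent) for c in sorted(contribs, key=lambda c: c.step)]


def role_based_timeline(contribs):
    """Group entries by agent; emphasis is on WHO did the work."""
    lanes = defaultdict(list)
    for c in sorted(contribs, key=lambda c: c.step):
        lanes[c.agent].append(c.task)
    return dict(lanes)


# Hypothetical article history, invented for illustration only.
EXAMPLE = [
    Contribution(1, "research", "human"),
    Contribution(2, "drafting", "ai"),
    Contribution(3, "fact-checking", "human"),
    Contribution(4, "editing", "human"),
]
```

Both functions read the same records; only the organizing key changes, which is why the two designs can leave readers with different impressions of the same article.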

Progressive Disclosure was implemented through an interactive Chatbot Interface designed to detail the extent of AI involvement in content creation. User testing revealed this interface prompted significantly longer fixation durations and a higher count of saccades – rapid eye movements – compared to alternative visualization methods. These metrics suggest users explored the Chatbot Interface more actively than the other visualizations. Longer fixations and more saccades are consistent with closer scrutiny of the AI’s role, although gaze measures alone do not establish greater comprehension.
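For readers unfamiliar with these gaze metrics, the sketch below shows one common way to derive fixation durations and a saccade count from raw gaze samples, using a simple velocity threshold. The article does not say which detection method the study used, so the algorithm, the `classify_gaze` name, and the threshold value are assumptions for illustration.

```python
import math


def classify_gaze(samples, velocity_threshold=1.0):
    """Split time-ordered gaze samples into fixations and saccades.

    samples: list of (t_ms, x_px, y_px) tuples.
    velocity_threshold: px/ms; an arbitrary illustrative value.
    Returns (fixation_durations_ms, saccade_count).
    """
    fixations = []
    saccades = 0
    fix_start = None
    prev_label = None
    last_t = None
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            continue  # skip duplicate or out-of-order timestamps
        speed = math.hypot(x1 - x0, y1 - y0) / dt
        label = "fix" if speed < velocity_threshold else "sac"
        if label == "fix" and prev_label != "fix":
            fix_start = t0  # a new fixation begins
        if label == "sac":
            if prev_label == "fix":
                fixations.append(t0 - fix_start)  # close the fixation
            if prev_label != "sac":
                saccades += 1  # count each run of fast movement once
        prev_label = label
        last_t = t1
    if prev_label == "fix":
        fixations.append(last_t - fix_start)  # close a trailing fixation
    return fixations, saccades
```

Production eye-tracking pipelines usually add smoothing and minimum-duration filters; this version omits them to keep the two metrics easy to follow.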

Testing the Boundaries: A User-Centered Validation

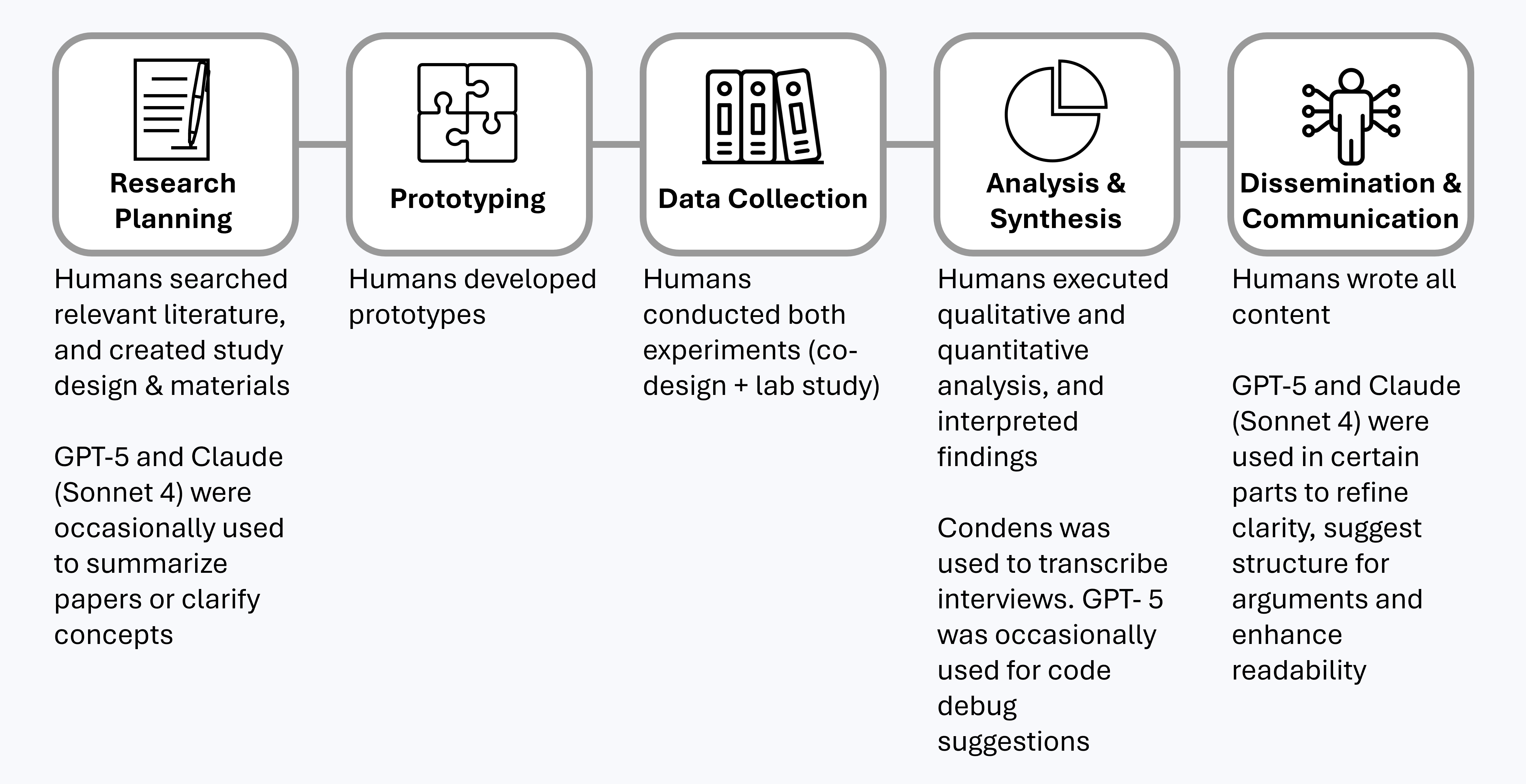

Co-design sessions were conducted with a diverse group of stakeholders – including writers, editors, AI developers, and potential end-users – to iteratively develop a range of disclosure visualizations. These sessions employed participatory design methods, focusing on understanding varying user information needs and preferences regarding AI involvement in content creation. Participants collaborated in sketching, prototyping, and providing feedback on initial visualization concepts, resulting in a set of disclosures varying in format, level of detail, and interaction style. This process prioritized user-centered design, ensuring the resulting visualizations addressed practical concerns and facilitated comprehension of AI’s role, rather than relying on pre-defined or technically-driven approaches to disclosure.

A controlled laboratory study was conducted to quantitatively assess the effectiveness of various disclosure visualizations in communicating the degree of AI involvement in content creation. Participants were exposed to different visualization methods while performing specific tasks, and their eye movements were recorded using gaze tracking technology. This allowed researchers to analyze where participants focused their attention, for how long, and in what order, providing objective data on the salience and comprehensibility of each visualization. Data collected from gaze tracking was correlated with self-reported measures of understanding and trust to determine which visualizations most effectively conveyed information about AI’s role in the content generation process. The study employed a within-subjects design, ensuring each participant was exposed to all visualization conditions, thereby controlling for individual differences in cognitive abilities and prior beliefs.

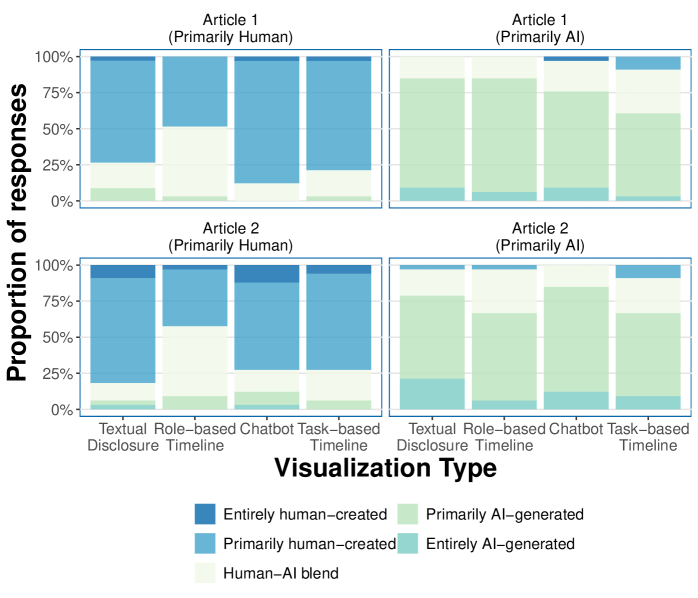

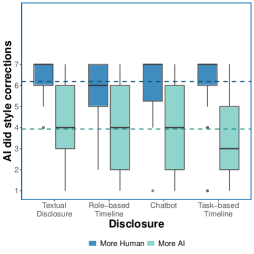

Evaluation of disclosure methods revealed that an interactive chatbot interface, when paired with visualizations of task and role division, substantially improved user comprehension and trust compared to textual disclosures and a standalone chatbot. Quantitative data showed both Role-based Timelines and Task-based Timelines received significantly higher clarity ratings from participants. Critically, the type of timeline impacted perception of authorship; Role-based timelines increased perceived AI contribution in articles predominantly authored by humans, while Task-based timelines shifted perceptions towards greater human involvement in articles primarily generated by AI. These findings indicate that visual disclosure, and specifically the chosen visualization type, can actively shape user understanding of AI’s role in content creation.

Beyond the Surface: Implications for a Transparent Future

Successfully disclosing artificial intelligence involvement extends far beyond technical implementation; it also requires bolstering public AI literacy and establishing robust accountability structures. Without widespread understanding of what AI is and how it functions, disclosures risk becoming meaningless jargon, failing to empower individuals to critically evaluate AI-generated content. Equally crucial is defining who is responsible when AI systems produce harmful or misleading outputs: is it the developer, the deployer, or the user? Clear accountability frameworks are essential for fostering trust, mitigating potential risks, incentivizing responsible AI development and deployment, and ensuring that disclosures are more than performative exercises in transparency. Addressing these intertwined challenges (knowledge gaps and responsibility assignment) is paramount to realizing the benefits of AI disclosure and fostering a future where AI systems are both powerful and trustworthy.

To bolster confidence in digitally disseminated information, the implementation of Provenance Signals offers a promising pathway towards verifiable authenticity. These signals function as embedded metadata, documenting the origin of a piece of content (text, image, or video) and any subsequent alterations made to it. Unlike simple watermarks, Provenance Signals are designed to be cryptographically secure and resistant to tampering, creating an auditable trail that allows recipients to independently verify the content’s lineage. This record extends beyond source identification, capturing details of the creative process, the editing history, and even the algorithms used in creation or modification. By providing this level of traceability, Provenance Signals empower individuals to assess the trustworthiness of digital content and distinguish genuine creations from manipulated or fabricated ones, fostering a more informed and resilient information ecosystem.
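The tamper-evident trail described above can be illustrated with a small hash chain in which each record carries a keyed digest and a link to the previous record. This is a toy sketch under stated assumptions, not a real provenance standard: the record fields, function names, and shared demo key are hypothetical, and deployed systems would typically use public-key signatures rather than a shared-secret HMAC.

```python
import hashlib
import hmac
import json


def _digest(record, key):
    """Keyed digest over a canonical JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def append_record(chain, actor, action, content, key):
    """Return a new chain with one more provenance entry appended."""
    record = {
        "actor": actor,    # e.g. "human" or "ai" (hypothetical labels)
        "action": action,  # e.g. "draft", "edit"
        "content_sha256": hashlib.sha256(content.encode("utf-8")).hexdigest(),
        "prev_mac": chain[-1]["mac"] if chain else "",
    }
    return chain + [{**record, "mac": _digest(record, key)}]


def verify_chain(chain, key):
    """Check every link and digest; any edited entry makes this False."""
    prev = ""
    for entry in chain:
        record = {k: v for k, v in entry.items() if k != "mac"}
        if record["prev_mac"] != prev:
            return False
        if not hmac.compare_digest(entry["mac"], _digest(record, key)):
            return False
        prev = entry["mac"]
    return True
```

Because each entry's digest covers the previous entry's digest, changing who is credited for an early step invalidates that entry and every link that follows it.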

Continued investigation must focus on the practical application of AI disclosure across a diverse range of media, extending beyond text to encompass images, audio, and video formats. Successfully integrating these techniques into current content creation workflows – encompassing tools used by journalists, artists, and everyday users – is paramount. This integration shouldn’t be viewed as an added burden, but rather as a seamless incorporation of verification protocols. Research should prioritize minimizing disruption to creative processes while maximizing the clarity and accessibility of AI-generated content provenance. Specifically, developing standardized metadata schemas and APIs will be crucial for interoperability and widespread adoption, ultimately fostering a more informed and trustworthy digital landscape.

The study meticulously details how visual cues, the very language of information, can subtly reshape understanding of collaborative processes. It’s a fascinating echo of Grace Hopper’s sentiment: “It’s easier to ask forgiveness than it is to get permission.” The research doesn’t simply report on human-AI collaboration; it demonstrates how easily the perception of that collaboration can be manipulated through design. Just as Hopper advocated for a proactive approach to innovation, this work implicitly suggests that transparency isn’t a passive state but something actively constructed (or, if carelessly handled, subtly undermined) through the choices made in visualizing information. The implications extend beyond news production; any system where humans and AI interact demands similar scrutiny.

Beyond Disclosure: The Ghosts in the Machine

The study demonstrates, with unsettling clarity, that the presentation of human-AI collaboration is as crucial as the collaboration itself. The research does not simply reveal a preference for human contribution – it reveals a malleability of perception, easily nudged by visual cues. One wonders if the goal should be seamless integration, obscuring the division of labor entirely, or a hyper-transparent system that broadcasts every algorithmic decision. The latter, while seemingly ideal, risks a paralysis of analysis, a constant questioning of every fact presented. Perhaps true security lies not in revealing the mechanism, but in acknowledging its inherent opacity.

Future work must address the long-term effects of these visualizations. Do repeated exposures to specific disclosure designs create predictable biases? Does the framing of AI’s role – as assistant, author, or something in between – fundamentally alter trust in news content? The current research provides a snapshot; longitudinal studies are needed to chart the evolution of public perception. Moreover, the focus should extend beyond visual design to encompass the language used to describe AI’s contributions – the subtle power of framing should not be underestimated.

Ultimately, this line of inquiry forces a reckoning with the very notion of authorship and objectivity. If a machine can generate text indistinguishable from human writing, and its contribution can be masked or highlighted at will, what does it mean to ‘know’ something? The study isn’t about AI disclosure; it’s about the limits of human perception and the uncomfortable realization that the stories we tell ourselves are often constructed, not discovered.

Original article: https://arxiv.org/pdf/2601.11072.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-19 12:38