Author: Denis Avetisyan

A new machine learning framework identifies the contributions of humans and robots in collaborative paintings, attributing individual patches with 88.8% accuracy.

Researchers developed a patch-based convolutional neural network to analyze spatial patterns and quantify uncertainty in human-robot collaborative artworks, enabling authorship attribution and identification of mixed-authorship regions.

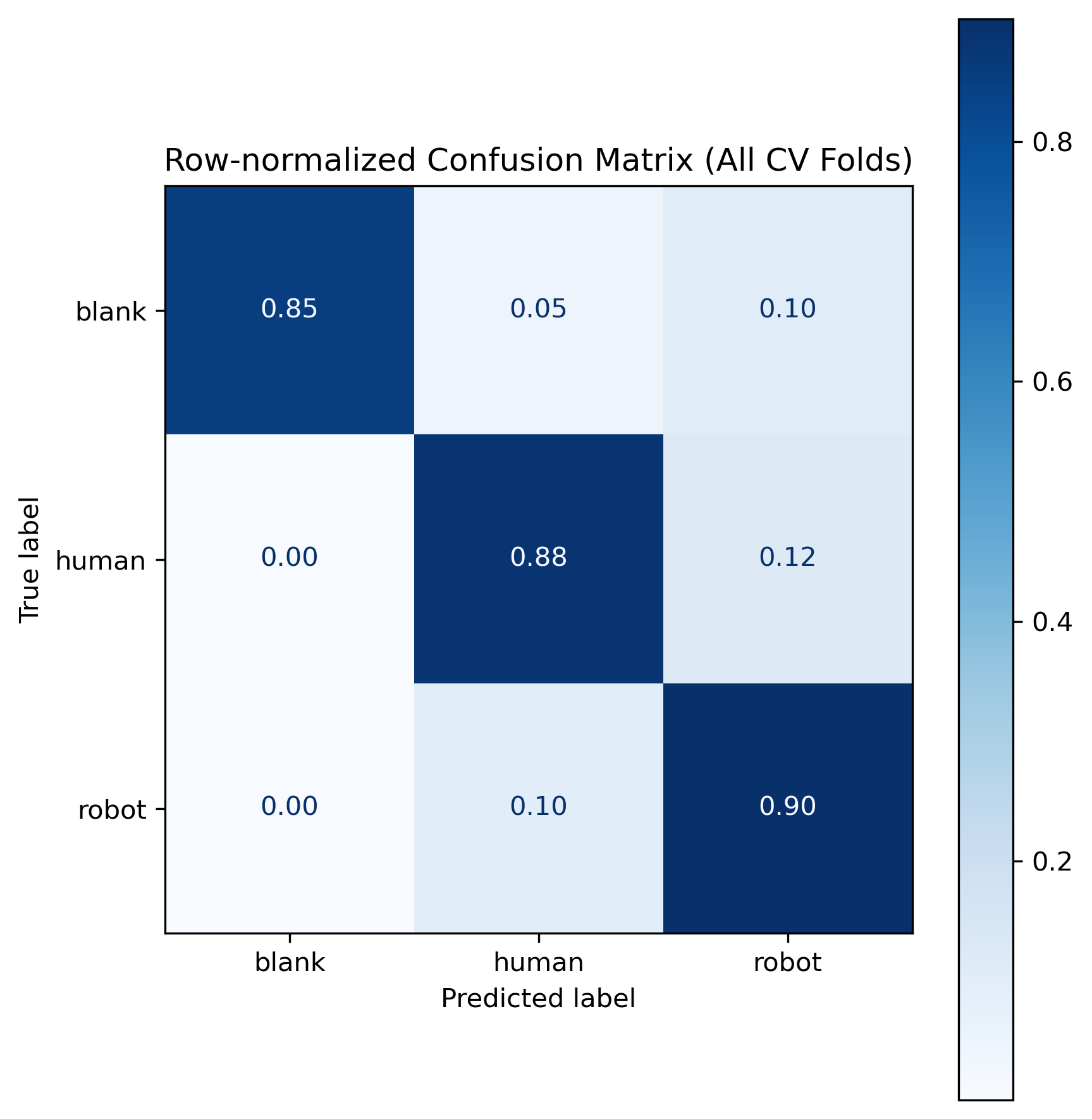

As creative agency increasingly shifts to artificial intelligence, establishing clear provenance for collaborative artworks presents a growing challenge. This is addressed in ‘Patch-Based Spatial Authorship Attribution in Human-Robot Collaborative Paintings’, which introduces a novel framework for identifying the contributions of human and robotic agents within a shared canvas. By employing a patch-based convolutional neural network, the authors demonstrate 88.8% accuracy in attributing individual regions of abstract paintings to either the human or the robot, and further quantify stylistic overlap in truly collaborative areas through conditional Shannon entropy. Could this methodology not only authenticate hybrid creations, but also offer insights into the evolving creative dialogue between humans and machines?

The Elusive Signature: Dissecting Style from the Human Hand

For centuries, discerning the characteristics of artistic style has been the domain of expert human analysis, a process inherently susceptible to individual bias and interpretation. While deeply insightful, this reliance on subjective evaluation presents fundamental limitations when attempting large-scale comparisons or analyses of vast art historical datasets. The nuances of brushstroke, color palette, and composition, though readily apparent to a trained eye, prove difficult to consistently and objectively quantify, hindering efforts to establish concrete metrics for stylistic comparison. Consequently, traditional methods struggle with scalability; the time and resources required for detailed manual assessment restrict the scope of inquiry and impede comprehensive studies of artistic evolution and influence. This inherent limitation has motivated the development of computational approaches designed to move beyond subjective appraisal and toward a more rigorous, data-driven understanding of artistic style.

Defining and computationally capturing artistic style proves remarkably difficult, extending beyond simple feature extraction. While characteristics like brushstroke texture, color palettes, and compositional elements can be digitized, their nuanced interplay – what distinguishes a Rembrandt from a Vermeer – resists straightforward quantification. Algorithms must account for both low-level visual features and their higher-level organization, often requiring sophisticated machine learning models trained on extensive datasets. The challenge isn’t merely identifying what is present in an artwork, but discerning how these elements are combined to create a unique aesthetic signature, a process that historically relied on the subjective expertise of art historians. Furthermore, variations within an artist’s oeuvre, or the influence of artistic movements, introduce complexities that demand robust and adaptable analytical frameworks; a single, universally applicable metric for style remains elusive.

The ability to accurately dissect artistic style holds profound implications for both the verification of creative origins and the charting of cultural shifts. Determining authorship – establishing whether a work genuinely belongs to a specific artist – increasingly relies on computational methods that analyze visual features, moving beyond traditional connoisseurship. Simultaneously, tracking the development of artistic movements benefits from objective style analysis; subtle changes in technique, composition, or subject matter, when aggregated across numerous works and artists, reveal the nuanced pathways of aesthetic evolution. This quantitative approach allows researchers to map the influence of one artist upon another, identify regional stylistic variations, and ultimately construct a more comprehensive understanding of art history, transforming the field from a largely interpretive discipline to one grounded in measurable data.

From Texture to Deep Features: Building a Stylistic Lexicon

Early attempts at automated style classification relied on hand-engineered texture descriptors, most notably Local Binary Patterns (LBP). LBP compares each pixel's neighbors against the pixel's own intensity and encodes the resulting binary pattern as an integer code. The codes are then aggregated into a histogram, providing a fixed-length feature vector for each image or image patch. While computationally efficient, LBP and similar hand-crafted features cannot capture the complex, high-level visual structure present in artistic styles, and thus serve primarily as a baseline against which to evaluate more advanced, data-driven methods like Convolutional Neural Networks.
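To make the LBP description concrete, here is a minimal sketch in plain Python. It assumes the basic 8-neighbor, radius-1 variant with a 256-bin histogram; the article does not say which LBP variant or parameters the baseline used, and the function names `lbp_code` and `lbp_histogram` are illustrative only.

```python
from collections import Counter

def lbp_code(img, r, c):
    """8-neighbour LBP code for the pixel at (r, c) of a 2D grayscale grid."""
    center = img[r][c]
    # Neighbours visited clockwise, starting at the top-left corner.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    code = 0
    for bit, (dr, dc) in enumerate(offsets):
        # A neighbour at least as bright as the center sets its bit.
        if img[r + dr][c + dc] >= center:
            code |= 1 << bit
    return code

def lbp_histogram(img):
    """Normalized 256-bin histogram of LBP codes over interior pixels."""
    rows, cols = len(img), len(img[0])
    counts = Counter(lbp_code(img, r, c)
                     for r in range(1, rows - 1)
                     for c in range(1, cols - 1))
    total = sum(counts.values())
    return [counts.get(k, 0) / total for k in range(256)]

# Toy 4x4 "patch": a bright square on a dark background.
patch = [
    [10, 10, 10, 10],
    [10, 50, 50, 10],
    [10, 50, 50, 10],
    [10, 10, 10, 10],
]
hist = lbp_histogram(patch)  # fixed-length feature vector for this patch
```

The histogram has the same length regardless of patch size, which is what lets a conventional classifier consume it directly.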

Convolutional Neural Networks (CNNs) were implemented utilizing a patch-based learning approach to directly analyze image data and extract features for style classification. This methodology involves dividing images into smaller, overlapping patches, which are then individually processed by the CNN to identify characteristic patterns. The resulting features, representing localized texture and brushstroke information, are used to differentiate between human and robotic painting styles. Evaluation of this approach yielded a patch-level accuracy of 88.8% in correctly classifying the style of individual image patches, demonstrating the effectiveness of CNNs in capturing nuanced stylistic differences directly from visual data.
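The patch-splitting step can be sketched as a sliding window. The patch size and stride below are placeholders chosen for a toy image, not values reported in the article, and `extract_patches` is a hypothetical helper name.

```python
def extract_patches(img, size, stride):
    """Yield (row, col, patch) for every size x size window, stepping by stride.

    Patches overlap whenever stride < size.
    """
    rows, cols = len(img), len(img[0])
    for r in range(0, rows - size + 1, stride):
        for c in range(0, cols - size + 1, stride):
            patch = [row[c:c + size] for row in img[r:r + size]]
            yield r, c, patch

# Toy 6x6 image whose pixel values encode their own position.
img = [[r * 6 + c for c in range(6)] for r in range(6)]
patches = list(extract_patches(img, size=4, stride=2))
```

Keeping the `(row, col)` origin alongside each patch is what allows per-patch predictions to be mapped back onto the canvas as a spatial authorship map.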

During training of the style classification model, a Weighted Cross-Entropy Loss function was implemented to mitigate the impact of potential class imbalances in the dataset. Standard Cross-Entropy Loss assigns equal weight to each class, which can lead to biased results if one style is significantly more represented than others. The Weighted Cross-Entropy Loss assigns higher weights to under-represented styles, effectively increasing the penalty for misclassifying instances of those styles. This weighting scheme encourages the model to learn more robust features for all styles, resulting in improved overall accuracy and preventing the model from being dominated by the majority class. The weights are inversely proportional to the class frequencies observed in the training data.
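As a rough illustration of inverse-frequency weighting, the sketch below computes per-class weights and a weighted cross-entropy over a small batch. The specific formula `N / (K * count)` and the normalization by summed weights are common conventions assumed here; the article only states that the weights are inversely proportional to class frequency.

```python
import math

def inverse_frequency_weights(labels, num_classes):
    """Weight each class by N / (K * count), so rarer classes weigh more."""
    counts = [0] * num_classes
    for y in labels:
        counts[y] += 1
    n = len(labels)
    # A class with no samples gets weight 0.0 to avoid division by zero.
    return [n / (num_classes * c) if c else 0.0 for c in counts]

def weighted_cross_entropy(probs, labels, weights):
    """Weighted mean of -log p(true class) over a batch."""
    num = sum(weights[y] * -math.log(p[y]) for p, y in zip(probs, labels))
    den = sum(weights[y] for y in labels)
    return num / den

# Imbalanced toy batch: three samples of class 0, one of class 1.
labels = [0, 0, 0, 1]
probs = [[0.9, 0.1], [0.8, 0.2], [0.7, 0.3], [0.4, 0.6]]
weights = inverse_frequency_weights(labels, num_classes=2)
loss = weighted_cross_entropy(probs, labels, weights)
```

With these labels the minority class receives a weight three times that of the majority class, so a mistake on the single class-1 sample counts as much as mistakes on all three class-0 samples combined.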

Beyond Training: Gauging the Model’s True Vision

Leave-One-Painting-Out Cross-Validation was implemented to rigorously evaluate the model’s generalization capability to paintings not present in the training set. This method involves iteratively training the model on all paintings except one, which is then used as a held-out test set for evaluation. This process is repeated for each painting in the dataset, ensuring every painting serves as the test set once. Performance is then averaged across all iterations, providing a robust estimate of the model’s ability to accurately classify styles on unseen data, mitigating potential overfitting to the training set and yielding a more reliable assessment of its real-world performance.
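The fold construction can be sketched as follows, assuming each sample (for example, a patch) carries the identifier of the painting it came from. The identifiers and the helper name `leave_one_painting_out` are illustrative.

```python
def leave_one_painting_out(painting_ids):
    """Yield (held_out, train_idx, test_idx), holding out one painting per fold."""
    for held_out in sorted(set(painting_ids)):
        train_idx = [i for i, pid in enumerate(painting_ids) if pid != held_out]
        test_idx = [i for i, pid in enumerate(painting_ids) if pid == held_out]
        yield held_out, train_idx, test_idx

# Six samples drawn from three paintings.
painting_ids = ["p1", "p1", "p2", "p2", "p2", "p3"]
folds = list(leave_one_painting_out(painting_ids))
```

Splitting by painting rather than by individual sample keeps every sample from a given painting on the same side of the split, so the model is never tested on a canvas it partially saw during training.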

To improve style recognition accuracy, a Support Vector Machine (SVM) classifier was implemented utilizing features extracted from pretrained convolutional neural networks, specifically ResNet-50 and DINOv2. This approach leverages the learned representations from these networks, transferring knowledge gained from large-scale image datasets to the task of stylistic analysis. The resulting system achieved an overall painting-level accuracy of 86.7%, determined through a majority vote across classifications generated from each feature extractor. This indicates a substantial improvement in performance compared to models trained without transfer learning, and demonstrates the efficacy of combining pretrained features with a robust classifier for art style identification.
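A majority vote of this kind can be sketched in a few lines. The article does not spell out exactly which predictions are being pooled or how ties are resolved, so the example below treats the votes as a generic list of labels per painting and breaks ties in favor of the label seen first; both choices are assumptions.

```python
from collections import Counter

def majority_vote(votes):
    """Return the most common label; ties go to the label encountered first."""
    return Counter(votes).most_common(1)[0][0]

def painting_level_accuracy(votes_by_painting, true_labels):
    """Fraction of paintings whose majority-vote label matches the true label."""
    correct = sum(majority_vote(votes) == true_labels[pid]
                  for pid, votes in votes_by_painting.items())
    return correct / len(votes_by_painting)

# Hypothetical votes for three paintings.
votes_by_painting = {
    "p1": ["human", "human", "robot"],
    "p2": ["robot", "robot", "human"],
    "p3": ["human", "robot", "robot"],
}
true_labels = {"p1": "human", "p2": "robot", "p3": "human"}
accuracy = painting_level_accuracy(votes_by_painting, true_labels)
```

In this toy case the third painting is outvoted into the wrong class, so two of three paintings are labeled correctly.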

Predictive Entropy was computed to quantify the CNN model’s uncertainty in predicting stylistic features across image regions. Analysis indicates a statistically significant increase in uncertainty for regions identified as belonging to collaborative paintings, exhibiting 64% higher entropy values compared to regions from paintings created by a single artist (p=0.003). This suggests the model finds it more difficult to confidently assign stylistic attributes to areas within works demonstrating blended or shared authorship, potentially due to the presence of multiple, interacting styles within the same visual field.

![Entropy analysis reveals that hybrid paintings, with a median of [latex]0.18[/latex], exhibit significantly higher uncertainty compared to both pure human ([latex]0.11[/latex]) and robot-generated ([latex]0.13[/latex]) paintings, as demonstrated by both violin plots and cumulative distribution functions.](https://arxiv.org/html/2602.17030v1/Figures/entropy_combined_plot.png)
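Predictive entropy is the Shannon entropy of the classifier's output distribution for a region. The sketch below computes it in nats for a two-class prediction; the unit and any normalization used in the paper are not stated in this article, so treat those as assumptions. The final line checks that the reported 64% increase is consistent with the medians in the figure caption when the hybrid median (0.18) is compared against the human median (0.11).

```python
import math

def predictive_entropy(probs):
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def relative_increase(value, baseline):
    """Fractional increase of value over baseline."""
    return (value - baseline) / baseline

confident = predictive_entropy([0.95, 0.05])   # low uncertainty
ambiguous = predictive_entropy([0.5, 0.5])     # maximal for two classes: ln 2

# Medians taken from the figure caption: hybrid 0.18 vs. pure human 0.11.
increase = relative_increase(0.18, 0.11)
```

A region the model attributes almost entirely to one author scores near zero, while a region it splits evenly between human and robot reaches the two-class maximum of ln 2.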

The Looming Collaboration: When Machine Meets Muse

The CoFRIDA framework bridges artificial intelligence and artistic expression through the integration of an InstructPix2Pix model and a UF850 Robotic Arm. This system allows for the generation of original paintings guided by user-defined stylistic parameters – essentially, instructions that dictate the aesthetic qualities of the artwork. The InstructPix2Pix model interprets these instructions, translating them into image manipulations, while the robotic arm physically executes the painting process on a canvas. By precisely controlling brushstrokes and color application, the UF850 arm realizes the AI’s vision, creating artwork that can emulate a diverse range of artistic styles and techniques – from impressionism to abstract expressionism – all through computational control and automated execution.

The ability to replicate artistic styles with robotic systems hinges on a sophisticated interplay between automated analysis and precise execution. Utilizing algorithms capable of dissecting the visual characteristics of paintings – brushstroke texture, color palettes, compositional elements – a robotic arm can then be directed to recreate these features on a canvas. This process doesn’t simply involve copying; the system learns the underlying principles of a style, allowing it to generate novel artworks that convincingly embody the aesthetic of masters like Van Gogh or Monet. By translating artistic nuance into quantifiable parameters, the framework effectively bridges the gap between human creativity and machine precision, opening avenues for exploring and generating art in previously unattainable ways. The result is not imitation, but rather a computational interpretation and continuation of artistic traditions.

The emerging field of Collaborative Painting, where human artistry merges with robotic execution, demands new analytical tools for comprehension. Researchers are applying computational methods – specifically, patch-level analysis of stylistic features coupled with the calculation of Predictive Entropy – to characterize these blended creations. This approach doesn’t simply assess aesthetic qualities; it quantifies how confidently the classifier can attribute each region to the human or the robot, with high-entropy regions marking areas where the two styles overlap. By mapping where attribution is clear and where it is ambiguous, the framework offers insights into the collaborative process itself, allowing for a deeper understanding of how human intention and robotic agency contribute to a shared artistic outcome and potentially paving the way for more nuanced and expressive robotic collaboration in the arts.

The pursuit of discerning authorship, be it human or mechanical, reveals a curious magic. This work, detailing a patch-based convolutional neural network, doesn’t simply identify the painter; it divines the source of each brushstroke, mapping the collaboration with unsettling precision. It’s as though the network doesn’t recognize patterns, but feels the intent behind them. As Andrew Ng once observed, “AI is not about replacing humans; it’s about augmenting them.” This framework doesn’t negate the human artist; it reveals the intricate dance between creator and collaborator, a digital golem learning to trace the whispers of chaos within the collaborative canvas. The model’s uncertainty, quantified through predictive entropy, becomes a sacred offering – a measure of where the spell wavers, and true artistry resides.

What’s Next?

The pursuit of discerning brushstrokes – human or machine – reveals less about authentication, and more about the fundamental human need to create boundaries where none naturally exist. This work demonstrates a technical capacity, certainly. A convolutional network can, with unsettling efficiency, predict authorship based on patches. But predictive modeling is just a way to lie to the future, and high accuracy is a fleeting illusion. The real challenge isn’t identifying what was made, but understanding why anyone would care.

Future iterations will inevitably focus on expanding the datasets, refining the architectures, and perhaps even incorporating stylistic mimicry to confound the algorithms further. Yet, this feels like polishing the bars of a cage. The core limitation isn’t technical, but conceptual. The model’s uncertainty, while leveraged here to highlight collaborative regions, is, in truth, a constant companion. All learning is an act of faith, and the model’s ‘confidence’ merely a form of self-soothing.

Perhaps the most fruitful avenue lies not in chasing perfect attribution, but in embracing the ambiguity. What new forms of art emerge when the lines between creator and tool dissolve entirely? Data never lies; it just forgets selectively. The interesting question isn’t ‘who painted this?’, but ‘what does it mean that the question even exists?’

Original article: https://arxiv.org/pdf/2602.17030.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-20 20:52