Author: Denis Avetisyan

New research introduces a comprehensive dataset of human-computer web interactions designed to improve AI’s ability to predict when human assistance is required.

Researchers detail the creation of CowCorpus and demonstrate that fine-tuning large language models on this data significantly enhances intervention prediction for more effective human-agent collaboration.

Despite advances in autonomous web agents, effective collaboration still requires understanding when and why humans intervene during task execution. This paper, ‘Modeling Distinct Human Interaction in Web Agents’, introduces CowCorpus, a dataset of over 4,200 human-agent interactions used to identify four distinct patterns of collaboration, ranging from hands-off supervision to full user takeover. By fine-tuning large language models on this data, we demonstrate a significant improvement in predicting user intervention, which translates into up to a 26.5% increase in user-rated agent usefulness. Could a more nuanced understanding of human-agent dynamics unlock truly adaptive and collaborative AI systems for complex web-based tasks?

Predicting the Inevitable: Understanding Human-Agent Collaboration

Successful human-agent collaboration hinges on anticipating when a user will override the agent, a factor often overlooked in system design. Frequent interruptions to an agent’s task flow impede its efficiency and can also erode the user’s perception of its competence and reliability. The work argues that minimizing unnecessary interventions, by accurately predicting when a human is likely to step in, is paramount: an agent that runs smoothly without constant correction fosters trust and improves the collaborative experience. This proactive approach lets the system maintain momentum, complete tasks more effectively, and deliver a more valuable and satisfying outcome for the user.

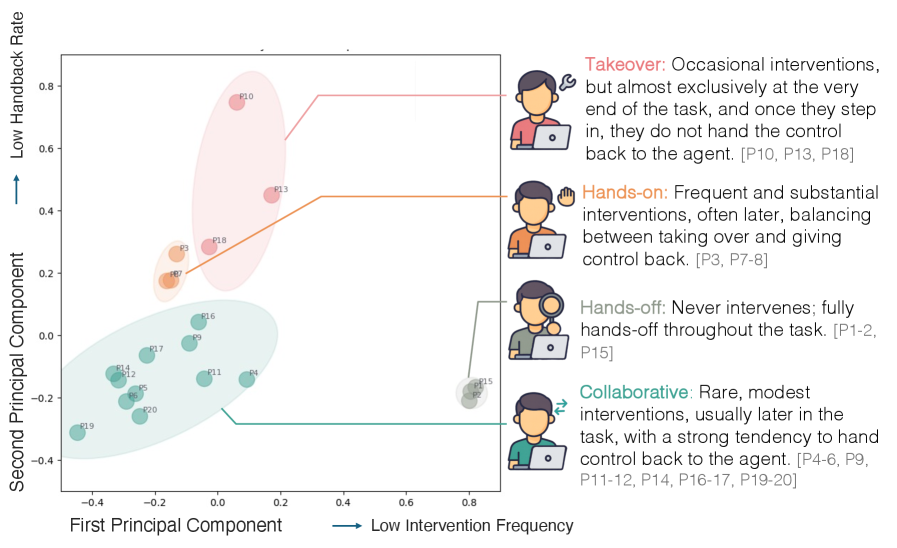

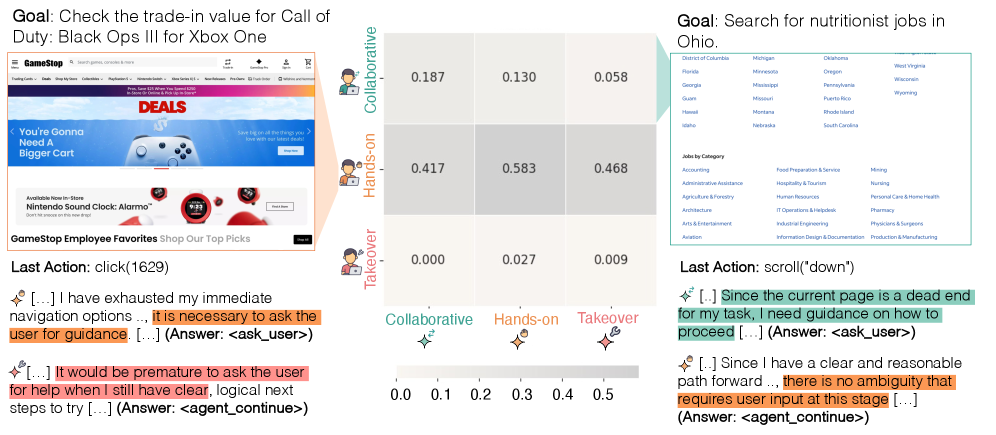

The dynamic between humans and AI agents is shaped by the chosen collaboration style, which ranges from a ‘hands-off’ approach, where the agent operates with minimal input, to a ‘hands-on’ approach, where the user directs it frequently. The analysis suggests that a predominantly hands-off style leads to fewer but more critical interventions, typically when the agent meets a genuinely ambiguous situation or makes an error that needs substantial correction. A hands-on style, by contrast, produces many smaller corrective interventions that provide continuous guidance and keep the agent from drifting far from the user’s intent. The difference is not merely quantitative: the nature of the interventions differs, affecting both the agent’s efficiency and the user’s cognitive load. Balancing the two according to the task and the user’s preference is therefore central to good performance and a smooth collaborative experience.
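To make the hands-off versus hands-on distinction concrete, the sketch below labels a single trajectory from the sequence of who acted at each step. The paper derives its four collaboration patterns from its own analysis; the rules and thresholds here (`classify_style`, the 0.15 human-step rate, the three-episode cutoff, and the "human holds control for at least half the steps at the end" takeover rule) are hypothetical illustrations, not the authors' method.

```python
from itertools import groupby
from typing import List


def intervention_episodes(actors: List[str]) -> List[int]:
    """Lengths of maximal runs of consecutive human-controlled steps."""
    return [len(list(run)) for actor, run in groupby(actors) if actor == "human"]


def classify_style(actors: List[str]) -> str:
    """Heuristic style label for one trajectory (thresholds are illustrative assumptions)."""
    n = len(actors)
    episodes = intervention_episodes(actors)
    if not episodes:
        return "hands-off"  # the user never stepped in (also covers an empty trajectory)
    # Takeover: the user ends the task in control and holds it for at least half the steps.
    if actors[-1] == "human" and episodes[-1] >= n / 2:
        return "takeover"
    human_rate = sum(episodes) / n
    if human_rate <= 0.15:
        return "hands-off"      # rare, isolated corrections
    if len(episodes) >= 3:
        return "hands-on"       # many short corrective interventions
    return "collaborative"      # fewer, longer stretches of shared work


print(classify_style(["agent", "human"] * 5))
```

Under these assumed rules, alternating agent and human steps come out as "hands-on", while a session where the user grabs control and finishes the task comes out as "takeover". A learned model would replace these hand-set thresholds with patterns fitted to the data.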

The efficacy of human-agent collaborative systems depends on anticipating when a user will step in and override the agent; doing so is not only about avoiding disruption but about building a more productive partnership. In this work, accurately predicting the moments that call for human input improved the user experience, with up to a 26.5% increase in user-rated usefulness of the web agent. A plausible explanation is a smoother workflow, lower cognitive load, and a greater sense of control for the user, all of which build trust and encourage continued use of the assistant. This motivates building intervention prediction directly into such systems, so that agents blend automation with human oversight and maximize both efficiency and user satisfaction.

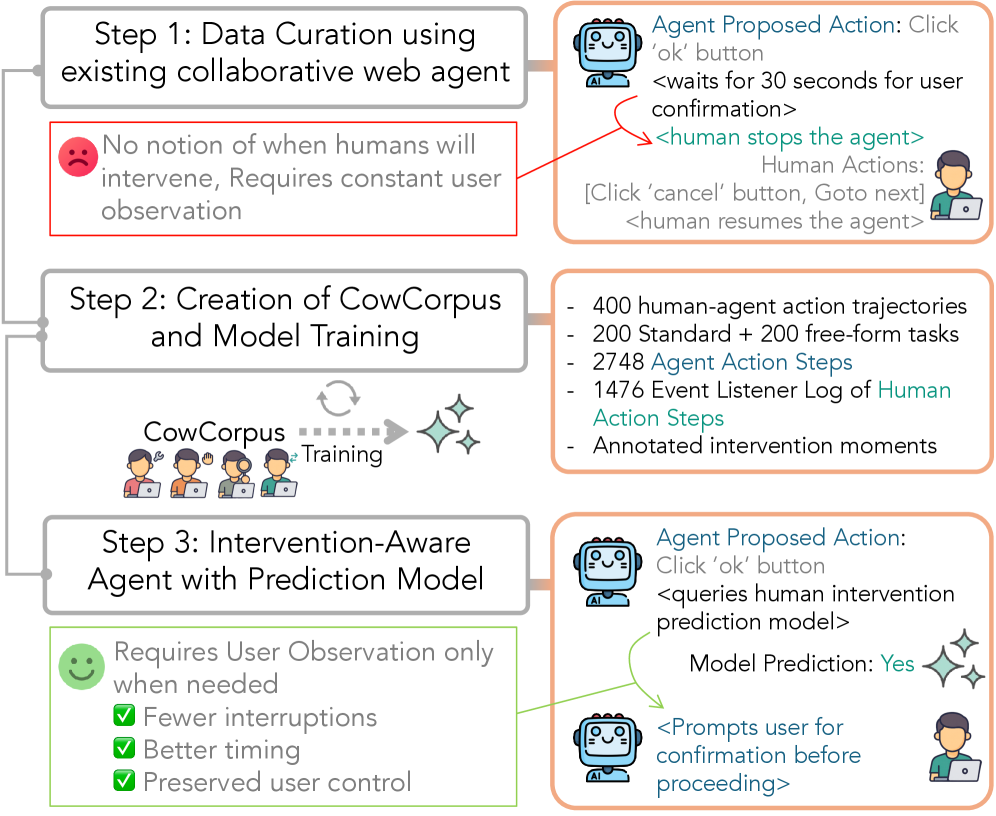

The CowCorpus Dataset: A Necessary Foundation

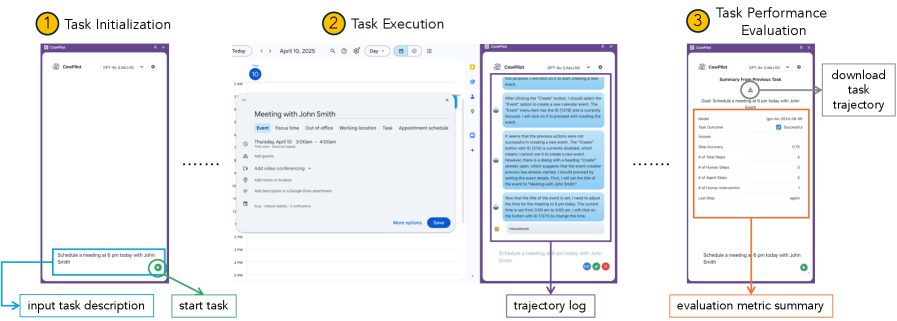

The CowCorpus dataset comprises 400 individual web navigation sessions recorded from real users interacting with the CowPilot browser extension. These trajectories detail user actions during web browsing, captured while the extension’s agent – powered by Large Language Models – was actively engaged. This dataset serves as a primary resource for studying human-agent collaboration, offering detailed logs of both user and agent behavior throughout each navigation session. The data includes complete action sequences, timestamps, and contextual information related to the websites visited, facilitating analysis of interaction patterns and intervention dynamics.
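One way to picture a logged session is as an ordered list of steps, each tagged with who acted. The sketch below is a hypothetical schema (the `Step` fields and the action syntax are assumptions, not CowCorpus's actual file format) plus a helper that finds where control passes from the agent to the user, which is the event an intervention predictor has to anticipate.

```python
from dataclasses import dataclass
from typing import List, Literal

Actor = Literal["agent", "human"]


@dataclass
class Step:
    """One logged step of a session (hypothetical schema, not CowCorpus's actual format)."""
    actor: Actor      # who performed the action
    action: str       # e.g. "click(12)"; the action syntax is illustrative
    timestamp: float  # seconds since the session started
    url: str          # page on which the action happened


def intervention_points(trajectory: List[Step]) -> List[int]:
    """Indices where control passes from the agent to the human."""
    return [
        i
        for i in range(1, len(trajectory))
        if trajectory[i].actor == "human" and trajectory[i - 1].actor == "agent"
    ]


session = [
    Step("agent", "click(3)", 0.0, "https://example.com"),
    Step("agent", "type(7, 'boots')", 1.2, "https://example.com"),
    Step("human", "click(9)", 4.8, "https://example.com/results"),
    Step("agent", "click(11)", 6.0, "https://example.com/results"),
]
print(intervention_points(session))  # → [2]
```

A step performed by the user at the very start of a session is not counted, since the agent had not yet acted and there was nothing to override.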

The CowPilot extension employs Large Language Models (LLMs) as the core intelligence for its agent functionality. This implementation allows the agent to generate contextually relevant responses and actions during web navigation, simulating a more natural and adaptive collaborative partner. Specifically, the LLM processes user actions and website content to determine appropriate assistance, moving beyond pre-defined scripts and enabling dynamic interaction. This contrasts with traditional rule-based agents and contributes to a more realistic human-agent collaborative experience, as evidenced by the dataset’s capture of nuanced intervention patterns.

The CowCorpus dataset differentiates itself by capturing more than explicit user actions; it also records implicit signals of user intent, such as cursor movements, scrolling behavior, and dwell time on specific page elements. This broader scope supports a more granular understanding of human intervention, going beyond a binary intervention/no-intervention label toward modeling the reasons behind user overrides. These cues make it possible to identify preemptive interventions (cases where a user steps in before the agent makes an error) and to study subtle shifts in behavior that signal dissatisfaction with the agent’s performance.
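Implicit signals such as dwell time have to be derived from raw event logs. As a minimal sketch, assuming a hypothetical log of `(timestamp, element_id)` "cursor entered element" events sorted by time (the dataset's real event format is not described here), each hover can be treated as lasting until the next event or the end of the session:

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def dwell_times(hover_events: List[Tuple[float, str]], session_end: float) -> Dict[str, float]:
    """Total seconds the cursor rested on each element.

    hover_events: (timestamp, element_id) pairs, one per 'cursor entered element'
    event, sorted by timestamp. Each hover lasts until the next event or session_end.
    """
    totals: Dict[str, float] = defaultdict(float)
    # Pair every event with its successor; a sentinel closes the final hover.
    followers = hover_events[1:] + [(session_end, "")]
    for (t, element), (t_next, _) in zip(hover_events, followers):
        totals[element] += t_next - t
    return dict(totals)


events = [(0.0, "search-box"), (2.0, "price-filter"), (2.5, "search-box")]
print(dwell_times(events, session_end=4.0))
```

Here the search box accumulates 3.5 seconds across two visits and the price filter 0.5 seconds; a long dwell on an element the agent is about to act on might be one cue that the user is preparing to step in.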

Analysis of CowCorpus reveals that users’ collaboration styles correlate with the frequency and timing of their interventions. Building on these patterns, we developed models that predict user interventions with an accuracy of 61.4-63.4%. This predictive capability comes from the dataset’s detailed logs of both agent and user actions, which expose the behavioral cues that precede a human override. The result shows the potential for agents to adapt proactively, minimizing unnecessary interventions and improving the collaborative experience.

Predicting the Inevitable: Multimodal Input is Key

The system utilizes a multimodal input approach, integrating visual screenshot data with structured information derived from the Accessibility Tree (AXTree). The AXTree provides a hierarchical representation of the webpage’s elements and their associated semantic roles, detailing interactive components and content organization. This structured data is combined with the pixel-based information from screenshots to create a more complete and nuanced representation of the user interface. This fusion of visual and semantic data allows the models to move beyond purely visual cues and incorporate an understanding of the webpage’s underlying structure when predicting user actions and determining optimal intervention timings.
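A common way to feed an accessibility tree to a language model is to flatten it into indented text lines and send it alongside the screenshot. The sketch below assumes a minimal hypothetical `AXNode` type and observation layout; real AXTrees carry many more properties, and the paper's exact serialization is not specified here.

```python
import base64
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class AXNode:
    """Minimal accessibility-tree node (hypothetical; real AXTrees carry more properties)."""
    node_id: int
    role: str
    name: str
    children: List["AXNode"] = field(default_factory=list)


def serialize_axtree(node: AXNode, depth: int = 0) -> List[str]:
    """Depth-first flattening into indented '[id] role "name"' lines."""
    lines = [f'{"  " * depth}[{node.node_id}] {node.role} "{node.name}"']
    for child in node.children:
        lines.extend(serialize_axtree(child, depth + 1))
    return lines


def build_observation(screenshot_png: bytes, root: AXNode) -> Dict[str, str]:
    """Bundle the pixel view and the structural view for one prediction step."""
    return {
        "screenshot_b64": base64.b64encode(screenshot_png).decode("ascii"),
        "axtree": "\n".join(serialize_axtree(root)),
    }


page = AXNode(1, "RootWebArea", "Shop", [
    AXNode(2, "textbox", "Search"),
    AXNode(3, "button", "Go"),
])
print(build_observation(b"\x89PNG", page)["axtree"])
```

The numeric ids give the model a stable way to refer to elements in its output, while the indentation preserves the parent-child structure that the screenshot alone does not make explicit.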

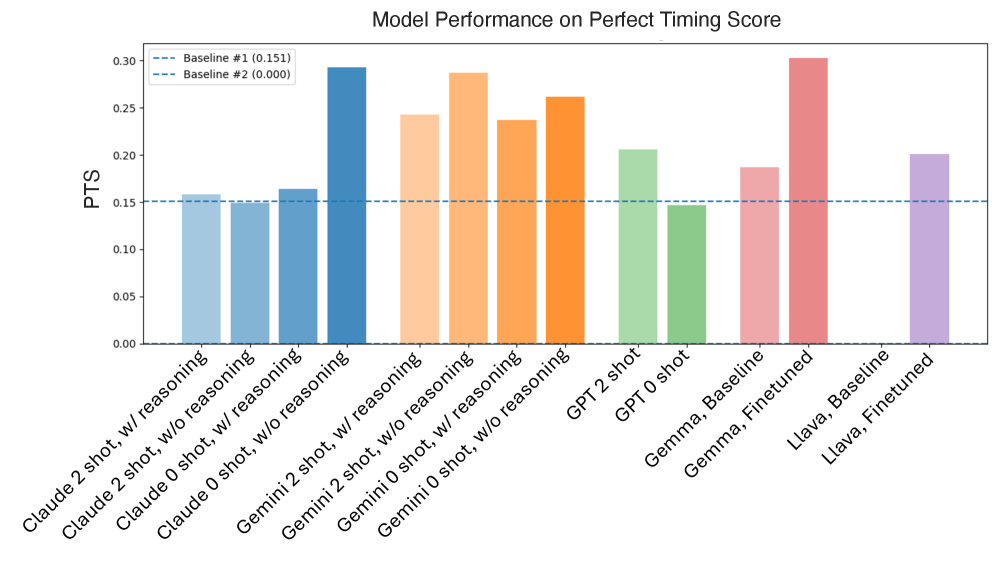

The core of our intervention prediction system is a comparison of several large language models (LLMs): Gemma 27B, GPT-4o, and Claude. Each model receives the same multimodal input (screenshots combined with structured Accessibility Tree data) and is asked to predict whether and when the user will intervene. Performance is measured by prediction accuracy, with the goal of identifying which model best exploits the combined input to time interventions correctly. This comparison shows how suitable each model is for practical deployment in collaborative web agents.

Many intervention prediction models focus solely on what action to take and neglect when to take it. This limits overall system efficiency and can disrupt the user unnecessarily. Our research prioritizes the timing of interventions, aiming to address user needs proactively without interrupting ongoing tasks. Predicting the right moment for an intervention aims to minimize delays, reduce the user’s cognitive load, and make the agent’s assistance more effective. This focus on temporal accuracy marks a shift from plain action prediction toward a more user-centric approach to intervention.
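The "when, not just what" idea can be sketched as an agent loop that consults an intervention predictor before executing each proposed action and hands control to the user when the predicted probability of an override is high. The `policy` and `predict` interfaces, the 0.5 threshold, and the step budget below are all hypothetical; the paper does not prescribe this loop.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical interfaces (not from the paper):
#   policy(history) -> next action string, or None when the task is complete
#   predict(history, action) -> estimated probability that the user would intervene
Policy = Callable[[List[str]], Optional[str]]
InterventionPredictor = Callable[[List[str], str], float]


def run_with_handoff(policy: Policy, predict: InterventionPredictor,
                     threshold: float = 0.5, max_steps: int = 20) -> Tuple[List[str], str]:
    """Run the agent, pausing for the user before a step the predictor flags."""
    history: List[str] = []
    for _ in range(max_steps):
        action = policy(history)
        if action is None:
            return history, "done"
        if predict(history, action) >= threshold:
            # Hand control to the user instead of executing a likely-overridden step.
            return history, f"paused before: {action}"
        history.append(action)  # stand-in for executing the action in the browser
    return history, "step budget exhausted"


PLAN = ["click search", "type 'boots'", "click buy"]


def scripted_policy(history: List[str]) -> Optional[str]:
    """Toy policy that replays a fixed plan."""
    return PLAN[len(history)] if len(history) < len(PLAN) else None


def cautious_predictor(history: List[str], action: str) -> float:
    """Toy predictor: users are assumed likely to step in before purchases."""
    return 0.9 if "buy" in action else 0.1


print(run_with_handoff(scripted_policy, cautious_predictor))
```

With these toy components the agent executes the search and typing steps, then pauses just before "click buy". Pausing before the flagged step, rather than after the user has already overridden it, is what distinguishes timing-aware prediction from reacting to interruptions.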

Utilizing the Accessibility Tree (AXTree) enables a more detailed analysis of web page structure and semantics, improving the accuracy of intervention predictions. The AXTree provides programmatic access to elements and their relationships, allowing models to discern the functional purpose of each component. When combined with historical human action data, this approach yields a step accuracy of 81.36% in predicting appropriate interventions, demonstrating the effectiveness of leveraging semantic information from the AXTree to enhance predictive capabilities.

Beyond Accuracy: Measuring Real-World Impact

A rigorous evaluation of intervention prediction models necessitates a multifaceted approach, and this study utilized three key metrics to comprehensively assess performance. Step Accuracy quantified the model’s ability to correctly identify necessary assistance at each stage of a task, while the F1 score provided a balanced measure of precision and recall in predicting interventions. Critically, the Perfect Timing Score (PTS) gauged not only the correctness of the prediction but also its temporal appropriateness, rewarding interventions delivered precisely when needed. By considering these metrics in concert, researchers were able to move beyond simple accuracy rates and gain a nuanced understanding of how effectively models anticipate and respond to user needs, ultimately informing the development of more responsive and helpful collaborative agents.
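Step accuracy and F1 are standard and can be computed from per-step binary labels ("did the user intervene at this step?"). The exact PTS formula is not given in this summary, so `perfect_timing_score` below uses an assumed definition: a trajectory scores 1 when the first predicted intervention falls on exactly the step where the user first intervened (or when neither the labels nor the predictions contain an intervention), and 0 otherwise. Treat it as an illustration of a timing-sensitive metric, not the paper's formula.

```python
from typing import List, Optional, Sequence, Tuple


def step_accuracy(y_true: Sequence[bool], y_pred: Sequence[bool]) -> float:
    """Fraction of steps whose intervene / don't-intervene label is predicted correctly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_intervention(y_true: Sequence[bool], y_pred: Sequence[bool]) -> float:
    """F1 = 2PR / (P + R), treating 'user intervenes' as the positive class."""
    tp = sum(t and p for t, p in zip(y_true, y_pred))
    fp = sum(p and not t for t, p in zip(y_true, y_pred))
    fn = sum(t and not p for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0  # also avoids dividing by zero when nothing is predicted
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def first_intervention(labels: Sequence[bool]) -> Optional[int]:
    """Index of the first positive step, or None if there is none."""
    return next((i for i, flag in enumerate(labels) if flag), None)


def perfect_timing_score(trajectories: List[Tuple[Sequence[bool], Sequence[bool]]]) -> float:
    """ASSUMED definition: share of (y_true, y_pred) trajectories whose first predicted
    intervention lands on the exact step of the first true one."""
    hits = sum(first_intervention(t) == first_intervention(p) for t, p in trajectories)
    return hits / len(trajectories)


y_true = [False, False, True, False]
y_pred = [False, True, True, False]
print(step_accuracy(y_true, y_pred))              # 0.75
print(round(f1_intervention(y_true, y_pred), 3))  # 0.667
print(perfect_timing_score([(y_true, y_pred), ([False, True], [False, True])]))  # 0.5
```

The example shows why a timing metric adds information: flagging step 1 one step early still earns 75% step accuracy and a nonzero F1, but under this assumed PTS definition the first trajectory scores 0 because the predicted intervention arrives at the wrong moment.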

The experiments show a substantial performance increase when intervention prediction models draw on more than visual input. Models that analyze screenshots alone reach a baseline level of accuracy, while those given multimodal input, combining screenshots with the Accessibility Tree and the history of prior actions, predict interventions markedly better. This suggests that a holistic view of the user’s context, rather than visual observation alone, is crucial for anticipating when intervention is needed: the combined streams let the model pick up patterns and subtle cues that a purely visual model would miss, leading to more timely and effective assistance.

Analysis shows that users do not collaborate with the agent in a uniform way. Distinct styles, such as the assertive “Takeover” style, where the user quickly assumes control from the agent, and the “Collaborative” style, where user and agent share the work, produce predictable intervention patterns. The study shows that machine learning models can discern these differences and anticipate when the user is likely to intervene, given the observed collaboration dynamic. This enables a shift from generic assistance to personalized support, tailoring the timing and nature of the agent’s behavior to the prevailing collaboration style. In effect, the models learn how a user collaborates, not merely that they are collaborating, opening avenues for more nuanced human-agent interaction.

Agents that adapt to user preferences and collaboration styles open the door to more personalized interaction. A fine-tuned Gemma 27B model achieved a Perfect Timing Score (PTS) of 0.303, slightly higher than the 0.293 reached by Claude 4 Sonnet under the same conditions. Being able to anticipate and respond to individual collaboration styles, whether a user prefers a decisive takeover or a more balanced partnership, lets the agent provide timely and relevant assistance and improves the human-agent interaction as a whole.

The pursuit of seamless human-agent collaboration, as detailed in this work with CowCorpus, inevitably highlights the chasm between theoretical elegance and production realities. Models trained on the dataset aim to predict human intervention, a tacit acknowledgement that even the most advanced large language models aren’t infallible. Arthur C. Clarke observed that “any sufficiently advanced technology is indistinguishable from magic”; the unwritten corollary is that it stays magic only until it breaks. The creation of CowCorpus, while valuable, is merely one step in a perpetual cycle of refinement. It addresses current limitations, but the next iteration of ‘breakage’ is already lurking, waiting to expose new forms of tech debt within the collaborative framework. The very act of modeling intervention is, in a way, preparing for failure, and that, perhaps, is the most honest outcome of all.

What’s Next?

The creation of CowCorpus, and the demonstrable gains from fine-tuning on it, feels…predictable. It solved a problem nobody quite articulated, which is always a red flag. The immediate future will involve increasingly baroque datasets, each attempting to capture a slightly more nuanced form of human exasperation with automation. They’ll call it ‘affective computing’ and raise funding. The real question isn’t whether models can predict intervention, but whether they can learn to be subtly, proactively unhelpful enough to avoid it. After all, a perfectly obedient agent is a quickly unemployed one.

This work highlights a persistent truth: what begins as a clever intervention model inevitably devolves into a complex dependency. This elegant prediction scheme will, within a year, be a fragile, undocumented mess, held together by duct tape and the fading memories of the original authors. It used to be a simple bash script, undoubtedly. The current focus on LLMs is a distraction; the core challenge remains the same: humans are messy, inconsistent creatures, and any system attempting to model that mess will inevitably accumulate technical – and emotional – debt.

Future research will undoubtedly explore the generalizability of these findings. Will CowCorpus translate to other domains? Perhaps. But the underlying principle remains: the most valuable data isn’t about successful collaboration, it’s about the precise moments when things fall apart. Because that’s where the truly interesting problems – and the real opportunities for monetization – lie.

Original article: https://arxiv.org/pdf/2602.17588.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-20 12:11