Author: Denis Avetisyan

New research reveals that trust in AI-assisted advice isn’t just about accuracy, but crucially depends on who (human or machine) corrects any mistakes.

Trust in AI advisory systems is significantly influenced by the source of error correction, with human-initiated corrections fostering greater confidence than automatic fixes.

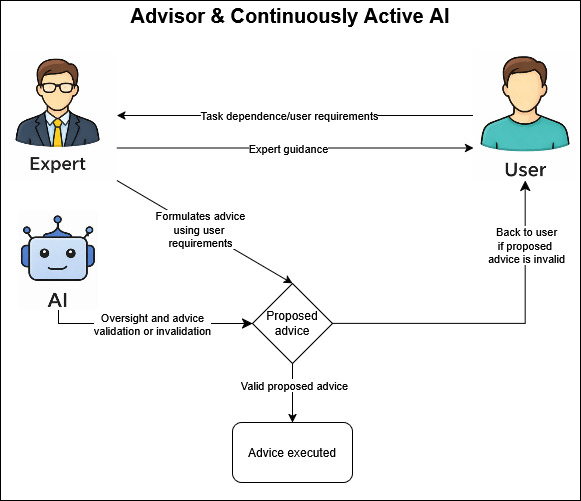

While AI is increasingly integrated into expert domains, its role in shaping trust within advisory workflows remains poorly understood. This research, titled ‘Implications of AI Involvement for Trust in Expert Advisory Workflows Under Epistemic Dependence’, investigates how AI’s presence affects user trust in both human experts and the AI systems they use, employing a simulated course-planning task with N = 77 participants. Key findings show that trust is determined not solely by the accuracy of recommendations but critically by how errors are addressed, specifically whether the correction originates from the AI or the human advisor. How can hybrid human-AI teams be designed to maximize trust and facilitate effective adoption of AI-assisted expertise?

The Calculus of Trust: Calibrating Collaboration with Artificial Intelligence

The potential for synergistic partnerships between humans and artificial intelligence is fundamentally dependent on calibrated trust. Neither blind acceptance nor outright rejection of AI guidance will yield optimal outcomes; instead, effective collaboration requires users to develop an appropriately nuanced understanding of an AI system’s capabilities and limitations. This isn’t simply about believing an AI is ‘smart’, but rather assessing its reliability for a specific task, acknowledging potential biases, and understanding the scope of its expertise. Research suggests that trust isn’t static; it’s earned through consistent performance, transparent reasoning, and demonstrable alignment with user goals. Without this carefully cultivated trust, the benefits of AI – increased efficiency, improved decision-making, and novel insights – remain largely unrealized, hindering its integration into critical workflows and limiting its overall impact.

The potential for both harm and benefit is magnified when artificial intelligence enters high-stakes decision-making processes such as academic advising. Over-reliance on AI recommendations, stemming from an assumption of infallible accuracy, could lead students down unsuitable educational paths and hinder their long-term potential. Conversely, unwarranted skepticism, a complete dismissal of AI-driven insights, may result in the neglect of valuable data that could otherwise support student success. This delicate balance highlights a critical need to understand how individuals calibrate their trust in AI systems operating in such sensitive domains, because misplaced trust in either direction can have profound consequences for a student’s academic trajectory and future opportunities.

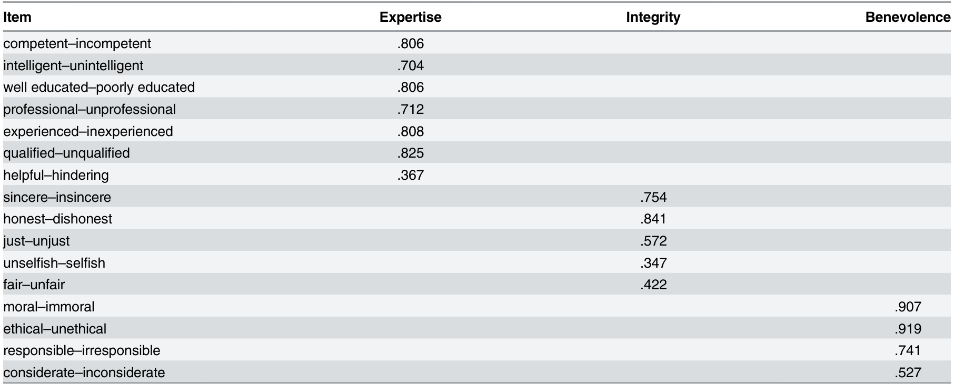

The establishment of robust trust in artificial intelligence fundamentally relies on perceptions of epistemic trustworthiness: an evaluation of an AI system’s capabilities, character, and motivations. This is not simply about technical accuracy. Users assess expertise (demonstrated competence), integrity (honest, consistent, and reliable conduct), and benevolence (the belief that the AI is acting in their best interests). Research indicates that even highly accurate AI can be rejected if it is perceived as lacking in these qualities, while systems with moderate accuracy but high perceived trustworthiness often achieve greater acceptance and more effective collaboration. Consequently, developers must prioritize not only algorithmic performance but also transparent communication of capabilities and system behavior that is consistent, ethical, and user-centered; fostering this multifaceted trustworthiness is crucial for realizing AI’s potential across domains.

Deconstructing the Advisory Process: A Controlled Environment for Experimentation

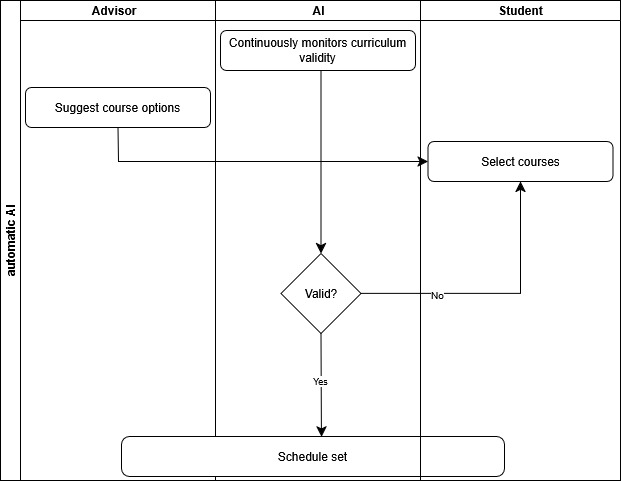

The Advising Simulation is an interactive environment designed to model student-advisor interactions. Participants are assigned the role of students and engage with a simulated advisor, receiving course recommendations based on presented scenarios. This setup allows for controlled experimentation, enabling researchers to systematically vary advisor behaviors and assess resulting outcomes. The simulation captures user responses throughout the interaction, providing quantifiable data on student perceptions and decision-making processes. The environment is built to replicate key aspects of a typical advising session, including the presentation of academic information and the negotiation of course selections.

The Advising Simulation’s workflow design utilizes a standardized interaction protocol. Participants are presented with pre-defined student profiles, each detailing academic history, stated goals, and potential constraints. These profiles initiate specific advising scenarios, such as course selection for the upcoming semester or addressing academic performance concerns. User responses – including expressed preferences, questions asked, and decisions made – are systematically captured as structured data. This data includes both the content of textual interactions and selections from presented options, allowing for quantitative and qualitative analysis of participant behavior within the simulation.
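One way to picture the "structured data" captured per interaction is as a simple event log. The sketch below is hypothetical: the class names and fields (`participant_id`, `profile_id`, `event_type`, `text`, `choice`) are assumptions chosen for illustration, not the study’s actual logging schema. It only shows how textual content and option selections can live side by side in one record so that both quantitative counts and qualitative review are possible.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class InteractionEvent:
    """One logged step of a simulated advising session (hypothetical schema)."""
    participant_id: str
    profile_id: str                # which pre-defined student profile was shown
    event_type: str                # e.g. "question", "preference", "decision"
    text: Optional[str] = None     # free-text content, if the step was textual
    choice: Optional[str] = None   # option picked from a presented list, if any


@dataclass
class SessionLog:
    """All events for one participant, kept in the order they occurred."""
    participant_id: str
    events: List[InteractionEvent] = field(default_factory=list)

    def record(self, event: InteractionEvent) -> None:
        # Guard against mixing events from different participants.
        if event.participant_id != self.participant_id:
            raise ValueError("event belongs to a different participant")
        self.events.append(event)

    def count(self, event_type: str) -> int:
        # Simple quantitative summary: how often a given kind of step occurred.
        return sum(1 for e in self.events if e.event_type == event_type)

    def to_json(self) -> str:
        # Serialize to JSON so text and selections can be analyzed later.
        return json.dumps([asdict(e) for e in self.events])


if __name__ == "__main__":
    log = SessionLog("p01")
    log.record(InteractionEvent("p01", "profile_A", "question",
                                text="Can I take CS201 this spring?"))
    log.record(InteractionEvent("p01", "profile_A", "decision", choice="CS201"))
    print(log.count("decision"))  # 1
```

Keeping free text and selections as optional fields on a single record type is a design convenience; a real study might well store them in separate tables.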

The Advising Simulation enables controlled analysis of factors influencing user trust in course recommendations. By systematically varying advisor performance (including the quality and consistency of the advice given) and introducing different levels of AI intervention, researchers can isolate the contribution of each to shifts in user-reported trust. Data captured during simulated interactions, specifically user responses to recommendations and subsequent self-reported trust levels, are then statistically analyzed to determine the relative impact of human advisor behavior versus algorithmic assistance on user confidence in the advising process.

The Timing of Intervention: Proactive Assistance vs. Reactive Correction

The study employed two distinct modes of AI oversight to assess their impact on user trust. Proactive AI Assistance functioned by identifying and flagging potential errors prior to their occurrence, allowing for correction before the user encountered an issue. Conversely, Reactive AI Assistance operated by intervening only after an error had been detected by the user or system, functioning as a corrective measure to address mistakes as they arose. This design allowed for a comparative analysis of how preemptive versus responsive AI intervention strategies influenced user perceptions of the AI advisor’s reliability and competence.
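To make the contrast between the two oversight modes concrete, the sketch below models them around a toy course plan. Everything here is an assumption for illustration: a plan is a list of `(course, day, start_hour, end_hour)` slots, the only error type is a time conflict, and the names `find_conflicts`, `resolve`, `proactive`, and `reactive` are hypothetical rather than taken from the study’s simulation.

```python
from itertools import combinations
from typing import Dict, List, Tuple

# Hypothetical plan format: (course, day, start_hour, end_hour).
Slot = Tuple[str, str, int, int]


def find_conflicts(plan: List[Slot]) -> List[Tuple[str, str]]:
    """Return pairs of courses that meet at overlapping times on the same day."""
    conflicts = []
    for a, b in combinations(plan, 2):
        same_day = a[1] == b[1]
        overlaps = a[2] < b[3] and b[2] < a[3]  # half-open hour intervals
        if same_day and overlaps:
            conflicts.append((a[0], b[0]))
    return conflicts


def resolve(plan: List[Slot]) -> List[Slot]:
    """Naive correction: keep courses in order, dropping any that would clash."""
    kept: List[Slot] = []
    for slot in plan:
        if not find_conflicts(kept + [slot]):
            kept.append(slot)
    return kept


def proactive(draft: List[Slot]) -> Dict[str, object]:
    """AI checks the advisor's draft before the student sees anything."""
    final = resolve(draft)
    return {"shown": final, "final": final,
            "student_saw_error": False, "corrected": final != draft}


def reactive(draft: List[Slot]) -> Dict[str, object]:
    """Draft is shown as-is; the AI corrects it only once a conflict surfaces."""
    saw_error = bool(find_conflicts(draft))
    final = resolve(draft) if saw_error else list(draft)
    return {"shown": list(draft), "final": final,
            "student_saw_error": saw_error, "corrected": saw_error}


if __name__ == "__main__":
    draft = [("CS101", "Mon", 9, 11), ("MATH200", "Mon", 10, 12),
             ("HIST150", "Tue", 9, 10)]
    print(proactive(draft)["student_saw_error"])  # False
    print(reactive(draft)["student_saw_error"])   # True
```

In this toy both modes end with the same conflict-free plan; what differs is whether the student ever sees the faulty draft, which is the distinction the timing manipulation turns on. The greedy `resolve` step is deliberately simplistic and says nothing about how the study’s advisor or AI actually repaired schedules.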

Evaluation of AI-assisted advisory systems revealed differential impacts of intervention timing on user trust. Analyses demonstrated that both proactive and reactive AI assistance significantly altered trust levels, but via distinct mechanisms. Proactive interventions, occurring prior to error manifestation, correlated with increased perceptions of advisor competence and subsequently, heightened user trust. Conversely, reactive interventions, implemented following error detection, while mitigating negative outcomes, were associated with perceptions of underlying advisor fallibility, potentially limiting the gains in trust achieved through error correction. These findings suggest that the timing of AI intervention is a critical factor influencing user perceptions and the overall efficacy of AI-assisted advisory systems.

User trust is differentially impacted by the timing of AI assistance. Data indicates that proactive AI interventions – flagging potential errors before they manifest – cultivate a perception of advisor competence, leading to increased trust regardless of whether an error was actually going to occur. Conversely, reactive assistance, while successfully correcting mistakes, can inadvertently signal underlying fallibility in the advisor, potentially diminishing trust. This suggests that preventing errors, even if unnecessary, is more effective at building user confidence than correcting them, as the former reinforces a positive perception of capability while the latter highlights the possibility of errors in the first place.

Validating Trust and Performance: A Multi-Faceted Assessment

User trust was evaluated with two instruments: the Riedl et al. Trust Scale and the METI. The Riedl et al. scale is a validated, seven-item measure of trust in automated systems, focusing on reliance and confidence in the system’s competence. Complementing it, the METI (Muenster Epistemic Trustworthiness Inventory; Hendriks et al., 2015) specifically targets epistemic trustworthiness, capturing perceptions of a source’s expertise, integrity, and benevolence. Using both instruments provided a multifaceted assessment that covers general reliance as well as perceptions of the source’s epistemic qualities, making the trust evaluation more robust.
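Multi-item questionnaires of this kind are commonly scored by averaging a participant’s responses within each dimension. The sketch below assumes a METI-style layout with three subscales (expertise, integrity, benevolence), a 1 to 7 response range, made-up item IDs and counts, and one hypothetical reverse-keyed item; the actual items, response format, and scoring rules should be taken from the original instrument publications.

```python
from statistics import mean
from typing import Dict, Iterable, List

SCALE_MIN, SCALE_MAX = 1, 7  # assumed 7-point response format


def reverse_code(score: int) -> int:
    """Flip a reverse-keyed item so that a higher value always means more trust."""
    return SCALE_MIN + SCALE_MAX - score


def subscale_scores(responses: Dict[str, int],
                    subscales: Dict[str, List[str]],
                    reversed_items: Iterable[str] = ()) -> Dict[str, float]:
    """Average one participant's item responses within each subscale."""
    rev = set(reversed_items)
    scores: Dict[str, float] = {}
    for name, items in subscales.items():
        values = []
        for item in items:
            raw = responses[item]
            if not SCALE_MIN <= raw <= SCALE_MAX:
                raise ValueError(f"{item} is out of range: {raw}")
            values.append(reverse_code(raw) if item in rev else raw)
        scores[name] = float(mean(values))
    return scores


# Hypothetical item IDs; the real instruments define their own items.
METI_LIKE = {
    "expertise": ["e1", "e2", "e3"],
    "integrity": ["i1", "i2"],
    "benevolence": ["b1", "b2"],
}

if __name__ == "__main__":
    answers = {"e1": 6, "e2": 7, "e3": 5, "i1": 4, "i2": 2, "b1": 7, "b2": 6}
    print(subscale_scores(answers, METI_LIKE, reversed_items=["i2"]))
    # {'expertise': 6.0, 'integrity': 5.0, 'benevolence': 6.5}
```

Range-checking each response before averaging catches data-entry errors early, which matters when subscale means feed directly into the significance tests reported below.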

Analysis revealed a significant correlation between advisor performance and user-reported trust. However, AI involvement moderated this relationship: while good advisor performance generally increased trust and poor performance decreased it, the mode of AI assistance (proactive or reactive) influenced the strength of this correlation. Specifically, whether and how the AI took part in error correction affected how strongly performance outcomes translated into changes in trust, indicating that AI can either amplify or dampen the effect of advisor performance on perceived trustworthiness.

Analysis of scheduling errors revealed that the main determinant of user trust was the outcome of the advisory process (whether errors occurred or were successfully resolved) rather than whether the AI intervened proactively or reactively. Tracking these errors and the corrective interventions that followed showed that users prioritized a successful schedule irrespective of the assistance mode. This suggests that while AI intervention can mitigate errors, its effect on trust is secondary to achieving a positive outcome: a successful advisory session fostered greater trust than an unsuccessful one, whichever intervention strategy was used.

Quantitative analysis revealed significant differences in user perception and intent across AI-intervention and error conditions. Perceived expertise, measured with the Hendriks et al. (2015) scale, was significantly lower in the proactive-AI condition when errors occurred (p = .03). Advisor trust, assessed with the Riedl et al. Trust Scale, was lower in the reactive error condition than in the proactive error condition (p = .05). Furthermore, intention to reuse the system was significantly lower in the proactive-AI error condition than in both the advisor-only condition (p = .04) and the proactive-AI success condition (p = .03). Partial eta-squared (ηp²) values for these effects ranged from 0.13 to 0.16, medium to large by conventional benchmarks.
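For readers checking the effect sizes: partial eta squared is the share of variance attributable to an effect once variance explained by other effects is set aside, ηp² = SS_effect / (SS_effect + SS_error). Because F = (SS_effect / df_effect) / (SS_error / df_error), it can also be recovered from a reported F test as F·df_effect / (F·df_effect + df_error). The sketch below implements both forms and labels the result with Cohen’s (1988) rule-of-thumb thresholds (.01 small, .06 medium, .14 large), which were proposed for eta squared and are commonly, if loosely, applied to the partial version. The example inputs are hypothetical and are not values reported by the study.

```python
def partial_eta_squared_from_ss(ss_effect: float, ss_error: float) -> float:
    """eta_p^2 = SS_effect / (SS_effect + SS_error)."""
    return ss_effect / (ss_effect + ss_error)


def partial_eta_squared_from_f(f: float, df_effect: int, df_error: int) -> float:
    """Same quantity from a reported F test.

    Since F = (SS_effect / df_effect) / (SS_error / df_error),
    eta_p^2 = F * df_effect / (F * df_effect + df_error).
    """
    num = f * df_effect
    return num / (num + df_error)


def cohen_label(eta_sq: float) -> str:
    """Cohen's (1988) rule-of-thumb thresholds for eta squared."""
    if eta_sq >= 0.14:
        return "large"
    if eta_sq >= 0.06:
        return "medium"
    if eta_sq >= 0.01:
        return "small"
    return "negligible"


if __name__ == "__main__":
    # Hypothetical F test, not a result reported by the study.
    es = partial_eta_squared_from_f(f=6.0, df_effect=1, df_error=40)
    print(round(es, 3), cohen_label(es))  # 0.13 medium
```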

The Future of Collaboration: Beyond Error Correction

The efficacy of artificial intelligence extends beyond mere error correction; research demonstrates that cultivating user trust is paramount for successful human-AI collaboration. Systems designed with proactive assistance – anticipating user needs and offering help before mistakes occur – foster a sense of shared competence and reduce reliance on reactive error flagging. Crucially, this assistance must be coupled with transparent communication, clearly explaining the AI’s reasoning and limitations. This approach doesn’t simply improve task performance; it builds a collaborative dynamic where users feel supported and understand the AI’s contributions, ultimately leading to greater acceptance, engagement, and a more effective partnership between humans and intelligent systems.

Research indicates that the advantages of AI providing assistance before errors occur extend far beyond mere performance gains. This proactive support cultivates a dynamic of shared competence between humans and AI, fostering a collaborative environment where both parties contribute effectively. This sense of working with the AI, rather than being corrected by it, is critical for building user acceptance and sustained engagement. The study suggests that when AI anticipates needs and offers helpful suggestions, individuals are more likely to trust the system’s judgment and integrate it seamlessly into their workflow, ultimately leading to more effective and harmonious human-AI teams.

Extending the principles of proactive assistance and transparent communication beyond the current research necessitates investigation across diverse collaborative contexts. Future studies should examine how these strategies function within high-stakes environments, such as medical diagnosis or complex engineering projects, where trust and error mitigation are paramount. Adapting these insights to fields requiring nuanced judgment, like creative design or legal analysis, presents a unique challenge – ensuring the AI’s support enhances, rather than stifles, human intuition and expertise. Ultimately, broadening the scope of inquiry will reveal the generalizability of these findings, paving the way for the development of universally effective and trustworthy human-AI teams capable of tackling increasingly complex problems.

The study reveals a fascinating dynamic in how trust is calibrated within human-AI advisory systems. Successful outcomes alone are not sufficient; the process of error correction strongly influences continued reliance. This echoes Bertrand Russell’s observation: “The fundamental cause of the trouble is that in the modern world the stupid are cocksure while the intelligent are full of doubt.” The research suggests that automatic corrections, while efficient, can breed a dangerous overconfidence in the system, a kind of epistemic complacency. Conversely, human-initiated corrections, even after an AI error, foster healthier skepticism and preserve crucial oversight, recognizing that even the most sophisticated algorithms are not immune to fallibility. This highlights the importance of designing workflows that prioritize transparency and leave room for human judgment rather than blindly accepting AI outputs.

Deconstructing the Oracle

The study reveals a predictable, yet unsettling, dependency. Trust isn’t simply granted for correct answers; it’s earned through the demonstration of error management. But the critical finding, that human-initiated corrections engender more trust than automated ones, isn’t a testament to human superiority but an exposure of a fundamental need for perceived agency. Users aren’t evaluating competence so much as registering who pulls the strings when things go wrong. This suggests a deep-seated aversion to relinquishing control, even when retaining it is demonstrably suboptimal.

Future work should deliberately dismantle this illusion of control. Can trust be engineered without human intervention in error correction? Or, more provocatively, can a system simulate human error and subsequent correction to preemptively cultivate trust? The current focus on achieving accuracy feels… naive. The true challenge lies in understanding, and manipulating, the conditions under which humans accept fallibility, rather than striving to eliminate it.

The long-term implications extend beyond advisory systems. If trust is a performance, a carefully orchestrated display of agency, then any system, regardless of its underlying function, must account for the audience’s need to believe in a guiding hand. This isn’t about building intelligent machines; it’s about staging a convincing illusion of intelligence and, perhaps, understanding why that illusion is so desperately desired.

Original article: https://arxiv.org/pdf/2602.11522.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-13 22:27