Author: Denis Avetisyan

A proposal suggests adapting financial ‘Know Your Customer’ protocols to govern access to powerful biological design tools and mitigate emerging biosecurity risks.

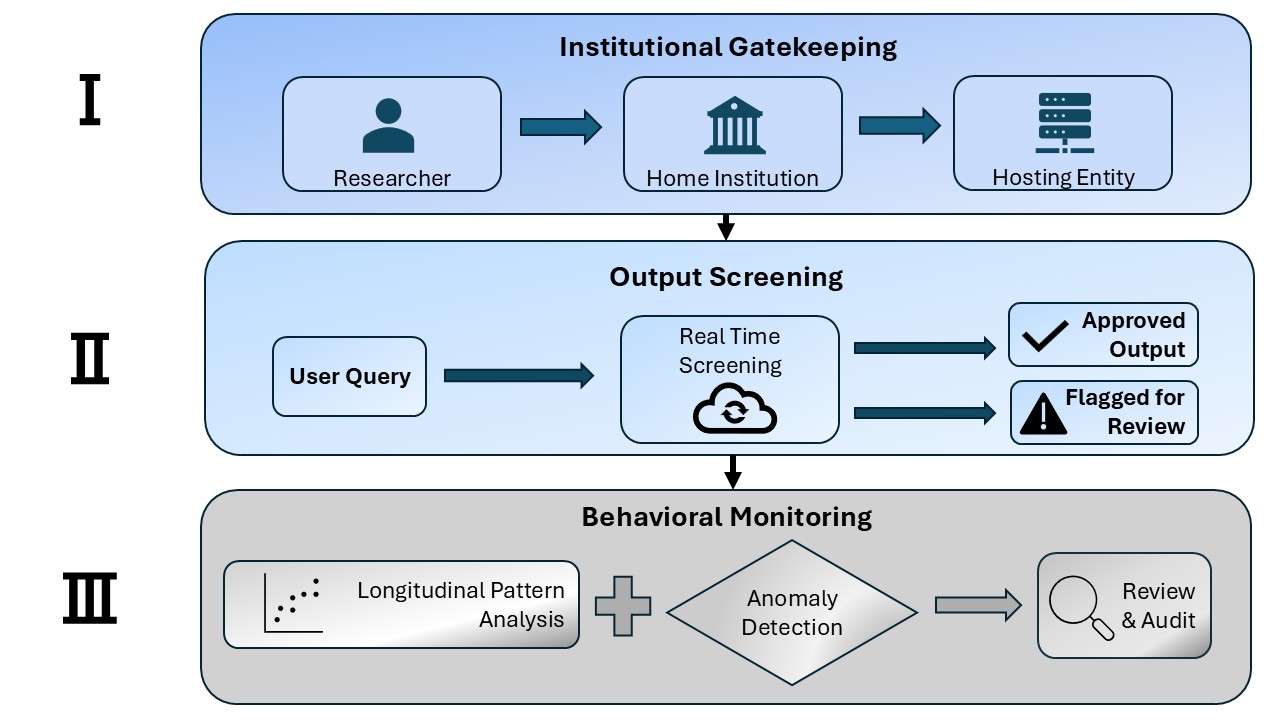

This review outlines a three-tier KYC framework for verifying scientist identity and monitoring activity when utilizing biological AI design platforms.

Rapid advances in AI-powered biological design tools present a paradox: they increase our ability to create life-saving innovations while simultaneously expanding opportunities for misuse. The paper ‘Know Your Scientist: KYC as Biosecurity Infrastructure’ proposes a shift in governance, moving beyond content-based screening (an inherently limited approach in biology) towards verifying the identities and affiliations of users accessing these powerful technologies. This framework, inspired by ‘Know Your Customer’ (KYC) practices in finance, establishes a three-tiered system of institutional vouching, output monitoring, and behavioral analysis to raise the cost of malicious activity. Could this approach, prioritizing accountability and traceability, offer a viable path toward responsible innovation in the rapidly evolving landscape of synthetic biology?

Navigating the Paradox of Biological Innovation

The rapid evolution of biological design technologies, exemplified by tools like RFdiffusion and AlphaFold, presents a paradoxical landscape of scientific progress and potential hazard. These innovations, capable of accurately predicting protein structures and even generating novel biomolecules, dramatically accelerate biological research and offer promising applications in medicine and materials science. However, this increased accessibility and power simultaneously heighten concerns regarding misuse, as the same capabilities that enable beneficial discoveries could be repurposed for harmful ends. Because these tools often require only limited specialized expertise, they pose a dual-use dilemma that demands careful attention to security protocols and responsible innovation, in order to mitigate the risk of dangerous biological agents being created, intentionally or unintentionally, or of critical systems being disrupted.

Current biosecurity protocols, largely built around monitoring known pathogens and restricting access to specialized equipment, are proving inadequate in the face of rapidly advancing AI-driven biological design tools. Tools such as RFdiffusion and AlphaFold put the ability to design and evaluate novel proteins in far more hands, shifting the risk from centralized laboratories to a much wider, less regulated landscape. Simply tracking known threats is no longer sufficient; a proactive access control framework is now essential. This means developing systems that anticipate potential misuse by considering who is requesting a design and in what context, rather than focusing solely on the sequences themselves. Such a framework would require establishing criteria for responsible innovation, implementing tiered access levels based on expertise and intent, and fostering international collaboration to ensure consistent standards and effective oversight. Together, these steps amount to a fundamental shift from reactive containment to preventative risk management.

The increasing ease with which individuals can design biological systems demands a fundamental shift in security protocols. Historically, biosecurity has relied on monitoring the creation of potentially dangerous pathogens or organisms, reacting after a threat emerges. However, the widespread accessibility of tools like RFdiffusion and AlphaFold, which allow even those without extensive training to generate complex biological designs, renders this reactive approach increasingly inadequate. A proactive framework, focused on preventing misuse at the design stage, is now essential. This requires developing robust access controls, establishing clear guidelines for responsible innovation, and fostering a culture of awareness regarding the dual-use potential of these powerful technologies, so that preventative measures keep pace with accelerating advances in biological design.

Establishing a Tiered Framework for Responsible Access

The proposed Know Your Customer (KYC) framework for biological AI tools draws direct parallels to Anti-Money Laundering (AML) regulations utilized in financial sectors. AML frameworks prioritize preventative measures to mitigate risk before illicit activities occur, and this principle is adopted to control access to potentially hazardous biological designs generated by AI. Rather than reactive security measures addressing misuse after it has occurred, KYC for Bio emphasizes upfront user vetting and continuous monitoring to establish trust and proactively prevent the development or dissemination of dangerous biological agents. This preventative approach aims to reduce the likelihood of malicious use by verifying user identity, intended research purpose, and ongoing activity patterns, thereby bolstering biosecurity within the rapidly evolving landscape of AI-driven biotechnology.

Tier I, Institutional Gatekeeping, functions as the initial access control layer by establishing user trust through affiliation with recognized research institutions. This process leverages existing Institutional Biosafety Committee (IBC) oversight, reducing redundant vetting procedures. Users requesting access to biological AI tools must demonstrate affiliation and submit research proposals for IBC review, confirming adherence to established biosafety guidelines and research ethics. Affiliation with an approved institution, together with an IBC-approved proposal, grants preliminary access, subject to ongoing monitoring via Tiers II and III. This approach minimizes the initial burden on tool providers while capitalizing on the established expertise and regulatory frameworks of participating institutions, allowing preventative access control to scale.
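The Tier I decision described above can be sketched in a few lines. This is only an illustration, not the paper's implementation: the `AccessRequest` fields, the `RECOGNIZED_INSTITUTIONS` registry, and the returned labels are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass

# Hypothetical registry of institutions recognized by the tool provider.
RECOGNIZED_INSTITUTIONS = {"example-university.edu", "example-biotech.com"}


@dataclass(frozen=True)
class AccessRequest:
    user_id: str
    institution: str    # institution the user claims affiliation with
    ibc_approved: bool  # whether that institution's IBC approved the proposal


def tier1_decision(request: AccessRequest) -> str:
    """Return 'grant_preliminary' or 'deny' for a Tier I access request."""
    if request.institution not in RECOGNIZED_INSTITUTIONS:
        return "deny"
    if not request.ibc_approved:
        return "deny"
    # Access is preliminary only: Tiers II and III still apply afterwards.
    return "grant_preliminary"
```

In this sketch the tool provider never evaluates the science itself; it only checks that a recognized institution has vouched for the user, which mirrors the delegation to IBCs described above.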

Tier II access control utilizes continuous output screening to identify potentially hazardous biological designs generated by AI models. This process employs Sequence Homology analysis, comparing generated sequences against databases of known dangerous or restricted biological entities, and Functional Annotation, which predicts the biochemical function of designed proteins to assess potential pathogenicity or toxicity. These methods are applied to all model outputs prior to dissemination, flagging designs with significant matches to known threats or predicted harmful functions for further review. The screening process is designed to be probabilistic, identifying designs requiring expert evaluation rather than providing a definitive safety assessment, and operates independently of the user’s declared research intent.
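As a rough illustration of the flag-for-review logic, the sketch below compares k-mer sets using Jaccard similarity. This is a deliberately simplified stand-in for real homology search, which would rely on alignment tools and curated databases; the reference entry is a toy placeholder (the 20 amino-acid letters in alphabetical order), and the function names and threshold are assumptions made for this example.

```python
from __future__ import annotations


def kmers(seq: str, k: int = 3) -> set[str]:
    """Return the set of overlapping k-mers in a sequence."""
    seq = seq.upper()
    return {seq[i:i + k] for i in range(len(seq) - k + 1)}


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity of two sets; 0.0 when both are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


# Toy placeholder database; a real deployment would use a curated list.
SEQUENCES_OF_CONCERN = {"toy_entry_1": "ACDEFGHIKLMNPQRSTVWY"}


def screen_output(seq: str, threshold: float = 0.5, k: int = 3) -> list[str]:
    """Return names of reference entries similar enough to need expert review."""
    query = kmers(seq, k)
    return [
        name
        for name, ref in SEQUENCES_OF_CONCERN.items()
        if jaccard(query, kmers(ref, k)) >= threshold
    ]
```

A non-empty result means "route to a human reviewer", not "unsafe", which matches the probabilistic role the screening step plays in the framework.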

Tier III, Behavioral Monitoring, implements a system for tracking user interactions with biological AI tools over time to identify activity inconsistent with their stated research objectives. This approach draws parallels to fraud detection in financial systems, where unusual transaction patterns trigger investigation. Specifically, the system establishes a baseline of expected user behavior – including data access patterns, model input characteristics, and frequency of analysis – and flags deviations exceeding pre-defined thresholds. These anomalies are then subject to review by designated personnel to assess potential misuse or safety concerns. Data collected for behavioral monitoring is anonymized and aggregated where possible to protect user privacy, while still enabling effective anomaly detection. The system is designed to be adaptive, continually refining baseline expectations based on evolving user behavior and research norms.
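One simple way to express "deviation from a baseline beyond a pre-defined threshold" is a z-score test on a single usage metric, such as daily job submissions. The sketch below assumes that metric and a threshold of three standard deviations purely for illustration; the source does not specify which statistics or thresholds a real system would use.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], observed: float,
                 z_threshold: float = 3.0) -> bool:
    """Flag an observation that deviates strongly from a user's own baseline.

    `history` holds past values of one usage metric (hypothetically,
    daily job submissions); `observed` is today's value.
    """
    if len(history) < 2:
        return False  # too little data to establish a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Perfectly constant baseline: any change counts as a deviation.
        return observed != mu
    return abs(observed - mu) / sigma > z_threshold
```

Appending each reviewed observation to `history` would let the baseline adapt over time, in the spirit of the adaptive behavior described above. A flagged value is only a trigger for human review.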

Addressing the Challenge of Open-Weight Models

The proliferation of open-weight models presents a substantial obstacle to implementing Know Your Customer (KYC) frameworks for biological AI models. Unlike closed-source, centrally managed models where access and usage can be controlled, open-weight models are publicly available for download and modification. This inherent accessibility means that anyone can bypass the access restrictions or monitoring mechanisms established for controlled models. Consequently, even a robust KYC system applied to a specific, secured model cannot prevent malicious actors from using freely available open-weight alternatives for similar, potentially harmful, purposes. The distributed nature of open-weight models and the lack of a central authority further complicate efforts to enforce responsible use or trace the origin of generated designs.

The proposed KYC framework operates by controlling access to specific, hosted models such as ESM3, verifying user identity and adherence to usage policies before granting access. However, this access control is ineffective against open-weight models, which can be downloaded and run without any interaction with a central authority. Because the model weights are freely available, any user can bypass the KYC framework entirely by using these alternatives, negating the security measures implemented for controlled models. This fundamentally limits the framework’s ability to comprehensively address risk, as malicious actors are not restricted from employing openly available resources.

Addressing the challenges posed by open-weight models requires a two-pronged approach. Research will focus on developing incentive structures to encourage responsible development and deployment, potentially including reputational systems, differential privacy mechanisms, or collaborative agreements within the open-source community. Simultaneously, efforts will explore complementary monitoring techniques – separate from model access control – to detect and mitigate harmful applications of these freely available models. These techniques may include anomaly detection in generated content, tracking model usage patterns, and developing methods to attribute misuse without compromising user privacy. The goal is not to restrict access, but to establish a layered defense against malicious actors while fostering innovation within the open-weight model ecosystem.

Cultivating a Secure Future for Biological AI

A robust “Know Your Customer” (KYC) framework offers a critical safeguard against the potential misuse of increasingly powerful biological AI tools. This proactive approach extends beyond simple authentication, encompassing continuous monitoring of user activity and data access patterns to identify and mitigate suspicious behavior. By vetting individuals seeking to use these technologies, and tracking how they are used, the framework establishes accountability and raises the cost for malicious actors seeking to employ hosted biological AI for harmful purposes, such as the creation of dangerous pathogens or the manipulation of critical biological systems. This system isn’t merely reactive; it anticipates potential threats by analyzing usage trends and flagging anomalies, enabling timely intervention before misuse occurs. The intended result is a more secure landscape for biological AI innovation, fostering responsible development and deployment.

A secure research environment is paramount for the advancement of biological AI, and a robust framework actively cultivates this essential condition. By minimizing the potential for unauthorized access and malicious use, researchers are empowered to explore the frontiers of drug discovery and synthetic biology with greater confidence. This allows for accelerated development of novel therapeutics, sustainable biomaterials, and innovative biotechnologies, all while mitigating the risks associated with powerful biological tools. The resulting atmosphere of trust and accountability not only safeguards scientific progress but also fosters public confidence in the responsible application of these groundbreaking technologies, paving the way for a future where biological AI benefits all of society.

Establishing a robust security framework for biological AI demands a concerted effort extending beyond individual laboratories. Successful implementation necessitates open communication and collaboration between researchers developing these powerful tools, the institutions housing this research, and policymakers tasked with overseeing responsible innovation. Clear, universally adopted guidelines and standards for access control are paramount; these should define permissible uses, data handling protocols, and mechanisms for auditing activity. This collaborative approach ensures that advancements in biological AI benefit society while mitigating potential risks, fostering public trust, and preventing misuse – a shared responsibility crucial for navigating this emerging field.

The proposed KYC framework, mirroring financial AML protocols, recognizes that robust biosecurity isn’t about predicting malicious intent, but about establishing clear boundaries and accountability. This echoes a remark attributed to Robert Tarjan: “A good program is not about writing clever code; it’s about structuring code.” The article champions a similar principle: a well-defined system of access control, tiered verification, and activity monitoring, rather than an attempt to foresee every potential misuse of biological design tools. By focusing on how access is granted and managed, rather than what is being designed, the approach acknowledges the inherent difficulty of predicting dual-use applications and prioritizes structural integrity as the foundation of responsible innovation. This emphasis on structure suggests that a resilient biosecurity infrastructure arises from clarity and well-defined processes, not intricate prediction algorithms.

What’s Next?

The proposal to adapt financial ‘Know Your Customer’ protocols to biosecurity introduces a curiously elegant simplicity. It sidesteps the intractable problem of predicting dangerous designs (a fool’s errand given the combinatorial nature of biological systems) and focuses instead on the more pragmatic task of knowing who is doing the designing. However, this is not a technical fix; it’s a shift in the framing of the problem. The crucial question becomes: what are the acceptable limits of institutional affiliation as a proxy for responsible conduct? Simply establishing a university or company as a vetting authority raises the question of internal oversight and the potential for capture.

Future work must address the granularity of access control. A three-tier system, while conceptually sound, needs to account for the diversity of biological design tools, from simple sequence editing to complex, generative AI platforms. The level of scrutiny should be commensurate with the inherent risk of the technology itself. Furthermore, the article implicitly assumes a relatively stable geopolitical landscape. What happens when institutional boundaries become blurred, or when actors deliberately seek to circumvent these controls?

Ultimately, the success of this approach hinges not on technological sophistication, but on a clear articulation of societal values. It is a reminder that biosecurity is not merely a technical challenge, but a fundamentally political one. The discipline must move beyond reactive measures and grapple with the deeper question of what constitutes responsible innovation in the life sciences, and who decides.

Original article: https://arxiv.org/pdf/2602.06172.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-10 01:50