Author: Denis Avetisyan

Researchers have developed a system that uses the power of large language models to decipher user motivations from reviews and behavior, leading to more relevant and effective recommendations.

LMMRec leverages large language models and multimodal data to model user motivations and improve recommendation accuracy and robustness.

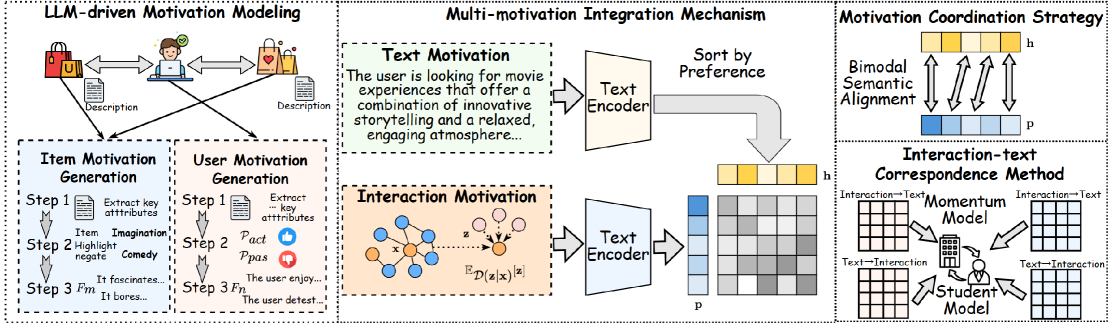

While recommender systems increasingly leverage multimodal data, a crucial understanding of why users prefer certain items remains largely untapped. The work introduces LMMRec: LLM-driven Motivation-aware Multimodal Recommendation, a novel framework that explicitly models user motivations by integrating insights from both textual reviews and interaction data via large language models. This approach achieves performance gains of up to 4.98% by robustly aligning motivations across modalities using contrastive learning and a dual-encoder architecture. Could a deeper understanding of user motivations, powered by LLMs, unlock a new generation of truly personalized and effective recommendation systems?

The Illusion of Personalization: Why Recommendations Fail

Conventional recommendation systems, particularly those leveraging Collaborative Filtering, frequently fall short in delivering truly personalized experiences due to a fundamental limitation: an inability to discern the why behind a user’s preferences. These systems primarily focus on identifying patterns of similarity – users who liked similar items in the past – and extrapolate from there. However, this approach overlooks the complex and often nuanced motivations driving those preferences; a user might choose a thriller because they’re seeking escapism, a cookbook because they’re planning a dinner party, or a historical novel to learn about a specific era. Without understanding these underlying reasons, the resulting recommendations tend to be generic, suggesting items that are merely popular or superficially similar, rather than genuinely aligned with the user’s current needs and desires. This reliance on surface-level patterns ultimately diminishes the effectiveness of the system and limits its capacity to foster meaningful engagement.
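To make the "patterns of similarity" point concrete, here is a minimal sketch of user-based collaborative filtering. The user names, item names, and the `recommend` helper are all hypothetical, and real systems use far larger matrices and more careful weighting. Note that nothing in the code knows why anyone liked anything; it only counts overlap.

```python
import math

def cosine(a: set, b: set) -> float:
    """Cosine similarity between two users' sets of liked item ids."""
    if not a or not b:
        return 0.0
    return len(a & b) / math.sqrt(len(a) * len(b))

def recommend(target: str, likes: dict, k: int = 2) -> list:
    """Score unseen items by the similarity of the users who liked them."""
    scores = {}
    for other, items in likes.items():
        if other == target:
            continue
        sim = cosine(likes[target], items)
        for item in items - likes[target]:
            scores[item] = scores.get(item, 0.0) + sim
    # Sort by score (descending), then by name for a stable order.
    return sorted(scores, key=lambda i: (-scores[i], i))[:k]

# Toy interaction data (hypothetical).
likes = {
    "ana": {"thriller_a", "thriller_b"},
    "ben": {"thriller_a", "thriller_b", "thriller_c"},
    "cy": {"cookbook_a", "thriller_a"},
}
top = recommend("ana", likes)
```

Here "ana" is pointed at another thriller first simply because "ben" overlaps with her most, whether she was after escapism or buying a gift.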

While Graph Neural Networks (GNNs) represent a significant advancement over traditional collaborative filtering approaches to recommendation, their capacity to truly understand why a user desires a particular item remains limited. Current GNN architectures primarily focus on the relationships between users and items, often neglecting the valuable contextual information embedded within textual data – such as product reviews, social media posts, or search queries. This rich textual content frequently reveals underlying user needs, preferences, and motivations that directly influence their choices. The inability of existing GNNs to effectively integrate and leverage this information results in recommendations that, while potentially relevant based on past behavior, often fail to address the deeper, nuanced reasons behind a user’s intent, hindering the potential for truly personalized and satisfying experiences.
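As a rough illustration of what "focusing on the relationships between users and items" means, the sketch below runs one round of neighbour averaging on a tiny user-item graph. It is a deliberate simplification (plain mean aggregation, not the normalization used by any particular published GNN), and all names and values are made up for the example.

```python
def mean(vectors, dim):
    """Element-wise mean of a list of vectors; zeros if the list is empty."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]

def propagate(user_emb, item_emb, edges):
    """One message-passing round: each node takes the mean of its neighbours."""
    dim = len(next(iter(user_emb.values())))
    new_users = {
        u: mean([item_emb[i] for uu, i in edges if uu == u], dim)
        for u in user_emb
    }
    new_items = {
        i: mean([user_emb[u] for u, ii in edges if ii == i], dim)
        for i in item_emb
    }
    return new_users, new_items

# Hypothetical embeddings and interaction edges (user, item).
user_emb = {"u1": [1.0, 0.0], "u2": [0.0, 1.0]}
item_emb = {"i1": [1.0, 1.0], "i2": [0.0, 2.0]}
edges = [("u1", "i1"), ("u2", "i1"), ("u2", "i2")]
users_1, items_1 = propagate(user_emb, item_emb, edges)
```

The only input is the edge list. Review text never enters the computation, which is the gap the paragraph above describes.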

The precision of modern recommendation systems is fundamentally constrained by a difficulty in discerning why a user desires a particular item, not just that they might like it. Without understanding the underlying motivations – whether a user seeks information, entertainment, social connection, or a practical solution – algorithms resort to predicting preferences based on superficial patterns of past behavior. This limitation results in recommendations that, while statistically relevant, often lack genuine personalization and fail to anticipate evolving needs. Consequently, users are frequently presented with suggestions that feel generic or irrelevant, diminishing engagement and eroding trust in the system’s ability to provide truly valuable assistance. A system blind to intent ultimately offers a limited, and often frustrating, experience, hindering its potential to foster meaningful connections between users and desired content.

Beyond Patterns: LLMs and the Search for Intent

Large Language Models (LLMs) represent a significant advancement in natural language processing, enabling the automated extraction of data points from unstructured textual sources. Specifically, LLMs excel at processing user reviews and item descriptions, identifying key phrases, sentiments, and relationships within the text. This capability moves beyond simple keyword detection to encompass semantic understanding, allowing for the identification of nuanced information previously requiring manual analysis. Models like those based on the Transformer architecture can be fine-tuned or prompted to perform specific information extraction tasks, delivering scalable and repeatable results on large datasets. The resulting extracted data can then be used for downstream applications such as recommendation systems, market research, and customer feedback analysis.

Latent motivations in text can be surfaced with chain-of-thought prompting, a technique that asks a large language model (LLM) to lay out its reasoning step by step before giving an answer. Combined with models such as text-embedding-3-large and GPT-4o-mini, this makes it possible to identify the reasons behind user preferences or item characteristics. The embedding model, text-embedding-3-large, maps text to vectors that capture semantic similarity, while GPT-4o-mini handles the reasoning at relatively low cost. Together they extract motivations that are not explicitly stated in the source material, which goes beyond what keyword analysis can recover. The output is a structured description of the inferred motivations: the ‘why’ behind observed behaviors or attributes.
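A step-by-step prompt of this kind might look roughly like the sketch below. The prompt wording, the JSON keys, and both helper functions are assumptions made for illustration; the paper's actual prompts are not reproduced here, and no model is called. The reply string is a hand-written stand-in, not real model output.

```python
import json

def build_motivation_prompt(review: str) -> str:
    """Assemble a step-by-step prompt; the wording is illustrative only."""
    return (
        "You are analysing a user review.\n"
        "Step 1: List what the user liked or disliked.\n"
        "Step 2: Reason about what need or goal explains those reactions.\n"
        "Step 3: Output JSON with keys 'reasoning' and 'motivations'.\n\n"
        f"Review: {review}"
    )

def parse_motivations(raw_reply: str) -> list:
    """Pull the motivation list out of a JSON reply, tolerating bad output."""
    try:
        data = json.loads(raw_reply)
    except json.JSONDecodeError:
        return []
    if not isinstance(data, dict):
        return []
    motivations = data.get("motivations", [])
    if not isinstance(motivations, list):
        return []
    return [m.strip().lower() for m in motivations if isinstance(m, str)]

prompt = build_motivation_prompt("Quiet cafe, great for working all afternoon.")
# Hand-written stand-in for a model reply (hypothetical).
fake_reply = (
    '{"reasoning": "Mentions quiet and working.", '
    '"motivations": ["Focus", " remote work "]}'
)
motivations = parse_motivations(fake_reply)
```

Asking for the reasoning before the final list is the chain-of-thought part. The defensive parsing matters in practice because model replies are not guaranteed to be valid JSON.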

Extracted motivations, categorized as Text Motivation and Interaction Motivation, directly inform recommendation system performance by moving beyond simple content-based or collaborative filtering approaches. Text Motivation, derived from analyzing textual data like reviews, identifies why a user might prefer certain item characteristics. Interaction Motivation, conversely, assesses preferences based on a user’s past interactions – such as clicks, purchases, or time spent viewing items – revealing how a user behaves. Integrating both motivation types allows for the creation of recommendation models that predict not only what a user might like, but also why they might like it, leading to increased relevance, user satisfaction, and ultimately, improved conversion rates. This dual-motivation approach enables more nuanced personalization than traditional methods, particularly in scenarios where explicit user preferences are limited or unavailable.

LMMRec: A Framework for Motivation-Aware Recommendations

LMMRec is a novel recommendation framework designed to incorporate user motivations extracted through the application of Large Language Models (LLMs). The system processes user interactions to identify underlying reasons for those interactions – the ‘motivations’ – and represents them as embeddings. These motivation embeddings are then integrated into the recommendation process, allowing the system to move beyond simple preference matching and consider why a user might engage with an item. This approach aims to improve recommendation relevance by aligning recommendations with inferred user needs and intentions, ultimately enhancing user satisfaction and engagement. The framework utilizes LLMs for nuanced understanding of user behavior and translates those insights into a quantifiable signal for the recommendation algorithm.

The Motivation Coordination Strategy within LMMRec utilizes a Dual-Tower Architecture to independently encode user and item features into embedding vectors. These vectors are then aligned through Mutual Information Maximization (MIM), a process designed to increase the statistical dependence between the user and item representations. Specifically, MIM encourages the model to learn embeddings where knowing the user embedding provides maximal information about the item embedding, and vice-versa. This alignment ensures a consistent cross-modal space, enabling effective matching of users to items based on inferred motivations. The MIM objective typically maximizes a lower bound on the mutual information between the two representations, estimated with noise-contrastive techniques such as the InfoNCE loss.
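For readers unfamiliar with InfoNCE, the sketch below computes it in plain Python for a small batch. It assumes in-batch negatives, cosine similarity, and a temperature of 0.1; these are common defaults, not settings confirmed by the paper. For a batch of size N, log N minus this loss is the usual lower bound on the mutual information, so lowering the loss tightens the alignment between the two towers.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    """Scale a vector to unit length (left unchanged if it is all zeros)."""
    norm = math.sqrt(dot(v, v)) or 1.0
    return [x / norm for x in v]

def info_nce(anchors, positives, temperature=0.1):
    """Mean InfoNCE loss. Row i of `positives` is the match for anchor i;
    every other row in the batch acts as a negative."""
    a = [normalize(v) for v in anchors]
    p = [normalize(v) for v in positives]
    total = 0.0
    for i, anchor in enumerate(a):
        logits = [dot(anchor, cand) / temperature for cand in p]
        # Numerically stable log-sum-exp over all candidates.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denom - logits[i]
    return total / len(a)

# Toy outputs of the two towers (hypothetical values).
tower_a = [[1.0, 0.0], [0.0, 1.0]]
aligned = info_nce(tower_a, [[0.9, 0.1], [0.1, 0.9]])
shuffled = info_nce(tower_a, [[0.1, 0.9], [0.9, 0.1]])
```

When matching rows point the same way (`aligned`), the loss is small; when the pairs are swapped (`shuffled`), it is much larger.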

The Interaction-text Correspondence Method enhances motivation extraction by aligning interaction sequences with corresponding textual descriptions. This is achieved through Momentum-based Teacher-Student Learning, where a teacher network provides soft targets to guide the student network’s learning process. The method explicitly minimizes the Kullback-Leibler divergence between the teacher and student networks’ output distributions, forcing the student to emulate the teacher’s refined understanding of user motivations. This approach improves the robustness of motivation extraction by mitigating the impact of noisy or ambiguous interaction data and ensuring consistent representation across modalities.
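The two ingredients named here, a momentum-updated teacher and a KL term, can be sketched as follows. The momentum value, the direction of the divergence (teacher as target), and the toy numbers are assumptions for illustration rather than details taken from the paper.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw scores to a probability distribution."""
    m = max(logits)
    exps = [math.exp((x - m) / temperature) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

def momentum_update(teacher, student, momentum=0.99):
    """Teacher weights follow the student as an exponential moving average."""
    return [momentum * t + (1.0 - momentum) * s for t, s in zip(teacher, student)]

# Toy weights: the teacher drifts slowly toward the student.
teacher_w = [0.0, 0.0]
student_w = [1.0, -1.0]
teacher_w = momentum_update(teacher_w, student_w, momentum=0.9)

# Soft targets from the teacher guide the student's output distribution.
teacher_probs = softmax([2.0, 1.0, 0.1])
student_probs = softmax([1.5, 1.2, 0.3])
distill_loss = kl_divergence(teacher_probs, student_probs)
```

Because the teacher changes slowly, its targets are smoother than the student's own predictions from step to step, which is the usual argument for why this setup dampens the effect of noisy interactions.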

Performance and Applicability: A Glimmer of Hope

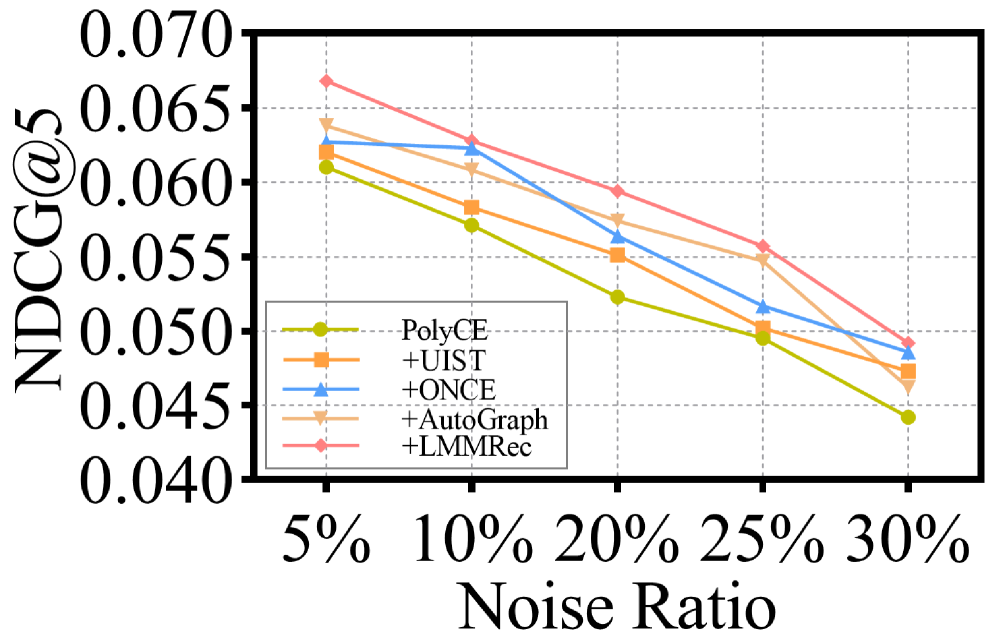

Rigorous testing of LMMRec across diverse datasets – including the Yelp Dataset, Amazon-book Dataset, and Steam Dataset – reveals a consistent and substantial performance advantage over established recommendation algorithms. When benchmarked against methods like PolyCF, UIST, WeightedGCL, and ONCE, LMMRec demonstrates improvements of up to 4.98% in NDCG@N and 4.17% in Recall@N, key metrics for evaluating recommendation accuracy and relevance. These gains indicate that LMMRec not only provides more accurate predictions but also excels at surfacing items users are likely to engage with, suggesting a robust and effective framework for enhancing recommendation systems.
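For reference, the two metrics can be computed per user as in the sketch below, using binary relevance. Exact conventions vary between papers (for example, how the ideal ranking is truncated), so treat this as one common formulation rather than the paper's evaluation code; the item ids are made up.

```python
import math

def recall_at_n(ranked, relevant, n):
    """Fraction of the relevant items that appear in the top-n list."""
    if not relevant:
        return 0.0
    hits = sum(1 for item in ranked[:n] if item in relevant)
    return hits / len(relevant)

def ndcg_at_n(ranked, relevant, n):
    """Binary-relevance NDCG: hits near the top of the list count for more."""
    dcg = sum(
        1.0 / math.log2(rank + 2)
        for rank, item in enumerate(ranked[:n])
        if item in relevant
    )
    # Ideal ranking places every relevant item (up to n) at the top.
    ideal_hits = min(len(relevant), n)
    idcg = sum(1.0 / math.log2(rank + 2) for rank in range(ideal_hits))
    return dcg / idcg if idcg > 0 else 0.0

ranked = ["a", "b", "c", "d"]   # model's ranking for one user
relevant = {"a", "d", "z"}      # held-out items the user engaged with
recall = recall_at_n(ranked, relevant, n=3)
ndcg = ndcg_at_n(ranked, relevant, n=3)
```

Recall@N only asks whether relevant items made the cut, while NDCG@N also rewards placing them higher, which is why the two numbers can move by different amounts.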

LMMRec distinguishes itself not merely through incremental gains on specific datasets, but through robust performance across diverse recommendation landscapes. Evaluations weren’t limited to a single user behavior pattern or item category; the framework demonstrated consistent improvements on the Yelp, Amazon-book, and Steam datasets – representing local business, e-commerce products, and gaming preferences, respectively. This adaptability suggests LMMRec isn’t narrowly tuned to a particular niche, but rather possesses a generalized ability to model user intent, regardless of the underlying domain. Such broad applicability is a critical factor for real-world deployment, indicating the potential for a single recommendation engine to serve varied platforms and user bases effectively, reducing the need for costly and complex customized solutions.

LMMRec distinguishes itself through a sophisticated ability to discern the underlying motivations driving user preferences, moving beyond simple item interactions to understand why a user might choose a particular item. This nuanced understanding facilitates the delivery of remarkably personalized recommendations, going beyond merely suggesting popular items to anticipating individual needs and desires. Consequently, users experience a higher degree of relevance in the suggestions they receive, fostering increased engagement with the recommendation system and ultimately leading to greater satisfaction with the platform as a whole. By prioritizing the intent behind user actions, LMMRec creates a more intuitive and rewarding experience, solidifying its potential for widespread adoption and sustained user loyalty.

The Illusion of Understanding: Future Directions

LMMRec marks a notable step in recommendation system design by applying large language models to multimodal data in order to capture not just what a user interacts with, but why. This approach moves beyond pattern-based prediction by attempting to model the underlying motivations driving user choices. By analyzing text reviews alongside behavioral patterns, LMMRec builds a more nuanced understanding of individual preferences. The system can then tailor recommendations to a user’s expressed needs and inferred motivations. This capability promises more relevant and satisfying experiences, stronger user engagement, and potentially a shift in how personalized content is delivered.

Researchers are actively pursuing enhancements to LMMRec by integrating nuanced motivational factors beyond simple preference prediction. This involves moving past surface-level data to model the underlying psychological drivers influencing user choices – considering not just what a user selects, but why. Future iterations will leverage advancements in large language models, exploring techniques like reinforcement learning from human feedback and causal inference to better discern user intent. The goal is to create recommendation systems capable of understanding complex needs, anticipating future desires, and ultimately providing suggestions that resonate on a deeper, more personal level – moving beyond mere prediction to genuine understanding and empathetic assistance.

The current generation of recommendation systems excels at identifying patterns in user behavior to predict future preferences, but a shift is occurring towards systems that delve into the underlying motivations driving those preferences. This research suggests a future where algorithms don’t simply suggest products based on past purchases, but instead infer the needs and desires fueling those purchases – is the user seeking convenience, social connection, self-expression, or problem-solving? By understanding the ‘why’ behind user choices, recommendation systems can move beyond superficial accuracy and offer genuinely helpful, personalized experiences, anticipating unstated needs and fostering deeper engagement. This represents a move towards more empathetic AI, capable of providing recommendations that resonate on a human level and contribute to user well-being.

The pursuit of motivation modeling, as detailed in LMMRec, feels…familiar. It’s the elegant theory colliding with the messy reality of user behavior. This paper attempts to bridge the gap between explicit feedback and implicit signals, aligning textual reviews with interaction data. As Marvin Minsky once observed, “Common sense is what everyone thinks they have, but very few possess.” LMMRec, in its ambition to understand user motivation, treads a similar line. It’s a complex undertaking, inevitably introducing new layers of potential failure, new forms of tech debt accruing with each refinement. The system will, undoubtedly, be broken in production, revealing gaps in the model’s understanding, but the attempt to model motivation is itself a worthwhile, if perpetually incomplete, endeavor.

What’s Next?

The enthusiasm for injecting large language models into recommendation systems is, predictably, reaching a fever pitch. LMMRec represents a logical, if optimistic, step – aligning motivations is a fine goal, provided anyone actually has consistent motivations. The system’s reliance on textual reviews invites the usual questions: how much of that text is genuine signal, and how much is carefully constructed noise designed to game the system? One anticipates a rapid escalation in adversarial examples, and a corresponding need for increasingly complex defenses.

More troubling is the implicit assumption that ‘robustness’ is achievable. Anything called ‘scalable’ simply hasn’t been properly load-tested. The cross-modal alignment, while elegant in theory, will undoubtedly encounter edge cases where user actions demonstrably contradict stated preferences. The logs, one suspects, will be… instructive.

Ultimately, the field will likely discover that modeling ‘motivation’ is far more difficult than it appears. Better one well-understood heuristic than a hundred opaque neural networks pretending to understand human desire. The pursuit continues, of course. It always does. And someone, somewhere, will inevitably declare it ‘solved’ – until production finds a way to prove them wrong.

Original article: https://arxiv.org/pdf/2602.05474.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-08 05:39