Author: Denis Avetisyan

New research reveals how an operator’s experience level fundamentally changes their approach to remotely supervising and intervening with robotic systems.

Operator expertise significantly impacts intervention timing and strategy in human-robot teaming, with implications for the design of adaptive user interfaces.

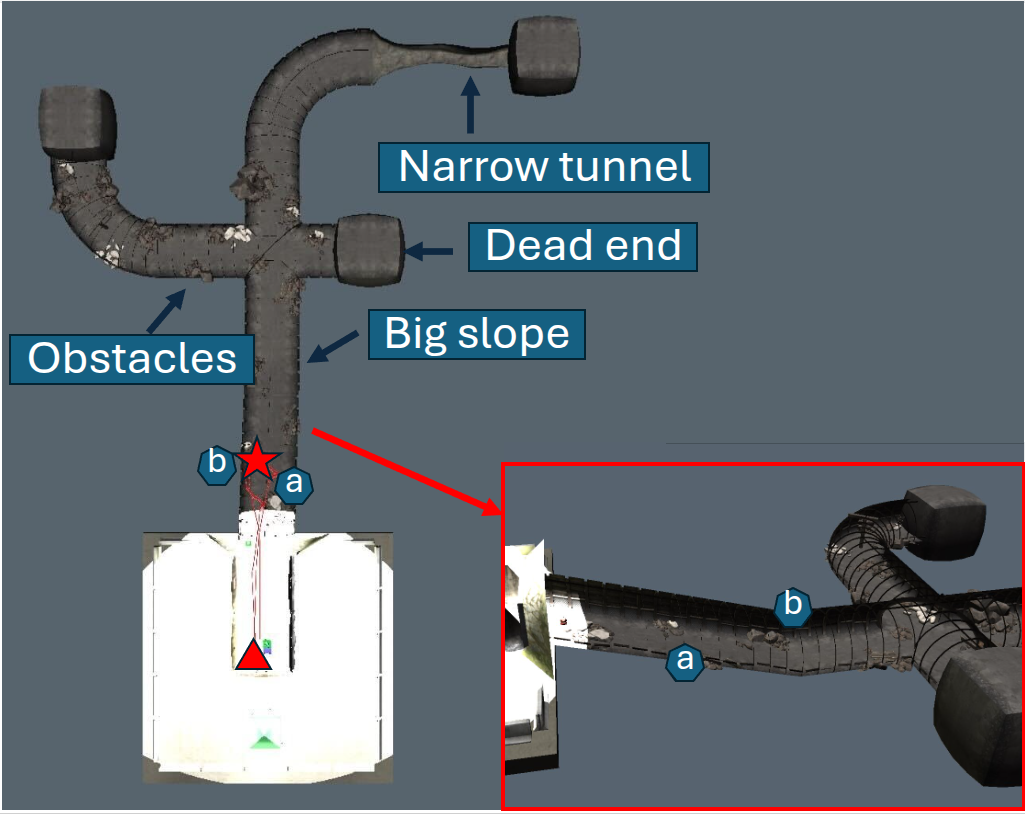

As robots become increasingly autonomous, effective human-robot teaming hinges on nuanced supervisory strategies, yet user expertise significantly impacts these interactions. This study, ‘Influence of Operator Expertise on Robot Supervision and Intervention’, investigates how varying levels of robotics experience shape intervention decisions during remote robot supervision in simulated tunnel environments with [latex]N=27[/latex] participants. Findings reveal that novice users intervene more frequently based on immediate visual cues, while experts prioritize efficiency by delaying intervention, suggesting a critical need for adaptive interfaces tailored to operator skill. How can we design supervisory systems that dynamically adjust to user expertise, maximizing both safety and performance in human-robot collaboration?

The Inevitable Friction of Remote Control

The deployment of robotic systems into unpredictable, real-world scenarios – such as the aftermath of a disaster or a collapsed structure – introduces a unique set of operational hurdles. These environments are often characterized by limited visibility, unstable terrain, and the presence of hazardous materials, demanding robust robotic capabilities. However, even advanced hardware is insufficient without effective remote control, which is hampered by the very conditions necessitating robotic intervention. Communication bandwidth limitations, coupled with the potential for signal interference, create delays and data loss, making precise manipulation and situational awareness difficult. Consequently, operators face a constant trade-off between maintaining control and gathering sufficient information to navigate these complex landscapes, impacting both the speed and effectiveness of search and rescue operations.

The difficulties inherent in remotely controlling robots, particularly in dynamic or disaster-stricken environments, often stem from the unavoidable delays – or latency – in communication and the sheer volume of sensory data presented to the human operator. This information overload can severely impede a person’s ability to quickly assess a situation and make informed decisions; the time required to process visual feeds, sensor readings, and robot status updates, coupled with the round-trip communication delay, effectively slows down the entire operation. Consequently, even skilled operators can experience cognitive strain and diminished performance, making rapid response and precise manipulation exceedingly difficult – a critical limitation in time-sensitive scenarios like search and rescue or hazardous material handling.

The efficacy of remote robot operation is profoundly linked to the operator’s skill and experience; however, relying solely on highly trained personnel creates a significant bottleneck for widespread adoption. Current systems often demand extensive training to navigate complex scenarios and interpret sensor data effectively, limiting the pool of potential operators. Research is increasingly focused on developing adaptive interfaces and levels of automation that can bridge this expertise gap. These solutions aim to provide intuitive controls and automated assistance, enabling individuals with varying skill levels – from novices to experts – to successfully manage robotic systems in challenging environments. Ultimately, scaling remote exploration requires a shift towards more accessible and user-friendly technologies, broadening participation and maximizing the potential of robotic assistance in diverse applications.

Achieving truly effective remote collaboration between humans and robots necessitates a carefully calibrated interplay of independent action and directed control. Simply automating tasks, or conversely, demanding constant human intervention, proves inefficient and limits operational scope. Instead, research focuses on systems where robots proactively handle routine functions and alert human operators only when encountering ambiguity, requiring complex judgment, or facing unforeseen circumstances. This division of labor minimizes cognitive load on the operator, allowing for strategic oversight and rapid response to critical events. Successful implementations recognize that complete autonomy is often impractical in dynamic, real-world scenarios, and that human intuition and adaptability remain essential for navigating uncertainty and ensuring mission success; the optimal balance isn’t a fixed setting, but a fluid adjustment based on context and task demands.

Skill Modulation: A Patch for Inadequate Systems

Adaptive autonomy systems function by modulating a robot’s operational independence in direct relation to the assessed skill level of the human operator. This is achieved through continuous monitoring of operator actions and performance, allowing the system to dynamically adjust the degree of automated control. When the operator demonstrates proficiency in a given task, the system scales back its corrective assistance and lets the robot operate with a higher degree of autonomy. Conversely, if the system detects uncertainty or error in the operator’s actions, it increases its level of assistance, potentially offering guidance or assuming direct control to prevent mistakes and maintain task completion. This dynamic adjustment ensures the robot operates at an appropriate level of complexity for the operator, optimizing both efficiency and user experience.

Traditional automation systems operate with pre-programmed instructions, executing tasks independently without accounting for unforeseen circumstances or operator capabilities. In contrast, systems employing collaborative robot behavior allow the robot to actively solicit assistance from a human operator when encountering situations outside its programmed parameters or confidence levels. This capability shifts the paradigm from complete automation to a collaborative workflow, where the robot functions as a teammate, requesting input rather than failing silently or requiring manual intervention. This approach necessitates a communication channel for help requests and a mechanism for the operator to provide guidance, ultimately improving overall system robustness and task completion rates.
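A minimal sketch of the help-request idea described above, assuming the robot exposes a scalar confidence estimate and a "stuck" flag. The names `HelpRequest` and `maybe_request_help`, and the threshold of 0.6, are hypothetical illustrations rather than anything taken from the study or from the CSIRO software.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HelpRequest:
    """Message the robot would send to the operator (hypothetical structure)."""
    reason: str
    confidence: float


def maybe_request_help(confidence: float,
                       threshold: float = 0.6,
                       stuck: bool = False) -> Optional[HelpRequest]:
    """Return a HelpRequest when the robot should ask for assistance, else None.

    Assumption: `confidence` is a value in [0, 1] produced by the robot's planner,
    and `stuck` is set when no progress toward the goal has been detected.
    """
    if stuck:
        return HelpRequest("no progress toward goal", confidence)
    if confidence < threshold:
        return HelpRequest("planner confidence below threshold", confidence)
    return None  # keep operating autonomously


# Usage: the robot asks for help only in the low-confidence case.
print(maybe_request_help(0.9))   # None
print(maybe_request_help(0.3))   # HelpRequest with the low-confidence reason
```

The point of the design is that the robot surfaces a reason with each request, so the operator does not need to watch the feed continuously to work out why help is needed.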

Robot-initiated help requests demonstrably improve human-robot interaction by offloading cognitive burden from the operator. Studies indicate a statistically significant preference for this interaction method among participants; a Chi-square test ([latex]\chi^2(2, N=27) = 12.67[/latex], [latex]p = 0.0018[/latex]) reveals that the majority favored systems where the robot actively solicits assistance when needed, rather than passively awaiting instruction or operating independently. This facilitates a more intuitive collaborative experience, as operators are not required to continuously monitor the robot’s progress or anticipate potential failures, leading to reduced mental workload.
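As a quick check on the reported statistic, the p-value can be recomputed from the test statistic alone. For a chi-square distribution with 2 degrees of freedom, the upper-tail probability has the closed form exp(-x/2), so no statistics library is needed. The function name `chi2_sf_df2` is just an illustrative helper; the underlying preference counts are not given in this article and are not assumed here.

```python
import math


def chi2_sf_df2(statistic: float) -> float:
    """Upper-tail probability of a chi-square variable with 2 degrees of freedom.

    For df = 2 the survival function reduces to exp(-x / 2).
    """
    if statistic < 0:
        raise ValueError("chi-square statistic must be non-negative")
    return math.exp(-statistic / 2.0)


p_value = chi2_sf_df2(12.67)
print(f"p = {p_value:.4f}")  # prints p = 0.0018
```

The recomputed value agrees with the figure quoted above.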

The adaptive autonomy system incorporates operator skill assessment as a core component for modulating robot behavior. This assessment, conducted through monitoring of operator actions, error rates, and completion times, establishes a baseline proficiency level. The system then utilizes this data to dynamically adjust the robot’s autonomy; lower skill levels trigger increased assistance and more frequent help requests, while higher skill levels enable greater independent operation. This tiered approach ensures the robot’s actions align with the operator’s capabilities, optimizing task performance and minimizing the potential for errors stemming from mismatched autonomy levels. The assessment is continuous and allows for real-time adjustments to the level of support provided.
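The tiered mapping described above could look roughly like the following. This is a sketch under stated assumptions: the equal weighting of accuracy and speed, the 120-second reference time, and the 0.5 and 0.8 cut-offs are all invented for illustration, and the names `OperatorStats`, `skill_score`, and `select_autonomy` are hypothetical rather than part of any published system.

```python
from dataclasses import dataclass
from enum import Enum


class AutonomyLevel(Enum):
    HIGH_ASSISTANCE = "high_assistance"  # frequent help requests and guidance
    SHARED = "shared"                    # mixed control
    HIGH_AUTONOMY = "high_autonomy"      # robot operates largely independently


@dataclass
class OperatorStats:
    error_rate: float         # fraction of operator actions judged erroneous, 0..1
    mean_completion_s: float  # mean task completion time in seconds


def skill_score(stats: OperatorStats, reference_time_s: float = 120.0) -> float:
    """Combine accuracy and speed into a score in [0, 1] (illustrative weighting)."""
    accuracy = 1.0 - min(max(stats.error_rate, 0.0), 1.0)
    speed = min(reference_time_s / max(stats.mean_completion_s, 1e-9), 1.0)
    return 0.5 * accuracy + 0.5 * speed


def select_autonomy(score: float) -> AutonomyLevel:
    """Lower skill gets more assistance; higher skill gets more robot independence."""
    if score < 0.5:
        return AutonomyLevel.HIGH_ASSISTANCE
    if score < 0.8:
        return AutonomyLevel.SHARED
    return AutonomyLevel.HIGH_AUTONOMY


# Usage: re-evaluate whenever the running statistics are updated.
novice = OperatorStats(error_rate=0.5, mean_completion_s=400.0)
expert = OperatorStats(error_rate=0.05, mean_completion_s=110.0)
print(select_autonomy(skill_score(novice)))  # AutonomyLevel.HIGH_ASSISTANCE
print(select_autonomy(skill_score(expert)))  # AutonomyLevel.HIGH_AUTONOMY
```

Because the score is recomputed from running statistics, the selected tier can change during a session, which is what makes the assessment continuous rather than a one-off classification.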

![Novice, intermediate, and expert users demonstrated varying intervention patterns around the robot's trajectory (red arrow), with ellipses showing greater variance in intervention points for less experienced users.](https://arxiv.org/html/2601.15069v1/Fig6.png)
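Variance ellipses of the kind shown in the figure are commonly derived from the sample covariance of the 2D points. The sketch below shows one standard way to do that; whether the paper's authors used exactly this construction is an assumption, and the function name `covariance_ellipse` and the sample points are purely illustrative.

```python
import math
from statistics import mean
from typing import List, Tuple


def covariance_ellipse(points: List[Tuple[float, float]]):
    """Return (center, major_sd, minor_sd, angle_rad) of the 1-sigma ellipse.

    Uses the sample covariance matrix [[sxx, sxy], [sxy, syy]] and its
    closed-form eigenvalues for the 2x2 symmetric case.
    """
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs) / (n - 1)
    syy = sum((y - my) ** 2 for y in ys) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in points) / (n - 1)

    half_trace = (sxx + syy) / 2.0
    delta = math.hypot((sxx - syy) / 2.0, sxy)
    lam_major = half_trace + delta
    lam_minor = max(half_trace - delta, 0.0)  # guard against tiny negatives
    angle = 0.5 * math.atan2(2.0 * sxy, sxx - syy)  # orientation of major axis
    return (mx, my), math.sqrt(lam_major), math.sqrt(lam_minor), angle


# Usage with made-up intervention points lying along the x-axis.
center, major, minor, angle = covariance_ellipse([(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)])
print(center, major, minor, angle)
```

A wider ellipse for one group means its intervention points are more scattered, which is how the figure conveys that less experienced users intervene less consistently.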

Simulating Reality: A Convenient Fiction

The CSIRO NavStack Simulator utilizes a physics-based engine and customizable environment parameters to replicate conditions encountered during robotic exploration. It supports the modeling of multiple robotic platforms, including autonomous ground vehicles and aerial drones, and allows for the definition of complex terrain, lighting conditions, and sensor noise. Crucially, the simulator facilitates the incorporation of human-in-the-loop control, enabling the evaluation of teleoperation strategies and the assessment of human-robot interfaces. Data output includes robot pose, sensor readings, and intervention events, providing quantitative metrics for performance analysis and algorithm validation. The simulation environment is designed to be scalable, allowing for the modeling of both small-scale indoor scenarios and large-scale outdoor deployments.

The CSIRO NavStack Simulator facilitates the evaluation of diverse intervention strategies by allowing users to model scenarios requiring human or automated assistance during robotic operations. These strategies, ranging from teleoperation and automated replanning to collaborative task execution, are subjected to simulated conditions mirroring real-world complexities like communication delays, sensor noise, and environmental uncertainty. Quantitative metrics, including task completion time, intervention frequency, and overall mission success rate, are recorded and analyzed to compare the effectiveness of each strategy. This capability enables developers to identify optimal intervention approaches and refine robotic systems to minimize reliance on external support, ultimately improving autonomous operational performance and robustness.

The CSIRO NavStack Simulator incorporates Simultaneous Localization and Mapping (SLAM) systems to facilitate the creation of detailed and dynamic environmental models. These integrated SLAM algorithms allow for the simulation of robotic agents navigating and building maps of unknown spaces, replicating the challenges of real-world exploration. Specifically, the simulator supports multiple SLAM implementations, enabling the testing of different algorithms (including visual SLAM and LiDAR-based SLAM) under varying conditions such as sensor noise, lighting changes, and dynamic obstacles. The resulting maps are used for path planning, obstacle avoidance, and localization of the robotic agent within the simulated environment, providing a realistic foundation for evaluating navigation performance and the effectiveness of intervention strategies.

Optimization of waypoint placement within the CSIRO NavStack Simulator directly impacts robotic mission efficiency and reduces the necessity for human intervention. The simulator allows for iterative testing of various waypoint configurations across modeled terrains and operational constraints. By systematically adjusting waypoint density, location, and sequence, users can identify configurations that minimize travel distance, energy consumption, and instances where the robot requires assistance due to localization failures or unexpected obstacles. Quantitative metrics, such as total path length, intervention frequency, and mission completion time, are recorded and analyzed to determine the optimal waypoint strategy for a given environment and robotic platform. This process enables proactive identification and mitigation of potential issues before deployment in real-world scenarios.
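The comparison of waypoint configurations can be sketched as a simple cost ranking. The example below assumes each simulated trial yields the waypoint sequence and a count of interventions; the `TrialResult` structure, the 10-metre penalty per intervention, and the sample configurations are hypothetical and do not reflect the simulator's actual output format.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class TrialResult:
    waypoints: List[Tuple[float, float]]  # ordered (x, y) positions in metres
    interventions: int                    # times the operator had to step in


def path_length(waypoints: List[Tuple[float, float]]) -> float:
    """Total Euclidean length of the polyline through the waypoints."""
    return sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))


def cost(result: TrialResult, penalty_m: float = 10.0) -> float:
    """Path length plus an assumed distance-equivalent penalty per intervention."""
    return path_length(result.waypoints) + penalty_m * result.interventions


def best_configuration(results: Dict[str, TrialResult]) -> str:
    """Name of the configuration with the lowest combined cost."""
    return min(results, key=lambda name: cost(results[name]))


# Usage: a shorter route that triggers interventions can lose to a longer, cleaner one.
results = {
    "direct": TrialResult([(0.0, 0.0), (3.0, 4.0)], interventions=2),
    "detour": TrialResult([(0.0, 0.0), (3.0, 0.0), (3.0, 4.0)], interventions=0),
}
print(best_configuration(results))  # prints detour
```

Folding interventions into the cost reflects the trade-off in the paragraph above: the shortest path is not necessarily the best one if it pushes the robot into situations where it needs help.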

The Human Factor: Still the Biggest Bottleneck

The success of remotely operated robotic teams is fundamentally linked to the skill level of the human operator, ranging from those with limited experience to highly proficient experts. Studies reveal a clear correlation between operator expertise and overall system performance; novice operators typically require more frequent guidance and exhibit slower completion times compared to their more seasoned counterparts. As skill increases, operators demonstrate an enhanced ability to anticipate robotic needs, effectively manage complex situations, and seamlessly integrate into the human-robot workflow. This proficiency translates directly into improved efficiency, reduced error rates, and a greater capacity to handle unforeseen challenges during remote operations, highlighting the critical importance of comprehensive training and skill development for maximizing the potential of these collaborative systems.

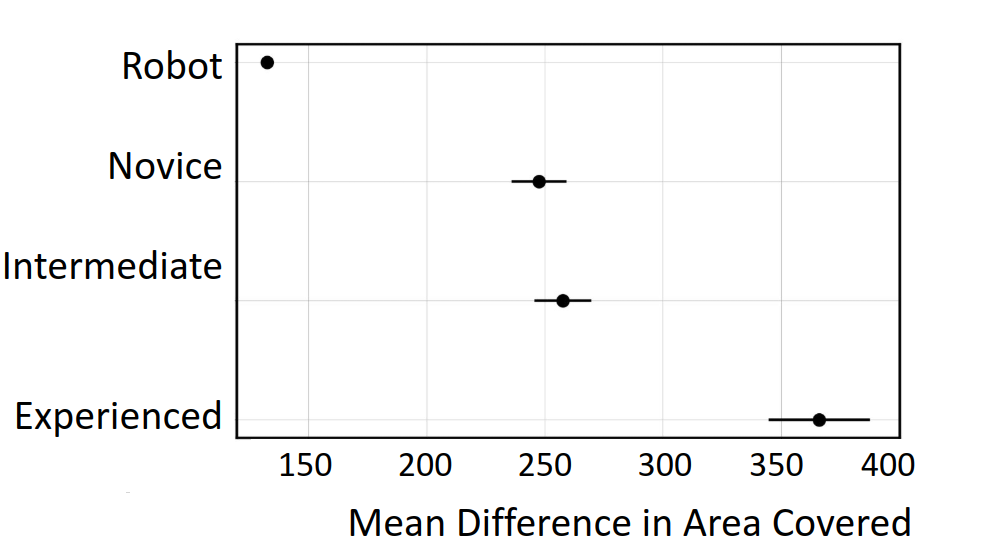

The frequency with which a human operator needs to intervene during remote robot operation is demonstrably linked to their level of expertise. The study reports a statistically significant effect ([latex]p < 0.001[/latex]), indicating that seasoned operators require substantially fewer interventions than their novice counterparts. This suggests that experienced individuals are better able to anticipate potential robot failures or navigational challenges, allowing the robot to continue operating autonomously. Consequently, this reduced intervention rate not only streamlines the remote operation but also enhances overall efficiency, as less time is spent correcting issues and more time is dedicated to completing the intended task. The data underscores the importance of operator training and skill development in maximizing the benefits of human-robot teaming.

Effective area coverage during robotic operations is fundamentally linked to a robot’s capacity for autonomous function and a human operator’s skill in facilitating that independence. Studies reveal that minimizing constant human control allows for significantly greater progress in mapping and exploration; notably, both experienced and intermediate users achieved substantially larger areas of coverage following an intervention – a statistically significant finding with a p-value of less than 0.05. This suggests that while intervention remains necessary, skilled operators are better at strategically intervening to enable continued autonomous operation, rather than reverting to direct control, ultimately maximizing the robot’s efficiency and the scope of its exploration.

Effective tunnel exploration, a cornerstone of search and rescue operations in collapsed structures or cave systems, benefits dramatically from a carefully balanced approach to robotic autonomy and human oversight. Studies reveal that robots capable of adapting their behavior – navigating obstacles, mapping environments, and identifying potential victims – significantly improve search efficiency. However, complete autonomy isn’t feasible; instead, the most successful strategies involve adaptive autonomy, where the robot handles routine tasks while strategically requesting human intervention for complex situations. This collaborative dynamic, requiring seamless communication and trust between operator and robot, allows for faster, safer, and more comprehensive exploration of confined and often hazardous tunnel networks, ultimately increasing the probability of locating and assisting those in need.

The study’s findings regarding operator expertise predictably echo a fundamental truth about complex systems. It observes that novices intervene earlier, driven by immediate visual cues, while experts demonstrate a calculated patience, delaying action for optimized efficiency. This isn’t surprising; experience teaches one to tolerate ambiguity, to let the system run before applying a fix. As the Socratic maxim has it, the most profound knowledge is the knowledge that one knows nothing. This resonates with the research, as the ‘expert’ operator doesn’t necessarily know the optimal solution immediately, but possesses the wisdom to observe, analyze, and intervene only when truly necessary, a skill born from countless cycles of observation and, inevitably, graceful failure. The adaptive interfaces proposed aren’t about preventing intervention, but about managing its inevitable arrival.

The Road Ahead (and the Potholes)

This investigation into operator expertise, while illuminating, predictably reveals that humans are messy. The finding – that novices grab the wheel first, while experts let the simulation run a little longer – isn’t exactly a shock. It simply confirms that elegant theories of shared autonomy crumble the moment a real operator encounters an unexpected event. The adaptive interfaces proposed are, of course, the next expensive way to complicate everything, trading one set of failure modes for another. A system that dynamically adjusts to ‘skill level’ will inevitably misclassify operators, or worse, create incentives for gaming the system.

The real question isn’t how to build interfaces that cater to expertise, but whether that’s the correct lever to pull. The study relied on simulated environments – a necessary starting point, but one that conveniently avoids the realities of fatigue, distraction, and the sheer unpredictability of production. Any claims of generalizability should be viewed with appropriate skepticism. It’s safe to assume that the first deployment will expose a previously unconsidered edge case.

Ultimately, the pursuit of perfect human-robot teaming feels a bit like chasing a mirage. If the code looks perfect, no one has deployed it yet. The field will likely progress not through revolutionary architectures, but through iterative patching and pragmatic workarounds. The most valuable contributions may not be novel algorithms, but detailed logs of how things actually break, and why.

Original article: https://arxiv.org/pdf/2601.15069.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-22 15:13