Author: Denis Avetisyan

A new vision for particle physics facilities proposes shifting control from human operators to intelligent AI systems, promising greater efficiency and adaptability.

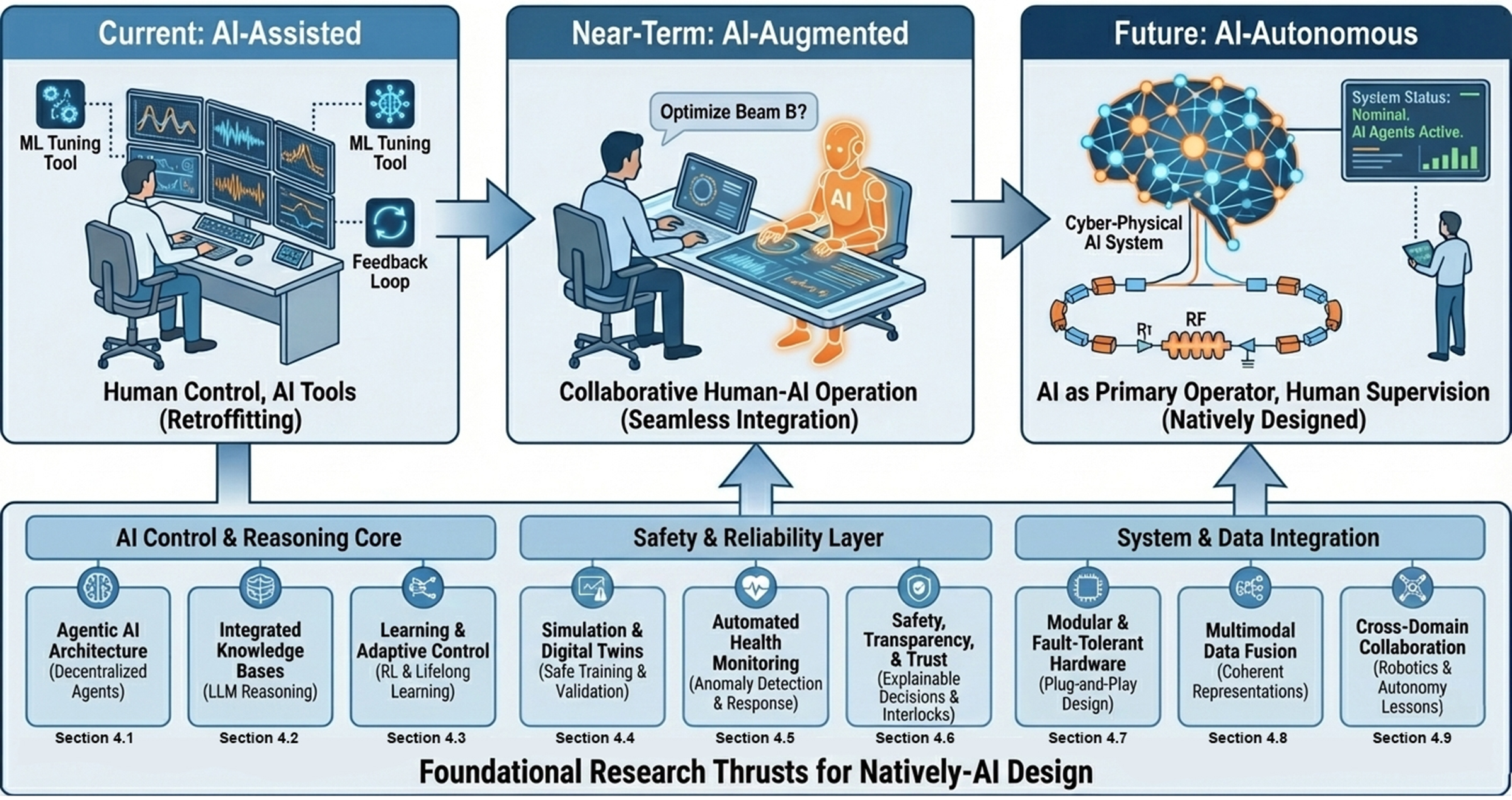

This review explores the path toward fully autonomous particle accelerators driven by machine learning, digital twins, and agentic AI for enhanced operation and automated health monitoring.

Despite increasing complexity in scientific instrumentation, particle accelerator control remains largely reliant on human expertise and intervention. This position paper, ‘Toward a Fully Autonomous, AI-Native Particle Accelerator’, proposes a paradigm shift toward facilities designed from the ground up for self-driving operation, leveraging artificial intelligence to jointly optimize performance and enable autonomous control. The core vision centers on an AI-native architecture encompassing adaptive learning, digital twins, and agentic control, promising unprecedented science output and reliability. Will this roadmap catalyze a future where AI manages the intricacies of particle acceleration, freeing researchers to focus on scientific discovery?

Whispers of Complexity: Taming the Modern Accelerator

Historically, the operation of particle accelerators has been intimately linked to the skill of human operators, who meticulously tune machine parameters to optimize beam quality and stability. This reliance on manual control, however, presents inherent limitations as accelerators grow in size and complexity. The sheer number of adjustable parameters, often in the thousands, creates a significant bottleneck, hindering rapid exploration of the vast operational space and the achievement of peak performance. Furthermore, human reaction times and cognitive load can impede effective responses to transient events, potentially leading to beam instability or even machine damage. Consequently, fully automated control systems are seen as crucial for unlocking the full potential of next-generation accelerators and the scientific discoveries they enable.

The next generation of particle accelerators, of the kind envisioned under ambitious programs such as the Genesis Mission, presents a formidable challenge to conventional control systems. These machines, designed for unprecedented performance, feature intricate configurations and vast numbers of interacting components. Manual operation, once sufficient, is increasingly inadequate for managing this complexity: the volume of parameters and the speed at which they change call for automated strategies. Algorithms that combine real-time data analysis with predictive modeling are needed to maintain beam stability and optimize experimental conditions. This shift toward automation isn’t simply about relieving human operators; it’s about unlocking the full potential of these machines so they can probe the fundamental laws of nature with greater precision and efficiency. Intelligent control systems are therefore integral to the success of future high-energy physics efforts.

Particle accelerators are exceptionally sensitive instruments, and beam loss, in which particles deviate from their intended path or are lost entirely, presents a significant operational hurdle. Conventional diagnostic and corrective methods often rely on manual intervention and pre-programmed responses, which struggle to keep pace with the speed and complexity of modern machines. The time required for human analysis and adjustment can allow even minor instabilities to escalate, potentially damaging expensive components or halting experiments. Research is therefore increasingly focused on automated systems that can rapidly identify the source of beam loss (for example, magnet misalignment or radio-frequency fluctuations) and apply corrective actions within milliseconds. These systems use machine learning to analyze large streams of sensor data, anticipate instabilities, and proactively adjust accelerator parameters, improving both operational efficiency and experimental uptime.
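
As a minimal sketch of the fast detect-and-respond logic described above, the Python below assumes a set of beam-loss monitors (the `BLM01`-style names are hypothetical), a per-monitor baseline recorded during good running, and two illustrative thresholds. It localizes the worst loss by its excess over baseline and picks a response tier. It is an assumption-laden illustration, not the paper's method; a real machine-protection system would typically run such logic in dedicated hardware with far richer diagnostics.

```python
from dataclasses import dataclass

@dataclass
class LossEvent:
    monitor: str   # hypothetical beam-loss monitor (BLM) name
    excess: float  # reading above that monitor's baseline (arbitrary units)
    action: str    # "none", "correct", or "abort"

def assess_beam_loss(readings: dict[str, float],
                     baselines: dict[str, float],
                     correct_threshold: float,
                     abort_threshold: float) -> LossEvent:
    """Localize the worst loss point and choose a response tier."""
    # Excess loss at each monitor relative to its own good-running baseline
    excess = {name: value - baselines.get(name, 0.0) for name, value in readings.items()}
    worst = max(excess, key=excess.get)
    level = excess[worst]
    if level >= abort_threshold:
        action = "abort"    # protect the machine first
    elif level >= correct_threshold:
        action = "correct"  # hand off to a slower corrective/tuning loop
    else:
        action = "none"
    return LossEvent(worst, level, action)
```

For example, readings where `BLM02` sits at 5.3 against a baseline of 1.0, with thresholds of 2.0 and 10.0, produce a "correct" event localized at `BLM02`.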

The Rise of the Agent: AI-Driven Control Architectures

The AI control architecture for accelerator operations is built around autonomous agents, each responsible for a specific subsystem such as radio frequency (RF), beam diagnostics, or vacuum. These agents operate independently within predefined objectives and constraints, but they also communicate and coordinate with one another to meet overall accelerator performance goals. This distributed approach allows localized optimization and rapid response to subsystem-specific events without requiring centralized intervention. The architecture supports hierarchical arrangements of agents, enabling both low-level control of individual components and high-level optimization of beam parameters. Agent functions include data acquisition, process variable (PV) setpoint adjustment, fault detection, and automated recovery, all executed without direct operator input under normal operating conditions.
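
The hierarchical agent arrangement can be sketched in Python as follows. This is an illustrative assumption rather than the paper's design: the PV store is a plain dictionary standing in for a real control system such as EPICS, and the PV names, limits, and class names are hypothetical. Each subsystem agent clamps setpoints to its constraints and can detect and recover from out-of-limit PVs, while a coordinator routes high-level targets to whichever agent owns each PV.

```python
from dataclasses import dataclass, field

# Hypothetical in-memory stand-in for a control-system PV store (not a real EPICS API)
PVStore = dict[str, float]

@dataclass
class SubsystemAgent:
    name: str
    pvs: PVStore                             # shared process-variable store
    limits: dict[str, tuple[float, float]]   # allowed (low, high) per owned setpoint PV
    log: list[str] = field(default_factory=list)

    def set_pv(self, pv: str, value: float) -> float:
        """Clamp a requested setpoint into its limits before writing it."""
        low, high = self.limits[pv]
        applied = min(max(value, low), high)
        self.pvs[pv] = applied
        self.log.append(f"{self.name}: {pv} <- {applied}")
        return applied

    def check_faults(self) -> list[str]:
        """Report any owned PV that has drifted outside its limits."""
        return [pv for pv, (low, high) in self.limits.items()
                if not low <= self.pvs[pv] <= high]

    def recover(self) -> None:
        """Simple automated recovery: pull faulted PVs back inside their limits."""
        for pv in self.check_faults():
            self.set_pv(pv, self.pvs[pv])

@dataclass
class CoordinatorAgent:
    """Higher tier: routes objectives to the agent that owns each PV."""
    agents: list[SubsystemAgent]

    def apply(self, targets: dict[str, float]) -> dict[str, float]:
        applied = {}
        for pv, value in targets.items():
            owner = next(a for a in self.agents if pv in a.limits)
            applied[pv] = owner.set_pv(pv, value)
        return applied

    def health_sweep(self) -> dict[str, list[str]]:
        """Collect fault reports from every agent, then let each one recover."""
        report = {a.name: a.check_faults() for a in self.agents}
        for a in self.agents:
            a.recover()
        return report
```

For example, a coordinator asked to set a hypothetical `RF:AMP` to 1.4 with limits (0.0, 1.0) writes 1.0, and a later health sweep reports and clamps any PV that has drifted out of range.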

Agentic AI, as applied to accelerator control, goes beyond simple automated responses to include autonomous decision-making. AI agents are designed to perceive their environment, define goals, and select actions that achieve those goals without explicit, pre-programmed instructions for every scenario. They use reinforcement learning and related techniques to adapt to changing operating conditions, optimize performance metrics, and address potential issues proactively. Crucially, agentic systems support complex, multi-step decision-making, so accelerator subsystems can be optimized in response to dynamic beam characteristics, equipment status, and experimental requirements, a level of adaptability beyond that of traditional control systems.
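
To make the perceive, decide, act, and learn loop concrete, here is a minimal tabular Q-learning sketch. Q-learning is one standard reinforcement-learning method, chosen here for brevity; the source does not specify an algorithm. The environment is a hypothetical toy: a single knob with seven positions whose reward is minus the distance to an optimum the agent does not know in advance.

```python
import random

N_STATES = 7          # hypothetical discretized knob positions 0..6
OPTIMUM = 3           # hypothetical best setting, unknown to the agent
ACTIONS = (-1, 0, 1)  # step down, hold, step up

def step(state: int, action: int) -> tuple[int, float]:
    """Toy environment: move the knob; reward is minus the distance to the optimum."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    return nxt, -abs(nxt - OPTIMUM)

def train_q_agent(episodes: int = 500, steps: int = 10, alpha: float = 0.5,
                  gamma: float = 0.9, epsilon: float = 0.2,
                  seed: int = 0) -> dict[tuple[int, int], float]:
    """Tabular Q-learning: perceive the state, act, and learn from the reward."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = rng.randrange(N_STATES)
        for _ in range(steps):
            # Epsilon-greedy choice: mostly exploit, occasionally explore
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            nxt, reward = step(state, action)
            best_next = max(q[(nxt, a)] for a in ACTIONS)
            # Temporal-difference update toward reward + discounted future value
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = nxt
    return q

def greedy_policy(q: dict[tuple[int, int], float]) -> list[int]:
    """Best learned action for each knob position."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES)]
```

With the default seed, the learned greedy policy should step up from positions 0 to 2, hold at 3, and step down from 4 to 6: a small instance of multi-step decision-making, since reaching the optimum from an edge takes several consecutive actions.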

Multimodal data fusion integrates data streams from diverse sources (beam position monitors, radio-frequency systems, cryogenic plants, power supplies, and vacuum gauges) into a holistic representation of the accelerator’s operational state. The process involves synchronization, calibration, and statistical methods such as Kalman filtering and Bayesian networks to resolve inconsistencies and estimate system parameters more accurately. Before fusion, data are usually pre-processed to reconcile differing sampling rates, resolutions, and formats. The fused dataset gives the AI control system a more complete and reliable basis for decisions than any individual source, supporting proactive adjustments and optimized performance even in the presence of sensor noise or failures.
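
The fusion step can be illustrated with a scalar Kalman filter, assuming several sensors observe the same quantity (for example, a beam position reported by two monitors) with known, calibrated measurement variances and a simple random-walk process model. Missing readings, marked `None`, stand in for slower sampling rates or sensor dropouts. This is a minimal sketch under those assumptions, not the fusion pipeline of any particular facility.

```python
from typing import Optional, Sequence

def kalman_fuse(samples: Sequence[Sequence[Optional[float]]],
                noise_var: Sequence[float],
                process_var: float = 1e-4,
                x0: float = 0.0, p0: float = 1e6) -> list[float]:
    """Fuse several noisy sensors observing one scalar state.

    samples[t][i] is sensor i's reading at step t, or None if it did not report.
    noise_var[i] is that sensor's measurement variance (assumed known).
    """
    x, p = x0, p0  # state estimate and its variance (large p0 = weak prior)
    estimates = []
    for readings in samples:
        # Predict: random-walk model, so uncertainty grows by process_var
        p += process_var
        # Update sequentially with every sensor that reported at this step
        for z, r in zip(readings, noise_var):
            if z is None:
                continue
            k = p / (p + r)      # Kalman gain: trust the reading more when r is small
            x += k * (z - x)
            p *= (1 - k)         # posterior variance shrinks after each update
        estimates.append(x)
    return estimates
```

With one noisy sensor (variance 1.0) reading 1.0 and one precise sensor (variance 0.01) reading 2.0, a single step returns roughly 1.99, essentially the inverse-variance weighted average 201/101.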

Predicting the Unseen: Reliability Through Anomaly Detection

Anomaly detection systems use machine learning to build baseline models of expected equipment behavior from historical and real-time data. These models, ranging from statistical methods to neural networks, are trained to recognize patterns of normal operation. Deviations from those patterns (anomalies) are flagged as potential indicators of emerging failures. Anomaly severity is often quantified with metrics such as the number of standard deviations from the baseline or a probability score, which lets maintenance interventions be prioritized. Early detection allows proactive investigation and mitigation, preventing unscheduled downtime and reducing repair costs. Inputs typically include sensor readings, performance metrics, and operational parameters, and the models continuously adapt to changing conditions to refine their detection capabilities.
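
A minimal sketch of such a baseline model, assuming a single scalar signal with roughly Gaussian noise: the detector learns a mean and variance from historical data, scores each new reading in standard deviations (a z-score), and slowly adapts its baseline with an exponentially weighted update. The class name, smoothing factor, and threshold are illustrative choices, not values from the source.

```python
import math
import statistics
from typing import Optional, Sequence

class EwmaAnomalyDetector:
    """Baseline model of normal behavior for one scalar signal."""

    def __init__(self, history: Sequence[float], alpha: float = 0.05,
                 threshold: float = 4.0):
        # Learn the initial baseline from historical, known-good data
        self.mean = statistics.fmean(history)
        self.var = statistics.pvariance(history, mu=self.mean)
        self.alpha = alpha          # adaptation rate of the baseline
        self.threshold = threshold  # flag readings beyond this many standard deviations

    def update(self, value: float) -> Optional[float]:
        """Return the z-score if the reading is anomalous, else None."""
        std = math.sqrt(max(self.var, 1e-12))  # guard against a zero-variance history
        z = (value - self.mean) / std
        if abs(z) > self.threshold:
            return z  # flagged: do not fold the anomaly into the baseline
        # Exponentially weighted update of mean and variance for normal readings
        diff = value - self.mean
        self.mean += self.alpha * diff
        self.var = (1 - self.alpha) * (self.var + self.alpha * diff * diff)
        return None
```

Skipping the baseline update for flagged readings is a design choice: it keeps a persistent fault from slowly being learned as normal, at the cost of needing a separate path to accept genuine changes of operating point.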

Predictive Maintenance utilizes data gathered from anomaly detection systems, combined with historical failure data and equipment specifications, to forecast potential failures. This analysis employs machine learning algorithms to estimate remaining useful life (RUL) and predict the probability of failure for specific components. By scheduling maintenance interventions based on these predictions, rather than fixed intervals, organizations can proactively address issues before they escalate into critical failures. This approach reduces unplanned downtime, optimizes maintenance resource allocation, and lowers overall maintenance costs by minimizing unnecessary servicing and extending equipment lifespan. The system generates work orders automatically when predicted failure thresholds are met, ensuring timely repairs or replacements.
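
The remaining-useful-life estimate can be sketched with the simplest possible degradation model: a straight line fitted by least squares to a health indicator and extrapolated to a failure level. The source does not say which model is used; linear extrapolation is swapped in here for clarity, and the `WorkOrder` record, the health indicator, and the lead time are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

@dataclass
class WorkOrder:
    """Hypothetical work-order record; a facility would use its own maintenance system."""
    component: str
    rul_hours: float
    reason: str

def estimate_rul(times: Sequence[float], health: Sequence[float],
                 failure_level: float) -> Optional[float]:
    """Fit health = a + b*t by least squares and extrapolate to failure_level.

    Assumes a health indicator that decreases toward failure; returns the hours
    remaining after the last sample, or None if there is no downward trend.
    """
    n = len(times)
    t_mean = sum(times) / n
    h_mean = sum(health) / n
    sxx = sum((t - t_mean) ** 2 for t in times)
    sxy = sum((t - t_mean) * (h - h_mean) for t, h in zip(times, health))
    slope = sxy / sxx
    if slope >= 0:
        return None  # not degrading: this model predicts no failure
    intercept = h_mean - slope * t_mean
    t_fail = (failure_level - intercept) / slope
    return max(t_fail - times[-1], 0.0)

def maybe_work_order(component: str, times: Sequence[float], health: Sequence[float],
                     failure_level: float, lead_time_hours: float) -> Optional[WorkOrder]:
    """Raise a work order when the predicted failure falls inside the lead time."""
    rul = estimate_rul(times, health, failure_level)
    if rul is not None and rul <= lead_time_hours:
        return WorkOrder(component, rul, f"health projected to reach {failure_level}")
    return None
```

For example, a health indicator falling from 1.0 to 0.7 over 30 hours at a constant rate, with failure at 0.5, yields an RUL of about 20 hours, so a 24-hour lead time triggers a work order while a 10-hour lead time does not.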

Bayesian Optimization is employed to refine predictive models used in AI control systems by efficiently searching the hyperparameter space. Unlike grid or random search, Bayesian Optimization utilizes a probabilistic surrogate model, typically a Gaussian Process, to predict the performance of different hyperparameter configurations. This allows the algorithm to intelligently balance exploration of new configurations with exploitation of those already known to perform well, minimizing the number of evaluations required to achieve optimal model performance. The process iteratively updates the surrogate model with observed results, enabling rapid adaptation to changing conditions and optimization of model accuracy for predictive maintenance tasks. This approach is particularly valuable when each model evaluation is computationally expensive or time-consuming, as it reduces the overall optimization cost.
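
The loop below is a compact Bayesian-optimization sketch in Python with NumPy: a Gaussian-process surrogate with a squared-exponential kernel and an upper-confidence-bound (UCB) acquisition, which is one common way to trade off exploration against exploitation (expected improvement is another). The objective is a hypothetical stand-in for an expensive evaluation, such as a predictive model's validation score as a function of one normalized hyperparameter; the kernel length scale, `kappa`, and candidate grid are illustrative assumptions.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential covariance between two 1-D point sets (unit prior variance)."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)

def gp_posterior(x_train, y_train, x_query, jitter=1e-6):
    """Gaussian-process posterior mean and standard deviation at x_query."""
    k = rbf_kernel(x_train, x_train) + jitter * np.eye(len(x_train))
    k_s = rbf_kernel(x_train, x_query)
    chol = np.linalg.cholesky(k)                      # K = L L^T
    weights = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))  # K^-1 y
    mean = k_s.T @ weights
    v = np.linalg.solve(chol, k_s)
    var = np.clip(1.0 - np.sum(v ** 2, axis=0), 1e-12, None)
    return mean, np.sqrt(var)

def bayes_opt(objective, bounds=(0.0, 1.0), n_init=3, n_iter=12, kappa=2.0, seed=0):
    """Maximize an expensive 1-D objective with a GP surrogate and UCB acquisition."""
    rng = np.random.default_rng(seed)
    grid = np.linspace(bounds[0], bounds[1], 201)     # candidate configurations
    x = rng.uniform(bounds[0], bounds[1], size=n_init)
    y = np.array([objective(v) for v in x])
    for _ in range(n_iter):
        # Standardize observations so the unit prior variance is a sensible scale
        scale = y.std() if y.std() > 0 else 1.0
        mean, std = gp_posterior(x, (y - y.mean()) / scale, grid)
        ucb = mean + kappa * std          # high mean = exploit, high std = explore
        x_next = grid[np.argmax(ucb)]
        x = np.append(x, x_next)
        y = np.append(y, objective(x_next))  # one expensive evaluation per iteration
    best = int(np.argmax(y))
    return float(x[best]), float(y[best])
```

For instance, `bayes_opt(lambda v: -(v - 0.3) ** 2)` should return a point near 0.3 after 15 evaluations in total. The surrogate is refit after every evaluation, which is what keeps the number of expensive calls small.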

The Autonomous Accelerator: A Future Forged in Intelligence

The proposed Autonomous Accelerator would mark a paradigm shift in particle physics and materials science, promising performance gains previously considered unattainable. Traditional accelerators rely on extensive human control and optimization, a process that is both time-consuming and limited by the expertise available. An autonomous accelerator would instead use advanced algorithms and machine learning to configure and refine its own operating parameters, with the aim of improving beam intensity, stability, and overall efficiency. By adjusting thousands of variables in real time, it could minimize downtime, reduce energy consumption, and open new avenues for discovery across a range of scientific disciplines. The resulting gains in precision and throughput could accelerate research in areas ranging from high-energy physics to medical isotope production.

The development of the Autonomous Accelerator is increasingly reliant on Artificial Intelligence, specifically through a process termed AI Co-Design. This innovative approach leverages the capabilities of Large Language Models to not only refine the physical architecture of the accelerator – optimizing component placement, power consumption, and data flow – but also to concurrently improve the scientific applications it is intended to serve. Rather than designing hardware and then adapting experiments to fit, the system allows for a synergistic evolution, where the accelerator’s design is informed by the computational demands of the science, and the science is tailored to exploit the unique capabilities of the evolving hardware. This feedback loop, driven by AI, promises to dramatically accelerate discovery by streamlining the traditionally separate and iterative processes of accelerator physics and experimental design, ultimately leading to more efficient and powerful scientific instruments.

The pursuit of increasingly complex particle accelerators has driven innovation in modular design, improving both operational reliability and long-term maintainability. Rather than monolithic constructions, modern accelerators are conceived as assemblies of standardized, interoperable modules (power supplies, radio-frequency cavities, magnets, and diagnostic components), so failing units can be replaced without a complete system shutdown. This approach minimizes downtime and maintenance costs, and it also simplifies future upgrades: newer, higher-performance modules can be integrated into existing infrastructure, extending the accelerator’s lifespan and adapting it to evolving scientific demands. Such flexibility is critical for facilities that aim to remain at the forefront of research for decades, enabling phased technological advancement and preventing obsolescence.

The pursuit of a fully autonomous particle accelerator isn’t about achieving perfect control, but rather, about skillfully managed unpredictability. The system doesn’t find optimal settings; it persuades the chaos toward a desired outcome. As Albert Einstein observed, “The most incomprehensible thing about the world is that it is comprehensible.” This rings true when considering the complexity of these machines. The digital twin, far from being a perfect replica, is simply a useful fiction, a spell cast to coax the hardware into cooperating. The AI doesn’t eliminate risk; it redistributes it, shifting from known unknowns to elegantly managed surprises. The humans aren’t removed, of course; they merely become high-level strategists, offering guidance to the chaos engine.

The Static in the Signal

The proposition of an AI-native accelerator isn’t a solution, precisely. It’s a beautifully complex renegotiation of the terms of failure. Current designs demand human intervention when the predictable breaks – and everything, given sufficient runtime, becomes predictable only in its unpredictability. Shifting control doesn’t eliminate the chaos; it delegates the bewilderment. The real challenge lies not in building an agent that controls the machine, but one that convincingly interprets its malfunctions. A system that doesn’t just react to errors, but curates them, finds the signal in the static.

Digital twins, as currently conceived, are optimistic fictions. They assume a completeness of data that is, and will likely remain, a comforting illusion. The accelerator will always know more than its shadow self. Future work must focus on embracing this asymmetry, developing AI capable of reasoning with incomplete information, of constructing plausible narratives from the gaps. The goal isn’t fidelity, but believability – a convincing performance of understanding.

Ultimately, the pursuit of full autonomy isn’t about replacing humans, but about offloading the burden of the mundane, freeing them to grapple with the truly inexplicable. When the machine begins to lie consistently, then one might begin to trust it. Because data isn’t truth; it’s a truce between a bug and Excel. And every spell works until it meets production.

Original article: https://arxiv.org/pdf/2602.17536.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-20 12:12