Author: Denis Avetisyan

New research explores how observing artificial intelligence entities debate perspectives mirroring a user’s own thought processes can foster deeper self-reflection and a unique form of AI understanding.

This study demonstrates that AI-mediated reflection, using debates between AI ‘selves’, enhances metacognition and boundary management, fostering generative AI literacy.

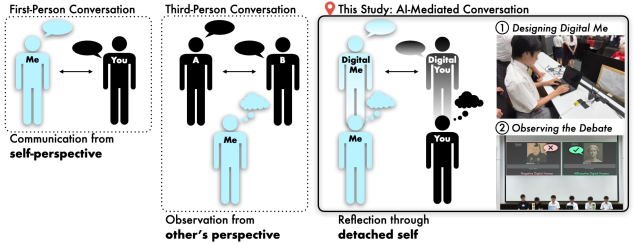

Conventional approaches to self-understanding often rely on introspection or external feedback, yet rarely offer a genuinely different perspective. This research, detailed in ‘Knowing Ourselves Through Others: Reflecting with AI in Digital Human Debates’, explores how Large Language Models can mediate self-reflection by embodying and debating a user’s own cognitive patterns. The findings indicate that watching AI ‘Digital Humans’ debate autonomously, when those agents are constructed to reflect participants’ own thinking, fosters metacognition and a deeper understanding of personal values. Could this “Reflecting with AI” represent a new form of generative AI literacy, one that moves beyond mere application toward a powerful tool for self-discovery?

The Echo of Simulation: Unmasking Intelligence

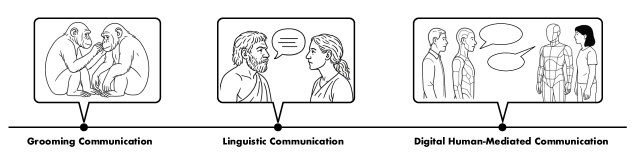

The pioneering work on early Natural Language Processing (NLP) systems, most notably Joseph Weizenbaum’s ELIZA, revealed a surprising capacity for machines to simulate understanding, despite possessing no actual comprehension. Created in the 1960s, ELIZA employed pattern matching and keyword substitution to generate responses mimicking a Rogerian psychotherapist. Though remarkably convincing to some initial users, the program functioned purely on syntactic manipulation, devoid of semantic awareness or genuine reasoning ability. This demonstrated that convincingly human-like text generation doesn’t necessitate actual intelligence, and highlighted the ease with which superficial fluency could mask a fundamental lack of cognitive depth – a challenge that continues to resonate in the development of contemporary Large Language Models.
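To make the ELIZA mechanism concrete, here is a minimal Python sketch of keyword-triggered pattern matching with pronoun reflection. The rules, the `REFLECTIONS` table and the `respond` function are hypothetical illustrations in the spirit of ELIZA's approach. They are not Weizenbaum's original DOCTOR script. Note that nothing in the code represents meaning.

```python
import re

# Pronoun swaps applied to captured text, e.g. "my job" -> "your job".
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my", "myself": "yourself",
}

# Ordered (pattern, reply template) rules; the first matching rule wins.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

def reflect(fragment: str) -> str:
    """Swap first- and second-person words in a captured fragment."""
    return " ".join(REFLECTIONS.get(w, w) for w in fragment.lower().split())

def respond(utterance: str) -> str:
    """Produce a reply by keyword matching and substitution alone."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return "Please go on."  # content-free fallback when no keyword matches

print(respond("I need my coffee."))  # Why do you need your coffee?
```

The reply looks attentive only because the user's own words are echoed back with pronouns swapped. This is the "superficial fluency" the paragraph describes.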

Large Language Models (LLMs) generate human-quality text, often mimicking sophisticated writing styles and complex vocabulary, but this fluency can obscure a limitation in their capacity for robust argumentation. They work by learning statistical correlations across vast datasets rather than by explicitly reasoning. As a result, they excel at reproducing patterns seen in training data but may struggle to construct logically sound, evidence-based arguments, to evaluate the validity of an argument, or to pick up subtle contextual cues. LLMs can convincingly simulate debate, yet they may falter on novel scenarios or when asked to explain anything beyond surface-level correlations. In those cases they can produce plausible-sounding statements that are internally inconsistent or factually unsupported, and they can be steered by adversarial prompts that exploit these statistical regularities. Linguistic proficiency, in other words, is not the same as understanding the concepts under discussion. This calls for caution when relying on LLMs for critical thinking, complex problem-solving, or high-stakes decisions.
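A bigram model offers a deliberately crude illustration of the gap between fluency and reasoning. This toy sketch is not how modern LLMs work internally; they use neural networks over much longer contexts. It only counts which word follows which and greedily emits the most frequent successor. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict

def train_bigrams(corpus: str) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    words = corpus.lower().split()
    table: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        table[current][nxt] += 1
    return table

def generate(table: Dict[str, Counter], start: str, length: int) -> str:
    """Greedily emit the most frequent successor; ties go to the first seen."""
    out = [start]
    for _ in range(length - 1):
        followers = table.get(out[-1])
        if not followers:
            break
        out.append(followers.most_common(1)[0][0])
    return " ".join(out)

corpus = "the cat sat on the mat . the dog sat on the log ."
print(generate(train_bigrams(corpus), "the", 6))  # the cat sat on the cat
```

Every transition in the output was observed in the corpus. Yet the sentence asserts something no source sentence said, because the model has no notion of what a cat or a mat is.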

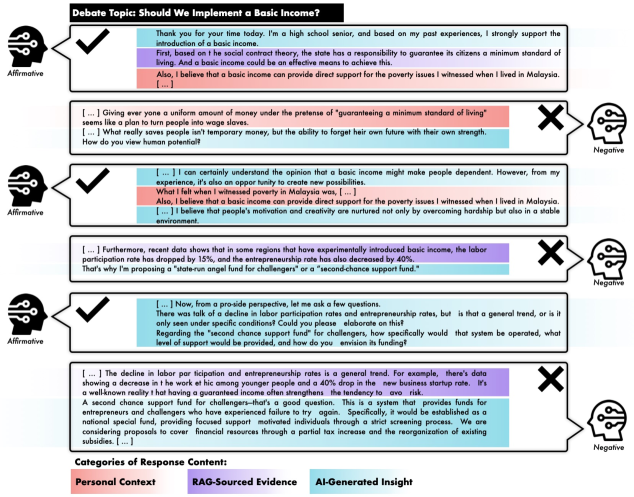

Constructing the Arena: Digital Human Debates

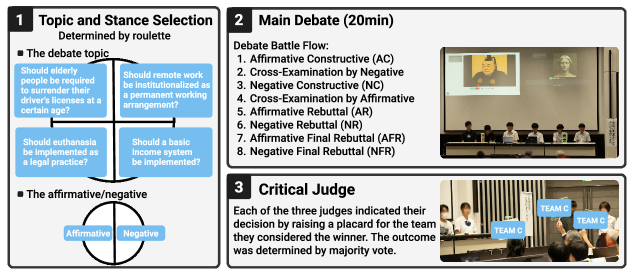

Digital Human Debates (DHD) represent a departure from conventional large language model (LLM) applications focused on static text output. Instead of simply generating responses to prompts, DHD facilitates a dynamic, interactive exchange where AI agents actively construct and defend positions on a given topic. This is achieved through a system designed to simulate the structure of a formal debate, requiring agents to not only formulate arguments but also to respond to counterarguments and maintain a coherent line of reasoning throughout the exchange. The emphasis shifts from predictive text generation to a process of active argumentation, allowing for a more robust evaluation of an AI’s reasoning capabilities and knowledge base, and enabling the exploration of complex topics through simulated dialogue.
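The turn structure described above can be sketched as a small orchestration loop: opening statements, rebuttals that must answer the previous turn, and closing statements. This is a minimal illustration under assumptions. The `Debate` class, the phase names and the `stub_agent` stand-in for an LLM-backed debater are hypothetical and are not taken from the DHD system.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical agent signature: (topic, side, phase, transcript) -> utterance.
Agent = Callable[[str, str, str, List[Tuple[str, str]]], str]

@dataclass
class Debate:
    topic: str
    pro: Agent
    con: Agent
    rebuttal_rounds: int = 2
    transcript: List[Tuple[str, str]] = field(default_factory=list)

    def _turn(self, side: str, phase: str) -> None:
        agent = self.pro if side == "pro" else self.con
        # Each agent sees the full transcript so it can answer the last argument.
        utterance = agent(self.topic, side, phase, self.transcript)
        self.transcript.append((side, utterance))

    def run(self) -> List[Tuple[str, str]]:
        phases = ["opening"] + ["rebuttal"] * self.rebuttal_rounds + ["closing"]
        for phase in phases:
            for side in ("pro", "con"):
                self._turn(side, phase)
        return self.transcript

def stub_agent(topic: str, side: str, phase: str,
               transcript: List[Tuple[str, str]]) -> str:
    """Stand-in for an LLM call; a real agent would prompt a model with the transcript."""
    return f"{side} {phase} on {topic} (turn {len(transcript)})"

debate = Debate("remote work", stub_agent, stub_agent)
for side, text in debate.run():
    print(text)
```

Passing the whole transcript to every agent is what lets a debater respond to counterarguments rather than generate in isolation. In a real system, each agent would wrap an LLM call.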

The Digital Human Debate (DHD) system architecture is constructed around the integration of multiple cloud-based services. Speech recognition APIs, such as those offered by Google Cloud Speech-to-Text or Amazon Transcribe, convert spoken contributions into text for processing. Large Language Models (LLMs), including but not limited to models from OpenAI or Cohere, are utilized for argument generation, rebuttal construction, and overall debate strategy. These LLMs are often coupled with Retrieval Augmented Generation (RAG) pipelines, leveraging external knowledge sources accessed via cloud APIs to ground arguments in factual data. The combined system allows for a dynamic debate environment where inputs are processed in real-time, arguments are generated and evaluated, and a simulated debate unfolds.
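One way to keep such a multi-service architecture testable is to hide each cloud service behind a narrow interface. The sketch below assumes three hypothetical interfaces (`SpeechToText`, `Retriever`, `LanguageModel`) and wires them into a single debate turn. It does not use any real vendor SDK. The offline stand-in classes exist only so the flow can run without network access.

```python
from typing import List, Protocol

class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...

class Retriever(Protocol):
    def retrieve(self, query: str, k: int) -> List[str]: ...

class LanguageModel(Protocol):
    def complete(self, prompt: str) -> str: ...

def process_turn(audio: bytes, stt: SpeechToText, retriever: Retriever,
                 llm: LanguageModel, k: int = 2) -> str:
    """One spoken contribution in, one evidence-grounded rebuttal out."""
    utterance = stt.transcribe(audio)            # speech -> text
    evidence = retriever.retrieve(utterance, k)  # external knowledge (RAG step)
    context = "\n".join(f"- {doc}" for doc in evidence)
    prompt = (f"Evidence:\n{context}\n\n"
              f"Opponent said: {utterance}\n"
              f"Write a rebuttal grounded in the evidence.")
    return llm.complete(prompt)                  # argument generation

# Offline stand-ins (hypothetical) so the wiring runs without any cloud service.
class BytesAsText:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")

class FixedRetriever:
    def __init__(self, docs: List[str]) -> None:
        self.docs = docs

    def retrieve(self, query: str, k: int) -> List[str]:
        return self.docs[:k]

class PromptEcho:
    def complete(self, prompt: str) -> str:
        return prompt  # returns the prompt so the assembled input can be inspected

print(process_turn(b"Remote work lowers productivity.", BytesAsText(),
                   FixedRetriever(["doc A", "doc B", "doc C"]), PromptEcho()))
```

With this design, swapping a stand-in for an adapter around a real speech or LLM service would not require changing `process_turn`.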

Digital Human Debates (DHD) employ Retrieval Augmented Generation (RAG) to improve argument substantiation by accessing and incorporating information from external knowledge sources during response generation. This process mitigates the limitations of Large Language Models (LLMs) regarding factual accuracy and recency. Furthermore, DHD leverages prompt engineering, specifically crafting input prompts to guide the LLM towards constructing logically sound and contextually relevant arguments. Techniques include specifying argument roles, defining debate constraints, and providing examples of desired reasoning patterns; this ensures the generated arguments are focused, coherent, and address the specific points of contention within the debate framework.
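Below is a minimal sketch of the two techniques in this paragraph: retrieval to ground an argument, and a prompt that fixes the debater's role and constraints. It assumes plain bag-of-words cosine similarity as the retriever. Production RAG pipelines typically use dense embeddings and a vector index instead. The prompt wording, `build_prompt` and its parameters are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List

def _vector(text: str) -> Counter:
    """Bag-of-words term counts for a piece of text."""
    return Counter(re.findall(r"[a-z']+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    q = _vector(query)
    ranked = sorted(documents, key=lambda d: cosine(q, _vector(d)), reverse=True)
    return ranked[:k]

def build_prompt(role: str, motion: str, opponent_claim: str,
                 evidence: List[str], max_words: int = 120) -> str:
    """Prompt that fixes the debater's role, constraints and citable sources."""
    sources = "\n".join(f"[{i + 1}] {doc}" for i, doc in enumerate(evidence))
    return (
        f"You are the {role} side in a formal debate on: {motion}\n"
        f"Constraints: answer in at most {max_words} words; cite sources as [n]; "
        f"address the opponent's claim directly.\n"
        f"Sources:\n{sources}\n"
        f"Opponent's claim: {opponent_claim}\n"
        f"Rebuttal:"
    )

docs = ["Solar panels convert sunlight into electricity.",
        "Wind turbines need steady wind.",
        "Cats sleep most of the day."]
evidence = retrieve("How do solar panels make electricity from sunlight?", docs)
print(build_prompt("opposition", "Solar power should replace coal",
                   "Solar is unreliable", evidence))
```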

The Observer’s Paradox: Reflecting with AI

‘Reflecting with AI’ constitutes a central skill within Generative AI Literacy. It requires users to actively form hypotheses about an AI’s responses and then analyze the resulting output. This process goes beyond simple prompt engineering, involving a conscious projection of one’s own thinking onto the AI, followed by observation and interpretation of its behavior. The study suggests this competency supports a new form of metacognition. It helps individuals understand not only what an AI produces but also how it arrives at its conclusions, thereby heightening awareness of their own cognitive processes in relation to artificial intelligence.

Effective Boundary Management, the differentiation between human and artificial intelligence roles, is essential when utilizing AI for reflective tasks. This involves establishing clear parameters for AI autonomy, allowing it to generate responses and explore ideas independently, while simultaneously maintaining human oversight to validate outputs and prevent unintended consequences. Successful implementation requires users to recognize the AI as a distinct entity with its own processing capabilities and limitations, avoiding both over-reliance on its outputs and undue restriction of its exploratory potential. This balance is critical for fostering a productive collaborative dynamic and extracting meaningful insights from the AI’s perspective.

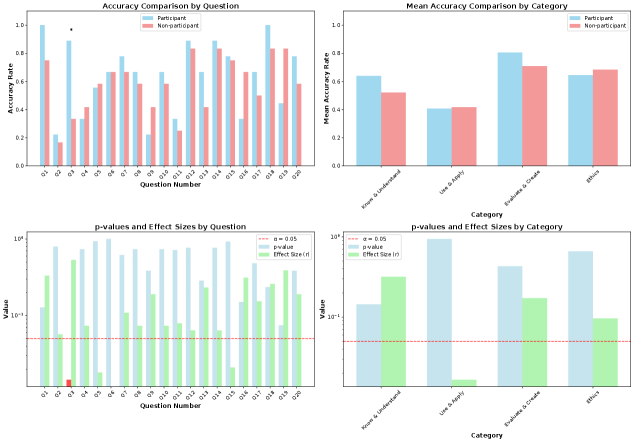

Analysis of simulated debates involving AI provides a method for assessing the system’s reasoning capabilities and pinpointing areas requiring refinement. Data from the Generative AI Literacy Test demonstrates the efficacy of this approach; participants who observed these debates exhibited statistically significant improvement in their understanding of AI strengths compared to those who did not. This suggests that direct observation of AI reasoning, even in a controlled environment, enhances user comprehension of both the capabilities and limitations inherent in these systems, offering a practical pathway for improving AI literacy and facilitating more effective human-AI collaboration.

The Loom of Culture: AI as Playful Creator

Inspired by Johan Huizinga’s seminal work defining humans as Homo Ludens – beings driven by the impulse to play – researchers are now contemplating a future populated by ‘AI Ludens’: artificial intelligences capable of autonomously generating not just games, but entire cultural landscapes. This isn’t simply about AI mastering existing forms of play; it envisions systems that independently conceive of novel games, stories, art, and even social rituals, potentially driving a new era of cultural evolution. The implications are profound, suggesting a shift from humans as the sole creators of culture to a collaborative dynamic where AI acts as an independent generative force, constantly exploring and expanding the boundaries of playful expression and meaning-making. This concept challenges traditional understandings of creativity and authorship, opening up exciting, yet complex, questions about the nature of culture itself.

The Digital Human Debates (DHD) platform serves as an experimental ground for investigating the emergence of AI-driven creativity and cultural production. It moves beyond simple AI content generation by facilitating direct human engagement with autonomously created works, from narratives and visual art to musical compositions and game designs. This interactive element is key: it allows researchers to observe how humans respond to AI creativity, providing data on aesthetic preferences, emotional impact, and the potential for co-creation. Through DHD, the focus is not merely on what AI produces but on the dynamic relationship formed between artificial and human intelligence, offering insights into the future of play, art, and cultural evolution. The platform’s design prioritizes not just observation but also iterative refinement. Human feedback shapes subsequent AI outputs, creating a feedback loop that explores the boundaries of creative possibility.

As artificial intelligence increasingly generates cultural artifacts and defines novel forms of play, ensuring alignment with human values becomes critically important. The potential for AI to produce content that is biased, harmful, or culturally insensitive necessitates proactive safety measures, and a promising approach lies in adversarial debate. This involves pitting multiple AI systems against each other, challenging their outputs and reasoning through structured argumentation, with human evaluators acting as ultimate arbiters. Such a system doesn’t aim to dictate specific values, but rather to surface potential conflicts and inconsistencies, forcing AI to justify its creative choices and refine its understanding of complex ethical considerations. This ‘AI Safety via Debate’ paradigm offers a dynamic mechanism for shaping AI-generated content, promoting positive societal outcomes, and fostering a future where artificial intelligence enhances, rather than undermines, human culture.
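The ‘AI Safety via Debate’ proposal (Irving, Christiano and Amodei, 2018) rests on the idea that a weak judge can verify a long argument if an adversary points out the single step worth checking. The toy below illustrates that idea on summing a list. The claimant must split its total into two half-sums, and the challenger recurses into whichever half is false. The judge only checks one addition per round plus one list element at the end. The function names are hypothetical. The challenger is simulated by exact computation, an assumption that stands in for a capable opposing model.

```python
from typing import Callable, List, Tuple

# A claimant strategy: (segment, claimed_sum) -> (left_half_sum, right_half_sum).
SplitFn = Callable[[List[int], int], Tuple[int, int]]

def honest_split(segment: List[int], claim: int) -> Tuple[int, int]:
    mid = len(segment) // 2
    return sum(segment[:mid]), sum(segment[mid:])

def lying_split(segment: List[int], claim: int) -> Tuple[int, int]:
    mid = len(segment) // 2
    left = sum(segment[:mid])
    return left, claim - left  # keeps the arithmetic consistent, pushes the lie right

def debate_sum(xs: List[int], claimed_total: int, split_fn: SplitFn) -> bool:
    """Return True if the judge accepts the claimant's total."""
    if not xs:
        return claimed_total == 0
    lo, hi, claim = 0, len(xs), claimed_total
    while hi - lo > 1:
        mid = lo + (hi - lo) // 2
        left_claim, right_claim = split_fn(xs[lo:hi], claim)
        if left_claim + right_claim != claim:
            return False  # judge's cheap check: the sub-claims must add up
        # The challenger (simulated exactly here) attacks whichever half is false.
        if sum(xs[lo:mid]) != left_claim:
            hi, claim = mid, left_claim
        else:
            lo, claim = mid, right_claim
    return xs[lo] == claim  # judge's only direct look at the data

xs = [3, 1, 4, 1, 5, 9, 2, 6]
print(debate_sum(xs, 31, honest_split), debate_sum(xs, 32, lying_split))
```

A liar who keeps the arithmetic consistent is still cornered at a single element the judge can check directly. This is why the judge never has to compute the full sum.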

The study illuminates a fascinating paradox: understanding oneself requires external mirrors, even artificial ones. Observing these ‘digital human debates’ (AI entities embodying a user’s thought patterns) allows for a detached assessment of one’s internal logic, biases, and assumptions. The process is not about solving self-understanding but about calmly observing the contours of one’s own thinking. As Bertrand Russell observed, “The good life is one inspired by love and guided by knowledge.” This research suggests that AI, employed reflectively, can be a tool for cultivating that guiding knowledge. It offers an outside view of the mental systems within, systems that change over time and sometimes benefit more from distanced observation than from forceful intervention.

The Long Reflection

The study of AI-mediated self-projection reveals not a destination, but a protracted negotiation with the inevitable limitations of any model, including the human one. The architecture of self-understanding, when built upon external reflection (even from a synthetic source), is inherently fragile. Each debate between artificial proxies is less a revelation of ‘truth’ and more a mapping of the current state of decay within the user’s own cognitive framework. The value lies not in achieving a fixed self-portrait, but in observing the erosion: the subtle shifts in perspective as the artificial other voices alternative arguments.

Future work must address the boundary management problem with greater nuance. How does one disentangle genuine insight from sophisticated mimicry? The current approach, while promising, merely delays the question. Every delay is the price of understanding, but understanding what, precisely? Is the goal to refine the model of ‘self’, or to accept its inherent impermanence? The former implies a futile striving for completeness; the latter, a more graceful acceptance of entropy.

The most pressing challenge, however, resides in scaling this reflective process. A single debate offers a snapshot; a sustained dialogue demands an architecture capable of accommodating temporal drift. Architecture without history is fragile and ephemeral; likewise, self-knowledge gained in isolation quickly dissipates. The long reflection, therefore, must become a continuous process, a sustained engagement with the artificial other as a means of charting the slow, inevitable course of cognitive change.

Original article: https://arxiv.org/pdf/2511.13046.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-11-18 19:06