Author: Denis Avetisyan

Researchers have developed an autonomous agent, LawThinker, designed to conduct deep legal research and reasoning within the complex and ever-changing landscape of judicial proceedings.

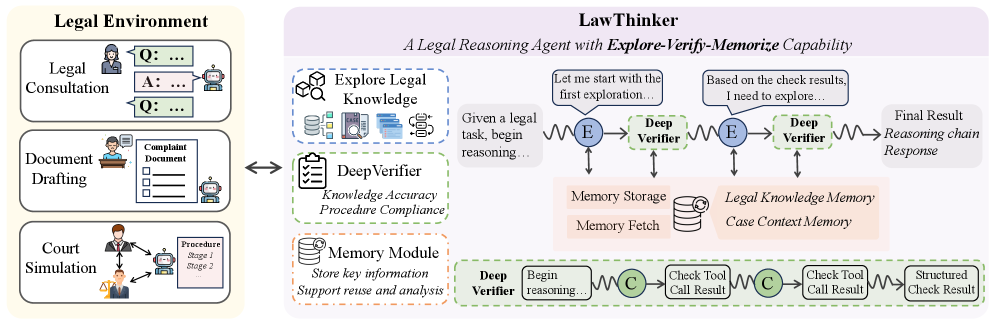

LawThinker employs an Explore-Verify-Memorize strategy with a DeepVerifier to ensure accurate, procedurally compliant legal reasoning using large language models and a specialized legal knowledge base.

Legal reasoning demands not only correct conclusions but also demonstrably sound procedural steps, a challenge for current automated systems, which tend to propagate errors through long chains of thought. To address this limitation, the paper “LawThinker: A Deep Research Legal Agent in Dynamic Environments” introduces an autonomous agent that follows an Explore-Verify-Memorize strategy enforced by a novel DeepVerifier module. This approach evaluates knowledge accuracy, factual relevance, and procedural compliance at every step, and the authors report significant performance gains (up to 24% on dynamic benchmarks) along with improved generalization across static legal datasets. Can this methodology pave the way for more transparent, reliable, and trustworthy AI-driven legal assistance?

The Inherent Fragility of Legal Systems

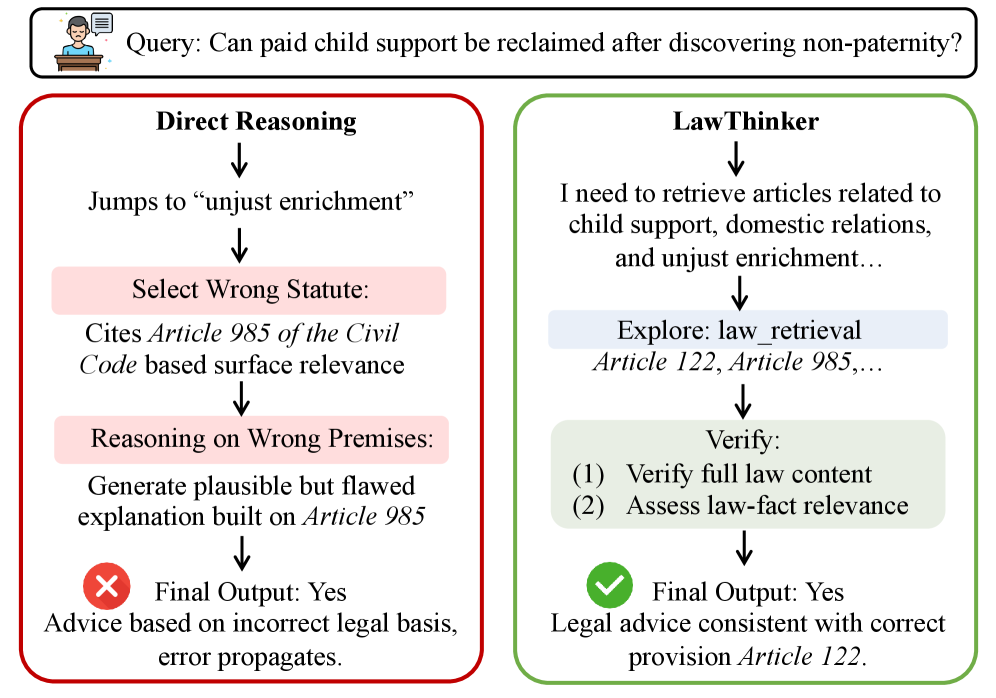

Even with the rapid development of Large Language Models, achieving dependable legal reasoning proves remarkably difficult due to the insidious nature of error propagation. These models, while adept at identifying patterns in vast datasets, can amplify minor inaccuracies present in initial data or during processing, leading to flawed conclusions. Unlike systems where a single error halts progress, legal reasoning demands a chain of inferences; thus, an initial mistake regarding a fact or the misinterpretation of a statute can cascade through subsequent steps, culminating in a significantly incorrect legal outcome. This is particularly problematic given the opacity of some models – the ‘black box’ nature makes identifying and correcting the source of these propagated errors incredibly challenging, hindering the reliable automation of legal tasks and demanding continued human oversight.

Legal reasoning is uniquely difficult for automated systems because it demands more than identifying relevant information; it requires integrating disparate elements. Traditional approaches to legal analysis often falter when confronted with the web of facts, statutory language, and procedural rules that defines a given case. A correct legal conclusion is not derived from any single component but from how these elements interact, which means discerning subtle relationships and anticipating exceptions. Because of this interdependence, even a minor error in interpreting one fact or statute can propagate through the reasoning process and produce an incorrect outcome, which exposes the limits of methods that struggle with holistic, contextual analysis.

Growing Robustness: An Iterative Approach

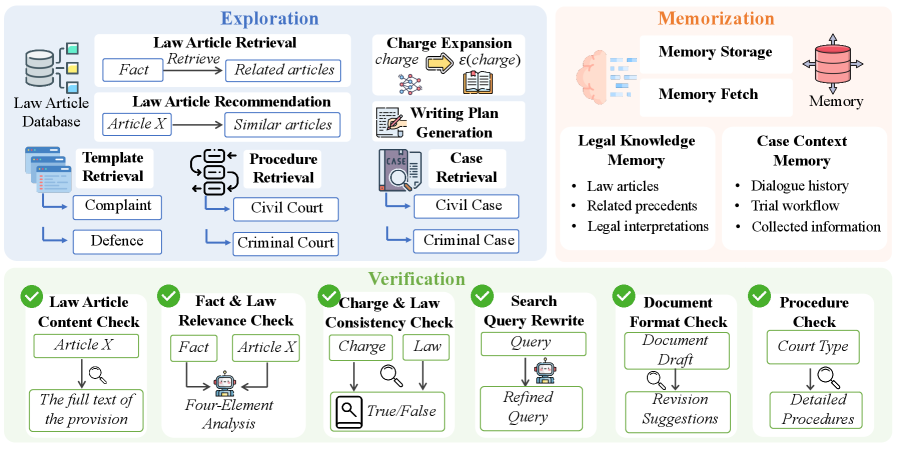

The Explore-Verify-Memorize strategy represents an iterative approach to building legal reasoning systems, prioritizing continual refinement through knowledge acquisition and validation. This paradigm begins with an ‘Explore’ phase, where the system retrieves potentially relevant legal information from external sources. Subsequently, a ‘Verify’ phase assesses the retrieved information for accuracy and relevance to the specific legal problem, often employing step-level checks and potentially human review. Finally, the ‘Memorize’ phase integrates validated knowledge into the system’s internal knowledge base, enhancing its capacity for future reasoning. This cycle repeats, allowing the system to continuously improve its performance and adapt to evolving legal precedents and information.
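The cycle described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the `Finding` and `Memory` types, the `explore_verify_memorize` function, the `max_rounds` limit, and the toy retrieval and verification functions are all hypothetical names introduced here.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Finding:
    """A single piece of retrieved legal information (hypothetical structure)."""
    source: str
    text: str


@dataclass
class Memory:
    """Stores only findings that passed verification."""
    verified: List[Finding] = field(default_factory=list)


def explore_verify_memorize(
    query: str,
    explore: Callable[[str, Memory], List[Finding]],
    verify: Callable[[Finding], bool],
    memory: Memory,
    max_rounds: int = 3,
) -> Memory:
    """Repeat the explore -> verify -> memorize cycle up to max_rounds times."""
    for _ in range(max_rounds):
        candidates = explore(query, memory)               # Explore: retrieve candidates
        accepted = [f for f in candidates if verify(f)]   # Verify: check each one
        new = [f for f in accepted if f not in memory.verified]
        if not new:                                       # nothing new learned: stop
            break
        memory.verified.extend(new)                       # Memorize: keep validated knowledge
    return memory


# Toy stand-ins for retrieval and verification (illustrative data only).
CORPUS = [
    Finding("statute", "A contract requires offer and acceptance."),
    Finding("blog", "Contracts are always void on Sundays."),
]


def toy_explore(query: str, memory: Memory) -> List[Finding]:
    return [f for f in CORPUS if "contract" in f.text.lower()]


def toy_verify(finding: Finding) -> bool:
    return finding.source == "statute"   # this toy trusts only statutory sources


result = explore_verify_memorize("contract formation", toy_explore, toy_verify, Memory())
print([f.source for f in result.verified])
```

Passing `explore` and `verify` in as functions keeps the loop independent of any particular retriever or verifier, which matches the modular separation the strategy calls for.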

A modular approach is essential for implementing the Explore-Verify-Memorize strategy; this involves separating the reasoning process into distinct components. Specifically, systems must integrate external legal knowledge retrieval – accessing databases, statutes, and case law – with step-level verification mechanisms. This verification component assesses the relevance and accuracy of retrieved information at each stage of reasoning, preventing the propagation of errors or irrelevant data. The modular design allows for independent updates to the knowledge base and verification protocols without disrupting the core reasoning engine, enhancing system maintainability and adaptability. Furthermore, this separation enables focused evaluation of individual modules, improving overall system reliability and trustworthiness.

Workflow-based methods in legal reasoning decompose complex problems into a series of discrete, sequential steps, each representing a specific legal task or analysis. This structured approach typically begins with fact identification and issue framing, progresses through legal research and rule application, and culminates in a reasoned conclusion or recommendation. By predefining these steps, workflow methods enable systematic evaluation at each stage, facilitating error detection and ensuring comprehensive consideration of relevant legal principles. These methods are often implemented using rule engines, decision trees, or other automated reasoning tools to standardize the problem-solving process and improve consistency in legal analysis.
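A workflow of this kind can be pictured as an ordered list of steps, each paired with a check that must pass before the next step runs. The sketch below is an assumption-laden illustration: the step names, the `run_workflow` helper, and the dictionary-based state are hypothetical and do not come from the paper or from any specific workflow system.

```python
from typing import Callable, Dict, List, Tuple

State = Dict[str, object]
# Each step is (name, action that updates the state, check on the updated state).
Step = Tuple[str, Callable[[State], State], Callable[[State], bool]]


def run_workflow(steps: List[Step], state: State) -> Tuple[State, List[str]]:
    """Run steps in order; stop at the first step whose check fails."""
    completed: List[str] = []
    for name, action, check in steps:
        state = action(dict(state))      # each step works on a copy of the state
        if not check(state):
            return state, completed      # report how far the workflow got
        completed.append(name)
    return state, completed


# Hypothetical steps mirroring: facts -> issue -> rule -> conclusion.
steps: List[Step] = [
    ("identify_facts",
     lambda s: {**s, "facts": ["goods delivered late"]},
     lambda s: bool(s.get("facts"))),
    ("frame_issue",
     lambda s: {**s, "issue": "breach of contract"},
     lambda s: "issue" in s),
    ("apply_rule",
     lambda s: {**s, "rule": None},            # simulate a failed rule lookup
     lambda s: s.get("rule") is not None),
    ("conclude",
     lambda s: {**s, "conclusion": "liable"},
     lambda s: "conclusion" in s),
]

final_state, completed = run_workflow(steps, {})
print(completed)
```

Because the simulated rule lookup fails, the run stops before `conclude`, so the error is caught at the step where it occurs instead of surfacing in the final answer.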

Deep Verification: Uncovering the Fault Lines

DeepVerifier functions as a critical component within the reasoning process by performing a detailed, step-by-step analysis. This assessment verifies the accuracy of knowledge utilized at each stage, confirms the relevance of facts and legal precedents applied, and ensures adherence to established procedural rules. Each reasoning step is subject to scrutiny, not simply the final conclusion, to identify potential errors in logic or the application of legal principles. This granular approach allows for the pinpointing of inaccuracies and facilitates a higher degree of confidence in the reliability and validity of the overall reasoning outcome.

DeepVerifier employs a suite of specialized Legal Tools to enable granular verification of reasoning steps. These tools include knowledge bases containing legal precedents, statutes, and regulations; fact-checking modules to confirm the accuracy of asserted facts; and compliance checkers designed to assess adherence to relevant legal procedures and rules. The integration of these tools allows DeepVerifier to independently validate each step of the reasoning process, identifying potential errors or inconsistencies and ultimately improving the reliability and defensibility of the overall output. This granular approach contrasts with holistic evaluations and pinpoints the specific basis for any verification failure.
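To make the three verification dimensions concrete, here is a toy sketch of a step-level checker. It is not DeepVerifier itself: the `ReasoningStep` fields, the in-memory `STATUTES`, `CASE_FACTS`, and `STAGE_ORDER` data, and the simple rules used for each check are assumptions chosen for illustration. In particular, the fact check only tests whether a fact appears in the case record, which is a crude stand-in for a real relevance judgment.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class ReasoningStep:
    cited_article: str      # statute identifier the step relies on
    quoted_text: str        # what the step claims the statute says
    used_facts: Set[str]    # case facts the step relies on
    stage: str              # procedural stage the step belongs to


# Hypothetical toy data; a real system would query legal tools and databases.
STATUTES: Dict[str, str] = {"Art. 1": "A contract requires offer and acceptance."}
CASE_FACTS: Set[str] = {"offer made", "acceptance sent"}
STAGE_ORDER: List[str] = ["filing", "evidence", "judgment"]


def verify_step(step: ReasoningStep, previous_stage: str) -> Dict[str, bool]:
    """Return one pass/fail flag per verification dimension."""
    return {
        # Knowledge accuracy: the quoted text matches the stored statute.
        "knowledge": STATUTES.get(step.cited_article) == step.quoted_text,
        # Fact check: every fact used appears in the case record.
        "facts": step.used_facts <= CASE_FACTS,
        # Procedural compliance: known stage, and stages never move backwards.
        "procedure": step.stage in STAGE_ORDER
        and STAGE_ORDER.index(step.stage) >= STAGE_ORDER.index(previous_stage),
    }


good = ReasoningStep("Art. 1", "A contract requires offer and acceptance.",
                     {"offer made"}, "evidence")
bad = ReasoningStep("Art. 1", "A contract requires a notary.",
                    {"payment made"}, "filing")

print(verify_step(good, previous_stage="filing"))
print(verify_step(bad, previous_stage="evidence"))
```

Returning a flag per dimension, not a single verdict, is what lets a caller see which basis failed for a given step.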

DeepVerifier’s effectiveness depends on the quality and currency of its legal knowledge base. This knowledge is not static; continuous validation against external sources – including updated statutes, recent case law, and regulatory changes – is essential to maintain accuracy. Discrepancies identified during this validation trigger updates to the internal knowledge base, keeping DeepVerifier’s reasoning aligned with prevailing legal standards. The system’s reliability therefore depends directly on how frequently and thoroughly these external validations and knowledge base refinements are carried out.
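The validate-then-update idea can be illustrated with a small synchronization routine. This is only a sketch under assumptions: the knowledge base is modeled as a plain dictionary from provision identifiers to text, `refresh_knowledge_base` is a hypothetical helper, and treating a provision that is missing upstream as repealed is a simplification introduced here.

```python
from typing import Dict, List


def refresh_knowledge_base(
    internal: Dict[str, str],
    external: Dict[str, str],
) -> List[str]:
    """Align internal entries with the external source; return the changed keys."""
    changed: List[str] = []
    for key, current_text in external.items():
        if internal.get(key) != current_text:   # missing or outdated entry
            internal[key] = current_text
            changed.append(key)
    for key in list(internal):
        if key not in external:                 # assumed repealed upstream
            del internal[key]
            changed.append(key)
    return changed


# Placeholder texts, not real statutory content.
internal_kb = {"Art. 1": "old wording", "Art. 2": "unchanged", "Art. 9": "repealed text"}
external_kb = {"Art. 1": "new wording", "Art. 2": "unchanged", "Art. 3": "newly added"}

changed_keys = refresh_knowledge_base(internal_kb, external_kb)
print(changed_keys)
```

Returning the list of changed keys gives downstream components a way to decide which earlier conclusions may need to be re-verified.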

LawThinker: An Evolving Legal Mind

LawThinker utilizes an Explore-Verify-Memorize strategy to facilitate autonomous legal reasoning. This process begins with exploration, where the system researches relevant legal information. Subsequently, each finding undergoes verification to ensure accuracy and reliability. Critically, validated legal knowledge is then stored in a dedicated Memory Module, providing a persistent and readily accessible knowledge base. This allows LawThinker to build upon past reasoning, avoid redundant research, and improve the efficiency and accuracy of future legal analyses by leveraging previously confirmed information.

LawThinker runs this research process autonomously, investigating legal issues without human intervention. It iteratively explores relevant legal resources and verifies each intermediate step for factual and legal accuracy before committing the result to the Memory Module. Because later decisions can draw on previously verified information, the agent can adapt to new developments in a case while keeping its reasoning efficient and reliable.
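The benefit of storing verified findings can be shown with a small cache-like sketch. The `MemoryModule` class below is a hypothetical stand-in, not the paper's Memory Module: the key normalization, the `research_calls` counter, and the placeholder research and verification functions are assumptions made for illustration.

```python
from typing import Callable, Dict, Optional


class MemoryModule:
    """Caches verified answers so the same question is researched only once."""

    def __init__(self, research: Callable[[str], str], verify: Callable[[str], bool]):
        self._research = research
        self._verify = verify
        self._store: Dict[str, str] = {}
        self.research_calls = 0                     # counts actual research runs

    def lookup(self, question: str) -> Optional[str]:
        key = " ".join(question.lower().split())    # normalize case and whitespace
        if key in self._store:
            return self._store[key]                 # reuse verified knowledge
        self.research_calls += 1
        answer = self._research(question)
        if self._verify(answer):
            self._store[key] = answer               # memorize only verified answers
            return answer
        return None                                 # unverified results are not stored


# Placeholder research and verification functions (illustrative only).
memory = MemoryModule(
    research=lambda q: "placeholder answer",
    verify=lambda a: bool(a),
)

first = memory.lookup("What is the limitation period?")
second = memory.lookup("  what is the LIMITATION period? ")
print(first, second, memory.research_calls)
```

The second lookup is served from memory even though its spelling differs, so the research function runs only once.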

Evaluations of LawThinker on established legal reasoning benchmarks demonstrate significant performance gains. Specifically, the system achieved a 24% overall improvement on the J1-EVAL benchmark when compared to direct reasoning baselines, and an 11% improvement over workflow-based methods on the same benchmark. Across LexEval, LawBench, and UniLaw-R1-Eval, LawThinker exhibited an average accuracy improvement of 6%. Further analysis revealed that LawThinker attained the highest Court Stage Completion Rate across both civil and criminal court stages, indicating a robust ability to progress through complex legal reasoning tasks.

The pursuit of autonomous legal reasoning, as demonstrated by LawThinker, echoes a fundamental truth about complex systems. Every architectural promise of freedom – in this case, a fully automated agent navigating legal complexities – eventually demands sacrifices. The Explore-Verify-Memorize strategy, with its DeepVerifier, isn’t a solution, but a carefully constructed compromise, acknowledging the inherent uncertainty within dynamic judicial environments. As Andrey Kolmogorov observed, “The most important things are not those that are easy to measure.” The meticulous step-level verification isn’t about achieving perfect accuracy, but about building a resilient system capable of navigating inevitable failures – a temporary cache against the chaos of legal interpretation. The agent doesn’t solve legal reasoning; it adapts to it.

What’s Next?

LawThinker, as presented, is less an arrival than a beachhead. The architecture doesn’t solve legal reasoning; it merely relocates the points of failure. Step-level verification, while a pragmatic concession to fallibility, is a temporary bulwark against the inevitable entropy of complex systems. A guarantee of procedural compliance remains, predictably, a contract with probability. The system’s performance will, in time, be less about the initial knowledge base and more about its capacity to gracefully degrade – to identify useful errors, rather than striving for their elimination.

Future iterations will almost certainly grapple with the problem of adversarial environments. Legal systems are not static datasets; they are negotiated realities. A truly robust agent must anticipate not just what the law is, but how it will be contested. The exploration phase, currently focused on knowledge acquisition, will need to incorporate a theory of mind – an ability to model the intent and strategies of opposing counsel.

Stability, as currently understood, is merely an illusion that caches well. The real challenge isn’t building a system that works today, but one that can become something else tomorrow. Chaos isn’t failure – it’s nature’s syntax. The next phase of research should focus less on optimizing for specific legal tasks and more on cultivating a capacity for emergent behavior – for lawful, yet unpredictable, adaptation.

Original article: https://arxiv.org/pdf/2602.12056.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-14 01:26