Author: Denis Avetisyan

Researchers have developed a new system that uses AI and robotic assistance to reduce the burden on scientists performing delicate tasks under a microscope.

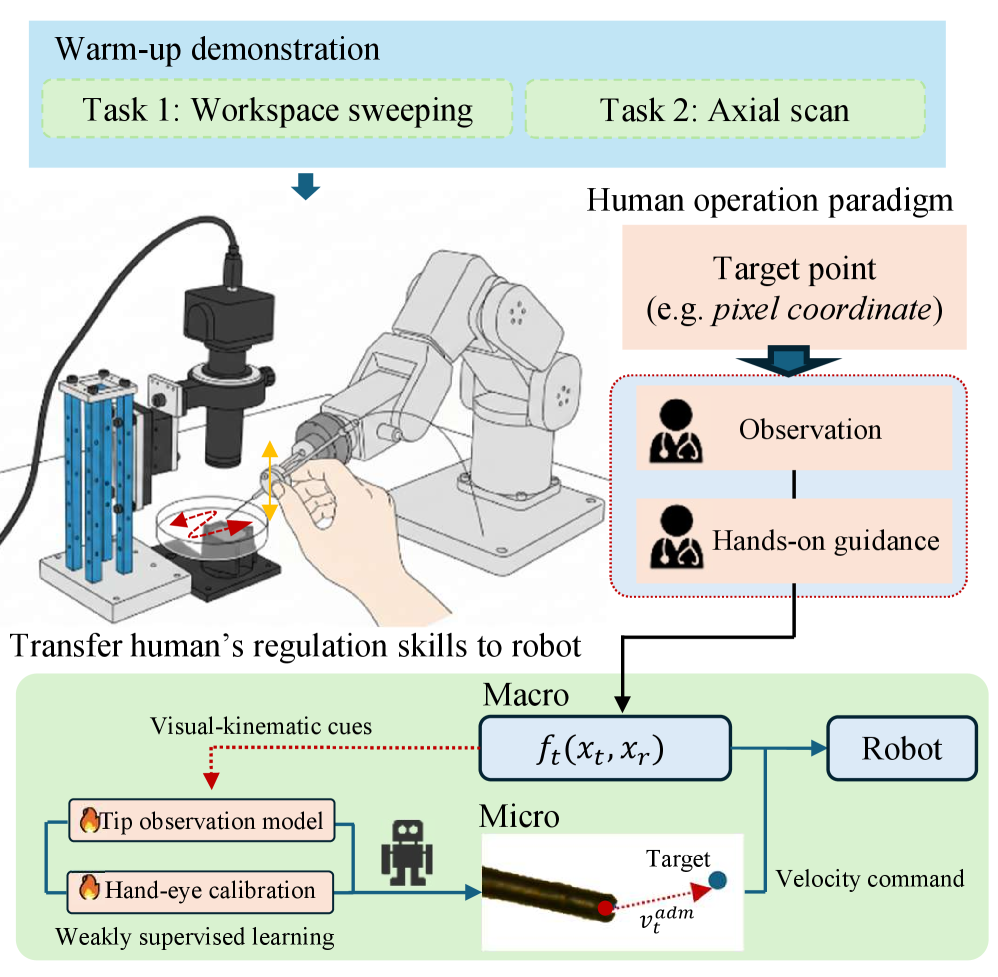

A weakly supervised framework enables a robot to learn from human demonstrations and provide precise, safe assistance in micromanipulation tasks, leveraging visual servoing and 3D reconstruction.

Precise robotic micromanipulation demands high accuracy, yet traditional automation often relies on laborious and expensive 2D labeling. This work, ‘Learning From a Steady Hand: A Weakly Supervised Agent for Robot Assistance under Microscopy’, introduces a novel weakly supervised framework that leverages human demonstrations and calibration-aware perception to achieve robust and accurate steady-hand robotic assistance. By extracting implicit spatial information from reusable trajectories, the system attains [latex]49\,\mu m[/latex] lateral and [latex]\leq 291\,\mu m[/latex] depth accuracy, demonstrably reducing operator workload by 77.1% in user studies ([latex]\mathcal{N}=8[/latex]). Could this approach unlock more accessible and reliable robotic tools for delicate biomedical interventions and beyond?

The Inherent Challenges of Microscopic Manipulation

Robotic micromanipulation, the precise control of robots at the microscopic level, is fundamentally challenged by the scaling of physical limitations. As devices shrink, surface forces such as friction and adhesion become disproportionately significant relative to inertia, making smooth, predictable movements exceedingly difficult to achieve. Minute imperfections in robotic construction, negligible at larger scales, now introduce substantial positioning errors. Furthermore, the very actuators designed to generate these microscopic movements often exhibit backlash and hysteresis – delays and inconsistencies in response – that compromise accuracy. Together, these factors mean that even meticulously designed robotic systems struggle to perform delicate tasks consistently and reliably within complex, microscopic environments, and they call for innovative control strategies and advanced sensing technologies.

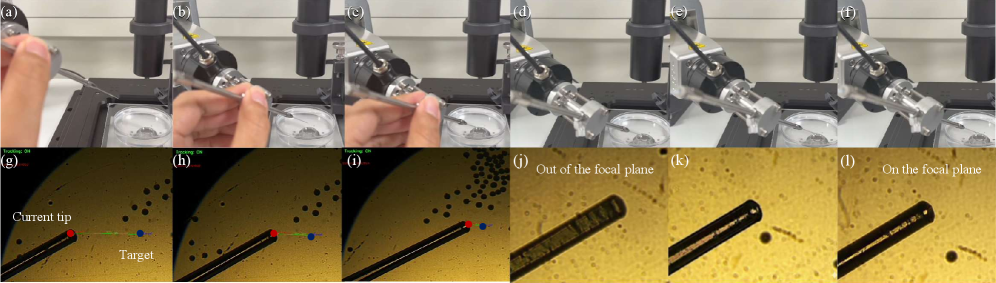

The efficacy of robotic micromanipulation is substantially challenged when operating with transparent tools within complex environments. Visual tracking systems struggle to accurately identify and delineate the position of transparent instruments, such as glass capillaries or silica needles, against similarly refractive backgrounds, leading to significant errors in positional estimation. This difficulty is compounded in cluttered scenes where overlapping structures and limited contrast further obscure the tool’s outline, hindering depth perception. Consequently, the robot’s ability to precisely interact with microscopic samples is diminished, impacting the success of delicate procedures such as cell injection or microassembly, and demanding sophisticated image processing techniques to compensate for these inherent visibility limitations.

Despite advances in robotic systems, current micromanipulation techniques frequently demand significant operator involvement, creating a bottleneck in efficiency and reliability. The delicate nature of microscopic tasks means humans must often oversee and correct the robot’s movements, a process that is both time-consuming and prone to error. This reliance on manual control not only slows down procedures but also introduces operator fatigue, which can degrade precision and repeatability over time. Prolonged concentration and the need for minute adjustments contribute to physical and mental strain, impacting the consistency of results and hindering the potential for automation in fields like microassembly, biological research, and nanomedicine. Consequently, a critical need exists for more autonomous and robust micromanipulation systems capable of operating with minimal human intervention.

Synergistic Control: Bridging Human Intuition with Robotic Precision

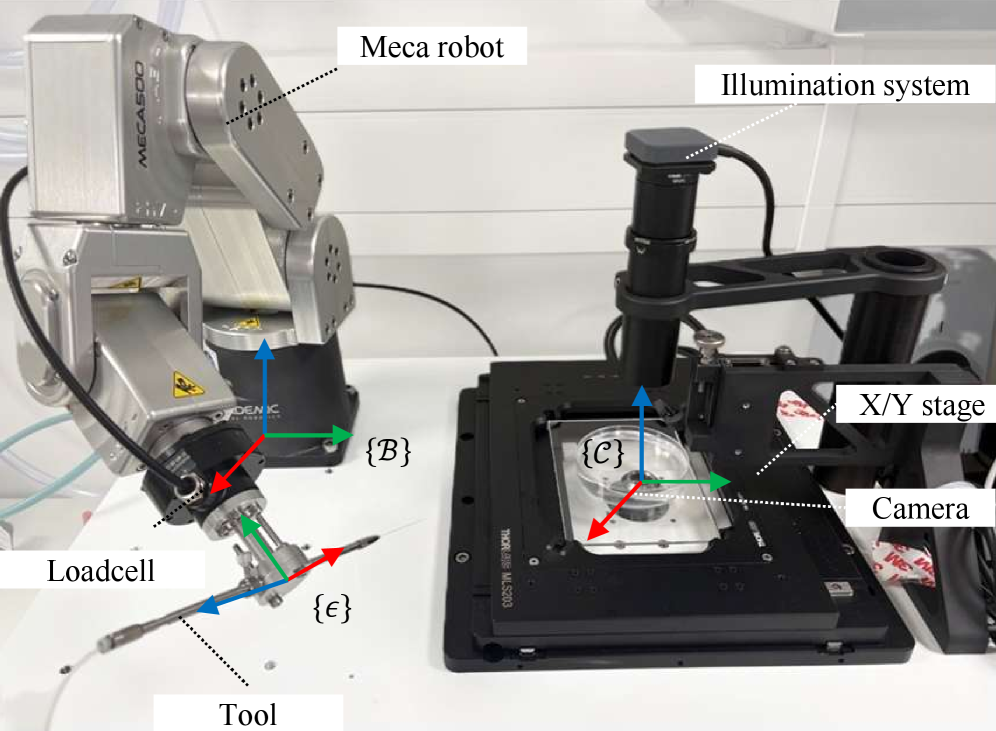

The proposed co-manipulation technique leverages human-robot collaboration by allowing a human operator to directly guide a robotic arm during tasks. This approach capitalizes on the operator’s inherent intuition and dexterity, qualities difficult to fully replicate in autonomous robotic systems. The human provides high-level direction and nuanced adjustments, while the robotic arm provides strength, precision, and the ability to reach areas inaccessible to the human operator. This collaborative strategy aims to combine the adaptability of human control with the repeatability and power of robotic actuation, resulting in improved performance and efficiency in complex manipulation tasks.

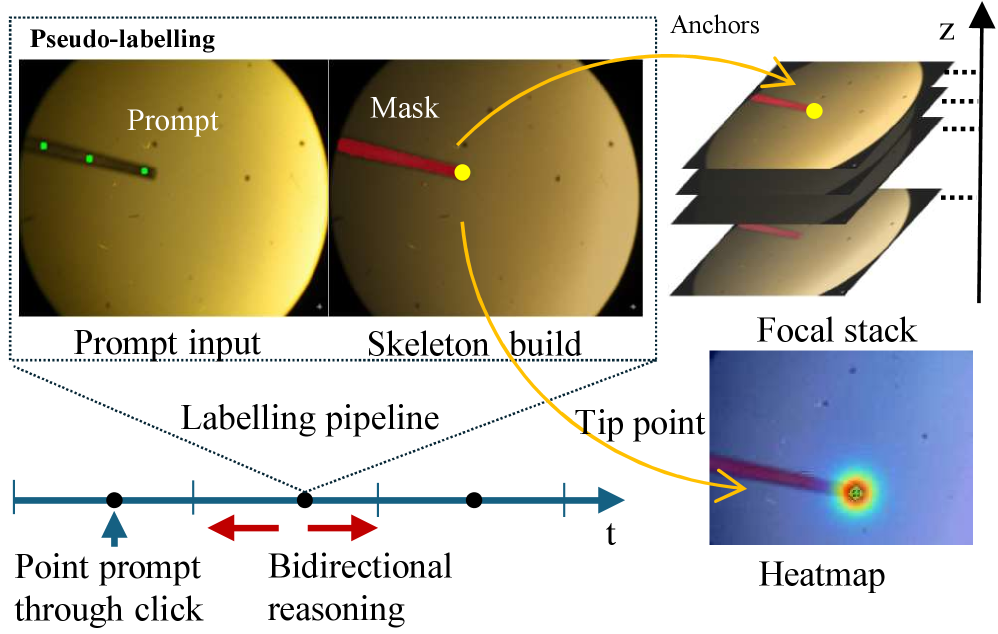

Depth estimation within the system uses a defocus-based method that measures the degree of blur present in the image. Under a microscope, blur grows with distance from the focal plane, so the amount of defocus can be converted into a depth value. This approach is robust in challenging environments – including those with low texture or variable lighting – because it relies less on feature detection than stereo or structured light techniques. The system analyzes the circle of confusion created by out-of-focus points to determine distance, enabling 3D reconstruction even with minimal discernible features in the scene. A mapping from blur radius to distance then yields a depth map for real-time environmental understanding.
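To make the blur-to-depth idea concrete, here is a minimal sketch, not the paper's implementation. It assumes a common focus measure (variance of a discrete Laplacian) and a calibration sweep recorded at known offsets from the focal plane. The function names, the synthetic texture, the repeated-averaging stand-in for optical blur, and the offsets in micrometers are all hypothetical.

```python
import numpy as np

def focus_measure(img):
    """Variance of a 4-neighbour discrete Laplacian; larger means sharper."""
    img = np.asarray(img, dtype=np.float64)
    lap = (img[:-2, 1:-1] + img[2:, 1:-1] + img[1:-1, :-2] + img[1:-1, 2:]
           - 4.0 * img[1:-1, 1:-1])
    return float(lap.var())

def smooth(img, passes):
    """Stand-in for optical defocus: repeated 3-tap averaging along both axes."""
    out = np.asarray(img, dtype=np.float64)
    for _ in range(passes):
        out = (np.roll(out, 1, axis=0) + out + np.roll(out, -1, axis=0)) / 3.0
        out = (np.roll(out, 1, axis=1) + out + np.roll(out, -1, axis=1)) / 3.0
    return out

def depth_from_focus(img, calib_focus, calib_z_um):
    """Interpolate a calibration curve of focus measure vs. offset to get |z|."""
    order = np.argsort(calib_focus)  # np.interp needs increasing x values
    return float(np.interp(focus_measure(img),
                           np.asarray(calib_focus)[order],
                           np.asarray(calib_z_um)[order]))

# Synthetic calibration sweep (all values hypothetical): more smoothing
# plays the role of a larger offset from the focal plane.
rng = np.random.default_rng(0)
texture = rng.random((64, 64))
calib_z_um = [0.0, 50.0, 100.0, 150.0, 200.0]
calib_focus = [focus_measure(smooth(texture, p)) for p in range(5)]

estimate = depth_from_focus(smooth(texture, 2), calib_focus, calib_z_um)
print(f"estimated |z| = {estimate:.1f} um")
```

A single focus measure only recovers the magnitude of the offset, not whether the tool sits above or below the focal plane, so a real system needs an additional cue to resolve the sign.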

The robotic system combines visual servoing and admittance control to achieve stable and precise movements during co-manipulation. Visual servoing uses camera feedback to minimize the error between the desired and actual robot pose, enabling the robot to track a target guided by human input. Concurrently, admittance control converts the forces the operator applies into commanded motion, with virtual mass and damping parameters setting how compliantly the robot yields to human guidance and environmental contact. This combination lets the robot respond to both visual corrections and force inputs from the operator, keeping co-manipulation smooth, stable, and intuitive even with imprecise or dynamic human movements. The visual servoing gains and admittance parameters are tuned to prioritize stability and responsiveness during the shared control task.
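The interplay of the two controllers can be sketched as follows. This is an illustrative sketch rather than the authors' controller: it assumes a textbook admittance law, `mass * dv/dt + damping * v = f_ext`, and a purely proportional image-based correction. The parameter values, the units, and the simple additive blend of the two velocities are assumptions chosen for the example.

```python
import numpy as np

def admittance_step(v, f_ext, dt, mass=2.0, damping=40.0):
    """One explicit-Euler step of  mass * dv/dt + damping * v = f_ext."""
    v = np.asarray(v, dtype=float)
    f_ext = np.asarray(f_ext, dtype=float)
    return v + dt * (f_ext - damping * v) / mass

def servo_velocity(target_px, tip_px, gain=0.5, um_per_px=1.0):
    """Proportional image-based correction pointing from the tip to the target."""
    err_px = np.asarray(target_px, dtype=float) - np.asarray(tip_px, dtype=float)
    return gain * um_per_px * err_px

def simulate_push(force, steps=1000, dt=1e-3):
    """Velocity after the operator holds a constant force for steps * dt seconds."""
    v = np.zeros(2)
    for _ in range(steps):
        v = admittance_step(v, force, dt)
    return v

v_hand = simulate_push(force=[4.0, 0.0])   # settles near force / damping
v_cam = servo_velocity(target_px=[10, 0], tip_px=[0, 0])
v_cmd = v_hand + v_cam                     # one simple way to blend both inputs
print(v_hand, v_cam, v_cmd)
```

Higher damping makes the tool feel stiffer and filters hand tremor at the cost of responsiveness, which is the trade-off the tuning described above has to balance.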

Quantifying Performance: Establishing a Benchmark for Precision

System calibration involved establishing a precise coordinate transformation between the robotic platform and the imaging modality, utilizing a checkerboard pattern with known dimensions to minimize distortion. Dataset acquisition comprised capturing images of a diverse set of surgical phantoms and anatomical specimens under controlled lighting conditions, ensuring a balanced representation of anatomical variability and potential artifacts. A total of 1500 images were collected, manually segmented by three independent experts, and subsequently reconciled to create a gold standard dataset for supervised learning. Data augmentation techniques, including rotations, translations, and scaling, were employed to artificially expand the dataset size and improve the system’s robustness to variations in image quality and anatomical presentation. This meticulous approach to data preparation was essential for minimizing bias and maximizing the generalizability of the trained model.

The system’s accuracy in the axial and lateral dimensions was quantified through testing, yielding an axial (depth) accuracy of at most 291 μm and a lateral accuracy of 49 μm. These values are reported at a 95% confidence level, meaning that 95% of measurements fall within these tolerances. This level of precision is statistically comparable to that achieved by experienced human operators performing the same tasks, as confirmed by comparing measurement data from the system and from human subjects.

Operator workload reduction was quantified with the NASA Task Load Index (NASA-TLX), a subjective, multi-dimensional assessment tool. Experimental data indicate a 77.1% decrease in overall workload when using the proposed system compared to traditional steady-hand control methods. The NASA-TLX measures workload across six subscales: mental demand, physical demand, temporal demand, performance, effort, and frustration. Analysis of the NASA-TLX scores showed statistically significant reductions in all subscales, contributing to the overall 77.1% workload reduction and suggesting a substantial decrease in cognitive and physical strain for operators.
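For readers unfamiliar with how such a percentage arises, the arithmetic can be sketched as below. The sketch assumes the unweighted ("raw") variant of the index, in which the overall score is the mean of the six subscale ratings on a 0 to 100 scale; this article does not say whether the study used the raw or the weighted form. The ratings are invented for illustration and are not the study's data.

```python
SUBSCALES = ("mental", "physical", "temporal",
             "performance", "effort", "frustration")

def raw_tlx(ratings):
    """Unweighted (raw) TLX: mean of the six subscale ratings (0-100 each)."""
    missing = [s for s in SUBSCALES if s not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)

def percent_reduction(baseline, assisted):
    """Relative drop from the baseline score, in percent."""
    return 100.0 * (baseline - assisted) / baseline

# Invented example ratings, NOT taken from the user study.
manual = dict(zip(SUBSCALES, (70, 60, 50, 40, 80, 60)))
assisted = dict(zip(SUBSCALES, (20, 10, 15, 10, 20, 15)))

reduction = percent_reduction(raw_tlx(manual), raw_tlx(assisted))
print(f"workload reduction: {reduction:.1f}%")
```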

![Despite optical blur from varying depth, lateral visual servoing maintains precision, as demonstrated by comparable error ellipse sizes of approximately [latex]10\sigma[/latex] pixels for both defocused and focused conditions.](https://arxiv.org/html/2601.20776v1/x9.png)

Expanding the Horizon: Towards Autonomous and Scalable Systems

The development of truly automated micromanipulation systems hinges on reducing the laborious task of data annotation. Recent advancements leverage weakly supervised learning, a technique that minimizes the need for precise, manual labeling of training data. Instead of requiring detailed outlines of objects or precise manipulation points, the system learns from more readily available cues – such as identifying the presence of a target cell rather than its exact boundaries. This approach dramatically reduces the time and expertise required to prepare datasets for machine learning algorithms, fostering scalability and opening doors to broader implementation in diverse experimental settings. By intelligently inferring information from limited or imprecise data, these techniques are poised to unlock a new era of autonomous robotic manipulation at the microscopic level.

The developed micromanipulation framework benefits from potential integration with hand-eye calibration techniques, promising significant gains in both accuracy and adaptability. This process establishes the precise spatial relationship between the robotic arm’s end-effector and the imaging system, typically a microscope, allowing the system to translate visual information into accurate physical movements. By calibrating the hand-eye transformation, the framework can reliably locate and manipulate objects within the field of view, even as the experimental setup changes or the camera’s perspective shifts. This enhanced robustness is critical for applications requiring precise targeting, such as cell injection or microassembly, and facilitates seamless transitions between diverse experimental configurations without requiring tedious re-calibration or manual adjustments.
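As a simplified illustration of what such a calibration produces, the sketch below fits a 2D affine map from image pixels to robot coordinates by least squares. It is a planar stand-in, not a full hand-eye calibration (which estimates a rigid 3D transform), and not the paper's procedure. The function names and the four point correspondences are hypothetical; the points were constructed from a 0.5 μm/pixel scale, a 90-degree rotation, and an offset of (1000, 2000) μm.

```python
import numpy as np

def fit_affine(px_pts, robot_pts):
    """Least-squares 2D affine map from pixel to robot coordinates (3x2 matrix)."""
    px = np.asarray(px_pts, dtype=float)
    rb = np.asarray(robot_pts, dtype=float)
    A = np.hstack([px, np.ones((len(px), 1))])   # homogeneous pixel coordinates
    M, *_ = np.linalg.lstsq(A, rb, rcond=None)
    return M

def px_to_robot(M, px_pt):
    """Apply the fitted map to a single pixel location."""
    return np.append(np.asarray(px_pt, dtype=float), 1.0) @ M

# Hypothetical correspondences: tool tip seen at px_pts while the robot
# reports robot_pts (micrometers).
px_pts = [(0, 0), (100, 0), (0, 100), (100, 100)]
robot_pts = [(1000, 2000), (1000, 2050), (950, 2000), (950, 2050)]

M = fit_affine(px_pts, robot_pts)
print(px_to_robot(M, (50, 50)))
```

With more than three correspondences the least-squares fit averages out measurement noise, which is why calibration routines typically collect many tip positions across the field of view.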

Continued development centers on refining the precision of robotic micromanipulation by directly tackling the inherent uncertainties in depth estimation – a crucial factor when operating within the microscopic realm. Researchers are investigating advanced control strategies, moving beyond traditional methods to incorporate real-time feedback and adaptive algorithms that can compensate for these uncertainties and environmental disturbances. This pursuit of fully autonomous operation envisions systems capable of not only locating and manipulating microscale objects but also of making independent decisions regarding task execution, potentially revolutionizing fields like drug discovery, single-cell biology, and microassembly through increased throughput and reduced human intervention.

The pursuit of robotic assistance in micromanipulation, as demonstrated in this work, echoes a fundamental tenet of system design: elegance through simplicity. The framework’s reliance on weakly supervised learning and visual servoing isn’t about achieving flashy complexity, but about extracting maximal performance from limited data – a pragmatic approach to a challenging problem. As Robert Tarjan aptly stated, “Data structures and algorithms are the heart of programming.” This research embodies that principle; the chosen data representation and algorithmic approach facilitate a robust, adaptable system capable of assisting in delicate tasks like cell manipulation, reducing operator burden without sacrificing precision. If the system looks clever, it’s probably fragile; this work prioritizes a steady, reliable hand over intricate, potentially brittle solutions.

The Steady Hand Evolves

The pursuit of robotic assistance in micromanipulation, as demonstrated by this work, inevitably reveals the limitations inherent in transferring skill. The framework rightly acknowledges the value of human demonstration, yet the very act of distilling that demonstration into a trainable system introduces a simplification – a reduction of nuanced control to quantifiable parameters. Every new dependency on learned models is the hidden cost of freedom, a trade-off between autonomy and the unpredictable grace of a skilled operator. Future iterations must address not just what a human does, but why, and how that intention adapts to unforeseen circumstances.

A critical path forward lies in embracing the inherent messiness of biological systems. Current approaches often demand precise 3D reconstructions and calibrations, imposing a rigidity that clashes with the dynamic nature of the samples themselves. The system’s performance, while promising, remains tethered to the quality of these foundational datasets. A more robust architecture will likely require methods for continuous learning and adaptation during manipulation, integrating visual feedback not merely for servoing, but for refining the model of the environment itself.

Ultimately, the challenge isn’t simply to automate the movements of a steady hand, but to create a system capable of recognizing its own limitations – a robotic assistant that knows when to defer to human expertise, and when to attempt a delicate maneuver with carefully calculated risk. The elegance of such a solution will not be found in algorithmic complexity, but in the simplicity of its underlying principles: observation, adaptation, and a healthy respect for the fragility of life.

Original article: https://arxiv.org/pdf/2601.20776.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-29 20:16