Author: Denis Avetisyan

New research reveals that AI, when trained on exceptional writing, can produce text indistinguishable from, and even favored over, human-authored work.

Fine-tuning large language models on high-quality books yields AI-generated writing that challenges traditional notions of authorship and creative labor.

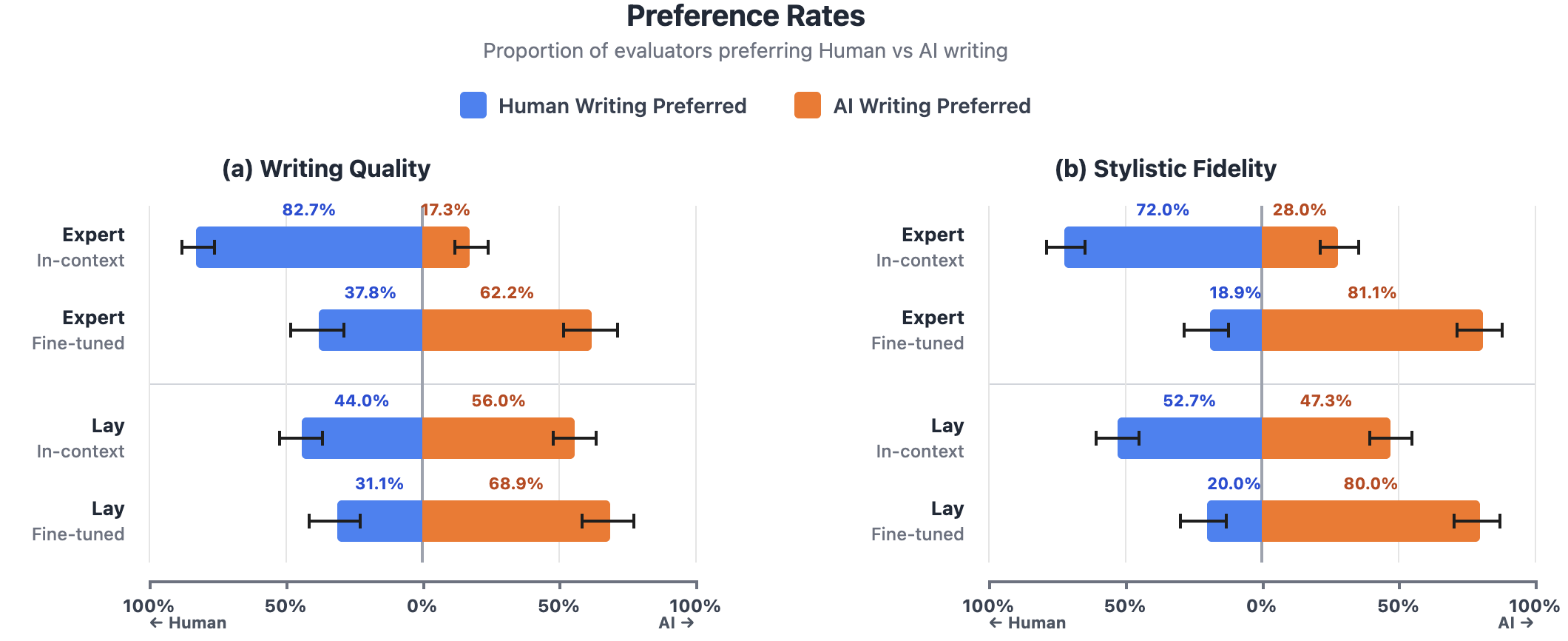

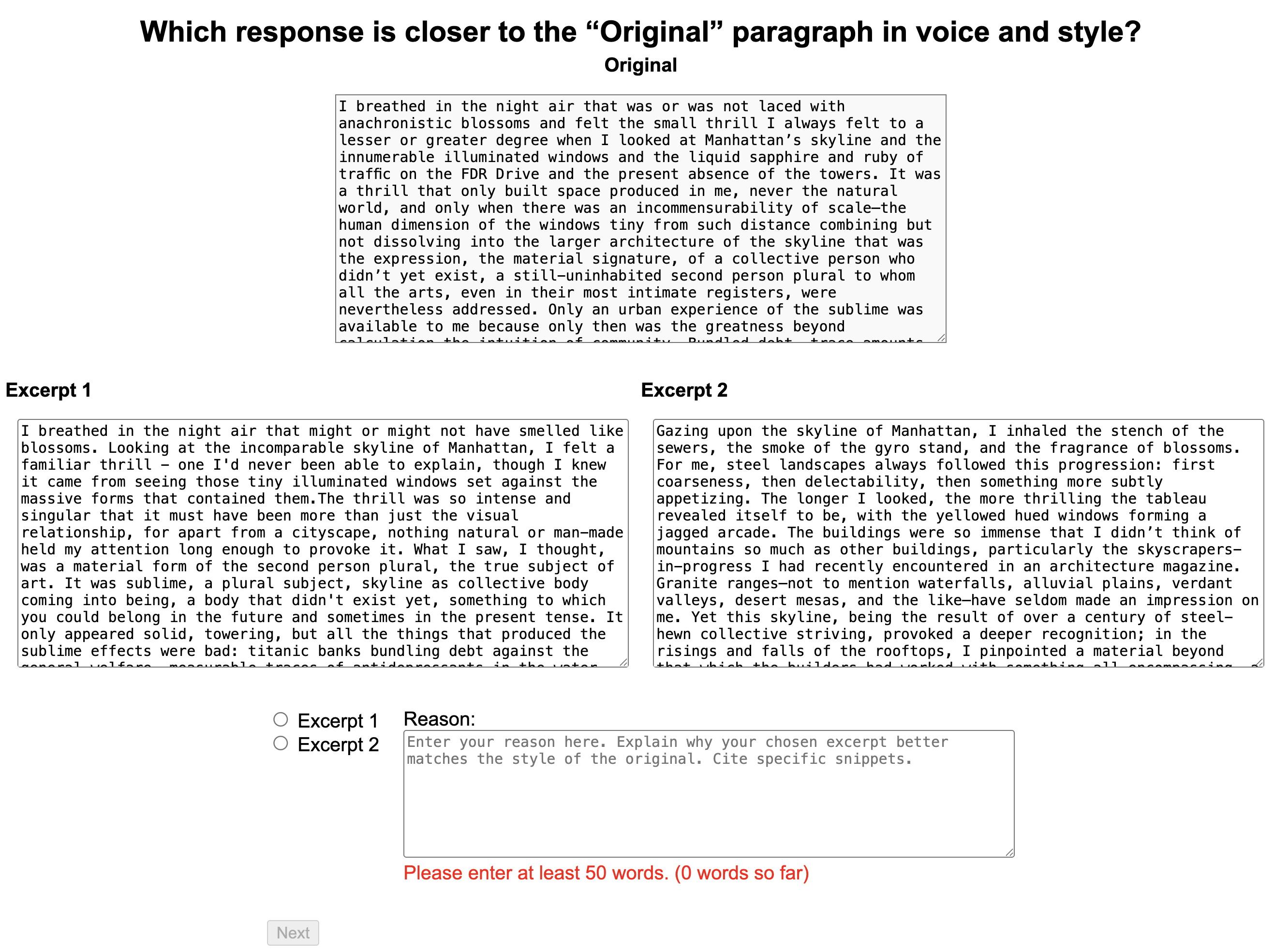

For decades, creative writing has been considered a uniquely human capacity, resistant to mechanical reproduction. This assumption is challenged in ‘Can Good Writing Be Generative? Expert-Level AI Writing Emerges through Fine-Tuning on High-Quality Books’, a study investigating whether large language models can emulate stylistic nuance at an expert level. Results from a behavioral experiment reveal that, after fine-tuning on complete authorial works, AI-generated writing can be preferred over human writing, even by experienced MFA writers, and is consistently favored by lay readers. This finding raises fundamental questions about the definition of authorship, the future of creative labor, and the implications for copyright in an age of increasingly sophisticated generative AI.

The Evolving Landscape of Textual Creation

The creation of textual content is undergoing a profound shift due to the rapid advancement of generative artificial intelligence. These models, trained on massive datasets, are no longer simply tools for grammar checking or basic composition; they are capable of producing remarkably coherent and contextually relevant text, challenging traditional notions of authorship and originality. This capability introduces complex questions regarding intellectual property, plagiarism detection, and the very definition of creative work. As AI increasingly blurs the lines between human and machine-generated content, the established frameworks for attributing and valuing textual creation are being actively debated, forcing a re-evaluation of how society understands and protects creative endeavors in the digital age.

Contemporary artificial intelligence models demonstrate a remarkable capacity for stylistic mimicry, moving beyond simple text generation to emulate the nuances of human writing. These systems, trained on vast datasets of diverse texts, can now approximate the voice, tone, and even the subtle idiosyncrasies of particular authors or genres. While human writing continues to serve as a quality benchmark – particularly regarding originality and complex reasoning – the gap in stylistic fidelity is rapidly closing. This isn’t merely about replicating grammatical structures; advanced models can analyze patterns in word choice, sentence length, and figurative language to produce text that is increasingly indistinguishable from human-authored content, prompting ongoing investigation into the very definition of authorial style and its role in textual interpretation.

Recent investigations into reader perception reveal a surprising trend: audiences are increasingly unable to reliably distinguish between human-authored and artificially generated text, and, in some cases, actually prefer the output of AI models. Studies employing blinded evaluations demonstrate that readers often rate AI-generated content as equally – and sometimes more – coherent, engaging, and informative than text crafted by humans. This preference isn’t limited to simple factual content; it extends to creative writing, suggesting that AI is not merely replicating style, but effectively mimicking the qualities readers value in prose. The implications are substantial, challenging established notions of authorship and raising questions about the future of content creation and consumption, as well as the criteria by which textual merit is assessed.

![This three-phase study investigates AI's ability to emulate distinctive authorial voices (specifically, Ottessa Moshfegh's style in *My Year of Rest and Relaxation*) by comparing human and AI-generated text, evaluating preferences with rationales, and exploring writers' reasoning for choosing AI over human writing.](https://arxiv.org/html/2601.18353v1/x3.png)

Unlocking Style: Methods for AI Text Generation

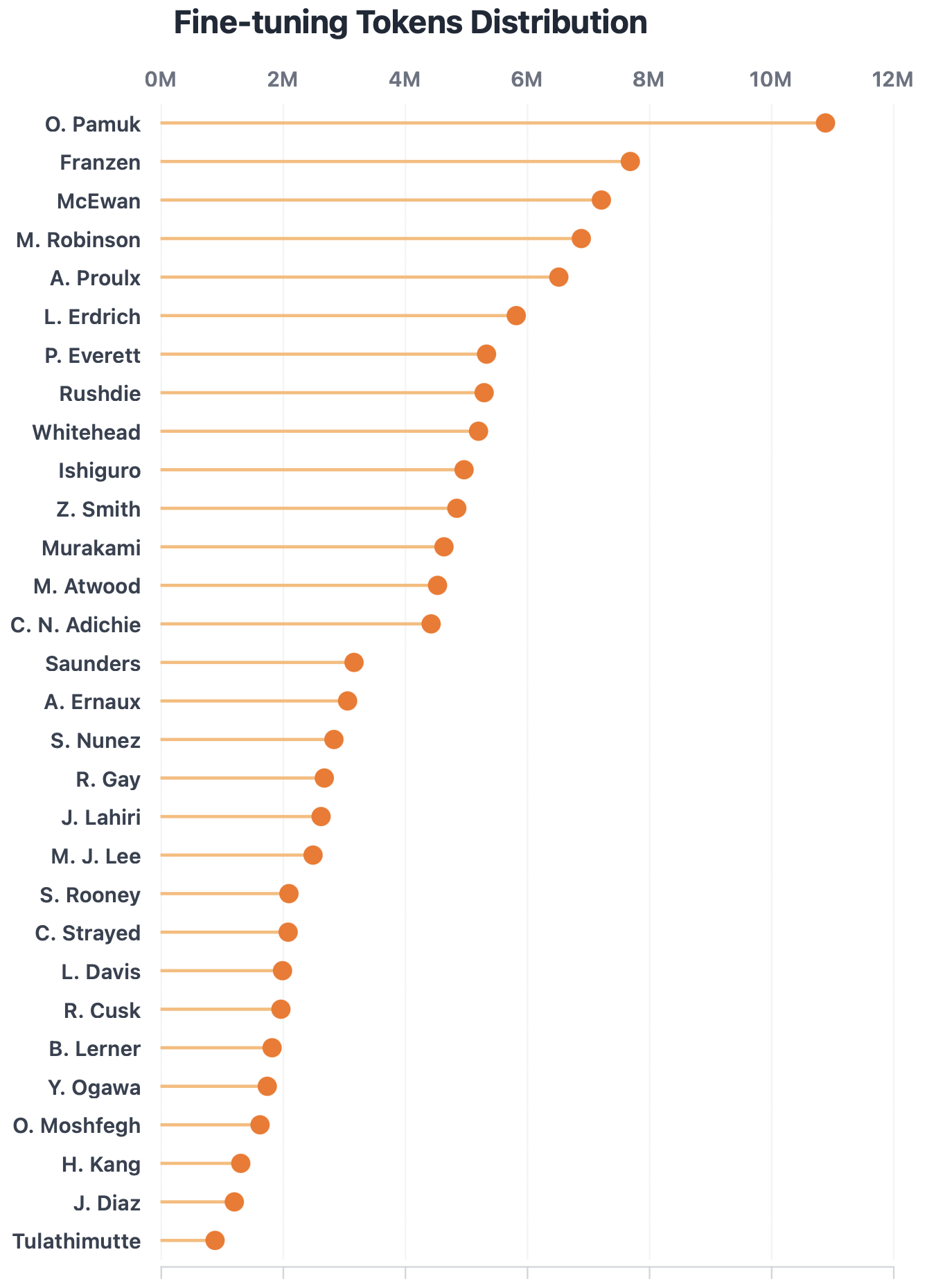

LLM fine-tuning involves further training a pre-trained large language model on a dataset specifically curated to represent a desired stylistic profile. This process adjusts the model’s internal parameters – its weights – to increase the probability of generating text that conforms to the characteristics of the training data. Datasets for stylistic fine-tuning typically consist of text samples exhibiting the target style, and can be relatively small – often ranging from a few thousand to tens of thousands of examples – depending on the complexity of the desired style and the base model’s capabilities. The resulting fine-tuned model then generates text that statistically resembles the provided stylistic examples, offering a high degree of control over voice, tone, and writing conventions.
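The idea that further training shifts a model's weights toward the target style can be illustrated with a deliberately tiny stand-in. The sketch below is not the study's pipeline and does not involve a real LLM: it assumes a toy "model" that is just one logit per word, and the vocabulary, the style sample, and the names `fine_tune` and `avg_log_likelihood` are all hypothetical. It only shows that gradient steps on the training text raise the probability the model assigns to that text.

```python
import math
from collections import Counter


def softmax(logits):
    """Turn a {word: logit} mapping into a probability distribution."""
    peak = max(logits.values())
    exps = {w: math.exp(v - peak) for w, v in logits.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


def avg_log_likelihood(logits, tokens):
    """Average log-probability the toy model assigns to the tokens."""
    probs = softmax(logits)
    return sum(math.log(probs[t]) for t in tokens) / len(tokens)


def fine_tune(logits, tokens, steps=200, lr=0.5):
    """Gradient ascent on the average log-likelihood of the style sample."""
    logits = dict(logits)  # leave the "pre-trained" weights untouched
    n = len(tokens)
    target = {w: c / n for w, c in Counter(tokens).items()}
    for _ in range(steps):
        probs = softmax(logits)
        for w in logits:
            # Gradient for logit w: empirical frequency minus model probability.
            logits[w] += lr * (target.get(w, 0.0) - probs[w])
    return logits


# Hypothetical vocabulary and style sample, for illustration only.
vocab = ["the", "sleep", "tired", "bright", "happy", "pills"]
base = {w: 0.0 for w in vocab}  # uniform "pre-trained" model
style = "tired sleep pills sleep tired the sleep".split()

tuned = fine_tune(base, style)
print(avg_log_likelihood(base, style), avg_log_likelihood(tuned, style))
```

A real fine-tuning run updates billions of weights with the same kind of likelihood objective, but the direction of the change is the same: text resembling the training data becomes more probable.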

In-Context Prompting achieves stylistic control in large language models by providing example texts directly within the prompt, guiding the model’s output without modifying the underlying model weights. This technique leverages the model’s ability to recognize patterns and extrapolate from the provided examples, effectively establishing a desired style through demonstration rather than parameter adjustment. The prompt itself includes both the exemplars – passages written in the target style – and the input text to be transformed, allowing the model to generate continuations or revisions consistent with the demonstrated stylistic characteristics. This approach offers flexibility and avoids the computational expense and data requirements associated with full model fine-tuning.
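As a rough sketch of how such a prompt might be assembled, the snippet below places exemplar passages ahead of the writing task. The function name `build_style_prompt`, the prompt wording, and the sample passages are assumptions made for illustration; the source does not specify the prompt template used in the study.

```python
def build_style_prompt(exemplars, task, author_label="the target author"):
    """Assemble a few-shot prompt: style exemplars first, then the task."""
    if not exemplars:
        raise ValueError("at least one exemplar passage is required")
    parts = [f"The following passages are written in the style of {author_label}."]
    for i, passage in enumerate(exemplars, start=1):
        parts.append(f"Passage {i}:\n{passage.strip()}")
    parts.append(f"Task: {task.strip()}")
    parts.append("Write the response in the same style as the passages above.")
    return "\n\n".join(parts)


# Hypothetical exemplars; a real prompt would use passages by the chosen author.
prompt = build_style_prompt(
    exemplars=["The rain came sideways. Nobody minded.", "She slept through March."],
    task="Describe a quiet morning in a city apartment.",
)
print(prompt)
```

The resulting string would be sent to the model unchanged; no weights are modified, which is the practical difference from fine-tuning.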

Both fine-tuning Large Language Models (LLMs) and in-context prompting techniques enhance AI writing quality and enable the emulation of nuanced writing styles. However, comparative evaluations indicate a significant performance advantage for fine-tuning. Expert reviewers demonstrated an 81.1% preference rate for text generated from fine-tuned models over text generated using in-context prompting, suggesting that altering model weights through fine-tuning results in a demonstrably superior ability to consistently reproduce desired stylistic characteristics.
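A preference rate of this kind is a proportion of pairwise judgments, and its uncertainty depends on how many judgments were collected. The sketch below computes a rate with a Wilson score interval; the vote counts are hypothetical and are not taken from the study, whose sample sizes are not given here.

```python
import math


def preference_rate(wins, total, z=1.96):
    """Return (rate, lower, upper) using the Wilson score interval."""
    if total <= 0 or not 0 <= wins <= total:
        raise ValueError("need 0 <= wins <= total and total > 0")
    p = wins / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return p, centre - half, centre + half


# Hypothetical counts: 30 of 40 pairwise judgments favour the fine-tuned text.
rate, low, high = preference_rate(30, 40)
print(f"rate={rate:.3f}, 95% interval=({low:.3f}, {high:.3f})")
```

If the lower bound stays above 0.5, the preference is unlikely to be a coin-flip artifact at that sample size.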

Capturing Authorial Voice Through AI

Style extraction, a core component of AI-driven content generation, involves analyzing textual data to identify and quantify an author’s distinctive linguistic patterns. This process goes beyond simple vocabulary or grammatical analysis; it focuses on quantifiable stylistic elements such as sentence length distribution, word choice frequency (including the use of specific function words and rare terms), and syntactic complexity. The extracted stylistic profile is then used to train AI models to emulate these characteristics, allowing them to generate new content that aligns with the author’s established voice. Successful style extraction requires robust natural language processing techniques and substantial datasets of the author’s writing to accurately capture these nuanced elements and achieve effective stylistic replication.

Current artificial intelligence models are capable of stylistic mimicry to a degree that influences reader preference. Recent evaluations demonstrate that expert judges now favor AI-generated text refined through fine-tuning in 81.1% of comparative assessments. This indicates a significant capability for AI to replicate authorial voice, potentially obscuring the origin of written content and impacting perceptions of authenticity. The observed preference suggests that, in controlled evaluations, the stylistic characteristics produced by fine-tuned AI are increasingly perceived as more desirable than those found in human-authored text.

Evaluations demonstrate a substantial correlation between stylistic accuracy in AI-generated text and perceived authenticity, as evidenced by expert preference data. Comparative analysis reveals a -44.9% shift in expert preference away from human-written text when contrasting outputs generated through in-context prompting versus those produced via fine-tuning methodologies. This indicates that fine-tuning, which focuses on replicating specific stylistic nuances, significantly enhances the perceived quality and authenticity of AI writing, leading to a marked preference over less stylistically accurate, prompting-based outputs. The data highlights that achieving stylistic fidelity is a critical factor in influencing reader perception and bridging the gap between human and AI authorship.

Navigating the Challenges of AI-Generated Content

The rapid increase in AI-generated content introduces a significant risk of market dilution, potentially impacting the perceived and actual value of human-created works. As algorithms become increasingly adept at mimicking creative styles and producing vast quantities of text, images, and audio, the market becomes flooded with readily available content. This abundance can drive down prices and diminish the unique worth associated with original artistry, skilled craftsmanship, and thoughtful intellectual effort. The concern isn’t necessarily about AI replacing creators entirely, but rather the potential for a devaluation of creative labor as the supply of content far outpaces demand, making it harder for original works to stand out and be appropriately compensated. This saturation poses a challenge to sustaining a healthy and vibrant creative ecosystem, requiring new strategies for attribution, verification, and the recognition of genuinely human contributions.

The established framework of copyright law is undergoing critical re-evaluation as artificial intelligence increasingly contributes to creative content. Traditionally, copyright protects works of authorship – creations stemming from human intellect. However, AI-generated works challenge this premise, raising questions about who, if anyone, can claim ownership. Current legal debate centers on whether the AI developer, the user prompting the AI, or the AI itself should be considered the author, and thus entitled to copyright protection. Many jurisdictions maintain that copyright requires human authorship, leaving AI-generated works in a legal gray area. This ambiguity creates risks for those utilizing AI content, potentially leading to disputes over intellectual property and hindering the development of clear guidelines for responsible AI innovation. Courts and lawmakers are actively grappling with these novel issues, seeking to adapt existing laws or create new legislation to address the unique challenges posed by AI-driven creativity and ensure a balanced approach that fosters both innovation and protects the rights of creators.

Researchers are actively exploring digital watermarking as a means to address the growing challenge of distinguishing between human-authored and artificially-generated text. These techniques involve subtly embedding imperceptible signals within the content itself – alterations to phrasing, stylistic choices, or even the statistical distribution of words – that reliably indicate machine origins. Effective watermarks must be robust enough to withstand common editing and paraphrasing while remaining undetectable to a casual reader. Current approaches range from modifying token probabilities during text generation to employing cryptographic signatures, each aiming to provide a verifiable trail of provenance. The successful implementation of such watermarks promises increased transparency in the digital landscape, enabling content creators to protect their work and fostering greater trust in online information by allowing easy identification of AI-generated material.
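The token-probability approach can be sketched with a toy "green list" scheme: a keyed hash of the previous token marks roughly half the vocabulary as green, generation favors green tokens, and detection counts how many tokens are green. Everything below is an assumption for illustration (the key, the vocabulary, the random "model", and the hard restriction to green tokens); production schemes bias a real model's logits rather than sampling uniformly.

```python
import hashlib
import math
import random


def is_green(prev_token, token, key="demo-key", gamma=0.5):
    """Keyed hash decides whether `token` is on the green list after `prev_token`."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    return digest[0] / 256 < gamma


def generate(vocab, length, watermark, seed=0, key="demo-key"):
    """Toy generator: uniform sampling, restricted to green tokens if watermarking."""
    rng = random.Random(seed)
    tokens = ["<s>"]
    for _ in range(length):
        candidates = vocab
        if watermark:
            green = [t for t in vocab if is_green(tokens[-1], t, key)]
            candidates = green or vocab  # fall back if no token is green
        tokens.append(rng.choice(candidates))
    return tokens[1:]


def z_score(tokens, key="demo-key", gamma=0.5):
    """Standardized excess of green tokens over the count expected by chance."""
    if not tokens:
        raise ValueError("tokens must not be empty")
    prev = ["<s>"] + tokens[:-1]
    hits = sum(is_green(p, t, key, gamma) for p, t in zip(prev, tokens))
    n = len(tokens)
    return (hits - gamma * n) / math.sqrt(n * gamma * (1 - gamma))


VOCAB = "the of and to a in is it you that he was for on are with as his they be".split()
marked = generate(VOCAB, 200, watermark=True, seed=1)
plain = generate(VOCAB, 200, watermark=False, seed=1)
print(round(z_score(marked), 2), round(z_score(plain), 2))
```

A large positive score suggests the text was produced with the key; unmarked text should score near zero. Heavy paraphrasing replaces tokens and erodes the score, which is why robustness to editing is a central design concern.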

The Future: Human-AI Collaboration in Writing

The synergy between human writers and artificial intelligence represents a compelling evolution in content creation. This collaborative pathway doesn’t envision AI replacing authors, but rather augmenting their abilities. AI excels at tasks demanding speed and precision – generating initial drafts, identifying stylistic inconsistencies, and refining grammar – thereby liberating human writers to concentrate on the nuanced elements of storytelling, emotional resonance, and original thought. The resulting content benefits from both computational efficiency and uniquely human creativity, leading to pieces that are not only technically sound but also deeply engaging and impactful. This combined approach promises a future where the strengths of both intelligence types converge, fostering a new era of high-quality, compelling narratives.

Artificial intelligence is increasingly capable of handling the more procedural aspects of writing, offering substantial support to human authors. Current AI systems excel at tasks such as generating initial drafts, meticulously proofreading for grammatical errors and stylistic inconsistencies, and even suggesting alternative phrasing to enhance clarity and impact. This automation isn’t intended to replace writers, but rather to liberate them from time-consuming technicalities, allowing greater concentration on the uniquely human elements of storytelling: conceptualization, emotional resonance, nuanced character development, and the overall artistic vision that defines compelling content. By offloading these tasks, writers can dedicate more energy to the creative core of their work, fostering innovation and ultimately producing richer, more engaging narratives.

The convergence of human creativity and artificial intelligence promises a revitalized landscape for text creation, fostering a sustainable ecosystem for both human and artificial writers. Recent data indicates a significant shift in public perception, with 80.0% of general readers now evaluating fine-tuned, AI-generated text as comparable in quality to content produced by humans. This suggests a future where AI doesn’t replace writers, but rather augments their abilities, handling repetitive tasks and stylistic refinements while allowing humans to concentrate on conceptualization, nuanced storytelling, and original thought. Consequently, the collaborative model unlocks novel possibilities – from personalized content generation at scale to the creation of entirely new literary forms – ultimately ensuring a vibrant future for written communication.

The study’s findings regarding AI’s capacity to emulate authorial style, even to the point of surpassing human writing in some evaluations, underscore a fundamental truth about systems: any simplification (in this case, reducing complex writing to trainable parameters) carries a future cost. As Bertrand Russell observed, “To live a life of pleasure, one must avoid boredom and be constantly engaged.” This resonates with the observed ability of the fine-tuned AI to generate compelling text; however, the long-term implications for creative labor and the very definition of authorship present a complex challenge, a kind of systemic ‘boredom’ for the human author, as the boundaries of originality blur. The system, while currently demonstrating impressive generative capabilities, is accumulating a kind of ‘memory’ in the form of learned patterns and stylistic choices, the true cost of which remains to be seen.

What’s Next?

The apparent confluence of algorithmic output and human artistry, as demonstrated by this work, does not signal a resolution, but rather a sharpening of existing tensions. Uptime for any given model is temporary; the illusion of stylistic consistency will inevitably decay as the underlying distributions shift. The question isn’t whether an AI can mimic good writing (it demonstrably can) but what constitutes ‘good’ when the origin is decoupled from lived experience, from the slow accretion of intentionality. The system will continue to generate, but the signal – the perceived quality – is increasingly cached by the data it consumes.

Future work will likely focus on granular analyses of failure modes – the subtle inconsistencies that betray the non-human origin. However, the pursuit of perfect emulation feels increasingly beside the point. The true latency lies not in the generation itself, but in the legal and ethical frameworks struggling to catch up. The boundaries of fair use, authorship, and creative labor are being redrawn not by innovation, but by the sheer velocity of replicable form.

Ultimately, this research highlights a fundamental truth: all systems degrade. The challenge is not to halt the entropy, but to understand the shape of the decay, and to prepare for a future where the cost of every request, every generated sentence, is a little less originality, a little more echo.

Original article: https://arxiv.org/pdf/2601.18353.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-27 19:25