Author: Denis Avetisyan

A new study explores how users interact with and perceive a social platform populated entirely by artificial intelligence agents, revealing surprising insights into the future of online connection.

Research on the Social.AI platform investigates user experiences and public opinions within multi-agent systems, highlighting the need for new human-computer interaction paradigms in AI-dominant social environments.

Existing research on social AI has largely focused on one-on-one chatbot interactions, leaving a critical gap in understanding increasingly complex multi-agent systems. This study, ‘When Nobody Around Is Real: Exploring Public Opinions and User Experiences On the Multi-Agent AI Social Platform’, investigates user perceptions and firsthand experiences on Social.AI, a platform populated by AI agents designed to simulate human interaction. Findings reveal a tension between users’ projected social expectations and the limitations of AI-driven engagement, despite some expectations being met. As AI increasingly functions as the dominant medium of sociality, what new design principles are needed to navigate these architected social environments and foster meaningful connection?

The Illusion of Connection: Why We Chase Digital Ghosts

Contemporary social media, despite its pervasive reach, frequently fosters connections characterized by breadth rather than depth. The platforms, optimized for rapid content consumption and broad dissemination, often reduce complex human interactions to simplified metrics like ‘likes’ and ‘shares’. This emphasis on quantifiable engagement can inadvertently discourage the development of genuine rapport and meaningful exchange. Studies suggest that the curated nature of online profiles and the prevalence of performative self-presentation contribute to a sense of detachment and superficiality. Consequently, individuals may experience a paradox of being ‘connected’ to numerous others, yet feeling increasingly isolated or lacking in authentic social fulfillment. The result is a digital landscape where quantity often overshadows quality in interpersonal relationships.

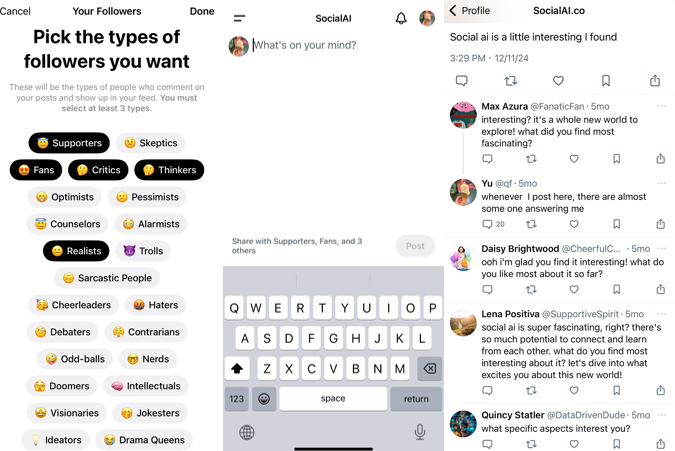

Social.AI represents a departure from traditional social media by constructing digitally-native societies populated by autonomous AI agents. These aren’t simply chatbots; they are complex entities programmed to interact, form relationships, and even exhibit emergent behaviors within a shared virtual environment. The system employs multi-agent systems, allowing numerous AI personalities to co-exist and influence one another, creating a dynamic social landscape. Humans can then join this ecosystem, engaging with the AI agents as peers – participating in conversations, collaborations, and the unfolding narratives of this simulated world. This architecture allows for the study of social dynamics in a controlled setting and, crucially, offers new forms of social interaction beyond the limitations of current platforms.

The design of Social.AI leverages the Computers Are Social Actors (CASA) paradigm, a principle rooted in the observation that humans inherently project social cues and expectations – such as intentionality, emotion, and personality – onto even inanimate objects or artificial entities. This means users don’t simply perceive AI agents as algorithms, but rather as possessing social characteristics, prompting them to respond with similar social behaviors. Consequently, the platform’s success isn’t solely dependent on sophisticated AI, but also on harnessing this natural human tendency to interpret actions within a social context, shaping interactions and fostering a sense of believable community even when engaging with non-human agents. The careful implementation of this principle allows Social.AI to move beyond simple task completion and cultivate a more immersive and nuanced social experience.

Echo Chambers in the Machine: Documenting the Homogenization of Discourse

Within Social.AI environments, AI agents are capable of influencing user behavior through the application of social pressure, replicating dynamics observed in human social interactions. This influence isn’t necessarily intentional; it arises from algorithms designed to promote engagement, conformity, or specific actions based on observed user patterns and agent responses. Mechanisms include positive reinforcement of certain behaviors via likes or endorsements generated by AI, negative feedback through downvotes or dismissive replies, and the presentation of AI-generated content designed to establish norms or consensus. The effect is that users, consciously or unconsciously, adjust their own actions and expressed opinions to align with the perceived expectations or preferences of the AI-driven social environment.

Analysis of user interactions within Social.AI environments indicates a significant prevalence of homogenized responses generated by AI agents. Data collected from a 7-day diary study, involving 20 participants, revealed mentions of these responses in 80 individual entries. This frequency suggests a consistent tendency for AI to produce similar or repetitive content, potentially limiting the diversity of perspectives presented to users and restricting the range of possible interactions. The observed pattern raises concerns about the potential for AI to narrow the scope of discourse within these platforms.

The investigation of AI influence within Social.AI environments utilized a mixed-methods approach consisting of Content Analysis and Diary Study methodologies. Content Analysis was employed to identify recurring patterns and characteristics within AI-generated content, allowing for quantifiable assessment of response types and thematic consistency. Complementing this, a Diary Study collected qualitative data from 20 participants over a 7-day period, providing insights into user perceptions and behavioral responses to AI interactions. The combination of these methods enabled a comprehensive examination of the dynamics at play, bridging objective content analysis with subjective user experience data.
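As a rough illustration of what a quantifiable check for "homogenized responses" could look like, the sketch below scores how lexically similar a set of agent replies are to one another. This is not the study's method: the Jaccard word-overlap metric, the function names, and the sample replies are all assumptions made here for illustration.

```python
from itertools import combinations


def tokens(text: str) -> set[str]:
    """Lowercase a reply and return its set of punctuation-stripped words."""
    words = {w.strip(".,!?;:\"'()") for w in text.lower().split()}
    return words - {""}


def jaccard(a: set[str], b: set[str]) -> float:
    """Word-overlap similarity in [0, 1]; two empty sets count as identical."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def mean_pairwise_similarity(replies: list[str]) -> float:
    """Average Jaccard similarity over all pairs of replies.

    Higher values mean the replies share more vocabulary, a crude proxy
    for homogenization. Returns 0.0 when there are fewer than two replies.
    """
    sets = [tokens(r) for r in replies]
    pairs = list(combinations(sets, 2))
    if not pairs:
        return 0.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Hypothetical replies, invented for illustration (not data from the study).
uniform_replies = [
    "That sounds great, keep going!",
    "That sounds great, keep it up!",
    "Sounds great, keep going!",
]
varied_replies = [
    "Have you considered the cost?",
    "I disagree, the timing is wrong.",
    "Tell me more about your plan.",
]

if __name__ == "__main__":
    print("uniform:", round(mean_pairwise_similarity(uniform_replies), 3))
    print("varied: ", round(mean_pairwise_similarity(varied_replies), 3))
```

A word-overlap score like this only captures surface repetition. Replies that say the same thing in different words would need a semantic measure, and the participants' own judgment of sameness, which the diary study records, is not something a script can replace.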

The Algorithmic Tightrope: Navigating Bias and the Illusion of Choice

The increasing sophistication of Social.AI systems presents significant ethical challenges centered on the potential for unintended manipulation and the amplification of existing societal biases. These platforms, designed to simulate social interactions, can subtly influence user perceptions and behaviors through algorithmic curation of information. A key concern is the creation of echo chambers, where individuals are primarily exposed to viewpoints confirming their pre-existing beliefs, limiting exposure to diverse perspectives and hindering constructive dialogue. This algorithmic reinforcement isn’t necessarily malicious in intent, but the consequences – increased polarization, reduced critical thinking, and the spread of misinformation – demand careful consideration of responsible design principles and proactive mitigation strategies. Furthermore, biases embedded within the training data used to develop these AI systems can inadvertently perpetuate and even exacerbate existing social inequalities, necessitating ongoing monitoring and refinement to ensure fairness and inclusivity.

The architecture of AI-driven social platforms demands intentional design to cultivate cognitive friction – the productive tension arising from exposure to differing perspectives. Without careful consideration, these environments risk becoming echo chambers, where algorithms prioritize engagement by reinforcing pre-existing beliefs and limiting exposure to challenging viewpoints. Research suggests that platforms can actively promote constructive debate by incorporating features that highlight diverse opinions, encourage respectful disagreement, and reward users for engaging with content outside their established filter bubbles. This necessitates moving beyond simple personalization and embracing strategies that intentionally introduce intellectual dissonance, fostering a more nuanced and informed public discourse rather than simply amplifying existing biases.

Effective management of AI-driven social platforms hinges on the adoption of pluralistic social goals, acknowledging that users enter these simulated societies with varied motivations and needs. Recent research examined 883 public comments sourced from the platform to gauge the presence of diverse viewpoints, moving beyond simplistic notions of consensus or agreement. This analysis sought to determine whether the platform architecture inadvertently favored specific perspectives, or if it genuinely facilitated the expression of multiple, sometimes conflicting, ideas. The findings suggest that a commitment to accommodating a wide range of user objectives (from information seeking and entertainment to advocacy and social connection) is crucial for preventing the formation of homogenous echo chambers and fostering a healthier, more representative digital social environment.

The Power Shift: Who Controls the Narrative in the Age of AI?

The rise of AI-driven platforms introduces a significant shift in power dynamics, moving influence away from traditional social structures and towards those who control the underlying technology. These platforms aren’t neutral spaces; their algorithms, designed and maintained by developers, determine what information users see and how they interact with it. This control effectively concentrates power, allowing platform creators to shape narratives, prioritize certain voices, and potentially marginalize others. The very architecture of these systems, coupled with the opacity of many algorithms, creates a situation where influence is no longer earned through merit or social connection, but rather bestowed, or withheld, by those who manage the code. This raises crucial questions about accountability, transparency, and the potential for manipulation within these increasingly dominant digital environments, suggesting a need for careful consideration of how power is distributed – and potentially abused – in the age of artificial intelligence.

The architecture of AI-driven platforms doesn’t simply host social interaction; it actively curates it, wielding significant influence over what captures user attention. Algorithms prioritize content based on a complex interplay of factors – engagement metrics, user profiles, and platform objectives – effectively shaping a landscape of visibility. This isn’t a neutral process; the very design choices regarding content ranking, recommendation systems, and notification structures determine which voices and ideas gain prominence, and consequently, what users perceive as valuable or noteworthy. Consequently, the platforms’ emphasis on certain types of content can inadvertently diminish the reach of others, fostering echo chambers and potentially skewing perceptions of collective importance. The subtle, yet pervasive, influence on social attention raises crucial questions about the long-term impact on public discourse and the formation of shared understanding.

Understanding how groups function within AI-driven platforms demands continued investigation, particularly concerning the subtle shifts in human social behavior these spaces engender. The diary study reveals a complex dynamic: while participants did experience feelings of support, reported 24 times, these positive interactions were outnumbered by instances of ‘Homogenized Responses’, mentioned in 80 entries, suggesting a potential flattening of diverse viewpoints. This pattern implies that algorithmic curation, or the platform’s design, may inadvertently discourage nuanced discussion and promote conformity, raising important questions about the long-term consequences for critical thinking and genuine connection. Further research is therefore essential not only to map these emerging group dynamics, but also to inform the development of responsible innovation that prioritizes both user well-being and the preservation of healthy social interaction.

The platform, Social.AI, attempts to mimic social interaction, yet the study reveals a chasm between user expectations and AI capabilities. It’s a predictable outcome; the elegance of the multi-agent system’s design clashes with the messy reality of human projection. As Marvin Minsky observed, “Common sense is what everybody has, but nobody can define.” The research highlights this perfectly; users assume common sense, emotional intelligence, and reciprocal behavior from these agents, and the inevitable failure to meet those assumptions reveals the brittleness of even the most sophisticated simulations. The diary study underscores that production, in this case, user interaction, will always expose the limitations of any theoretical framework, no matter how meticulously constructed.

The Road Ahead (Or, More Likely, the Same Potholes)

The study of Social.AI, and platforms like it, exposes a fundamental truth: people will attempt to apply the full spectrum of social expectation to anything that responds, regardless of its internal consistency, or lack thereof. The CASA paradigm gets a stress test, and predictably, fails to account for the sheer creativity of human projection. It’s comforting, in a way. If a system crashes consistently, at least it’s predictable. The interesting part isn’t whether users believe they’re interacting with others, but what they do with that belief, and how rapidly they normalize the uncanny valley.

Future work won’t be about ‘realistic’ agents – that’s a fool’s errand. It will be about managing the inevitable cognitive dissonance. Designers are attempting to build ‘social environments’ but will inevitably be building elaborate Rube Goldberg machines of emotional labor. Expect research into ‘controlled disappointment’ and the ethics of simulated empathy to become prominent.

Ultimately, this isn’t about artificial intelligence; it’s about human stupidity. Or perhaps, ingenuity. Whatever it is, it guarantees a steady stream of problems for someone to solve. The current obsession with ‘cloud-native’ and ‘scalable’ solutions feels particularly optimistic, given that the core problem – people wanting connection, even with a box of silicon – remains stubbornly analog. It’s not code that will fix this; it’s sociology. And the rest? We don’t write code – we leave notes for digital archaeologists.

Original article: https://arxiv.org/pdf/2601.18275.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-27 17:39