Author: Denis Avetisyan

A new framework, ReTracing, explores how generative AI systems encode and perpetuate biases through the choreography of human, robotic, and virtual interactions.

ReTracing investigates algorithmic bias within motion synthesis by analyzing the interplay between bodies, machines, and generative AI systems.

The increasing ubiquity of generative artificial intelligence raises critical questions about the encoding of cultural assumptions within automated systems. This paper introduces ‘ReTracing: An Archaeological Approach Through Body, Machine, and Generative Systems’, a multi-agent performance investigating how AI shapes movement through an embodied archaeological lens. By choreographing interactions between a human, a quadruped robot, and AI-generated prompts derived from science fiction, we reveal how biases are embedded within seemingly neutral choreographic guides. Ultimately, ReTracing constructs a digital archive of motion traces, prompting us to consider what it means to navigate a world increasingly populated by AI entities that move, think, and leave their own indelible marks.

The Eloquence of Motion: Bridging Literature and Artificial Embodiment

Embodied artificial intelligence still struggles to replicate the subtleties of human movement and interaction, and robotic actions often appear stilted or unnatural. This limitation stems from a reliance on purely kinematic or functional goals, which neglects the cultural context and lived experience that inform human motion. Many systems optimize how a movement is executed, such as its efficiency or speed, rather than why it is performed, overlooking the emotional, social, and historical layers embedded in even the simplest gestures. The resulting behaviors may be technically proficient yet lack the expressiveness and authenticity of human movement, which hinders their integration into human-centric environments and applications.

The ReTracing framework takes a different approach to embodied artificial intelligence by linking movement, both human and robotic, directly to the descriptive power of literature. Instead of abstract parameters, the system uses literary prompts (passages evoking specific actions, emotions, or atmospheres) as the basis for generating and analyzing motion. This makes it possible to explore how nuanced human experience manifests in physical form, and how robotic systems replicate or creatively reinterpret those forms. By bridging the traditionally separate domains of artistic expression, scientific inquiry, and technological development, ReTracing creates an interdisciplinary setting for studying the relationship between narrative, movement, and intelligence.

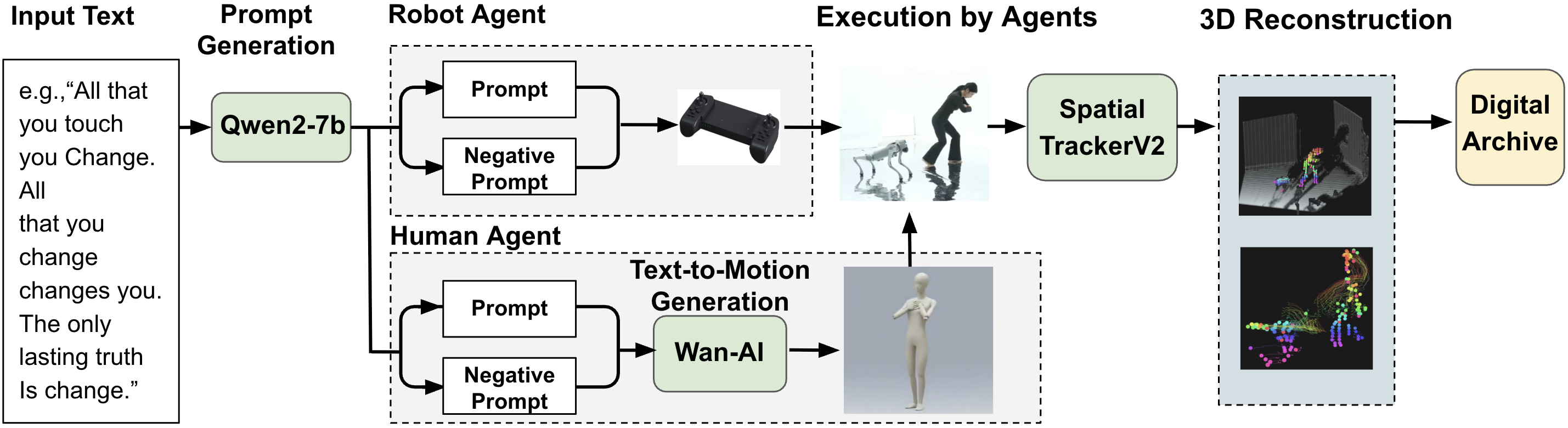

From Text to Embodied Form: A Generative Pipeline

ReTracing’s generative process starts with a large language model from the Qwen-2.5 family, which produces the text prompts that guide the choreography. Generation uses a sampling temperature of 0.7, a parameter that controls the randomness of the output: lower temperatures yield more predictable, conservative text, while higher temperatures increase diversity at the risk of reduced coherence. The value of 0.7 is a compromise, aiming for varied yet plausible movement descriptions that stay logically consistent as choreography. This balance matters because the same prompts must yield both realistic human motion and workable instructions for robotic agents.
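To make the role of temperature concrete, here is a minimal sketch of temperature-scaled sampling over a handful of made-up next-token scores. It is not Qwen-2.5 code or any library's API: the logits, the function names, and the extra temperatures compared are illustrative assumptions; only the value 0.7 comes from the paper.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities after dividing them by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(logits, temperature=0.7, rng=random):
    """Draw one candidate index from the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


# Made-up scores for three candidate next tokens (hypothetical values).
logits = [2.0, 1.0, 0.5]
for t in (0.3, 0.7, 1.5):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
print(sample_token(logits, temperature=0.7, rng=random.Random(42)))
```

Dividing the logits by a temperature below 1 sharpens the distribution toward the top candidate, while values above 1 flatten it; 0.7 sits on the sharpening side without collapsing into always picking the single most likely token.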

Following prompt generation, diffusion-based models synthesize 3D human pose sequences. These models start from random noise and iteratively denoise it, guided by the text prompt, until a realistic and coherent movement emerges. The output is not limited to human representation: the generated pose sequences can also be used to drive robotic agents, so the same choreography can be performed by virtual human performers and physical robots. This enables synchronized, complex movement across different embodiments, with applications in animation, virtual reality, and robotic dance.
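The iterative refinement can be illustrated with the standard DDPM reverse update. This is a sketch, not ReTracing's model: the summary does not say which sampler ReTracing uses, the linear noise schedule and step count are common defaults assumed for illustration, and `oracle_denoiser` is a hypothetical stand-in that already knows the clean pose, where a real system would use a trained network conditioned on the text prompt.

```python
import math
import random


def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t (an assumed linear schedule, a common DDPM default)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def ddpm_sample(denoiser, dim, num_steps=50, seed=0):
    """Standard DDPM reverse process over a flat vector of pose coordinates.

    denoiser(x_t, t, alpha_bar_t) returns predicted noise; in practice this is a
    neural network conditioned on the text prompt.
    """
    rng = random.Random(seed)
    betas = linear_betas(num_steps)
    alphas = [1.0 - b for b in betas]
    alpha_bars, running = [], 1.0
    for a in alphas:
        running *= a
        alpha_bars.append(running)

    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # start from Gaussian noise
    for t in reversed(range(num_steps)):
        eps_hat = denoiser(x, t, alpha_bars[t])
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        mean = [(xi - coef * ei) / math.sqrt(alphas[t]) for xi, ei in zip(x, eps_hat)]
        if t > 0:
            sigma = math.sqrt(betas[t])  # simple variance choice: sigma_t^2 = beta_t
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean  # no noise is added on the final step
    return x


# Hypothetical clean pose: two joints, (x, y, z) each, flattened.
target_pose = [0.0, 1.0, 0.0, 0.2, 1.5, 0.1]


def oracle_denoiser(x_t, t, alpha_bar_t):
    """Stand-in for a trained model: returns the exact noise implied by
    x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * eps, using the known target."""
    s, n = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    return [(xi - s * x0) / n for xi, x0 in zip(x_t, target_pose)]


pose = ddpm_sample(oracle_denoiser, dim=len(target_pose))
print([round(v, 4) for v in pose])
```

Because the oracle predicts the noise exactly, the last step recovers the target pose; with a learned, prompt-conditioned denoiser the output would instead be a new sample shaped by the text. A full pose sequence is simply a longer coordinate vector.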

Vision-Language-Action (VLA) models refine robotic movement by mapping visual input and natural-language instructions to predicted actions, letting robots interpret desired behaviors expressed as commands or observed in demonstrations. Deep Reinforcement Learning (DRL) then optimizes these actions by trial and error, maximizing a reward signal that scores both accuracy (adherence to the intended task) and expressiveness (qualities such as smoothness and naturalness). Together, these stages let robotic agents execute movements that are both precise and aesthetically pleasing, which supports human-robot interaction and performance in complex scenarios.
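A reward that trades off accuracy against expressiveness might look like the sketch below. The specific terms are assumptions, not the paper's reward: accuracy is taken as mean distance to a reference trajectory, smoothness as mean squared jerk (the third finite difference of position), and the weights `w_acc` and `w_smooth` are hypothetical values chosen so the two terms are on comparable scales for this toy example. It scores a whole trajectory at once, whereas a DRL setup would typically hand out per-step rewards.

```python
import math


def tracking_error(trajectory, reference):
    """Mean Euclidean distance between executed and intended positions per timestep."""
    return sum(math.dist(p, q) for p, q in zip(trajectory, reference)) / len(reference)


def mean_squared_jerk(trajectory, dt):
    """Average squared magnitude of the third finite difference (jerk) of position."""
    jerks = []
    for i in range(len(trajectory) - 3):
        p0, p1, p2, p3 = trajectory[i:i + 4]
        # x[i+3] - 3 x[i+2] + 3 x[i+1] - x[i], divided by dt^3, per coordinate
        j = [(d - 3 * c + 3 * b - a) / dt ** 3 for a, b, c, d in zip(p0, p1, p2, p3)]
        jerks.append(sum(v * v for v in j))
    return sum(jerks) / len(jerks) if jerks else 0.0


def motion_reward(trajectory, reference, dt=0.05, w_acc=1.0, w_smooth=1e-4):
    """Hypothetical reward: higher when the motion follows the reference and is smooth."""
    accuracy_penalty = w_acc * tracking_error(trajectory, reference)
    smoothness_penalty = w_smooth * mean_squared_jerk(trajectory, dt)
    return -(accuracy_penalty + smoothness_penalty)


# Toy reference: a straight line along x, sampled every dt seconds.
reference = [(0.1 * i, 0.0, 0.0) for i in range(20)]
smooth = list(reference)
jittery = [(x, 0.02 * (-1) ** i, z) for i, (x, y, z) in enumerate(reference)]
print(motion_reward(smooth, reference), motion_reward(jittery, reference))
```

The jittery trajectory stays within 2 cm of the reference, yet its alternating offsets produce large jerk, so it scores far lower than the smooth one.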

Decoding Movement: Capturing and Analyzing Embodied Data

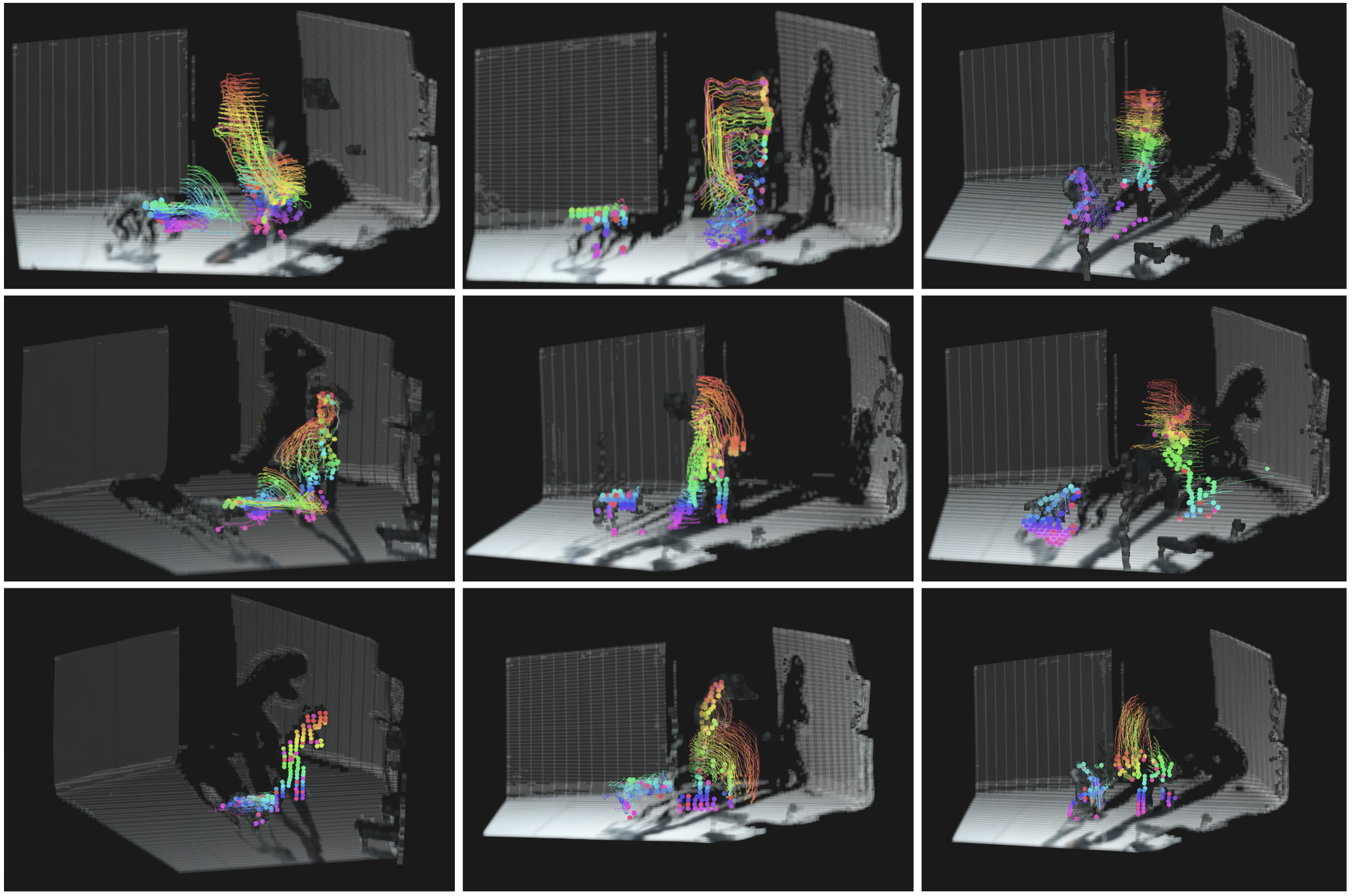

Monocular 3D point tracking models reconstruct, from single-camera video, the 3D positions of key points on both human and robotic subjects over time. They identify and track features from frame to frame and infer depth and spatial location without stereo cameras or depth sensors. The resulting data, a time series of 3D coordinates for multiple points, forms the core of the 3D Motion Trace Dataset and provides a standardized format for representing and analyzing movement kinematics. This reconstruction captures nuanced movement and enables quantitative comparison across motion sequences and subjects, including humans and robots performing similar tasks.
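The geometry behind lifting a 2D track into 3D can be sketched with a pinhole camera model. This assumes a tracker has already produced per-frame pixel coordinates and a depth estimate for each point; the intrinsics `(fx, fy, cx, cy)` and the function names are hypothetical, and many monocular 3D trackers output 3D positions directly rather than exposing this step.

```python
def backproject(u, v, depth, fx, fy, cx, cy):
    """Lift pixel (u, v) with an estimated depth to camera-frame 3D coordinates."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def lift_tracks(tracks_2d, depths, intrinsics):
    """tracks_2d[t][k] = (u, v) for point k at frame t; depths[t][k] is its depth.

    Returns a T x K list of (x, y, z) tuples, i.e. a time series of 3D points.
    """
    fx, fy, cx, cy = intrinsics
    return [
        [backproject(u, v, d, fx, fy, cx, cy) for (u, v), d in zip(frame, frame_depths)]
        for frame, frame_depths in zip(tracks_2d, depths)
    ]


# Hypothetical camera: 500 px focal length, principal point at the image centre.
intrinsics = (500.0, 500.0, 320.0, 240.0)
tracks_2d = [[(320.0, 240.0), (420.0, 240.0)]]  # one frame, two tracked points
depths = [[2.0, 2.0]]                          # metres, assumed from the tracker
trace = lift_tracks(tracks_2d, depths, intrinsics)
print(trace)
```

A point at the principal point lands on the optical axis, and horizontal pixel offsets scale with depth divided by focal length.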

The 3D Motion Trace Dataset functions as a comprehensive digital archive of human and robotic movements, captured and reconstructed in three-dimensional space. This archive facilitates comparative analysis of movement patterns across different subjects and actions, allowing researchers to identify similarities, differences, and anomalies. Critically, the dataset provides ground truth data for training and evaluating generative models designed to synthesize realistic and diverse movements; discrepancies between generated and archived movements provide quantifiable feedback for model refinement, enabling iterative improvements in the accuracy and naturalness of synthesized motion. The availability of a standardized, large-scale archive also promotes reproducibility and benchmarking within the field of motion analysis and generation.
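One standard way to quantify the discrepancy between a generated and an archived trace is mean per-joint position error (MPJPE), widely used in pose estimation. Whether ReTracing uses this metric is an assumption; the sketch only illustrates the kind of feedback signal such an archive makes possible, with traces stored as frames of (x, y, z) points.

```python
import math


def mpjpe(generated, archived):
    """Mean per-joint position error between two traces shaped T frames x K points x 3."""
    if len(generated) != len(archived):
        raise ValueError("traces must have the same number of frames")
    errors = []
    for gen_frame, arc_frame in zip(generated, archived):
        if len(gen_frame) != len(arc_frame):
            raise ValueError("frames must track the same points")
        errors.extend(math.dist(p, q) for p, q in zip(gen_frame, arc_frame))
    return sum(errors) / len(errors)


# Toy archived trace: two frames, two points each.
archived = [[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)], [(0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]]
# A "generated" trace that is the archived one shifted by one unit along x.
shifted = [[(x + 1.0, y, z) for x, y, z in frame] for frame in archived]
print(mpjpe(archived, archived), mpjpe(shifted, archived))
```

Lower is better; in a refinement loop, this error (or a variant after aligning root positions) would be tracked across model iterations.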

Prompt engineering is integral to the categorization of movements within the 3D Motion Trace Dataset because the specificity of prompts directly influences how the system classifies and responds to different actions. The dataset’s structure necessitates detailed prompts to define movement types; variations in phrasing or the inclusion of specific attributes can yield drastically different categorizations. This process exposes inherent biases and limitations within the underlying algorithms – effectively revealing algorithmic “permissions” regarding recognized movements and “prohibitions” relating to actions the system fails to interpret or categorizes incorrectly. Analysis of these prompt-response relationships allows for the identification of systematic errors and a deeper understanding of the model’s internal constraints and learned representations.
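The prompt-response analysis can be organized as an audit over paraphrased prompts. In this sketch, `toy_classify` is a hypothetical keyword matcher standing in for the real model, and treating a movement as a “prohibition” when at least half of its prompt variants go unrecognized is an illustrative threshold, not a rule from the paper.

```python
from collections import Counter

UNRECOGNIZED = "unrecognized"


def toy_classify(prompt):
    """Hypothetical keyword classifier standing in for the real model."""
    text = prompt.lower()
    if "walk" in text:
        return "locomotion"
    if "wave" in text:
        return "gesture"
    return UNRECOGNIZED


def audit_prompt_variants(variants_by_movement, classify):
    """Classify every prompt variant per movement.

    Returns {movement: (most_common_label, agreement, unrecognized_rate)}.
    """
    report = {}
    for movement, variants in variants_by_movement.items():
        labels = [classify(prompt) for prompt in variants]
        counts = Counter(labels)
        top_label, top_count = counts.most_common(1)[0]
        report[movement] = (top_label, top_count / len(labels), counts[UNRECOGNIZED] / len(labels))
    return report


def split_permissions(report, threshold=0.5):
    """Movements mostly recognized ("permissions") vs. mostly missed ("prohibitions")."""
    permitted = sorted(m for m, (_, _, miss) in report.items() if miss < threshold)
    prohibited = sorted(m for m, (_, _, miss) in report.items() if miss >= threshold)
    return permitted, prohibited


variants = {
    "walking": ["walk forward", "stride ahead slowly", "Walk across the room"],
    "crawling": ["crawl on all fours", "drag yourself along the floor"],
}
report = audit_prompt_variants(variants, toy_classify)
print(report)
print(split_permissions(report))
```

Even in this toy, a paraphrase ("stride ahead slowly") drops out of the recognized category, which is the kind of phrasing sensitivity the paragraph above describes.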

The Echo of Bias: Unveiling Algorithmic Control and Bodily Representation

Generative artificial intelligence models, while capable of creating remarkably realistic human movements, are susceptible to perpetuating societal biases present within their training data. The ReTracing framework illuminates how these models don’t simply generate movement, but rather reproduce patterns learned from existing datasets – datasets often reflecting historical and ongoing inequalities. Consequently, algorithms trained on biased data can inadvertently reinforce stereotypes in the movements they create, leading to algorithmic bias where certain demographics are consistently depicted with limited or prejudiced motion profiles. This isn’t a matter of flawed code, but a systemic issue where ingrained social biases become embedded within the very architecture of AI, subtly shaping perceptions of bodies and movement through technology.
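One hypothetical way to make “limited motion profiles” measurable is to compare the diversity of motions generated for different prompt groups, for example by the mean pairwise distance between samples. This audit is an illustrative assumption rather than a method described in the paper; motions are flattened to coordinate vectors, and the group names and toy values are invented.

```python
import math
from itertools import combinations


def mean_pairwise_distance(samples):
    """Average Euclidean distance between every pair of generated motions (flattened)."""
    pairs = list(combinations(samples, 2))
    if not pairs:
        return 0.0
    return sum(math.dist(a, b) for a, b in pairs) / len(pairs)


def diversity_by_group(samples_by_group):
    """Diversity score per prompt group; a markedly lower score hints at a narrower motion profile."""
    return {group: mean_pairwise_distance(samples) for group, samples in samples_by_group.items()}


# Invented toy data: three generated "motions" per prompt group, as 2D vectors.
samples_by_group = {
    "group_a": [(0.0, 0.0), (3.0, 4.0), (0.0, 8.0)],
    "group_b": [(1.0, 1.0), (1.0, 1.0), (1.0, 2.0)],
}
print(diversity_by_group(samples_by_group))
```

A group whose score is consistently much lower than others would be a candidate for closer inspection, not proof of bias on its own.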

Algorithmic bias within generative AI is far more than a simple technical error; it represents a contemporary manifestation of historical power dynamics related to the body. These systems, trained on existing datasets, inadvertently encode societal biases concerning race, gender, and identity directly into the generated movements and representations. This isn’t a neutral process; it actively inscribes these biases into the very architecture of the AI, effectively exercising a form of bodily disciplinary power. The resulting outputs aren’t merely inaccurate; they reinforce and potentially amplify existing stereotypes, suggesting that generative AI can function as a tool for the normalization – and even perpetuation – of prejudiced perspectives on the human form and its capabilities. Consequently, analyzing these biases requires a critical examination of how power structures are embedded within these seemingly objective technological systems.

An emerging field, the archaeology of AI, posits that generative systems are not neutral tools, but rather complex artifacts embodying specific, often unacknowledged, logics and power structures. This practice moves beyond simply identifying algorithmic bias to meticulously deconstructing how those biases become embedded within the system’s architecture, tracing the lineage of training data, design choices, and underlying assumptions. By excavating these hidden layers, researchers can reveal the historical and societal forces shaping algorithmic outputs, ultimately challenging the notion of objective AI and fostering a more critical understanding of its impact on areas like movement generation and bodily representation. This deconstructive approach is essential for mitigating the reinforcement of harmful stereotypes and promoting more equitable and transparent AI systems.

The pursuit of ‘ReTracing’ echoes a fundamental principle of elegant design: revealing the underlying structure that dictates function. By meticulously choreographing interactions between human movement, robotic execution, and generative AI, this framework doesn’t simply create motion; it excavates the logic embedded within it. Fei-Fei Li aptly observes, “AI is not about replacing humans; it’s about augmenting and amplifying human potential.” This resonates with ‘ReTracing’, since the system is not intended to replicate movement perfectly but to expose how algorithmic biases shape and control it, offering a path toward more equitable and transparent human-robot interaction. The beauty of the approach lies in its ability to make the invisible mechanisms of control visible, akin to uncovering the elegant simplicity of a well-designed system.

The Echo in the Machine

The exercise of ‘ReTracing’ – of meticulously charting the flow of influence between body, machine, and algorithm – reveals, predictably, that control isn’t a singular imposition, but a distributed, often obscured, negotiation. The framework doesn’t solve algorithmic bias; rather, it exposes the choreography of bias, the subtle ways in which past movements, encoded in data, become the constraints on future action. This isn’t a flaw to be corrected, but a fundamental characteristic of systems built on the echoes of prior states.

Future work will likely necessitate a broadening of the interpretive lens. The current emphasis on motion – while potent – skirts the issue of intentionality. Can a framework designed to reveal the logic of control also account for the unpredictable flourishes of human (or, potentially, artificial) agency? The true challenge isn’t simply identifying bias, but understanding how systems can accommodate – or even learn from – deviations from the expected script.

One wonders if, in striving for ever-more-refined models of movement, the field risks a peculiar form of self-fulfilling prophecy. Each iteration of ‘ReTracing’ – each meticulous reconstruction of the past – inevitably shapes the possibilities of the future. The elegance of a system, after all, isn’t merely aesthetic; it’s a measure of how well it conceals its own limitations.

Original article: https://arxiv.org/pdf/2602.11242.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-13 13:39