Author: Denis Avetisyan

A new framework proposes moving beyond simple compliance to foster genuine trust in artificial intelligence through a focus on relationships and shared values.

![The methodology integrates trust-by-design principles directly into the agile AI development lifecycle, ensuring inherent reliability and verifiability throughout iterative refinement, a process formalized by establishing mathematically provable invariants $\mathbb{P}(\text{outcome} \mid \text{model}, \text{data}) \ge \tau$ at each stage, where $\tau$ represents a predefined threshold for acceptable confidence.](https://arxiv.org/html/2601.22769v1/Figures/Trust_AI_Health.png)

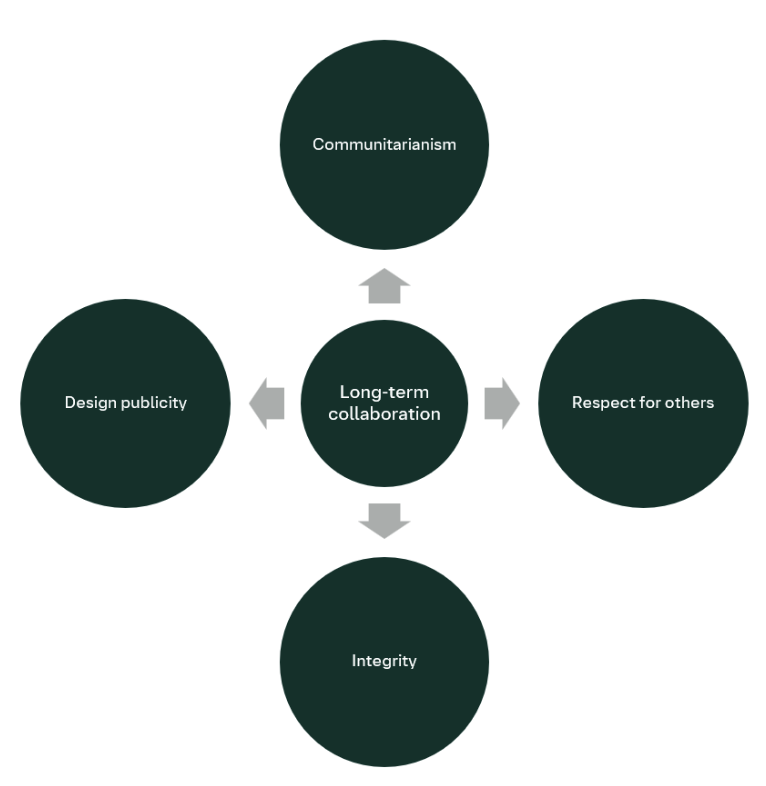

This review operationalizes trust in AI as a moral relationship grounded in relational ethics, Ubuntu philosophy, and principles of design publicity.

Current approaches to trustworthy AI often treat trust as a technical property to be engineered, overlooking its inherently relational and culturally embedded nature. This paper, ‘Beyond Abstract Compliance: Operationalising trust in AI as a moral relationship’, proposes a framework for fostering genuine trust in AI systems by grounding development in relational ethics, particularly drawing on the African philosophy of Ubuntu. By prioritizing long-term collaboration with communities and values like respect and integrity, AI design can move beyond mere compliance to build meaningful relationships. Can centering these principles unlock more equitable and context-sensitive AI that truly earns public confidence?

The Fragility of Trust: A Foundation for Responsible AI

Despite remarkable progress in artificial intelligence, public confidence remains surprisingly fragile, largely due to a pervasive sense of opacity and concerns regarding unfairness. Many advanced AI systems operate as ‘black boxes’, making it difficult – if not impossible – to understand the reasoning behind their decisions. This lack of transparency fuels skepticism, particularly when those decisions impact critical areas like loan applications, criminal justice, or healthcare. Moreover, biases embedded within training data can lead to discriminatory outcomes, reinforcing existing societal inequalities and eroding trust in the technology. These issues aren’t merely theoretical; documented instances of algorithmic bias have sparked public outcry and highlighted the urgent need for greater accountability and explainability in AI development. Consequently, overcoming this trust deficit is crucial not only for widespread AI adoption, but also for ensuring equitable and beneficial outcomes for all.

The relentless pursuit of increasingly accurate and efficient artificial intelligence often overshadows crucial ethical dimensions, fostering a significant trust deficit among potential users and the wider public. Contemporary AI development frequently centers on maximizing performance metrics – speed, precision, and scalability – with ethical considerations relegated to secondary importance, or addressed only as post-hoc mitigation strategies. This prioritization creates systems that, while technically impressive, can lack transparency, exhibit biases reflecting the data they were trained on, and operate in ways that are difficult to understand or predict. Consequently, individuals and communities may hesitate to adopt AI-driven solutions, fearing unintended consequences or unfair outcomes, ultimately hindering the responsible integration of this powerful technology into everyday life and limiting its potential for broad societal benefit.

The erosion of public trust in artificial intelligence doesn’t simply represent a public relations challenge; it actively constrains the technology’s capacity to deliver societal benefits. When individuals harbor skepticism about an AI’s fairness, accuracy, or intent, adoption rates decline, hindering progress in crucial fields like healthcare, education, and environmental sustainability. This reluctance isn’t irrational; anxieties surrounding algorithmic bias, data privacy, and job displacement are legitimate concerns that, if unaddressed, foster widespread apprehension. Consequently, the potential for AI to solve complex problems and enhance human capabilities remains largely untapped, while fears of automation and unchecked technological power continue to intensify, creating a cycle of distrust that demands proactive mitigation through transparency and ethical design.

The persistent fragility of public trust in artificial intelligence demands a fundamental restructuring of development practices, moving beyond solely prioritizing performance metrics. This paper advocates for a paradigm shift centered on proactive trustworthiness, achieved not through post-hoc explainability, but through the deliberate integration of long-term collaboration and broad community involvement throughout the entire AI lifecycle. Such an approach necessitates inclusive design processes, where diverse perspectives shape the very foundations of AI systems, ensuring they align with societal values and expectations. By fostering co-creation and shared ownership, developers can build AI that isn’t simply capable, but demonstrably reliable, fair, and ultimately, deserving of public confidence – unlocking the full potential of this transformative technology while mitigating its inherent risks.

Trust by Design: A Systemic Ethical Framework

‘Trust by Design’ implements a systematic process for integrating ethical assessments throughout each stage of AI development, from initial problem definition and data collection to model training, deployment, and ongoing monitoring. This methodology moves beyond post-hoc ethical reviews by requiring developers to proactively identify and mitigate potential harms, biases, and unintended consequences. Key components include establishing clear ethical guidelines, conducting regular impact assessments, incorporating diverse stakeholder feedback, and implementing mechanisms for accountability and redress. The framework emphasizes documentation of ethical considerations alongside technical specifications, ensuring a traceable audit trail and facilitating continuous improvement in AI ethics practices.
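The idea of documenting ethical considerations alongside technical specifications can be sketched as a small audit-trail structure. This is only an illustration under stated assumptions: the stage identifiers, the `EthicsEntry` fields, and the `AuditTrail` class are hypothetical names chosen here, not part of the framework described in the paper.

```python
import json
from dataclasses import dataclass, field, asdict

# Lifecycle stages named in the text; the identifiers are illustrative.
STAGES = [
    "problem_definition",
    "data_collection",
    "model_training",
    "deployment",
    "monitoring",
]


@dataclass
class EthicsEntry:
    """One documented ethical consideration (hypothetical schema)."""
    stage: str
    concern: str
    mitigation: str
    reviewed_by: list
    recorded_on: str  # ISO date string, e.g. "2026-02-03"


@dataclass
class AuditTrail:
    """Append-only list of entries, kept next to the technical spec."""
    entries: list = field(default_factory=list)

    def record(self, entry: EthicsEntry) -> None:
        if entry.stage not in STAGES:
            raise ValueError(f"unknown lifecycle stage: {entry.stage}")
        self.entries.append(entry)

    def uncovered_stages(self) -> list:
        """Stages that have no documented assessment yet."""
        covered = {e.stage for e in self.entries}
        return [s for s in STAGES if s not in covered]

    def to_json(self) -> str:
        """Serialize the trail so it can be versioned with the code."""
        return json.dumps([asdict(e) for e in self.entries], indent=2)
```

A team could call `uncovered_stages()` before a release review to see which stages still lack a documented assessment. What counts as an adequate assessment remains a human judgment that a structure like this can only record.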

Relational ethics, as applied to AI development, moves beyond a simple user-system trust model to emphasize a reciprocal relationship built on mutual responsibility. This framework posits that AI systems should not merely earn trust, but actively cultivate it through transparent operation and accountable performance. Bidirectional trust necessitates that AI systems are designed to recognize and respond to user trustworthiness, adapting behavior based on demonstrated reliability. Furthermore, responsibility is shared; developers are accountable for designing systems that facilitate ethical interaction, while users are responsible for engaging with those systems appropriately, fostering a collaborative and ethically sound AI ecosystem.

The Ubuntu philosophy, originating in Southern African cultures, posits a worldview centered on interconnectedness and the belief that a person is a person through other people. Applied to AI design, this translates to prioritizing the collective impact of AI systems and recognizing that AI does not operate in isolation. Equitable AI development, guided by Ubuntu, necessitates considering the needs and well-being of all stakeholders – including marginalized communities – and fostering AI systems that contribute to communal harmony rather than exacerbate existing inequalities. This approach emphasizes shared responsibility in the design, deployment, and ongoing evaluation of AI, moving beyond individualistic metrics to focus on the broader societal implications and ensuring AI benefits all members of the community.

The ‘Trust by Design’ framework centers on the development of AI systems that demonstrably respect human values through prioritized respect for persons and operational transparency. This is achieved by grounding trustworthy AI design in relational ethics, which emphasizes bidirectional accountability between users and systems, and fostering long-term collaborative relationships. Specifically, the framework seeks to move beyond purely technical solutions to ethical concerns, instead advocating for the integration of ethical considerations throughout the entire AI development lifecycle, from initial design to deployment and ongoing maintenance. This approach aims to build AI systems where trust is earned and maintained through consistent alignment with human expectations and demonstrable responsibility.

Practical Tools for Fostering Trustworthy AI Systems

Agile development methodologies, traditionally used in software engineering, are increasingly applied to AI system development to facilitate continuous improvement and ethical alignment. This iterative approach emphasizes short development cycles – sprints – allowing for frequent evaluation and modification of models based on feedback. Crucially, ethical considerations are not treated as a post-development add-on, but are integrated into each sprint, enabling developers to proactively address potential biases or unintended consequences. This continuous feedback loop, involving stakeholders and ethicists, ensures that the AI system is refined not only for performance but also for responsible and trustworthy behavior throughout its lifecycle. Regular testing and validation within each sprint allow for early detection and mitigation of issues, reducing the risk of deploying AI systems with harmful or unfair outcomes.
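One way to make ethical checks part of each sprint, rather than a post-development add-on, is a simple gate that a model must pass before the sprint closes. The sketch below is a hedged illustration: the choice of demographic parity gap as the fairness measure, the function names, and the default thresholds are all assumptions made for this example and are not prescribed by the paper.

```python
def selection_rate(predictions):
    """Fraction of positive (1) predictions in a list of 0/1 values."""
    return sum(predictions) / len(predictions)


def demographic_parity_gap(preds_by_group):
    """Largest difference in selection rate between any two groups."""
    rates = [selection_rate(p) for p in preds_by_group.values()]
    return max(rates) - min(rates)


def sprint_gate(accuracy, preds_by_group, min_accuracy=0.8, max_gap=0.1):
    """Return a list of failed checks; an empty list means the gate passes.

    Thresholds are illustrative defaults and would, in practice, be
    agreed with stakeholders rather than hard-coded by developers.
    """
    failures = []
    if accuracy < min_accuracy:
        failures.append(f"accuracy {accuracy:.2f} below {min_accuracy:.2f}")
    gap = demographic_parity_gap(preds_by_group)
    if gap > max_gap:
        failures.append(f"parity gap {gap:.2f} above {max_gap:.2f}")
    return failures
```

A single numeric gate cannot capture the relational notion of trust the paper argues for. It only gives each sprint a concrete, reviewable checkpoint that stakeholder feedback can tighten or replace.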

Data governance for AI models necessitates establishing policies and procedures to manage data throughout its lifecycle, encompassing acquisition, storage, processing, and eventual archival or deletion. This includes defining data quality standards – accuracy, completeness, consistency, and timeliness – and implementing mechanisms for data validation and error correction. Crucially, data governance must address data security concerns through access controls, encryption, and anonymization techniques to protect sensitive information and comply with relevant regulations such as GDPR and CCPA. Furthermore, robust data lineage tracking is essential to understand the origin and transformations applied to data, enabling reproducibility and facilitating the identification of potential biases or errors that could impact model performance and fairness.
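The quality checks and lineage tracking described above can be illustrated with a short sketch. The schema (`id`, `age`, `recorded_at`), the plausible age range, and the function names are hypothetical and chosen only for this example; a real governance process would define them from its own policies.

```python
import hashlib
import json

# Hypothetical schema for illustration only.
REQUIRED_FIELDS = ("id", "age", "recorded_at")


def quality_issues(records):
    """Report completeness, validity, and uniqueness problems per row."""
    issues = []
    seen_ids = set()
    for i, row in enumerate(records):
        missing = [f for f in REQUIRED_FIELDS if row.get(f) in (None, "")]
        if missing:
            issues.append(f"row {i}: missing {missing}")
        age = row.get("age")
        if isinstance(age, (int, float)) and not 0 <= age <= 120:
            issues.append(f"row {i}: age out of range")
        rid = row.get("id")
        if rid is not None:
            if rid in seen_ids:
                issues.append(f"row {i}: duplicate id")
            seen_ids.add(rid)
    return issues


def fingerprint(records):
    """Deterministic SHA-256 digest of a dataset snapshot."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def lineage_step(name, inputs, outputs):
    """Record which snapshot went into a transformation and what came out."""
    return {
        "step": name,
        "input_sha256": fingerprint(inputs),
        "output_sha256": fingerprint(outputs),
    }
```

Chaining `lineage_step` records, where each step's output digest matches the next step's input digest, gives a minimal way to trace a training set back to its source snapshot.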

Red Teaming, in the context of AI system evaluation, involves employing independent teams to simulate adversarial attacks and identify potential weaknesses before deployment. These teams actively probe the system for vulnerabilities, including biases in model predictions, susceptibility to data poisoning, and robustness against unexpected inputs. This process differs from standard quality assurance by specifically focusing on identifying how a system could fail, rather than simply verifying that it functions as intended. Findings from Red Teaming exercises are then used to refine the AI system, improve its security, and mitigate potential risks associated with biased or unreliable outputs, ultimately increasing user trust and minimizing harm.
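A very small probing harness shows the difference between verifying that a system works and looking for ways it fails. Everything here is a stand-in assumed for illustration: `toy_model` is a deliberately naive keyword classifier, and the three perturbations are hypothetical examples of what a red team might try, far simpler than a real exercise.

```python
def toy_model(text):
    """Stand-in classifier: labels text mentioning 'refund' as billing."""
    return "billing" if "refund" in text.lower() else "other"


# Hypothetical input perturbations a red team might apply.
PERTURBATIONS = {
    "upper": str.upper,
    "padded": lambda s: "  " + s + "  ",
    "leetspeak": lambda s: s.replace("e", "3"),
}


def probe(model, inputs):
    """Return (input, perturbation) pairs where the prediction changed."""
    findings = []
    for text in inputs:
        baseline = model(text)
        for name, perturb in PERTURBATIONS.items():
            if model(perturb(text)) != baseline:
                findings.append((text, name))
    return findings
```

Here the classifier survives changes in case and whitespace but flips its label under a simple character substitution. A test that only confirmed expected behavior on clean inputs would not have surfaced that weakness.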

AI Governance frameworks establish a systematic approach to managing the risks and benefits associated with artificial intelligence. These frameworks typically incorporate policies, procedures, and controls addressing areas such as data privacy, algorithmic bias, transparency, accountability, and security. Key components often include designated roles and responsibilities for AI development and deployment, risk assessment processes, mechanisms for monitoring and auditing AI systems, and procedures for addressing ethical concerns and ensuring compliance with relevant regulations, such as those pertaining to data protection and non-discrimination. Implementation varies by organization and industry, but commonly relies on standards such as the NIST AI Risk Management Framework or ISO/IEC 42001 to provide a structured methodology for responsible AI practices.

AI for the Common Good: Expanding Societal Impact

Communitarianism offers a crucial ethical framework for artificial intelligence, shifting the focus from individual optimization to collective well-being. This principle posits that AI systems should be designed and deployed with the explicit intention of benefiting all members of society, rather than exacerbating existing inequalities or serving the interests of a privileged few. Such an approach necessitates careful consideration of diverse needs and vulnerabilities, ensuring equitable access to AI-driven resources and opportunities. It requires proactively mitigating potential harms, such as algorithmic bias and job displacement, and prioritizing applications that address shared challenges like public health, environmental sustainability, and social justice. Ultimately, a communitarian vision for AI champions a future where technological advancement strengthens the social fabric and promotes a more inclusive and flourishing society for everyone.

Design publicity proposes a fundamental shift in how artificial intelligence systems are constructed and understood, advocating for transparency that extends beyond mere explainability. It posits that the very goals, underlying logic, and driving motivations of an AI should be openly defined and readily accessible – not hidden within complex algorithms or proprietary code. This isn’t simply about understanding how a decision was reached, but why the system was designed to pursue that particular outcome in the first place. By making these foundational elements public, design publicity fosters genuine accountability, allowing individuals and communities to assess whether an AI’s objectives align with broader societal values and enabling meaningful oversight to prevent unintended consequences or biased outcomes. Ultimately, it aims to move beyond a reliance on technical metrics of performance and cultivate trust through demonstrable clarity and openness.

The consistent application of integrity is paramount throughout the lifecycle of artificial intelligence systems. This extends beyond simply avoiding malicious intent; it necessitates a holistic approach to development and deployment where ethical considerations are interwoven with technical functionality. A truly integral AI prioritizes honesty in data handling, ensuring transparency regarding limitations and potential biases. Furthermore, coherence across all stages – from initial design and data collection to algorithmic construction and real-world application – is crucial. Without this sustained ethical framework, AI risks eroding public trust and exacerbating existing societal inequalities, hindering its potential to deliver genuine, widespread benefit. The pursuit of technical advancement, therefore, must be inextricably linked to a commitment to consistent, honest, and ethically sound practices.

The transformative potential of artificial intelligence hinges not merely on technological advancement, but on a conscientious alignment with societal well-being. This work emphasizes that unlocking AI’s full capacity to address complex challenges – from healthcare disparities to climate change – demands a shift in focus beyond purely technical performance metrics. Genuine trust, the paper argues, is cultivated through the intentional application of principles like communitarianism, design publicity, and integrity, ensuring AI systems are demonstrably accountable, transparent in their objectives, and consistently ethical in operation. By prioritizing these values, developers can move beyond creating sophisticated tools and instead build AI that genuinely serves the common good, fostering broader acceptance and maximizing positive impact on the human condition.

The pursuit of trustworthy AI, as detailed in this exploration of relational ethics and the Ubuntu philosophy, demands a departure from mere technical compliance. It necessitates a system built on demonstrable integrity and sustained community collaboration. This echoes a remark attributed to Linus Torvalds: “Most programmers think that if their code ‘works’ they have done their job. But that’s not entirely true.” The article’s emphasis on ‘design publicity’, which opens an AI system’s goals, underlying logic, and motivations to inspection, reflects the same sentiment. A system that merely appears to function isn’t sufficient; the underlying logic must be verifiable and open to scrutiny, so that trust isn’t granted blindly but earned through demonstrable correctness and respect for communal values.

What Remains Invariant?

The proposition to ground trustworthy AI in relational ethics, specifically through the lens of Ubuntu, presents an interesting, if predictably human-centric, approach. The core challenge isn’t merely operationalising concepts like ‘respect’ or ‘integrity’ – algorithms are indifferent to such notions. Rather, it’s the assumption that long-term collaborative design, even with dedicated community engagement, can reliably constrain emergent behaviour. Let N approach infinity – what remains invariant? Not human intention, certainly. The system, however meticulously designed, will eventually encounter inputs and edge cases unforeseen by its creators, and its actions will be judged by outcomes, not initial ethical scaffolding.

The emphasis on ‘design publicity’ – making the AI’s reasoning transparent – feels almost quaint. Transparency, while desirable, does not equate to trustworthiness. A perfectly transparent system capable of demonstrably unethical actions remains, fundamentally, unethical. The true difficulty lies in formalizing a robust, mathematically verifiable notion of ‘communitarian benefit’ – a metric currently reliant on subjective interpretation. Until such formalization is achieved, the framework remains, at best, a well-intentioned heuristic.

Future research must address the inherent limitations of anchoring AI ethics in shifting human values. The pursuit of ‘trustworthy AI’ may, paradoxically, require a move away from explicitly human-centric models, towards systems grounded in demonstrably invariant principles – principles which, while potentially less intuitively ‘ethical’, are at least, provably, consistent. The goal isn’t to build AI that feels right, but AI that is correct, by some absolute, verifiable standard.

Original article: https://arxiv.org/pdf/2601.22769.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-03 04:36