Author: Denis Avetisyan

A new algorithm allows groups of autonomous agents to seamlessly switch between leading and herding behaviors for more efficient collective transport.

This review details an adaptive approach to collective transport that improves performance with heterogeneous agents by dynamically optimizing between leading and herding strategies.

Autonomous collective transport systems face a fundamental challenge: agents may respond differently to guidance, exhibiting both avoidance and following behaviors. This limitation motivates the work ‘Re-Solving the Shepherding Problem: Lead When Possible, Herd When Necessary’, which introduces an algorithm that adaptively switches between leading and herding strategies based on agent response. Through simulation, this mixed approach demonstrates robust transport performance across heterogeneous groups, outperforming algorithms reliant on single strategies. Could this dynamic adaptation not only improve control, but also mitigate habituation and unlock the full potential of robotic transport systems for a wider range of applications?

Decoding Collective Motion: The Challenge of Coordination

Orchestrating movement across multiple robotic agents is a core difficulty in robotics and control systems. The problem is not simply one of programming each unit individually. Agents must coordinate in a decentralized way, reacting to each other and to the environment without a central authority dictating every action. Scalability is a significant hurdle, because the cost of planning and execution grows rapidly as the number of agents increases. Real-world applications also demand adaptability: agents must navigate unpredictable scenarios, avoid collisions, and maintain formation in dynamic surroundings. Meeting these challenges requires algorithms and control strategies that can handle the complexity of multi-agent systems, enabling more efficient and robust collective behaviors in applications ranging from warehouse automation to search and rescue.

Conventional approaches to multi-agent coordination, frequently reliant on centralized planning or pre-defined trajectories, demonstrate limitations when applied to large-scale or fluctuating scenarios. These methods often encounter computational bottlenecks as the number of agents increases, hindering real-time responsiveness and efficient path planning. Furthermore, their rigidity makes them susceptible to disruptions from unexpected obstacles or changes in the environment, resulting in suboptimal transport performance and increased energy expenditure. The inability to dynamically adjust to unforeseen circumstances often leads to agents colliding, becoming blocked, or deviating from intended routes, highlighting the necessity for more robust and adaptive coordination strategies capable of handling the complexities of real-world applications.

Effective collective movement hinges on recognizing how individual agents respond to guidance. In the setting considered here, agents fall into two primary roles: ‘Evader Agents’ move away from the transporter guiding them, keeping their distance and heading for open space, while ‘Follower Agents’ are drawn toward it and move along with it. Each agent acts on simple local interaction rules, responding to its immediate surroundings without global knowledge of the collective’s objective. Understanding and predicting these roles (the proportion of evaders to followers, and how roles shift as conditions change) is therefore central to designing robust, adaptable coordination algorithms. By accounting for this behavioral diversity, robotic swarms and multi-agent systems can move more efficiently and resiliently.
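To make the two roles concrete, here is a minimal sketch of how a single agent might react to the transporter in one timestep. It assumes 2-D positions, a fixed sensing radius, and unit-speed moves straight toward (follower) or away from (evader) the transporter. The names `agent_step`, `sense_radius`, and `speed` are hypothetical and not taken from the paper.

```python
import math


def unit(dx, dy):
    """Return the unit vector along (dx, dy), or (0, 0) for a zero vector."""
    n = math.hypot(dx, dy)
    return (0.0, 0.0) if n == 0 else (dx / n, dy / n)


def agent_step(pos, transporter, kind, sense_radius=5.0, speed=1.0):
    """Return an agent's new position after one timestep (hypothetical model).

    Followers step toward the transporter, evaders step away from it, and
    agents farther than sense_radius from the transporter stay put.
    """
    if kind not in ("follower", "evader"):
        raise ValueError(f"unknown agent kind: {kind!r}")
    dx, dy = transporter[0] - pos[0], transporter[1] - pos[1]
    if math.hypot(dx, dy) > sense_radius:
        return pos
    ux, uy = unit(dx, dy)
    sign = 1.0 if kind == "follower" else -1.0
    return (pos[0] + sign * speed * ux, pos[1] + sign * speed * uy)


# Transporter 3 units east of an agent at the origin:
print(agent_step((0.0, 0.0), (3.0, 0.0), "follower"))  # (1.0, 0.0)
print(agent_step((0.0, 0.0), (3.0, 0.0), "evader"))    # (-1.0, 0.0)
```

The same transporter position produces opposite motions depending on the agent's role, which is why a single guidance mode cannot suit every group.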

Guiding the Swarm: Harnessing Herding and Leading Techniques

The Shepherding Algorithm guides agents toward a designated target through repulsive forces. Rather than directing each agent centrally, it relies on the repulsion between the transporter and nearby agents: the transporter positions itself on the far side of the agents from the target, so the push it exerts drives them toward the target. This indirect approach moves the group without explicit path planning for each agent, which makes it suitable for large-scale simulations and dynamic environments where centralized control is impractical or inefficient. The repulsive force is typically weighted by distance, so its influence is strongest at close range, and its strength is tuned to avoid overly aggressive maneuvering.
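A minimal sketch of the two ingredients described above: where the transporter stands, and the push it exerts. It assumes a single transporter, 2-D positions, and an inverse-distance repulsion of magnitude `strength / distance`. The function names, the `offset` parameter, and the exact force law are illustrative assumptions rather than the paper's formulation.

```python
import math


def herding_position(agent, target, offset=2.0):
    """Point `offset` units behind `agent`, on the side opposite `target`."""
    dx, dy = agent[0] - target[0], agent[1] - target[1]
    d = math.hypot(dx, dy)
    if d == 0:
        return agent  # agent already at the target
    return (agent[0] + offset * dx / d, agent[1] + offset * dy / d)


def repulsion(agent, transporter, strength=1.0):
    """Repulsive vector on `agent` pointing away from `transporter`.

    Magnitude is strength / distance, so the push is strongest up close.
    """
    dx, dy = agent[0] - transporter[0], agent[1] - transporter[1]
    d = math.hypot(dx, dy)
    if d == 0:
        return (0.0, 0.0)
    mag = strength / d
    return (mag * dx / d, mag * dy / d)


# Agent at the origin, target 10 units east.
goal = herding_position((0.0, 0.0), (10.0, 0.0), offset=2.0)
print(goal)                         # (-2.0, 0.0)
print(repulsion((0.0, 0.0), goal))  # (0.5, 0.0): pushes the agent toward the target
```

Standing behind the agent is what turns a purely repulsive force into motion toward the target.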

The Leading Algorithm instead applies attractive forces between the ‘Transporter’ and each ‘Agent’ in the population. As the transporter moves toward a specified goal or along a predetermined trajectory, this attraction pulls agents after it. The magnitude of the attractive force diminishes with distance, so agents closer to the transporter experience a stronger pull. The force magnitude must be calibrated carefully to prevent excessive acceleration or oscillation and to keep the group moving stably along the intended path. Unlike the repulsive forces of the Shepherding Algorithm, the Leading Algorithm guides agents directly by drawing them toward a defined location or route.
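A minimal sketch of leading under the same 2-D assumptions: the transporter steps toward the goal at a capped speed, and each follower feels an attraction of magnitude `strength / (1 + distance)`. That force law and the names `leading_step` and `attraction` are assumptions, chosen only because the force decays with distance as described and stays finite at zero separation.

```python
import math


def leading_step(transporter, goal, speed=1.0):
    """Move the transporter up to `speed` units straight toward `goal`."""
    dx, dy = goal[0] - transporter[0], goal[1] - transporter[1]
    d = math.hypot(dx, dy)
    if d <= speed:
        return goal  # close enough to arrive this step
    return (transporter[0] + speed * dx / d, transporter[1] + speed * dy / d)


def attraction(agent, transporter, strength=1.0):
    """Attractive vector on `agent` pointing toward `transporter`.

    Magnitude strength / (1 + distance): it diminishes with distance and
    stays bounded when the agent is right next to the transporter.
    """
    dx, dy = transporter[0] - agent[0], transporter[1] - agent[1]
    d = math.hypot(dx, dy)
    if d == 0:
        return (0.0, 0.0)
    mag = strength / (1.0 + d)
    return (mag * dx / d, mag * dy / d)


print(leading_step((0.0, 0.0), (10.0, 0.0)))  # (1.0, 0.0)
print(attraction((0.0, 0.0), (3.0, 0.0)))     # (0.25, 0.0)
```

Capping the transporter's speed and bounding the attraction are two simple levers for the calibration the paragraph mentions.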

Both algorithms act on the agent population through a single controlled component, the ‘Transporter’. The algorithms decide where the transporter moves; the agents respond to its position, with repulsion underlying Shepherding and attraction underlying Leading. The strength of each response depends on agent positions, the target location, and algorithm-specific parameters, and it translates into velocity changes that steer the population. Without the transporter, neither algorithm has any means of influencing the group.

Implementing the Shepherding Algorithm also requires agent-agent repulsion. Without it, agents can collide and group cohesion can break down as they converge on the target. This repulsion is typically modeled as a short-range force inversely proportional to the distance between agents, which effectively enforces a minimum separation. Adjusting its strength tunes the group's density and stability, balancing collision avoidance against keeping a tightly packed group moving toward the target.
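The inter-agent term can be sketched as a sum of short-range repulsions, each of magnitude `strength / distance` and active only within a cut-off distance. The cut-off `min_sep`, the default values, and the name `separation_force` are illustrative assumptions.

```python
import math


def separation_force(agent, neighbours, min_sep=1.0, strength=1.0):
    """Sum of short-range repulsive vectors acting on `agent`.

    Each neighbour closer than min_sep pushes the agent directly away from
    itself with magnitude strength / distance; neighbours at or beyond
    min_sep (or exactly coincident) contribute nothing.
    """
    fx = fy = 0.0
    for nx, ny in neighbours:
        dx, dy = agent[0] - nx, agent[1] - ny
        d = math.hypot(dx, dy)
        if 0 < d < min_sep:
            mag = strength / d
            fx += mag * dx / d
            fy += mag * dy / d
    return (fx, fy)


print(separation_force((0.0, 0.0), [(0.5, 0.0)]))              # (-2.0, 0.0)
print(separation_force((0.0, 0.0), [(0.5, 0.0), (-0.5, 0.0)]))  # (0.0, 0.0)
```

Raising `strength` or `min_sep` spreads the group out; lowering them packs it tighter at the cost of more near-collisions.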

A Dynamic Strategy: Observing the Mixed Transport Approach

The Mixed Transport Strategy dynamically allocates control between herding and leading to optimize group movement. In herding, the transporter guides agents from behind, which is needed when agents avoid it or require directional correction; in leading, the transporter moves ahead and agents follow voluntarily. The strategy assesses agent responses in real time, specifically proximity to the desired path and velocity alignment, to decide which behavior to use. It thus exploits the efficiency of leading when agents cooperate and the robustness of herding when intervention is necessary, yielding a more flexible and efficient transport mechanism than either technique alone.
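One simple way to assess an agent's response, sketched here as an assumption rather than the paper's criterion, is to compare its distance to the transporter before and after a step: an agent that closed the distance is treated as a follower, and one that opened it as an evader. The mode rule mirrors the slogan "lead when possible, herd when necessary", with unclassified agents defaulting to leading. That default, the tolerance `tol`, and both function names are hypothetical.

```python
import math


def classify_response(prev_pos, new_pos, transporter, tol=1e-9):
    """Label an agent by how its distance to the transporter changed in one step."""
    before = math.dist(prev_pos, transporter)
    after = math.dist(new_pos, transporter)
    if after < before - tol:
        return "follower"  # moved closer to the transporter
    if after > before + tol:
        return "evader"    # moved away from the transporter
    return "unknown"       # no measurable reaction yet


def choose_mode(label):
    """Herd only agents observed to evade; otherwise try leading first."""
    return "herd" if label == "evader" else "lead"


label = classify_response((0.0, 0.0), (-1.0, 0.0), transporter=(3.0, 0.0))
print(label, choose_mode(label))  # evader herd
```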

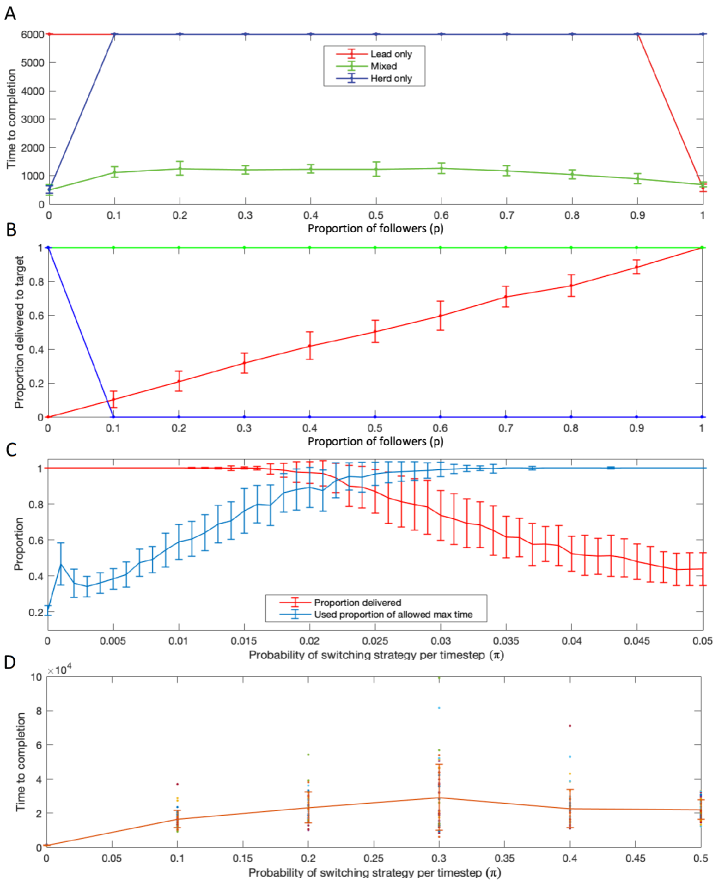

The efficacy of the Mixed Transport Strategy depends directly on the ‘Proportion of Followers’ in the agent group. A higher proportion of followers, meaning more agents inclined to move toward the transporter, makes leading the more efficient way to direct the group. A lower proportion, meaning more agents that avoid the transporter, calls for greater reliance on herding to maintain cohesion and prevent dispersal. The balance between leading and herding is therefore set by this proportion and must be adjusted in real time to maximize transport efficiency.
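At the group level, the same idea can be sketched by estimating the proportion of followers from observed labels and comparing it with a threshold. The labels `"follower"`, `"evader"`, and `"unknown"`, the threshold of 0.5, and the function names are all hypothetical choices for illustration, not values reported in the paper.

```python
def follower_proportion(labels):
    """Fraction of classified agents that are followers; "unknown" labels are skipped."""
    known = [lab for lab in labels if lab in ("follower", "evader")]
    if not known:
        return None  # nothing observed yet
    return sum(lab == "follower" for lab in known) / len(known)


def group_mode(labels, threshold=0.5):
    """Lead the group if enough agents follow, otherwise herd it."""
    p = follower_proportion(labels)
    if p is None:
        return "lead"  # no evidence yet: try leading first (assumption)
    return "lead" if p >= threshold else "herd"


labels = ["follower", "evader", "follower", "unknown"]
print(follower_proportion(labels), group_mode(labels))
```

In practice the threshold would have to be tuned, and a real controller might weight agents by distance rather than counting them equally.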

The ‘Strategy Switching Rate’ is the frequency with which the control algorithm alternates between herding and leading during transport. Tuning it is critical. A rate that is too high allows fast adaptation to changing agent dynamics but raises computational cost and can introduce instability and oscillations that prevent convergence on an efficient transport solution. A rate that is too low reduces computational load but may leave the algorithm trapped in suboptimal configurations and slow to respond to changes in agent behavior or environmental conditions. The ideal rate balances these factors and depends heavily on the characteristics of the agent population and the transport environment.
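One common way to keep a controller from switching too often is a minimum dwell time: a requested mode change is honored only after the current mode has been held for some number of timesteps. The sketch below is that generic technique, not a mechanism taken from the paper; the class name `ModeSwitcher` and the `min_dwell` parameter are hypothetical.

```python
class ModeSwitcher:
    """Limit how often the transporter changes mode via a minimum dwell time.

    A requested change is accepted only once the current mode has been held
    for at least `min_dwell` timesteps; otherwise the current mode is kept.
    """

    def __init__(self, initial="lead", min_dwell=50):
        self.mode = initial
        self.min_dwell = min_dwell
        self.steps_in_mode = 0

    def update(self, requested):
        """Advance one timestep and return the mode actually used."""
        self.steps_in_mode += 1
        if requested != self.mode and self.steps_in_mode >= self.min_dwell:
            self.mode = requested
            self.steps_in_mode = 0
        return self.mode


switcher = ModeSwitcher("lead", min_dwell=3)
print([switcher.update("herd") for _ in range(3)])  # ['lead', 'lead', 'herd']
```

A larger `min_dwell` lowers the effective switching rate and damps oscillation; a smaller one lets the controller react faster.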

The Collection-Driving Strategy is a component of the Shepherding Algorithm that improves agent collection by positioning the transporter deliberately. It alternates between two phases: collecting, in which the transporter moves behind agents that have strayed from the group to push them back, and driving, in which it moves behind the consolidated group to push it toward the target. Choosing the transporter's position proactively from the current distribution of agents reduces the need for reactive corrections, shortening collection time, particularly when agents are dispersed or form irregularly shaped clusters. The transporter's position is updated from real-time agent locations throughout the process.
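The collect/drive idea can be sketched as follows, assuming the formulation widely used in shepherding models: if the agent farthest from the group centroid lies outside a cohesion radius, the transporter targets a point behind that straggler (relative to the centroid); otherwise it targets a point behind the centroid (relative to the goal). The `cohesion_radius` and `offset` values and all function names are hypothetical, and the paper's exact rule may differ.

```python
import math


def centroid(points):
    """Mean position of a non-empty list of 2-D points."""
    xs, ys = zip(*points)
    return (sum(xs) / len(points), sum(ys) / len(points))


def behind(point, away_from, offset):
    """Point `offset` units beyond `point`, on the side opposite `away_from`."""
    dx, dy = point[0] - away_from[0], point[1] - away_from[1]
    d = math.hypot(dx, dy)
    if d == 0:
        return point
    return (point[0] + offset * dx / d, point[1] + offset * dy / d)


def collect_or_drive(agents, target, cohesion_radius=3.0, offset=2.0):
    """Return (phase, transporter_goal) for the current timestep."""
    c = centroid(agents)
    furthest = max(agents, key=lambda a: math.dist(a, c))
    if math.dist(furthest, c) > cohesion_radius:
        # Collect: get behind the straggler so it is pushed back toward the group.
        return "collect", behind(furthest, c, offset)
    # Drive: get behind the group so it is pushed toward the target.
    return "drive", behind(c, target, offset)


flock = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
print(collect_or_drive(flock, target=(10.5, 0.5)))  # ('drive', (-1.5, 0.5))
```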

From Simulation to System: Observing Performance and Implications

Simulations show that the ‘Mixed Transport Strategy’ delivered every agent to the target regardless of the ratio of followers to evaders. This robustness stems from the strategy’s adaptability: the transporter leads when agents follow and herds when they evade, so task completion does not hinge on any particular group composition. Performance does not degrade as the proportion of followers changes, which suggests a useful mechanism for coordinating multi-agent systems in complex environments and a way past the limitations of strategies that rely on a single guidance mode.

The strategy also remains efficient when agents change roles during transport. At moderate switching rates, up to [latex]\pi \approx 0.01[/latex], the system consistently completes the transport task within 6000 timesteps, even as agents switch between follower and evader behavior. Such predictable completion times matter for real-world applications that demand timely execution, and they offer a practical benchmark for deploying multi-agent systems where speed and reliability are paramount.

Performance holds up even under rapid role switching. At switching rates as high as [latex]\pi \approx 0.5[/latex], the mean time to complete the transport task remains around or below 20000 timesteps. Sustaining this performance despite frequent disruption of the group's behavior highlights the strategy's adaptability, and suggests it could suit robotic deployments where unpredictable conditions force dynamic adjustments without severely compromising mission efficiency.
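To see what such switching rates mean in practice, the sketch below assumes that π is the probability that each agent independently switches between follower and evader behavior in a given timestep. This reading of π, and the name `flip_roles`, are assumptions for illustration; the article does not spell out the exact definition.

```python
import random


def flip_roles(roles, pi, rng):
    """Return new roles where each agent swaps follower <-> evader with probability pi."""
    if not 0.0 <= pi <= 1.0:
        raise ValueError("pi must be a probability in [0, 1]")
    swap = {"follower": "evader", "evader": "follower"}
    return [swap[r] if rng.random() < pi else r for r in roles]


rng = random.Random(42)  # seeded for reproducibility
roles = ["follower"] * 50 + ["evader"] * 50
for _ in range(100):  # 100 timesteps at pi = 0.01
    roles = flip_roles(roles, 0.01, rng)
print(roles.count("follower"), "followers after 100 steps")
```

Under this reading, π ≈ 0.01 means roughly one switch per agent every hundred timesteps, while π ≈ 0.5 means about half the group changes behavior at every step.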

These results point toward more dependable and efficient multi-agent systems in collective robotics. By maintaining a 100% delivery rate across varying follower proportions and consistently completing tasks within reasonable timeframes, even under dynamic switching, the ‘Mixed Transport Strategy’ offers a foundation for real-world applications. Its adaptability may also prove valuable where agents behave unpredictably or become temporarily unresponsive. The findings thus support the development of robotic teams able to operate reliably in complex and unpredictable environments, with potential uses in automated logistics, coordinated exploration, and distributed sensing.

The principles demonstrated by this research extend beyond the transport problem, offering a flexible framework for a diverse range of collective tasks. Switching dynamically between leading and herding according to how agents respond, which proved effective in simulation, holds promise wherever adaptable group behavior is needed. Search and rescue, where robots must explore unknown environments collaboratively and cover ground efficiently, could benefit from this approach. So could environmental monitoring tasks such as area mapping, data collection, and anomaly detection, which require robust coordination among multiple robotic agents under changing conditions. This work therefore suggests a path toward more resilient and versatile multi-robot systems capable of operating in complex, real-world scenarios.

The study of collective transport in this work reveals a fascinating interplay between individual agent behavior and emergent group dynamics. This echoes Søren Kierkegaard’s observation: “Life can only be understood backwards; but it must be lived forwards.” The algorithm’s adaptive switching between leading and herding embodies this principle. Just as one understands life’s journey in retrospect, the system decides how to act next by observing how agents have just responded to it. This mirrors the paper’s core idea: robust collective transport requires a dynamic, history-informed approach to navigating complex systems.

Where Do We Go From Here?

The demonstrated adaptability between leading and herding strategies reveals a fundamental truth: effective collective transport isn’t about imposing a single solution, but recognizing when to nudge and when to direct. However, the current model operates within a simplified framework. Future iterations must confront the inevitable complexities of truly heterogeneous agent groups – differing perceptual ranges, communication bandwidths, and even motivational biases. A fascinating, if thorny, question emerges: can a system learn to identify and exploit these individual differences to optimize collective performance, or does homogeneity remain an unspoken ideal?

Furthermore, the present work largely sidesteps the issue of scalability. While effective with modest groups, the computational cost of dynamic strategy switching could become prohibitive as the number of agents increases. Perhaps the solution lies not in centralized decision-making, but in distributed algorithms that allow agents to negotiate roles and responsibilities autonomously, mirroring the emergent behaviors observed in biological systems. The pursuit of robust, scalable collective behavior necessitates a move beyond mere mimicry, toward a deeper understanding of the underlying principles governing self-organization.

Ultimately, the ‘shepherding problem’ is less about solving a technical challenge and more about acknowledging the inherent messiness of complex systems. The most promising avenues for future research lie not in seeking perfect control, but in designing systems that are resilient to uncertainty, adaptable to change, and capable of thriving in a world defined by its inherent unpredictability.

Original article: https://arxiv.org/pdf/2602.16750.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-22 04:39